Monte Carlo Tree Search: a Tutorial

Recommended publications

Development of Games for Users with Visual Impairment Czech Technical University in Prague Faculty of Electrical Engineering

Development of Games for Users with Visual Impairment. Czech Technical University in Prague, Faculty of Electrical Engineering. Dina Chernova, January 2017. Acknowledgement: I would first like to thank Bc. Honza Hadáček for his valuable advice. I am also very grateful to my supervisor Ing. Daniel Novák, Ph.D. and to all participants who were involved in testing my application for their precious time. I must express my profound gratitude to my loved ones for their support and continuous encouragement throughout my years of study. This accomplishment would not have been possible without them. Thank you. Declaration: I declare that I have developed this thesis on my own and that I have stated all the information sources in accordance with the methodological guideline of adhering to ethical principles during the preparation of university theses. In Prague, 09.01.2017. Abstract: This bachelor thesis deals with the analysis and implementation of a mobile application that allows visually impaired people to play chess on their smartphones. The application is controlled using special gestures, with a text-to-speech engine providing audio accompaniment. For the human-against-computer game mode I have used Stockfish, currently the best game engine. The application is developed for the Android mobile platform. Keywords: chess; visually impaired; Android.

Monte-Carlo Tree Search Solver

Monte-Carlo Tree Search Solver. Mark H.M. Winands1, Yngvi Björnsson2, and Jahn-Takeshi Saito1. 1 Games and AI Group, MICC, Universiteit Maastricht, Maastricht, The Netherlands. 2 School of Computer Science, Reykjavík University, Reykjavík, Iceland. Abstract. Recently, Monte-Carlo Tree Search (MCTS) has advanced the field of computer Go substantially. In this article we investigate the application of MCTS for the game Lines of Action (LOA). A new MCTS variant, called MCTS-Solver, has been designed to play narrow tactical lines better in sudden-death games such as LOA. The variant differs from the traditional MCTS in respect to backpropagation and selection strategy. It is able to prove the game-theoretical value of a position given sufficient time. Experiments show that a Monte-Carlo LOA program using MCTS-Solver defeats a program using MCTS by a winning score of 65%. Moreover, MCTS-Solver performs much better than a program using MCTS against several different versions of the world-class αβ program MIA. Thus, MCTS-Solver constitutes genuine progress in using simulation-based search approaches in sudden-death games, significantly improving upon MCTS-based programs. 1 Introduction. For decades αβ search has been the standard approach used by programs for playing two-person zero-sum games such as chess and checkers (and many others). Over the years many search enhancements have been proposed for this framework. However, in some games where it is difficult to construct an accurate positional evaluation function (e.g., Go) the αβ approach was hardly successful.

αβ-Based Play-outs in Monte-Carlo Tree Search

αβ-based Play-outs in Monte-Carlo Tree Search. Mark H.M. Winands, Yngvi Björnsson. Abstract: Monte-Carlo Tree Search (MCTS) is a recent paradigm for game-tree search, which gradually builds a game-tree in a best-first fashion based on the results of randomized simulation play-outs. The performance of such an approach is highly dependent on both the total number of simulation play-outs and their quality. The two metrics are, however, typically inversely correlated: improving the quality of the play-outs generally involves adding knowledge that requires extra computation, thus allowing fewer play-outs to be performed per time unit. The general practice in MCTS seems to be more towards using relatively knowledge-light play-out strategies for the benefit of getting additional simulations done. In this paper we show, for the game Lines of Action (LOA), that this is not necessarily the best strategy. The newest version of our simulation-based LOA program, MC-LOAαβ, uses a …

In the context of game playing, Monte-Carlo simulations were first used as a mechanism for dynamically evaluating the merits of leaf nodes of a traditional αβ-based search [10], [11], [12], but under the new paradigm MCTS has evolved into a full-fledged best-first search procedure that replaces traditional αβ-based search altogether. MCTS has in the past couple of years substantially advanced the state-of-the-art in several game domains where αβ-based search has had difficulties, in particular computer Go, but other domains include General Game Playing [13], Amazons [14] and Hex [15]. The right tradeoff between search and knowledge equally applies to MCTS.

IAJ 10-3 (2019)

InterAgency Journal, Vol. 10, No. 3 (2019). Arthur D. Simons Center for Interagency Cooperation, The Lewis and Clark Center, 100 Stimson Ave., Suite 1149, Fort Leavenworth, Kansas. About the Simons Center: The Arthur D. Simons Center for Interagency Cooperation is a major program of the Command and General Staff College Foundation, Inc. The Simons Center is committed to the development of military leaders with interagency operational skills and an interagency body of knowledge that facilitates broader and more effective cooperation and policy implementation. About the CGSC Foundation: The Command and General Staff College Foundation, Inc., was established on December 28, 2005 as a tax-exempt, non-profit educational foundation that provides resources and support to the U.S. Army Command and General Staff College in the development of tomorrow’s military leaders. The CGSC Foundation helps to advance the profession of military art and science by promoting the welfare and enhancing the prestigious educational programs of the CGSC. The CGSC Foundation supports the College’s many areas of focus by providing financial and research support for major programs such as the Simons Center, symposia, conferences, and lectures, as well as funding and organizing community outreach activities that help connect the American public to their Army. All Simons Center works are published by the “CGSC Foundation Press.” The CGSC Foundation is an equal opportunity provider. Features: In the beginning... Special Report by Robert Ulin; Military Neuro-Interventions: Solving the Right Problems for Ethical Outcomes, by Shannon E. …

Can Chess Ever Be Solved

Turkish Journal of Computer and Mathematics Education, Vol. 12 No. 2 (2021), 2814-2819. Research Article. Can Chess Ever Be Solved. Naveen Kumar1, Bhayaa Sharma2. 1,2Department of Mathematics, University Institute of Sciences, Chandigarh University, Gharuan, Mohali, Punjab-140413, India. Article History: Received: 11 January 2021; Accepted: 27 February 2021; Published online: 5 April 2021. Abstract: Data science and artificial intelligence have lately spread to almost every field, be it finance, education, entertainment, healthcare, astronomy, astrology, and many more; sports are no exception. With so much data, statistics, and analysis available in this field, and with everything being recorded, it has become easier for team selectors, broadcasters, audiences, sponsors, and most importantly the players themselves to prepare against various opponents. Analysis has improved over time with the evolution of AI, and with the insights available one can even make predictions. Moreover, players are now trained so that they can take the most feasible and rational decision in any given situation. Chess is one of those sports that depend on calculations, algorithms, analysis, and decisions. Wherever analysis is involved, techniques have steadily improved. Algorithms can be solved with the help of various software; does that imply that chess can be fully solved? In simple words, if both players play the best moves, must the game end in a draw, or does White win thanks to the first-move advantage?

SmartK: Efficient, Scalable and Winning Parallel MCTS

SmartK: Efficient, Scalable and Winning Parallel MCTS. Michael S. Davinroy, Shawn Pan, Bryce Wiedenbeck, and Tia Newhall, Swarthmore College, Swarthmore, PA 19081. ACM Reference Format: Michael S. Davinroy, Shawn Pan, Bryce Wiedenbeck, and Tia Newhall. 2019. SmartK: Efficient, Scalable and Winning Parallel MCTS. In SC ’19, November 17–22, 2019, Denver, CO. ACM, New York, NY, USA, 3 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn 1 INTRODUCTION. Monte Carlo Tree Search (MCTS) is a popular algorithm for game playing that has also been applied to problems as diverse as auction bidding [5], program analysis [9], neural network architecture search [16], and radiation therapy design [6]. All of these domains exhibit combinatorial search spaces where even moderate-sized problems can easily exhaust computational resources, motivating the need for parallel approaches. SmartK is our novel parallel MCTS algorithm that achieves high performance on large clusters, by adaptively partitioning the search space to efficiently utilize …

… processes. Each process constructs a distinct search tree in parallel, communicating only its best solutions at the end of a number of rollouts to pick a next action. This approach has low communication overhead, but significant duplication of effort across processes. Like root parallelization, our SmartK algorithm achieves low communication overhead by assigning independent MCTS tasks to processes. However, SmartK dramatically reduces search duplication by starting the parallel searches from many different nodes of the search tree. Its key idea is that it uses nodes’ weights in the selection phase to determine the number of processes (the amount of computation) to allocate to exploring a node, directing more computational effort to more promising searches. Our experiments with an MPI implementation of SmartK for playing Hex show that SmartK has a better win rate and scales better than other parallelization approaches.

Monte-Carlo Tree Search

Monte-Carlo Tree Search. DISSERTATION to obtain the degree of Doctor at the Universiteit Maastricht, on the authority of the Rector Magnificus, Prof. mr. G.P.M.F. Mols, in accordance with the decision of the Board of Deans, to be defended in public on Thursday 30 September 2010 at 14:00 by Guillaume Maurice Jean-Bernard Chaslot. Supervisor: Prof. dr. G. Weiss. Co-supervisors: Dr. M.H.M. Winands, Dr. B. Bouzy (Université Paris Descartes), Dr. ir. J.W.H.M. Uiterwijk. Members of the assessment committee: Prof. dr. ir. R.L.M. Peeters (chair), Prof. dr. M. Müller (University of Alberta), Prof. dr. H.J.M. Peters, Prof. dr. ir. J.A. La Poutré (Universiteit Utrecht), Prof. dr. ir. J.C. Scholtes. The research has been funded by the Netherlands Organisation for Scientific Research (NWO), in the framework of the project Go for Go, grant number 612.066.409. Dissertation Series No. 2010-41. The research reported in this thesis has been carried out under the auspices of SIKS, the Dutch Research School for Information and Knowledge Systems. ISBN 978-90-8559-099-6. Printed by Optima Grafische Communicatie, Rotterdam. Cover design and layout finalization by Steven de Jong, Maastricht. © 2010 G.M.J-B. Chaslot. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronically, mechanically, photocopying, recording or otherwise, without prior permission of the author. Preface: When I learnt the game of Go, I was intrigued by the contrast between the simplicity of its rules and the difficulty of building a strong Go program.

Machine Learning Explained

Learning Deep or not Deep… From MENACE to AlphaGo. JM Alliot. Deep Learning, the Simple Version.
What is it? A meeting of two worlds:
• Learning
• By reinforcement: the result can be evaluated and used to improve the program
• Supervised: a training database is available, case by case
• Unsupervised: classification algorithms (k-means, …), …
• The neurosciences
Reinforcement learning
• Turing:
• “Instead of trying to produce a program to simulate the adult mind, why not rather try to produce one which simulates the child’s? If this were then subjected to an appropriate course of education one would obtain the adult brain. Presumably the child brain is something like a notebook as one buys it from the stationers. Rather little mechanism, and lots of blank sheets.”
• “The use of punishments and rewards can at best be a part of the teaching process. Roughly speaking, if the teacher has no other means of communicating to the pupil, the amount of information which can reach him does not exceed the total number of rewards and punishments applied. By the time a child has learnt to repeat “Casabianca” he would probably feel very sore indeed, if the text could only be discovered by a “Twenty Questions” technique, every “NO” taking the form of a blow.”
Reinforcement learning
• Arthur Samuel (1959): “Some Studies in Machine Learning Using the Game of Checkers”
• Memoization
• Automatic parameter adjustment by regression
• Donald Michie (1962): MENACE

CS234 Notes - Lecture 14 Model Based RL, Monte-Carlo Tree Search

CS234 Notes - Lecture 14: Model Based RL, Monte-Carlo Tree Search. Anchit Gupta, Emma Brunskill. June 14, 2018. 1 Introduction. In this lecture we will learn about model-based RL and simulation-based tree search methods. So far we have seen methods which attempt to learn either a value function or a policy from experience. In contrast, model-based approaches first learn a model of the world from experience and then use this for planning and acting. Model-based approaches have been shown to have better sample efficiency and faster convergence in certain settings. We will also have a look at MCTS and its variants, which can be used for planning given a model. MCTS was one of the main ideas behind the success of AlphaGo. [Figure 1: Relationships among learning, planning, and acting] 2 Model Learning. By model we mean a representation of an MDP $\langle S, A, R, T, \gamma \rangle$ parametrized by $\eta$. In the model learning regime we assume that the state and action spaces $S, A$ are known, and typically we also assume conditional independence between state transitions and rewards, i.e., $P[s_{t+1}, r_{t+1} \mid s_t, a_t] = P[s_{t+1} \mid s_t, a_t]\, P[r_{t+1} \mid s_t, a_t]$. Hence learning a model consists of two main parts: the reward function $R(\cdot \mid s, a)$ and the transition distribution $P(\cdot \mid s, a)$. Given a set of real trajectories $\{S_t^k, A_t^k, R_t^k, \ldots, S_T^k\}_{k=1}^{K}$, model learning can be posed as a supervised learning problem. Learning the reward function $R(s, a)$ is a regression problem, whereas learning the transition function $P(s' \mid s, a)$ is a density estimation problem.
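The supervised view of model learning in the excerpt above can be illustrated with a minimal tabular sketch. It is not taken from the CS234 notes: the function name `learn_tabular_model`, the `(s, a, r, s_next)` tuple layout, and the toy trajectories are assumptions made for illustration. In the tabular case, the least-squares regression estimate of the reward is simply the mean observed reward for each state-action pair, and the maximum-likelihood density estimate of the transition distribution is the empirical frequency of each next state.

```python
from collections import defaultdict


def learn_tabular_model(trajectories):
    """Fit a table-lookup model from trajectories of (s, a, r, s_next) tuples.

    Returns (transition, reward), where transition[(s, a)][s_next] is the
    empirical estimate of P(s' | s, a) and reward[(s, a)] is the mean
    observed reward. The tuple layout is a hypothetical convention.
    """
    next_counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    visits = defaultdict(int)

    for trajectory in trajectories:
        for s, a, r, s_next in trajectory:
            next_counts[(s, a)][s_next] += 1
            reward_sum[(s, a)] += r
            visits[(s, a)] += 1

    # Density estimation: normalise next-state counts into probabilities.
    transition = {
        sa: {s2: c / visits[sa] for s2, c in counts.items()}
        for sa, counts in next_counts.items()
    }
    # Regression: the mean reward minimises squared error per (s, a).
    reward = {sa: reward_sum[sa] / visits[sa] for sa in visits}
    return transition, reward


# Toy data: two short trajectories over states "A" and "B" with one action.
trajectories = [
    [("A", "go", 0.0, "B"), ("B", "go", 1.0, "A")],
    [("A", "go", 0.0, "A"), ("A", "go", 2.0, "B")],
]
transition, reward = learn_tabular_model(trajectories)
print(transition[("A", "go")], reward[("A", "go")])
```

A planner such as MCTS could then sample next states and rewards from these tables instead of the real environment; with large or continuous state spaces the tables would be replaced by parametric function approximators.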

The SSDF Chess Engine Rating List, 2019-02

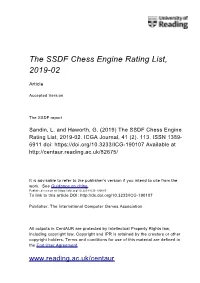

The SSDF Chess Engine Rating List, 2019-02. Article, Accepted Version. Sandin, L. and Haworth, G. (2019) The SSDF Chess Engine Rating List, 2019-02. ICGA Journal, 41 (2). 113. ISSN 1389-6911. doi: https://doi.org/10.3233/ICG-190107. Available at http://centaur.reading.ac.uk/82675/. Published version at: https://doi.org/10.3233/ICG-190085. Publisher: The International Computer Games Association.

THE SSDF RATING LIST 2019-02-28: 148673 games played by 377 computers

| # | Engine | Rating | + | - | Games | Won | Oppo |
|---|--------|--------|---|---|-------|-----|------|
| 1 | Stockfish 9 x64 1800X 3.6 GHz | 3494 | 32 | -30 | 642 | 74% | 3308 |
| 2 | Komodo 12.3 x64 1800X 3.6 GHz | 3456 | 30 | -28 | 640 | 68% | 3321 |
| 3 | Stockfish 9 x64 Q6600 2.4 GHz | 3446 | 50 | -48 | 200 | 57% | 3396 |
| 4 | Stockfish 8 x64 1800X 3.6 GHz | 3432 | 26 | -24 | 1059 | 77% | 3217 |
| 5 | Stockfish 8 x64 Q6600 2.4 GHz | 3418 | 38 | -35 | 440 | 72% | 3251 |
| 6 | Komodo 11.01 x64 1800X 3.6 GHz | 3397 | 23 | -22 | 1134 | 72% | 3229 |
| 7 | Deep Shredder 13 x64 1800X 3.6 GHz | 3360 | 25 | -24 | 830 | 66% | 3246 |
| 8 | Booot 6.3.1 x64 1800X 3.6 GHz | 3352 | 29 | -29 | 560 | 54% | 3319 |
| 9 | Komodo 9.1 … | | | | | | |

Hearing on China's Military Reforms and Modernization: Implications for the United States Hearing Before the U.S.-China Economic

HEARING ON CHINA'S MILITARY REFORMS AND MODERNIZATION: IMPLICATIONS FOR THE UNITED STATES. HEARING BEFORE THE U.S.-CHINA ECONOMIC AND SECURITY REVIEW COMMISSION, ONE HUNDRED FIFTEENTH CONGRESS, SECOND SESSION, THURSDAY, FEBRUARY 15, 2018. Printed for use of the United States-China Economic and Security Review Commission. Available via the World Wide Web: www.uscc.gov. UNITED STATES-CHINA ECONOMIC AND SECURITY REVIEW COMMISSION, WASHINGTON: 2018. U.S.-CHINA ECONOMIC AND SECURITY REVIEW COMMISSION: ROBIN CLEVELAND, CHAIRMAN; CAROLYN BARTHOLOMEW, VICE CHAIRMAN. Commissioners: HON. CARTE P. GOODWIN, HON. JAMES TALENT, DR. GLENN HUBBARD, DR. KATHERINE C. TOBIN, HON. DENNIS C. SHEA, MICHAEL R. WESSEL, HON. JONATHAN N. STIVERS, DR. LARRY M. WORTZEL. The Commission was created on October 30, 2000 by the Floyd D. Spence National Defense Authorization Act for 2001 § 1238, Public Law No. 106-398, 114 STAT. 1654A-334 (2000) (codified at 22 U.S.C. § 7002 (2001), as amended by the Treasury and General Government Appropriations Act for 2002 § 645 (regarding employment status of staff) & § 648 (regarding changing annual report due date from March to June), Public Law No. 107-67, 115 STAT. 514 (Nov. 12, 2001); as amended by Division P of the “Consolidated Appropriations Resolution, 2003,” Pub L. No. 108-7 (Feb. 20, 2003) (regarding Commission name change, terms of Commissioners, and responsibilities of the Commission); as amended by Public Law No. 109-108 (H.R. 2862) (Nov. 22, 2005) (regarding responsibilities of Commission and applicability of FACA); as amended by Division J of the “Consolidated Appropriations Act, 2008,” Public Law No. 110-161 (December 26, 2007) (regarding responsibilities of the Commission, and changing the Annual Report due date from June to December); as amended by the Carl Levin and Howard P.