An Empirical Analysis of the Docker Container Ecosystem on GitHub

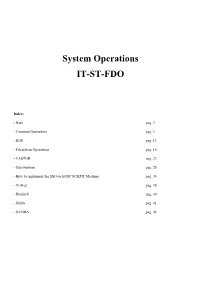
