The Seven Pillars of Operational Wisdom: Selected Topics in Blackboard System Administration

Recommended publications

Introduction Use Runit with Traditional Init (Sysvinit)

Runit. SlackDocs, https://docs.slackware.com/howtos:slackware_admin:runit (last update: 2020/05/06 08:08 (UTC); page dated 2021/07/26 19:10 (UTC))

Introduction

runit is a cross-platform UNIX init scheme with service supervision: a replacement for sysvinit and for the other init schemes and supervision tools that are used with the traditional init. runit is compatible with djb's daemontools. In Unix-based computer operating systems, init (short for initialization) is the first process started during booting of the computer system. Init is a daemon process that continues running until the system is shut down. Slackware comes with its own legacy init (/sbin/init) from the sysvinit package, which used to be included in almost all other major Linux distributions. The init daemon (or its replacement) is characterised by process ID 1 (PID 1). To read about the benefits of runit, see http://smarden.org/runit/benefits.html

* Unless otherwise stated, all commands in this article are to be run by root.

Use runit with traditional init (sysvinit)

runit is not provided by Slackware, but a SlackBuild is maintained on https://slackbuilds.org/. It does not have any dependencies. As we do not yet want to replace init with runit, run the SlackBuild with CONFIG=no:

    CONFIG=no ./runit.SlackBuild

Then install the resulting package and proceed as follows:

    mkdir /etc/runit/ /service/
    cp -a /usr/doc/runit-*/etc/2 /etc/runit/
    /sbin/runsvdir-start &

Starting via rc.local

For a typical Slackware-style service, you can edit the /etc/rc.d/rc.local file:

    if [ -x /sbin/runsvdir-start ]; then
      /sbin/runsvdir-start &
    fi

and then write /etc/rc.d/rc.local_shutdown:

    #!/bin/sh
    # The leading "x" keeps the test below valid when pidof prints nothing.
    RUNIT=x$( /sbin/pidof runsvdir )
    if [ "$RUNIT" != x ]; then
      # Strip the "x" guard again so kill receives only the PID(s).
      kill ${RUNIT#x}
    fi

Then give rc.local_shutdown executable permission:

    chmod +x /etc/rc.d/rc.local_shutdown

and reboot.

Starting via inittab (supervised)

Remove the entries in /etc/rc.d/rc.local and /etc/rc.d/rc.local_shutdown described above.

Systemd – Easy As 1, 2, 3

Systemd – Easy as 1, 2, 3. Ben Breard, RHCA Solutions Architect, Red Hat [email protected]
Agenda ● Systemd functionality ● Coming to terms ● Learning the basics ● More advanced topics ● Learning the journal ● Available resources
Systemd is more than a SysVinit replacement
Systemd is a system and service manager
Systemd Overview ● Controls “units” rather than just daemons ● Handles dependency between units. ● Tracks processes with service information ● Services are owned by a cgroup. ● Simple to configure “SLAs” based on CPU, Memory, and IO. ● Properly kill daemons ● Minimal boot times ● Debuggability – no early boot messages are lost ● Easy to learn and backwards compatible.
Closer look at Units
Systemd - Units ● Naming convention is: name.type ● httpd.service, sshd.socket, or dev-hugepages.mount ● Service – Describe a daemon's type, execution, environment, and how it's monitored. ● Socket – Endpoint for interprocess communication. File, network, or Unix sockets. ● Target – Logical grouping of units. Replacement for runlevels. ● Device – Automatically created by the kernel. Can be provided to services as dependents. ● Mounts, automounts, swap – Monitor the mounting/unmounting of file systems.
Systemd – Units Continued ● Snapshots – save the state of units – useful for testing ● Timers – Timer-based activation ● Paths – Uses inotify to monitor a path ● Slices – For resource management. ● system.slice – services started by systemd ● user.slice – user processes ● machine.slice – VMs or containers registered with systemd
Systemd – Dependency Resolution ● Example: ● Wait for block device ● Check file system for device ● Mount file system ● nfs-lock.service: ● Requires=rpcbind.service network.target ● After=network.target named.service rpcbind.service ● Before=remote-fs-pre.target
That's all great .......but
Replace Init scripts!? Are you crazy?!
We're not crazy, I promise ● SysVinit had a good run, but leaves a lot to be desired.

Cisco Virtualized Infrastructure Manager Installation Guide, 2.4.9

Cisco Virtualized Infrastructure Manager Installation Guide, 2.4.9 First Published: 2019-01-09 Last Modified: 2019-05-20 Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS" WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

Slides for the S6 Lightning Talk

The s6 supervision suite Laurent Bercot, 2017 What is an init system ? - “init” is vague terminology. “init wars” happened because nobody had a clear vision on what an init system even is or should be. - The 4 elements of an init system: /sbin/init, pid 1, process supervision, service management. - Not necessarily in the same process. Definition: process supervision A long-lived process (daemon) is supervised when it’s spawned by the supervision tree, a set of stable, long-lived processes started at boot time by pid 1. (Often just pid 1.) Supervision is a good pattern: the service is stable and launched in a reproducible env. Supervision only applies to daemons. Service management: definition - Boot time: bring all services up - Shutdown time: bring all services down - More generally: change services’ states Services can be oneshots (short-lived programs with side effects) or longruns (daemons). They have dependencies, which the service manager should enforce. What features do “init”s offer ? - Integrated init systems (systemd, launchd, upstart): “the big guys”. All four elements in one package, plus out-of-scope stuff. - sysvinit, BSD init: /sbin/init, pid 1, supervision (/etc/inittab, /etc/gettys). Service manager not included: sysv-rc, /etc/rc - OpenRC: service manager. - Epoch: similar to sysvinit + sysv-rc The “daemontools family” - /etc/inittab supervision is impractical; nobody uses it for anything else than gettys. - daemontools (DJB, 1998): the first project offering flexible process supervision. Realistic to supervise all daemons with it. - daemontools-encore, runit, perp, s6: supervision suites. - nosh: suite of tools similar to s6, in C++ Supervision suites are not enough - Only ¼ of an init system. -

Continuous Integration with Jenkins

Automated Deployment … of Debian & Ubuntu Michael Prokop About Me Debian Developer Project lead of Grml.org Founder of Grml-Forensic.org Involved in FAI, initramfs-tools, etc. Member in Debian Forensic Team Author of Book „Open Source Projektmanagement“ IT Consultant Disclaimers Deployment focuses on Linux Several tools mentioned, but there exist even more :) We'll cover some sections in more detail than others There's no one-size-fits-all solution – identify what works for you Systems Management Provisioning / Documentation Bootstrapping Infrastructure Orchestration / Development DevOps Automation Visualization/Trends Configuration / Metrics + Logs Management Monitoring + Cloud Service Updates Deployment Systems Management Remote Access ipmi, HP iLO, IBM RSA,... Firmware Management Vendor Tools Provisioning / Bootstrapping F(ully) A(utomatic) I(nstallation) Debian, Ubuntu, CentOS + Scientific Linux http://fai-project.org/ Juju Ubuntu “Charms” https://juju.ubuntu.com/ grml-debootstrap netscript=http://example.org/netscript.sh http://grml.org/ d-i preseeding auto url=http://debian.org/releases/\ squeeze/example-preseed.txt http://wiki.debian.org/DebianInstaller/Preseed Kickstart Cobbler Foreman AutoYaST, openQRM, Spacewalk,... Orchestration / Automation Fabric (Python) % cat fabfile.py from fabric.api import run def host_type(): run('uname -s') % fab -H host1,host2,host3 host_type Capistrano (Ruby) % cat Capfile role :hosts, "host1", "host2", "host3" task :host_type, :roles => :hosts do run "uname -s" end % cap host_type Rundeck apt-dater % cat .config/apt-dater/hosts.conf [example.org] [email protected];mika@ mail.example.org;... ControlTier, Func, MCollective,... ClusterSSH, dsh, TakTuk,... Cobbler, Foreman, openQRM, Spacewalk,... Configuration Management Puppet Environments (production/staging/development)

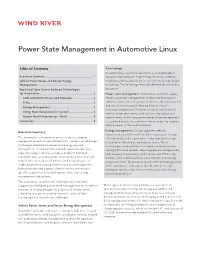

Power State Management in Automotive Linux

Power State Management in Automotive Linux
Table of Contents: Executive Summary; Vehicle Power States and Device Energy Management; Key Linux/Open Source Software Technologies for Automotive; CAN and MOST Drivers and Protocols; D-Bus; Energy Management; Initng: Next-Generation Init System; System Health Monitoring – Monit; Conclusion.
Terminology: As automotive, consumer electronics, and embedded software and hardware engineering intersect, technical traditions and vocabulary for in-car systems design begin to overlap. The following terms are defined for use in this document.
Power state management: Automakers and their supply chains use power management to describe the state of vehicles and in-vehicle systems relative to their policies for and use of electric power flowing from a vehicle’s alternator and battery. To minimize confusion between familiar automotive terms and current embedded and mobile terms, in this document power state management is used to describe the software infrastructure to support vehicle power states and transitions. Energy

27Th Large Installation System Administration Conference (LISA '13)

conference proceedings Proceedings of the 27th Large Installation System Administration Conference (LISA ’13) Washington, D.C., USA November 3–8, 2013 Sponsored by In cooperation with LOPSA Thanks to Our LISA ’13 Sponsors Thanks to Our USENIX and LISA SIG Supporters Gold Sponsors USENIX Patrons Google InfoSys Microsoft Research NetApp VMware USENIX Benefactors Akamai EMC Hewlett-Packard Linux Journal Linux Pro Magazine Puppet Labs Silver Sponsors USENIX and LISA SIG Partners Cambridge Computer Google USENIX Partners Bronze Sponsors Meraki Nutanix Media Sponsors and Industry Partners ACM Queue IEEE Security & Privacy LXer ADMIN IEEE Software No Starch Press CiSE InfoSec News O’Reilly Media Computer IT/Dev Connections Open Source Data Center Conference Distributed Management Task Force IT Professional (OSDC) (DMTF) Linux Foundation Server Fault Free Software Magazine Linux Journal The Data Center Journal HPCwire Linux Pro Magazine Userfriendly.org IEEE Pervasive © 2013 by The USENIX Association All Rights Reserved This volume is published as a collective work. Rights to individual papers remain with the author or the author’s employer. Permission is granted for the noncommercial reproduction of the complete work for educational or research purposes. Permission is granted to print, primarily for one person’s exclusive use, a single copy of these Proceedings. USENIX acknowledges all trademarks herein. ISBN 978-1-931971-05-8 USENIX Association Conference Organizers Program Co-Chairs David Nalley, Apache Cloudstack Narayan Desai, Argonne National Laboratory Adele Shakal, Metacloud, Inc.

Your Init; Your Choice

Your Computer; Your Init; Your Choice. By Steve Litt. Version 20150108_1348. Copyright © 2015 by Steve Litt. Creative Commons Attribution-NoDerivatives 4.0 International License http://creativecommons.org/licenses/by-nd/4.0/legalcode Available online at http://www.troubleshooters.com/linux/presentations/golug_inits/golug_inits.pdf NO WARRANTY, use at your own risk. (26 slides)
System Overview ● Kernel runs one program, init. ● Everything else run directly or indirectly by init.
Many Different Init Systems ● Epoch ● nosh ● OpenRC ● perp ● RichFelker ● runit ● s6 ● systemd ● sysvinit ● Upstart ● uselessd ● Many more ● There's an init for every situation ● You can make your own
Full vs Partial ● Kernel->full-init at PID1->daemons – Systemd, sysvinit, runit, Epoch, Upstart, etc. ● Kernel->PID1->partial-init->daemons – OpenRC, daemontools, daemontools-encore, etc.
Many Features ● Socket Activation ● Parallel starting ● Event controlled ● Sequential starting ● Daemontools-like ● Numeric ordering ● Simplicity ● Dependency ordering ● Descriptive config ● Work with sysvinit scripts ● Script config ● OS toolkit ● Forget features ● Look for benefits that fit your priorities and situation
Many Routes to Benefits ● Within and outside of init ● With or without sockets ● With or without packaging ● Cutting edge or oldschool
Bogus Characterizations ● ___ is a toy. – What does that even mean? ● ___ is not ready for prime time.

The Qmail Handbook by Dave Sill ISBN:1893115402 Apress 2002 (492 Pages)

< Free Open Study > The qmail Handbook by Dave Sill ISBN:1893115402 Apress 2002 (492 pages) This guide begins with a discussion of qmail's history, architecture and features, and then goes into a thorough investigation of the installation and configuration process.
Table of Contents
The qmail Handbook
Introduction
Chapter 1 - Introducing qmail
Chapter 2 - Installing qmail
Chapter 3 - Configuring qmail: The Basics
Chapter 4 - Using qmail
Chapter 5 - Managing qmail
Chapter 6 - Troubleshooting qmail
Chapter 7 - Configuring qmail: Advanced Options
Chapter 8 - Controlling Junk Mail
Chapter 9 - Managing Mailing Lists
Chapter 10 - Serving Mailboxes
Chapter 11 - Hosting Virtual Domain and Users
Chapter 12 - Understanding Advanced Topics
Appendix A - How qmail Works
Appendix B - Related Packages
Appendix C - How Internet Mail Works
Appendix D - qmail Features
Appendix E - Error Messages
Appendix F - Gotchas
Index
List of Figures
List of Tables
List of Listings
Back Cover
• Provides thorough instruction for installing, configuring, and optimizing qmail
• Includes coverage of secure networking, troubleshooting issues, and mailing list administration
• Covers what system administrators want to know by concentrating on qmail issues relevant to daily operation
• Includes instructions on how to filter spam before it reaches the client
The qmail Handbook will guide system and mail administrators of all skill levels through installing, configuring, and maintaining the qmail server.

Supervisor a Process Control System

Supervisor A Process Control System Sergej Kurakin Need for Long Running Scripts under Linux ● Job Queues in PHP (or any other language) ● Application Servers in PHP (or any other language) ● NodeJS Applications ● Selenium WebDriver + Xvfb ● You name it Solutions ● Self-Made Daemons ● SysV / systemd / launchd ● Screen or tmux ● nohup ● Five star crons ● Daemontools ● Might be more possible solutions ● Supervisor ● Docker Needed options ● Automatic start ● Automatic restart ● Execute under some user account ● Logs (stdout and stderr) ● Easy to use Self-Made Daemons ● Wrote once by myself ● I will never do it twice ● Third-party is not always good or maintainable ● Lots of debugging ● All features must be implemented by yourself ● Depends on SysV/launchd/systemd Screen / tmux or nohup ● No automatic start ● No automatic restart ● Logs... daemontools ● Old (but not obsolete) ● Does not work under some virtualizations ● Might be hard to configure Five star cron ● Still popular and usable ● Perfect for job queues (in some situations) Docker This topic deserves a separate talk from someone who has good experience with Docker in production environment. Supervisor! Supervisor? Supervisor is a client/server system that allows its users to monitor and control a number of processes on UNIX-like operating systems. Features ● Simple - INI-style files ● Centralized - one place to manage ● Efficient - fork, don’t daemonize ● Extensible - events and XML-RPC interface ● Compatible - Linux, Mac OS X, Solaris and FreeBSD ● Proven Components supervisord -

BSD Magazine

High Performance, High Density Servers for Data Center, Virtualization, & HPC. High-Density iXsystems Servers powered by the Intel® Xeon® Processor E5-2600 Family and Intel® C600 series chipset can pack up to 768GB of RAM into 1U of rack space or up to 8 processors - with up to 128 threads - in 2U. On-board 10 Gigabit Ethernet and Infiniband for Greater Throughput in less Rack Space. Servers from iXsystems based on the Intel® Xeon® Processor E5-2600 Family feature high-throughput connections on the motherboard, saving critical expansion space. The Intel® C600 Series chipset supports up to 384GB of RAM per processor, allowing performance in a single server to reach new heights. This ensures that you’re not paying for more than you need to achieve the performance you want. IXR-1204+10G: 10GbE On-Board. The iXR-1204 +10G features dual onboard 10GigE + dual onboard 1GigE network controllers, up to 768GB of RAM and dual Intel® Xeon® Processors E5-2600 Family, freeing up critical expansion card space for application-specific hardware. The uncompromised performance and flexibility of the iXR-1204 +10G makes it suitable for clustering, high-traffic webservers, virtualization, and cloud computing applications - anywhere you need the most resources available. MODEL: iXR-22X4IB. For even greater performance density, the iXR-22X4IB squeezes four server nodes into two units of rack space, each with dual Intel® Xeon® Processors E5-2600 Family, up to 256GB of RAM, and an on-board Mellanox® ConnectX QDR 40Gbp/s Infiniband w/QSFP Connector. The iXR-22X4IB is perfect for high-powered computing, virtualization, or business intelligence applications that require the computing power of the Intel® Xeon® Processor E5-2600 Family and the high throughput of Infiniband. 768GB of RAM in 1U. http://www.iXsystems.com/e5

Company Profile

CORPORATE PROFILE 2021 www.met-technologies.com EXPERIENCE PERFECTION AND EXCELLENCE
KNOW US We are a leading software development company with a 360-degree approach to delivering the latest, high-quality IT solutions. MET Technologies Pvt. Ltd. is a global IT solutions, BPO & KPO service provider, widely recognized by clients all over the world as a one-stop solution serving businesses of diverse domains and scales. We focus on implementing proper software development techniques and efficient customer support to achieve the finest results with foreseeable growth outcomes. A standout amongst the most proficient software development organizations, we offer the most cutting-edge IT solutions for you.
OUR MISSION, VISION & PHILOSOPHY
MISSION To deliver bespoke solutions that meet clients' needs within the estimated timeline.
VISION We aim to become a globally trusted IT/ITES services provider for our clients.
PHILOSOPHY Strive only for excellence in all fields of development, design & delivery.
ABOUT MET Technologies Pvt Ltd is a global web development and IT solutions service provider that has focused on providing innovative solutions for the past 11 years. We aim to deliver technology-based business solutions that can fulfil the strategic requirements of our clients. At MET Technologies, we provide the best quality business outsourcing solutions to international clients. MET believes in continuous improvement to achieve greater client satisfaction. Our workforce continues to render quality processes to build trust with our clients.
FITSER - MET’S GLOBAL IT/ITES UNIT FITSER is the Global Software Development Unit of MET, and in just a short span of time it has become the one-stop solution for all needs of our clients globally.