Active Techniques for Revealing and Analyzing the Security of Hidden Servers

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


A Security Analysis of Voatz, the First Internet Voting Application Used in U.S

The Ballot is Busted Before the Blockchain: A Security Analysis of Voatz, the First Internet Voting Application Used in U.S. Federal Elections. Michael A. Specter, James Koppel, Daniel Weitzner (MIT). Abstract: In the 2018 midterm elections, West Virginia became the first state in the U.S. to allow select voters to cast their ballot on a mobile phone via a proprietary app called “Voatz.” Although there is no public formal description of Voatz’s security model, the company claims that election security and integrity are maintained through the use of a permissioned blockchain, biometrics, a mixnet, and hardware-backed key storage modules on the user’s device. In this work, we present the first public security analysis of Voatz, based on a reverse engineering of their Android application and the minimal […]

[…] The company has recently closed a $7-million series A [22], and is on track to be used in the 2020 Primaries. In this paper, we present the first public security review of Voatz. We find that Voatz is vulnerable to a number of attacks that could violate election integrity (summary in Table 1). For example, we find that an attacker with root access to a voter’s device can easily evade the system’s defenses (§5.1.1), learn the user’s choices (even after the event is over), and alter the user’s vote (§5.1). We further find that their network protocol can leak details of the user’s vote (§5.3), and, surprisingly, that the system’s use of the blockchain is unlikely to protect against server-side attacks (§5.2).

Poster: Introducing Massbrowser: a Censorship Circumvention System Run by the Masses

Poster: Introducing MassBrowser: A Censorship Circumvention System Run by the Masses. Milad Nasr∗, Anonymous∗, and Amir Houmansadr, University of Massachusetts Amherst (∗Equal contribution). Abstract: We will present a new censorship circumvention system, currently being developed in our group. The new system is called MassBrowser, and combines several techniques from state-of-the-art censorship studies to design a hard-to-block, practical censorship circumvention system. MassBrowser is a one-hop proxy system where the proxies are volunteer Internet users in the free world. The power of MassBrowser comes from the large number of volunteer proxies who frequently change their IP addresses as the volunteer users move to different networks. To get a large number of volunteer proxies, we provide the volunteers the control over how their computers are used by the censored users. Particularly, the volunteer users can decide what websites they will proxy for censored users, and how much bandwidth they will allocate.

[…]side the censorship regions, which relay the Internet traffic of the censored users. This includes systems like Tor, VPNs, Psiphon, etc. Unfortunately, such circumvention systems are easily blocked by the censors by enumerating their limited set of proxy server IP addresses [14]. (2) Costly to operate: To resist proxy blocking by the censors, recent circumvention systems have started to deploy the proxies on shared-IP platforms such as CDNs, App Engines, and Cloud Storage, a technique broadly referred to as domain fronting [3]. This mechanism, however, is prohibitively expensive [11] to operate for large scales of users. (3) Poor QoS: Proxy-based circumvention systems like Tor and its variants suffer from low quality of service (e.g., high latencies and low […]

Threat Modeling and Circumvention of Internet Censorship by David Fifield

Threat modeling and circumvention of Internet censorship. By David Fifield. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor J.D. Tygar, Chair; Professor Deirdre Mulligan; Professor Vern Paxson. Fall 2017. Abstract: Research on Internet censorship is hampered by poor models of censor behavior. Censor models guide the development of circumvention systems, so it is important to get them right. A censor model should be understood not just as a set of capabilities, such as the ability to monitor network traffic, but as a set of priorities constrained by resource limitations. My research addresses the twin themes of modeling and circumvention. With a grounding in empirical research, I build up an abstract model of the circumvention problem and examine how to adapt it to concrete censorship challenges. I describe the results of experiments on censors that probe their strengths and weaknesses; specifically, on the subject of active probing to discover proxy servers, and on delays in their reaction to changes in circumvention. I present two circumvention designs: domain fronting, which derives its resistance to blocking from the censor's reluctance to block other useful services; and Snowflake, based on quickly changing peer-to-peer proxy servers. I hope to change the perception that the circumvention problem is a cat-and-mouse game that affords only incremental and temporary advancements.

In 2017, Broad Federal Search Warrants, As Well As

A PUBLICATION OF THE SILHA CENTER FOR THE STUDY OF MEDIA ETHICS AND LAW | FALL 2017. Federal Search Warrants and Nondisclosure Orders Lead to Legal Action; DOJ Changes Gag Order Practices. In 2017, broad federal search warrants, as well as nondisclosure orders preventing technology and social media companies from informing their customers that their information had been handed over to the government, led to legal action and raised concerns from observers. However, the U.S. Department of Justice (DOJ) also changed its rules on the gag orders, leading a large technology company to drop its lawsuit against the agency regarding the orders. In 2017, the DOJ filed two search warrants seeking extensive information from web hosting company DreamHost and from Facebook in connection to violent protests in Washington, D.C. during President Donald Trump’s January 20 inauguration festivities. On Aug. […]

[…] from disclosing the fact that it had received such a request. On Oct. 12, 2017, the Floyd Abrams Institute for Freedom of Expression at Yale Law School and 20 First Amendment Scholars, including Silha Center Director and Silha Professor of Media Ethics and Law Jane Kirtley, filed an amici brief in response to the ruling, explaining that National Security Letters (NSL) issued by the FBI are accompanied by a nondisclosure order, which “empowers the government to preemptively gag a wire or electronic communication service provider from speaking about the government’s request for information about a subscriber.” The brief contended that these orders constitute prior restraints in violation of the U.S. Constitution and U.S. Supreme Court […]

BRKSEC-2011.Pdf

About Garlic and Onions: A little journey… Tobias Mayer, Technical Solutions Architect. BRKSEC-2011. #CLUS. © 2018 Cisco and/or its affiliates. All rights reserved. Cisco Public.

Me: CCIE Security #14390, CISSP & Motorboat driving license… Working in Content Security & TLS Security. tmayer{at}cisco.com. Writing stuff at “blogs.cisco.com”.

Agenda: • Why anonymization? • Using Tor (Onion Routing) • How Tor works • Introduction to Onion Routing • Obfuscation within Tor • Domain Fronting • Detect Tor • I2P – Invisible Internet Project • Introduction to Garlic Routing • Conclusion

Cisco Webex Teams: Questions? Use Cisco Webex Teams (formerly Cisco Spark) to chat with the speaker after the session. How: 1. Find this session in the Cisco Events App. 2. Click “Join the Discussion”. 3. Install Webex Teams or go directly to the team space. 4. Enter messages/questions in the team space. Webex Teams will be moderated by the speaker until June 18, 2018. cs.co/ciscolivebot#BRKSEC-2011

Different Intentions: Hide me from Government! Hide me from ISP! Hide me from tracking! Bypass Corporate policies. Bypass Country restrictions (Videos…). Access Hidden Services.

Browser Identity: Tracking does not require a “Name”. Tracking is done by examining parameters your browser reveals. https://panopticlick.eff.org

Proxies: EPIC Browser

People's Tech Movement to Kick Big Tech out of Africa Could Form a Critical Part of the Global Protests Against the Enduring Legacy of Racism and Colonialism

CONTENTS
Acronyms
1 Introduction: The Rise of Digital Colonialism and Surveillance Capitalism
2 Threat Modeling
3 The Basics of Information Security and Software
4 Mobile Phones: Talking and Texting
5 Web Browsing
6 Searching the Web
7 Sharing Data Safely
8 Email Encryption
9 Video Chat
10 Online Document Collaboration
11 Protecting Your Data

Blocking-Resistant Communication Through Domain Fronting

Proceedings on Privacy Enhancing Technologies 2015; 2015 (2):46–64. David Fifield*, Chang Lan, Rod Hynes, Percy Wegmann, and Vern Paxson. Blocking-resistant communication through domain fronting. Abstract: We describe “domain fronting,” a versatile censorship circumvention technique that hides the remote endpoint of a communication. Domain fronting works at the application layer, using HTTPS, to communicate with a forbidden host while appearing to communicate with some other host, permitted by the censor. The key idea is the use of different domain names at different layers of communication. One domain appears on the “outside” of an HTTPS request (in the DNS request and TLS Server Name Indication) while another domain appears on the “inside” (in the HTTP Host header, invisible to the censor under HTTPS encryption). A censor, unable to distinguish fronted and non-fronted traffic to a domain, must choose between allowing circumvention traffic and blocking the domain entirely, which results in expensive collateral damage.

1 Introduction. Censorship is a daily reality for many Internet users. Workplaces, schools, and governments use technical and social means to prevent access to information by the network users under their control. In response, those users employ technical and social means to gain access to the forbidden information. We have seen an ongoing conflict between censor and censored, with advances on both sides, more subtle evasion countered by more powerful detection. Circumventors, at a natural disadvantage because the censor controls the network, have a point working in their favor: the censor’s distaste for “collateral damage,” incidental overblocking committed in the course of […]

Download Or Upload

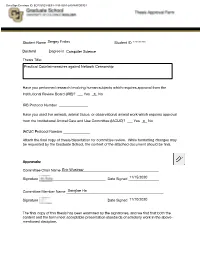

DocuSign Envelope ID: ECF321E3-5E94-4153-9254-2A048AFD03E4. Student Name: Sergey Frolov. Student ID: *********. Doctoral Degree in Computer Science. Thesis Title: Practical Countermeasures against Network Censorship. Have you performed research involving human subjects which requires approval from the Institutional Review Board (IRB)? No. Have you used live animals, animal tissue, or observational animal work which requires approval from the Institutional Animal Care and Use Committee (IACUC)? No. Attach the final copy of thesis/dissertation for committee review. While formatting changes may be requested by the Graduate School, the content of the attached document should be final. Approvals: Committee Chair: Eric Wustrow (date signed 11/15/2020). Committee Member: Sangtae Ha (date signed 11/10/2020). The final copy of this thesis has been examined by the signatories, and we find that both the content and the form meet acceptable presentation standards of scholarly work in the above-mentioned discipline. Practical Countermeasures […]

Domain Shadowing: Leveraging Content Delivery Networks for Robust Blocking-Resistant Communications

Domain Shadowing: Leveraging Content Delivery Networks for Robust Blocking-Resistant Communications. Mingkui Wei, Cybersecurity Engineering, George Mason University, Fairfax, VA, 22030. Abstract: We debut domain shadowing, a novel censorship evasion technique leveraging content delivery networks (CDNs). Domain shadowing exploits the fact that CDNs allow their customers to claim arbitrary domains as the back-end. By setting the front-end of a CDN service as an allowed domain and the back-end a blocked one, a censored user can access resources of the blocked domain with all “indicators”, including the connecting URL, the SNI of the TLS connection, and the Host header of the HTTP(S) request, appearing to belong to the allowed domain. Furthermore, we demonstrate that domain shadowing can be proliferated by domain fronting, a censorship evasion technique popularly used a few years ago, making it even more difficult to block.

[…] according to the Host header but have the TLS connection still appear to belong to the allowed domain. The blocking-resistance of domain fronting derives from the significant “collateral damage”, i.e., to disable domain fronting, the censor needs to block users from accessing the entire CDN, resulting in all domains on the CDN being inaccessible. Because today’s Internet relies heavily on web caches and many high-profile websites also use CDNs to distribute their content, completely blocking access to a particular CDN may not be a feasible option for the censor. Because of its strong blocking-resistant power, domain fronting has been adopted by many censorship evasion systems since it has been proposed [24, 28, 34, 36]. In the last two years, however, many CDNs began to disable domain fronting by enforcing the match between the SNI and […]

Enhancing Cybersecurity for Industry 4.0 in Asia and the Pacific

Enhancing Cybersecurity for Industry 4.0 in Asia and the Pacific. Asia-Pacific Information Superhighway (AP-IS) Working Paper Series. The Economic and Social Commission for Asia and the Pacific (ESCAP) serves as the United Nations’ regional hub promoting cooperation among countries to achieve inclusive and sustainable development. The largest regional intergovernmental platform with 53 member States and 9 associate members, ESCAP has emerged as a strong regional think tank offering countries sound analytical products that shed insight into the evolving economic, social and environmental dynamics of the region. The Commission’s strategic focus is to deliver on the 2030 Agenda for Sustainable Development, which it does by reinforcing and deepening regional cooperation and integration to advance connectivity, financial cooperation and market integration. ESCAP’s research and analysis coupled with its policy advisory services, capacity building and technical assistance to governments aim to support countries’ sustainable and inclusive development ambitions. The shaded areas of the map indicate ESCAP members and associate members. Disclaimer: The Asia-Pacific Information Superhighway (AP-IS) Working Papers provide policy-relevant analysis on regional trends and challenges in support of the development of the AP-IS and inclusive development. The findings should not be reported as representing the views of the United Nations. The views expressed herein are those of the authors. This working paper has been issued without formal editing, and the designations employed and material presented do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries.

By Israa Alsars

QATAR UNIVERSITY, COLLEGE OF ENGINEERING. Free Chain: Enabling Freedom of Expression through Public Blockchains. By Israa Alsarsour. A Thesis Submitted to the Faculty of the College of Engineering in Partial Fulfillment of the Requirements for the Degree of Masters of Science in Computing. June 2021. © 2021 Israa Alsarsour. All Rights Reserved. COMMITTEE PAGE: The members of the Committee approve the Thesis of Israa Alsarsour defended on 19/04/2021. Qutaiba M. Malluhi, Thesis/Dissertation Supervisor; Abdelaziz Bouras, Committee Member; Aiman Erbad, Committee Member; Devrim Unal, Committee Member. Approved: Khalifa Nasser Al-Khalifa, Dean, College of Engineering. ABSTRACT: ALSARSOUR, ISRAA SALEM, Masters: June 2021, Masters of Science in Computing. Title: Free Chain: Enabling Freedom of Expression through Public Blockchains. Supervisor of Thesis: Qutaibah M. Malluhi. Everyone should have the right to expression and opinion without interference. Nevertheless, Internet censorship is often misused to block freedom of speech. The distributed ledger technology provides a globally shared database geographically dispersed and cannot be controlled by a central authority. Blockchain is an emerging technology that enabled distributed ledgers and has recently been employed for building various types of applications. This thesis demonstrates a unique application of blockchain technologies to create a platform supporting freedom of expression. The thesis adopts permissionless and public blockchains to leverage their advantages of providing an on-chain immutable and tamper-proof publication medium. The study shows the blockchain potential for delivering a censorship-resistant publication platform. The thesis presents and evaluates possible methods for building such a system using the Bitcoin and Ethereum blockchain networks.

Supreme Court of the United States

No. 19-783. IN THE Supreme Court of the United States. NATHAN VAN BUREN, Petitioner, v. UNITED STATES, Respondent. ON WRIT OF CERTIORARI TO THE UNITED STATES COURT OF APPEALS FOR THE ELEVENTH CIRCUIT. BRIEF OF AMICI CURIAE COMPUTER SECURITY RESEARCHERS, ELECTRONIC FRONTIER FOUNDATION, CENTER FOR DEMOCRACY & TECHNOLOGY, BUGCROWD, RAPID7, SCYTHE, AND TENABLE IN SUPPORT OF PETITIONER. ANDREW CROCKER, Counsel of Record; NAOMI GILENS. ELECTRONIC FRONTIER FOUNDATION, 815 Eddy Street, San Francisco, California 94109, (415) 436-9333. Counsel for Amici Curiae.

TABLE OF CONTENTS
TABLE OF CONTENTS
TABLE OF CITED AUTHORITIES
INTEREST OF AMICI CURIAE
SUMMARY OF ARGUMENT
ARGUMENT
I. The Work of the Computer Security Research Community Is Vital to the Public Interest
A. Computer Security Benefits from the Involvement of Independent Researchers
B. Security Researchers Have Made Important Contributions to the Public Interest by Identifying Security Threats in Essential Infrastructure, Voting Systems, Medical Devices, Vehicle Software, and More
II. The Broad Interpretation of the CFAA Adopted by the Eleventh Circuit Chills Valuable Security Research
A. The Eleventh Circuit’s Interpretation of the CFAA Would Extend to Violations of Website Terms of Service and Other Written Restrictions on Computer Use
B. Standard Computer Security Research Methods Can Violate Written Access Restrictions
C. The Broad Interpretation of the CFAA Discourages Researchers from Pursuing and Disclosing Security Flaws
D. Voluntary Disclosure Guidelines and Industry-Sponsored Bug Bounty Programs Are Not Sufficient to Mitigate the Chill