Simulation of Fractional Brownian Motion

Recommended publications

Stationary Processes and Their Statistical Properties

Stationary Processes and Their Statistical Properties. Brian Borchers, March 29, 2001. 1 Stationary processes. A discrete time stochastic process is a sequence of random variables $Z_1, Z_2, \ldots$. In practice we will typically analyze a single realization $z_1, z_2, \ldots, z_n$ of the stochastic process and attempt to estimate the statistical properties of the stochastic process from the realization. We will also consider the problem of predicting $z_{n+1}$ from the previous elements of the sequence. We will begin by focusing on the very important class of stationary stochastic processes. A stochastic process is strictly stationary if its statistical properties are unaffected by shifting the stochastic process in time. In particular, this means that if we take a subsequence $Z_{k+1}, \ldots, Z_{k+m}$, then the joint distribution of the $m$ random variables will be the same no matter what $k$ is. Stationarity requires that the mean of the stochastic process be constant, $E[Z_k] = \mu$, and that the variance be constant, $\mathrm{Var}[Z_k] = \sigma_Z^2$. Also, stationarity requires that the covariance of two elements separated by a distance $m$ is constant. That is, $\mathrm{Cov}(Z_k, Z_{k+m})$ is constant. This covariance is called the autocovariance at lag $m$, and we will use the notation $\gamma_m$. Since $\mathrm{Cov}(Z_k, Z_{k+m}) = \mathrm{Cov}(Z_{k+m}, Z_k)$, we need only find $\gamma_m$ for $m \ge 0$. The correlation of $Z_k$ and $Z_{k+m}$ is the autocorrelation at lag $m$. We will use the notation $\rho_m$ for the autocorrelation. It is easy to show that $\rho_k = \gamma_k / \gamma_0$. 2 The autocovariance and autocorrelation matrices. The covariance matrix for the random variables $Z_1, \ldots, Z_n$ is called an autocovariance matrix.
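The quantities in this excerpt can be estimated from a single realization. The following is a minimal sketch, not taken from the cited notes: the function names are hypothetical, and the choice of the biased estimator (dividing by n rather than n - m) is an assumption made here for simplicity.

```python
def sample_autocovariance(z, max_lag):
    """Estimate gamma_0 .. gamma_max_lag from one realization z.

    Assumption: the biased estimator (divide by n at every lag) is used.
    """
    n = len(z)
    mean = sum(z) / n
    d = [v - mean for v in z]  # deviations from the sample mean
    return [
        sum(d[k] * d[k + m] for k in range(n - m)) / n
        for m in range(max_lag + 1)
    ]


def sample_autocorrelation(z, max_lag):
    """Estimate rho_m = gamma_m / gamma_0 for m = 0 .. max_lag."""
    gamma = sample_autocovariance(z, max_lag)
    return [g / gamma[0] for g in gamma]


def autocovariance_matrix(gamma, n):
    """Build the n x n matrix whose (i, j) entry is gamma[|i - j|].

    Requires len(gamma) >= n.
    """
    return [[gamma[abs(i - j)] for j in range(n)] for i in range(n)]
```

For example, `sample_autocorrelation([1, 2, 3, 4, 5], 1)` gives a lag-0 value of 1 and a lag-1 value of 0.4, and `autocovariance_matrix` arranges the estimated autocovariances into the symmetric matrix the excerpt introduces.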

Markovian Bridges: Weak Continuity and Pathwise Constructions

The Annals of Probability 2011, Vol. 39, No. 2, 609–647. DOI: 10.1214/10-AOP562. © Institute of Mathematical Statistics, 2011. Markovian Bridges: Weak Continuity and Pathwise Constructions. By Loïc Chaumont and Gerónimo Uribe Bravo, Université d'Angers and Universidad Nacional Autónoma de México. A Markovian bridge is a probability measure taken from a disintegration of the law of an initial part of the path of a Markov process given its terminal value. As such, Markovian bridges admit a natural parameterization in terms of the state space of the process. In the context of Feller processes with continuous transition densities, we construct by weak convergence considerations the only versions of Markovian bridges which are weakly continuous with respect to their parameter. We use this weakly continuous construction to provide an extension of the strong Markov property in which the flow of time is reversed. In the context of self-similar Feller process, the last result is shown to be useful in the construction of Markovian bridges out of the trajectories of the original process. 1. Introduction and main results. 1.1. Motivation. The aim of this article is to study Markov processes on $[0,t]$, starting at $x$, conditioned to arrive at $y$ at time $t$. Historically, the first example of such a conditional law is given by Paul Lévy's construction of the Brownian bridge: given a Brownian motion $B$ starting at zero, let $b^{x,y,t}_s = x + B_s - \frac{s}{t} B_t + (y - x)\frac{s}{t}$. arXiv:0905.2155v3 [math.PR], 14 Mar 2011. Received May 2009; revised April 2010.
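Lévy's construction quoted in this excerpt translates directly into a simulation recipe on a time grid. The sketch below is an illustration only: the function names, the uniform grid, and the use of Python's `random.Random` generator are assumptions made here, not part of the cited paper.

```python
import math
import random


def brownian_path(t_end, n_steps, rng):
    """Sample B at times 0, dt, ..., t_end with B_0 = 0 (Gaussian increments)."""
    dt = t_end / n_steps
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


def brownian_bridge(x, y, t_end, n_steps, rng):
    """Bridge from x at time 0 to y at time t_end on a uniform grid.

    Applies b_s = x + B_s - (s/t) B_t + (y - x) (s/t) at each grid time s.
    """
    b = brownian_path(t_end, n_steps, rng)
    bridge = []
    for k, b_s in enumerate(b):
        frac = k / n_steps  # s / t on the uniform grid
        bridge.append(x + b_s - frac * b[-1] + (y - x) * frac)
    return bridge


bridge = brownian_bridge(1.0, 3.0, 2.0, 100, random.Random(0))
```

The first grid value equals x exactly and the last equals y up to floating-point rounding, because the term `frac * b[-1]` cancels the endpoint of the underlying Brownian path.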

Stochastic Process - Introduction

Stochastic Process - Introduction • Stochastic processes are processes that proceed randomly in time. • Rather than consider fixed random variables $X$, $Y$, etc., or even sequences of i.i.d. random variables, we consider sequences $X_0, X_1, X_2, \ldots$, where $X_t$ represents some random quantity at time $t$. • In general, the value $X_t$ might depend on the quantity $X_{t-1}$ at time $t-1$, or even on the values $X_s$ for other times $s < t$. • Example: simple random walk. (Week 2.) Stochastic Process - Definition • A stochastic process is a family of time-indexed random variables $X_t$, where $t$ belongs to an index set. Formal notation: $\{X_t : t \in I\}$, where $I$ is an index set that is a subset of $\mathbb{R}$. • Examples of index sets: 1) $I = (-\infty, \infty)$ or $I = [0, \infty)$. In this case $X_t$ is a continuous time stochastic process. 2) $I = \{0, \pm 1, \pm 2, \ldots\}$ or $I = \{0, 1, 2, \ldots\}$. In this case $X_t$ is a discrete time stochastic process. • We use uppercase letters, $\{X_t\}$, to describe the process. A time series $\{x_t\}$ is a realization or sample function from a certain process. • We use information from a time series to estimate parameters and properties of the process $\{X_t\}$. Probability Distribution of a Process • For any stochastic process with index set $I$, its probability distribution function is uniquely determined by its finite dimensional distributions. • The $k$-dimensional distribution function of a process is defined by $F_{X_{t_1}, \ldots, X_{t_k}}(x_1, \ldots, x_k) = P(X_{t_1} \le x_1, \ldots, X_{t_k} \le x_k)$ for any $t_1, \ldots, t_k \in I$ and any real numbers $x_1, \ldots, x_k$.

Autocovariance Function Estimation Via Penalized Regression

Autocovariance Function Estimation via Penalized Regression. Lina Liao, Cheolwoo Park, Department of Statistics, University of Georgia, Athens, GA 30602, USA; Jan Hannig, Department of Statistics and Operations Research, University of North Carolina, Chapel Hill, NC 27599, USA; Kee-Hoon Kang, Department of Statistics, Hankuk University of Foreign Studies, Yongin, 449-791, Korea. Abstract: The work revisits the autocovariance function estimation, a fundamental problem in statistical inference for time series. We convert the function estimation problem into constrained penalized regression with a generalized penalty that provides us with flexible and accurate estimation, and study the asymptotic properties of the proposed estimator. In case of a nonzero mean time series, we apply a penalized regression technique to a differenced time series, which does not require a separate detrending procedure. In penalized regression, selection of tuning parameters is critical and we propose four different data-driven criteria to determine them. A simulation study shows effectiveness of the tuning parameter selection and that the proposed approach is superior to three existing methods. We also briefly discuss the extension of the proposed approach to interval-valued time series. Some key words: Autocovariance function; Differenced time series; Regularization; Time series; Tuning parameter selection. 1 Introduction. Let $\{Y_t, t \in T\}$ be a stochastic process such that $\mathrm{Var}(Y_t) < \infty$ for all $t \in T$. The autocovariance function of $\{Y_t\}$ is given as $\gamma(s, t) = \mathrm{Cov}(Y_s, Y_t)$ for all $s, t \in T$. In this work, we consider a regularly sampled time series $\{(i, Y_i), i = 1, \ldots, n\}$. Its model can be written as $Y_i = g(i) + \epsilon_i$, $i = 1, \ldots, n$, (1.1) where $g$ is a smooth deterministic function and the error is assumed to be a weakly stationary process with $E(\epsilon_i) = 0$, $\mathrm{Var}(\epsilon_i) = \sigma^2$ and $\mathrm{Cov}(\epsilon_i, \epsilon_j) = \gamma(|i - j|)$ for all $i, j = 1, \ldots, n$. The estimation of the autocovariance function $\gamma$ (or autocorrelation function) is crucial to determine the degree of serial correlation in a time series.

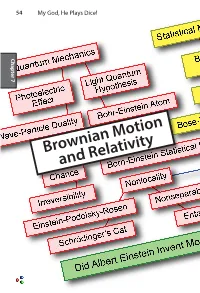

Brownian Motion and Relativity

My God, He Plays Dice! Chapter 7: Brownian Motion and Relativity. In this chapter we describe two of Einstein's greatest works that have little or nothing to do with his amazing and deeply puzzling theories about quantum mechanics. The first, Brownian motion, provided the first quantitative proof of the existence of atoms and molecules. The second, special relativity in his miracle year of 1905 and general relativity eleven years later, combined the ideas of space and time into a unified space-time with a non-Euclidean curvature that goes beyond Newton's theory of gravitation. Einstein's relativity theory explained the precession of the orbit of Mercury and predicted the bending of light as it passes the sun, confirmed by Arthur Stanley Eddington in 1919. He also predicted that galaxies can act as gravitational lenses, focusing light from objects far beyond, as was confirmed in 1979. He also predicted gravitational waves, only detected in 2016, one century after Einstein wrote down the equations that explain them. What are we to make of this man who could see things that others could not? Our thesis is that if we look very closely at the things he said, especially his doubts expressed privately to friends, today's mysteries of quantum mechanics may be lessened. As great as Einstein's theories of Brownian motion and relativity are, they were accepted quickly because measurements were soon made that confirmed their predictions. Moreover, contemporaries of Einstein were working on these problems. Marian Smoluchowski worked out the equation for the rate of diffusion of large particles in a liquid the year before Einstein.

Patterns in Random Walks and Brownian Motion

Patterns in Random Walks and Brownian Motion. Jim Pitman and Wenpin Tang. Abstract: We ask if it is possible to find some particular continuous paths of unit length in linear Brownian motion. Beginning with a discrete version of the problem, we derive the asymptotics of the expected waiting time for several interesting patterns. These suggest corresponding results on the existence/non-existence of continuous paths embedded in Brownian motion. With further effort we are able to prove some of these existence and non-existence results by various stochastic analysis arguments. A list of open problems is presented. AMS 2010 Mathematics Subject Classification: 60C05, 60G17, 60J65. 1 Introduction and Main Results. We are interested in the question of embedding some continuous-time stochastic processes $(Z_u, 0 \le u \le 1)$ into a Brownian path $(B_t; t \ge 0)$, without time-change or scaling, just by a random translation of origin in spacetime. More precisely, we ask the following: Question 1. Given some distribution of a process $Z$ with continuous paths, does there exist a random time $T$ such that $(B_{T+u} - B_T; 0 \le u \le 1)$ has the same distribution as $(Z_u, 0 \le u \le 1)$? The question of whether external randomization is allowed to construct such a random time $T$ is of no importance here. In fact, we can simply ignore Brownian. J. Pitman, W. Tang: Department of Statistics, University of California, 367 Evans Hall, Berkeley, CA 94720-3860, USA. © Springer International Publishing Switzerland 2015. C. Donati-Martin et al.

Constructing a Sequence of Random Walks Strongly Converging to Brownian Motion Philippe Marchal

Constructing a sequence of random walks strongly converging to Brownian motion. Philippe Marchal. To cite this version: Philippe Marchal. Constructing a sequence of random walks strongly converging to Brownian motion. Discrete Random Walks, DRW'03, 2003, Paris, France. pp. 181-190. hal-01183930. HAL Id: hal-01183930, https://hal.inria.fr/hal-01183930. Submitted on 12 Aug 2015. Discrete Mathematics and Theoretical Computer Science AC, 2003, 181–190. Philippe Marchal, CNRS and École normale supérieure, 45 rue d'Ulm, 75005 Paris, France. We give an algorithm which constructs recursively a sequence of simple random walks on $\mathbb{Z}$ converging almost surely to a Brownian motion. One obtains by the same method conditional versions of the simple random walk converging to the excursion, the bridge, the meander or the normalized pseudobridge. Keywords: strong convergence, simple random walk, Brownian motion. 1 Introduction. It is one of the most basic facts in probability theory that random walks, after proper rescaling, converge to Brownian motion. However, Donsker's classical theorem [Don51] only states a convergence in law.

An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability

Nanomaterials. Review. An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability. Lucia Pavoni, Diego Romano Perinelli, Giulia Bonacucina, Marco Cespi and Giovanni Filippo Palmieri, School of Pharmacy, University of Camerino, 62032 Camerino, Italy. Received: 23 December 2019; Accepted: 10 January 2020; Published: 12 January 2020. Abstract: The interest around essential oils is constantly increasing thanks to their biological properties exploitable in several fields, from pharmaceuticals to food and agriculture. However, their widespread use and marketing are still restricted due to their poor physico-chemical properties; i.e., high volatility, thermal decomposition, low water solubility, and stability issues. At the moment, the most suitable approach to overcome such limitations is based on the development of proper formulation strategies. One of the approaches suggested to achieve this goal is the so-called encapsulation process through the preparation of aqueous nano-dispersions. Among them, micro- and nanoemulsions are the most studied thanks to the ease of formulation, handling and to their manufacturing costs. In this direction, this review intends to offer an overview of the formulation, preparation and stability parameters of micro- and nanoemulsions. Specifically, recent literature has been examined in order to define the most common practices adopted (materials and fabrication methods), highlighting their suitability and effectiveness. Finally, relevant points related to formulations, such as optimization, characterization, stability and safety, not deeply studied or clarified yet, were discussed.

Size and Shape of Tracked Brownian Bridges (arXiv:2004.05394v1 [math.PR], 11 Apr 2020)

Size and Shape of Tracked Brownian Bridges. Abdulrahman Alsolami, James Burridge and Michał Gnacik. Abstract: We investigate the typical sizes and shapes of sets of points obtained by irregularly tracking two-dimensional Brownian bridges. The tracking process consists of observing the path location at the arrival times of a non-homogeneous Poisson process on a finite time interval. The time varying intensity of this observation process is the tracking strategy. By analysing the gyration tensor of tracked points we prove two theorems which relate the tracking strategy to the average gyration radius, and to the asphericity, a measure of how non-spherical the point set is. The act of tracking may be interpreted either as a process of observation, or as a process of depositing time decaying "evidence" such as scent, environmental disturbance, or disease particles. We present examples of different strategies, and explore by simulation the effects of varying the total number of tracking points. 1. Introduction. Understanding the statistical properties of human and animal movement processes is of interest to ecologists [1, 2, 3], epidemiologists [4, 5, 6], criminologists [7], physicists and mathematicians [8, 9, 10, 11], including those interested in the evolution of human culture and language [12, 13, 14]. Advances in information and communication technologies have allowed automated collection of large numbers of human and animal trajectories [15, 16], allowing real movement patterns to be studied in detail and compared to idealised mathematical models. Beyond academic study, movement data has important practical applications, for example in controlling the spread of disease through contact tracing [6]. Due to the growing availability and applications of tracking information, it is useful to possess a greater analytical understanding of the typical shape and size characteristics of trajectories which are observed, or otherwise emit information.

Rescaled Range Analysis and Detrended Fluctuation Analysis: Finite Sample Properties and Confidence Intervals

AUCO Czech Economic Review 4 (2010) 236–250. Acta Universitatis Carolinae Oeconomica. Rescaled Range Analysis and Detrended Fluctuation Analysis: Finite Sample Properties and Confidence Intervals. Ladislav Krištoufek. Received 14 September 2009; Accepted 1 March 2010. Abstract: We focus on finite sample properties of two mostly used methods of Hurst exponent $H$ estimation: rescaled range analysis (R/S) and detrended fluctuation analysis (DFA). Even though both methods have been widely applied on different types of financial assets, only several papers have dealt with the finite sample properties which are crucial as the properties differ significantly from the asymptotic ones. Recently, R/S analysis has been shown to overestimate $H$ when compared to DFA. However, we show that even though the estimates of R/S are truly significantly higher than an asymptotic limit of 0.5, for random time series with lengths from $2^9$ to $2^{17}$, they remain very close to the estimates proposed by Anis & Lloyd and the estimated standard deviations are lower than the ones of DFA. On the other hand, DFA estimates are very close to 0.5. The results propose that R/S still remains useful and robust method even when compared to newer method of DFA which is usually preferred in recent literature. Keywords: Rescaled range analysis, detrended fluctuation analysis, Hurst exponent, long-range dependence, confidence intervals. JEL classification: G1, G10, G14, G15. 1. Introduction. Long-range dependence and its presence in the financial time series has been discussed in several recent papers (Czarnecki et al. 2008; Grech and Mazur 2004; Carbone et al. 2004; Matos et al.
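For readers unfamiliar with rescaled range analysis, the following is a minimal sketch of the classical R/S estimate of the Hurst exponent. It is not the procedure of the cited paper: the function names, the non-overlapping blocks, the doubling window sizes and the default `min_window` are all assumptions chosen here for illustration.

```python
import math
import random


def rescaled_range(x):
    """R/S of one block: range of the cumulative deviations from the block
    mean, divided by the block's (population) standard deviation.

    Assumes the block is not constant, so the standard deviation is nonzero.
    """
    n = len(x)
    mean = sum(x) / n
    cum = lo = hi = ss = 0.0
    for v in x:
        cum += v - mean  # running profile of deviations
        lo = min(lo, cum)
        hi = max(hi, cum)
        ss += (v - mean) ** 2
    return (hi - lo) / math.sqrt(ss / n)


def hurst_rs(x, min_window=16):
    """Slope of log(mean R/S) against log(window size) by least squares."""
    log_n, log_rs = [], []
    n = min_window
    while n <= len(x) // 2:
        blocks = [x[i:i + n] for i in range(0, len(x) - n + 1, n)]
        mean_rs = sum(rescaled_range(b) for b in blocks) / len(blocks)
        log_n.append(math.log(n))
        log_rs.append(math.log(mean_rs))
        n *= 2
    if len(log_n) < 2:
        raise ValueError("series too short for the chosen min_window")
    mx = sum(log_n) / len(log_n)
    my = sum(log_rs) / len(log_rs)
    sxy = sum((a - mx) * (b - my) for a, b in zip(log_n, log_rs))
    sxx = sum((a - mx) ** 2 for a in log_n)
    return sxy / sxx


rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(2 ** 12)]
h = hurst_rs(noise)  # independent noise: asymptotic limit is 0.5
```

Applied to independent Gaussian noise as above, the estimate typically lands somewhat above 0.5 at these sample sizes, which is the finite sample effect the abstract discusses.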

On Conditionally Heteroscedastic AR Models with Thresholds

Statistica Sinica 24 (2014), 625-652. doi:http://dx.doi.org/10.5705/ss.2012.185. On Conditionally Heteroscedastic AR Models with Thresholds. Kung-Sik Chan, Dong Li, Shiqing Ling and Howell Tong. University of Iowa, Tsinghua University, Hong Kong University of Science & Technology and London School of Economics & Political Science. Abstract: Conditional heteroscedasticity is prevalent in many time series. By viewing conditional heteroscedasticity as the consequence of a dynamic mixture of independent random variables, we develop a simple yet versatile observable mixing function, leading to the conditionally heteroscedastic AR model with thresholds, or a T-CHARM for short. We demonstrate its many attributes and provide comprehensive theoretical underpinnings with efficient computational procedures and algorithms. We compare, via simulation, the performance of T-CHARM with the GARCH model. We report some experiences using data from economics, biology, and geoscience. Key words and phrases: Compound Poisson process, conditional variance, heavy tail, heteroscedasticity, limiting distribution, quasi-maximum likelihood estimation, random field, score test, T-CHARM, threshold model, volatility. 1. Introduction. We can often model a time series as the sum of a conditional mean function, the drift or trend, and a conditional variance function, the diffusion. See, e.g., Tong (1990). The drift attracted attention from very early days, although the importance of the diffusion did not go entirely unnoticed, with an example in ecological populations as early as Moran (1953); a systematic modelling of the diffusion did not seem to attract serious attention before the 1980s. For discrete-time cases, our focus, as far as we are aware, it is in the econometric and finance literature that the modelling of the conditional variance has been treated seriously, although Tong and Lim (1980) did include conditional heteroscedasticity.

Improved Estimates for the Rescaled Range and Hurst Exponents

Appears in Neural Networks in the Capital Markets, Proceedings of the Third International Conference (London, October), A. Refenes, Y. Abu-Mostafa, J. Moody, and A. Weigend, eds., World Scientific, London. Improved Estimates for the Rescaled Range and Hurst Exponents. John Moody and Lizhong Wu, Computer Science Dept., Oregon Graduate Institute, Portland, OR. Abstract: Rescaled Range (R/S) analysis and Hurst exponents are widely used as measures of long-term memory structures in stochastic processes. Our empirical studies show, however, that these statistics can incorrectly indicate departures from random walk behavior on short and intermediate time scales when very short-term correlations are present. A modification of rescaled range estimation (R/S analysis) intended to correct bias due to short-term dependencies was proposed by Lo. We show, however, that Lo's R/S statistic is itself biased and introduces other problems, including distortion of the Hurst exponents. We propose a new R/S statistic that corrects for mean bias in the range R but does not suffer from the short-term biases of R/S or Lo's R/S. We support our conclusions with experiments on simulated random walk and AR processes and experiments using high frequency interbank DEM/USD exchange rate quotes. We conclude that the DEM/USD series is mildly trending on time scales of […] to […] ticks, and that the mean reversion suggested on these time scales by R/S or Lo's R/S analysis is spurious. Introduction and Overview. There are three widely used methods for long-term dependence