Stochastic Local Search and the Lovász Local Lemma

Recommended publications

-

FOCS 2005 Program SUNDAY October 23, 2005

FOCS 2005 Program SUNDAY October 23, 2005 Talks in Grand Ballroom, 17th floor Session 1: 8:50am – 10:10am Chair: Eva´ Tardos 8:50 Agnostically Learning Halfspaces Adam Kalai, Adam Klivans, Yishay Mansour and Rocco Servedio 9:10 Noise stability of functions with low influences: invari- ance and optimality The 46th Annual IEEE Symposium on Elchanan Mossel, Ryan O’Donnell and Krzysztof Foundations of Computer Science Oleszkiewicz October 22-25, 2005 Omni William Penn Hotel, 9:30 Every decision tree has an influential variable Pittsburgh, PA Ryan O’Donnell, Michael Saks, Oded Schramm and Rocco Servedio Sponsored by the IEEE Computer Society Technical Committee on Mathematical Foundations of Computing 9:50 Lower Bounds for the Noisy Broadcast Problem In cooperation with ACM SIGACT Navin Goyal, Guy Kindler and Michael Saks Break 10:10am – 10:30am FOCS ’05 gratefully acknowledges financial support from Microsoft Research, Yahoo! Research, and the CMU Aladdin center Session 2: 10:30am – 12:10pm Chair: Satish Rao SATURDAY October 22, 2005 10:30 The Unique Games Conjecture, Integrality Gap for Cut Problems and Embeddability of Negative Type Metrics Tutorials held at CMU University Center into `1 [Best paper award] Reception at Omni William Penn Hotel, Monongahela Room, Subhash Khot and Nisheeth Vishnoi 17th floor 10:50 The Closest Substring problem with small distances Tutorial 1: 1:30pm – 3:30pm Daniel Marx (McConomy Auditorium) Chair: Irit Dinur 11:10 Fitting tree metrics: Hierarchical clustering and Phy- logeny Subhash Khot Nir Ailon and Moses Charikar On the Unique Games Conjecture 11:30 Metric Embeddings with Relaxed Guarantees Break 3:30pm – 4:00pm Ittai Abraham, Yair Bartal, T-H. -

The Complexity of Nash Equilibria by Constantinos Daskalakis Diploma

The Complexity of Nash Equilibria by Constantinos Daskalakis Diploma (National Technical University of Athens) 2004 A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley Committee in charge: Professor Christos H. Papadimitriou, Chair Professor Alistair J. Sinclair Professor Satish Rao Professor Ilan Adler Fall 2008 The dissertation of Constantinos Daskalakis is approved: Chair Date Date Date Date University of California, Berkeley Fall 2008 The Complexity of Nash Equilibria Copyright 2008 by Constantinos Daskalakis Abstract The Complexity of Nash Equilibria by Constantinos Daskalakis Doctor of Philosophy in Computer Science University of California, Berkeley Professor Christos H. Papadimitriou, Chair The Internet owes much of its complexity to the large number of entities that run it and use it. These entities have different and potentially conflicting interests, so their interactions are strategic in nature. Therefore, to understand these interactions, concepts from Economics and, most importantly, Game Theory are necessary. An important such concept is the notion of Nash equilibrium, which provides us with a rigorous way of predicting the behavior of strategic agents in situations of conflict. But the credibility of the Nash equilibrium as a framework for behavior-prediction depends on whether such equilibria are efficiently computable. After all, why should we expect a group of rational agents to behave in a fashion that requires exponential time to be computed? Motivated by this question, we study the computational complexity of the Nash equilibrium. We show that computing a Nash equilibrium is an intractable problem. -

From the AMS Secretary

From the AMS Secretary Society and delegate to such committees such powers as Bylaws of the may be necessary or convenient for the proper exercise American Mathematical of those powers. Agents appointed, or members of com- mittees designated, by the Board of Trustees need not be Society members of the Board. Nothing herein contained shall be construed to em- Article I power the Board of Trustees to divest itself of responsi- bility for, or legal control of, the investments, properties, Officers and contracts of the Society. Section 1. There shall be a president, a president elect (during the even-numbered years only), an immediate past Article III president (during the odd-numbered years only), three Committees vice presidents, a secretary, four associate secretaries, a Section 1. There shall be eight editorial committees as fol- treasurer, and an associate treasurer. lows: committees for the Bulletin, for the Proceedings, for Section 2. It shall be a duty of the president to deliver the Colloquium Publications, for the Journal, for Mathemat- an address before the Society at the close of the term of ical Surveys and Monographs, for Mathematical Reviews; office or within one year thereafter. a joint committee for the Transactions and the Memoirs; Article II and a committee for Mathematics of Computation. Section 2. The size of each committee shall be deter- Board of Trustees mined by the Council. Section 1. There shall be a Board of Trustees consisting of eight trustees, five trustees elected by the Society in Article IV accordance with Article VII, together with the president, the treasurer, and the associate treasurer of the Society Council ex officio. -

Conference Program

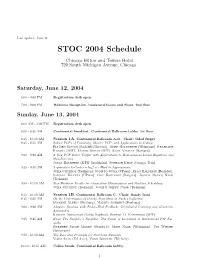

Last update: June 11 STOC 2004 Schedule Chicago Hilton and Towers Hotel 720 South Michigan Avenue, Chicago Saturday, June 12, 2004 5:00 - 9:00 PM Registration desk open 7:00 - 9:00 PM Welcome Reception. Boulevard Room and Foyer, 2nd floor Sunday, June 13, 2004 8:00 AM - 5:00 PM Registration desk open 8:00 - 8:35 AM Continental breakfast. Continental Ballroom Lobby, 1st floor 8:35 - 10:10 AM Session 1A. Continental Ballroom A-B. Chair: Oded Regev 8:35 - 8:55 AM Robust PCPs of Proximity, Shorter PCPs and Applications to Coding Eli Ben-Sasson (Radcliffe/Harvard), Oded Goldreich (Weizmann) Prahladh Harsha (MIT), Madhu Sudan (MIT), Salil Vadhan (Harvard) 9:00 - 9:20 AM A New PCP Outer Verifier with Applications to Homogeneous Linear Equations and Max-Bisection Jonas Holmerin (KTH, Stockholm), Subhash Khot (Georgia Tech) 9:25 - 9:45 AM Asymmetric k-Center is log∗ n - Hard to Approximate Julia Chuzhoy (Technion), Sudipto Guha (UPenn), Eran Halperin (Berkeley), Sanjeev Khanna (UPenn), Guy Kortsarz (Rutgers), Joseph (Seffi) Naor (Technion) 9:50 - 10:10 AM New Hardness Results for Congestion Minimization and Machine Scheduling Julia Chuzhoy (Technion), Joseph (Seffi) Naor (Technion) 8:35 - 10:10 AM Session 1B. Continental Ballroom C. Chair: Sandy Irani 8:35 - 8:55 AM On the Performance of Greedy Algorithms in Packet Buffering Susanne Albers (Freiburg), Markus Schmidt (Freiburg) 9:00 - 9:20 AM Adaptive Routing with End-to-End Feedback: Distributed Learning and Geometric Approaches Baruch Awerbuch (Johns Hopkins), Robert D. Kleinberg (MIT) 9:25 - 9:45 AM Know Thy Neighbor’s Neighbor: The Power of Lookahead in Randomized P2P Net- works Gurmeet Singh Manku (Stanford), Moni Naor (Weizmann), Udi Wieder (Weizmann) 9:50 - 10:10 AM The Zero-One Principle for Switching Networks Yossi Azar (Tel Aviv), Yossi Richter (Tel Aviv) 10:10 - 10:35 AM Coffee break. -

Download and Use Untrusted Code Without Fear

2 KELEY COMPUTER SCIENC CONTENTS INTRODUCTION1 CITRIS AND2 MOTES 30 YEARS OF INNOVATION GENE MYERS4 Q&A 1973–20 0 3 6GRAPHICS INTELLIGENT SYSTEMS 1RESEARCH0 DEPARTMENT STATISTICS14 ROC-SOLID SYSTEMS16 USER INTERFACE DESIGN AND DEVELOPMENT20 INTERDISCIPLINARY THEOR22Y 30PROOF-CARRYING CODE 28 COMPLEXITY 30THEORY FACULTY32 THE COMPUTER SCIENCE DIVISION OF THE DEPARTMENT OF EECS AT UC BERKELEY WAS CREATED IN 1973. THIRTY YEARS OF INNOVATION IS A CELEBRATION OF THE ACHIEVEMENTS OF THE FACULTY, STAFF, STUDENTS AND ALUMNI DURING A PERIOD OF TRULY BREATHTAKING ADVANCES IN COMPUTER SCIENCE AND ENGINEERING. THE FIRST CHAIR OF COMPUTER research in theoretical computer science received a Turing Award in 1989 for this work. learning is bringing us ever closer to the dream SCIENCE AT BERKELEY was Richard Karp, at Berkeley. In the area of programming languages and of truly intelligent machines. who had just shown that the hardness of well- software engineering, Berkeley research has The impact of Berkeley research on the practi- Berkeley’s was the one of the first top comput- known algorithmic problems, such as finding been noted for its flair for combining theory cal end of computer science has been no less er science programs to invest seriously in com- the minimum cost tour for a travelling sales- and practice, as exemplified in these pages significant. The development of Reduced puter graphics, and our illustrious alumni in person, could be related to NP-completeness— by George Necula’s research on proof- Instruction Set computers by David Patterson that area have done us proud. We were not so a concept proposed earlier by former Berkeley carrying code. -

Testing Closeness of Discrete Distributions

Testing Closeness of Discrete Distributions The MIT Faculty has made this article openly available. Please share how this access benefits you. Your story matters. Citation Tugkan Batu, Lance Fortnow, Ronitt Rubinfeld, Warren D. Smith, and Patrick White. 2013. Testing Closeness of Discrete Distributions. J. ACM 60, 1, Article 4 (February 2013), 25 pages. As Published http://dx.doi.org/10.1145/2432622.2432626 Publisher Association for Computing Machinery (ACM) Version Original manuscript Citable link http://hdl.handle.net/1721.1/90630 Terms of Use Creative Commons Attribution-Noncommercial-Share Alike Detailed Terms http://creativecommons.org/licenses/by-nc-sa/4.0/ Testing Closeness of Discrete Distributions∗ Tu˘gkan Batu† Lance Fortnow‡ Ronitt Rubinfeld§ Warren D. Smith¶ Patrick White November 5, 2010 Abstract Given samples from two distributions over an n-element set, we wish to test whether these dis- tributions are statistically close. We present an algorithm which uses sublinear in n, specifically, O(n2/3ǫ−8/3 log n), independent samples from each distribution, runs in time linear in the sample size, makes no assumptions about the structure of the distributions, and distinguishes the cases when the distance between the distributions is small (less than max ǫ4/3n−1/3/32,ǫn−1/2/4 ) { } or large (more than ǫ) in ℓ1 distance. This result can be compared to the lower bound of Ω(n2/3ǫ−2/3) for this problem given by Valiant [54]. Our algorithm has applications to the problem of testing whether a given Markov process is rapidly mixing. We present sublinear algorithms for several variants of this problem as well. -

Lenstree: Browsing and Navigating Large Hierarchical Information

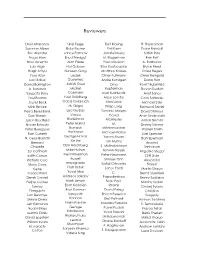

Reviewers Eric Allender Michel Goemans Alexander Rabinovich Noga Alon Leslie Goldberg Prabhakar Raghavan Kevin Atteson Oded Goldreich Ran Raz Hagit Attiya Michael Goodrich Nick Reingold Yonatan Aumann Mor Harchol Phil Rogaway Baruch Awerbuch Bruce Hendrickson Ronitt Rubinfeld Yossi Azar Diane Hernek Steven Rudich Eric Bach Dorit Hochbaum Larry Ruzzo Amotz Bar-Noy Neil Immerman Katherine St. John Iavid Mix Barrington Piotr Indyk Jeanette Schmidt Paul Beame Tao Jiang Steve Seiden Michael Ben-Or David Johnson David Shmoys Andras Benczur Nabil Kahale Peter Shor Charles H. Bennett Sampath Kannan Meera Sitharam Avrim Blum Sanjeev Khanna Cliff Stein Ryan Borgstrom Samir Khuller Craig Silverstein Sam Buss Joe Kilian Alistair Sinclair Paul Callahan Valerie King Greg So&in Ran Canetti Jon Kleinberg Aravind Srinivasan Pei Cao Phokion Kolaitis Madhu Sudan Moses Charikar Elias Koutsoupias Elizabeth Sweedyk Chandra Chekuri Hugo M. Krawczyk Eva Tardos Joseph Cheriyan Eyal Kushilevitz Jacob0 Toran Ken Clarkson Richard Ladner Shang-Hua Teng Richard Cole Vitus Leung Manfred Warmuth Don Coppersmith Jack Lutz John Watrous David Eppstein Gary Miller Joel Wein Martin Farach John Mitchell Scott Weinstein Joan Feigenbaum S. Muthukrishnan Avi Wigderson Anj a Feldmann Joseph (Seffi) Naor David P. Williamson Faith Fich Moni Naor David Bruce Wilson Lance Fortnow Andrew Odylzko Rebecca Wright Matt Franklin Frank J. Oles Shibu Yooseph Alan Frieze Steven Phillips Neal Young Eli Gafni Nick Pippenger William Gasarch Tal Rabin xii . -

Sponsored by IEEE Computer Society Technical Committee On

Reviewers Dorit Aharonov Uriel Feige Ralf Klasing R. Rajaraman Susanne Albers Eldar Fischer Phil Klein Dana Randall Eric Allender Lance Fortnow Jon Kleinberg Satish Rao Noga Alon Ehud Friedgut M. Klugerman Ran Raz Nina Amenta Alan Frieze Pascal Koiran A. Razborov Lars Arge Hal Gabow Elias Koutsoupias Bruce Reed Hagit Attiya Naveen Garg Matthias Krause Oded Regev Yossi Azar Leszek Oliver Kullmann Omer Reingold Laci Babai Gasieniec Andre Kundgen Dana Ron David Barrington Ashish Goel Orna Ronitt Rubinfeld A. Barvinok Michel Kupferman Steven Rudich Saugata Basu Goemans Eyal Kushilevitz Amit Sahai Paul Beame Paul Goldberg Arjen Lenstra Cenk Sahinalp Jozsef Beck Oded Goldreich Nati Linial Michael Saks Mihir Bellare M. Grigni Philip Long Raimund Seidel Petra Berenbrink Leo Guibas Dominic Mayers David Shmoys Dan Boneh Vassos David Amin Shokrollahi Julian Bradfield Hadzilacos McAllester Alistair Sinclair Nader Bshouty Peter Hajnal M. Danny Sleator Peter Buergisser Ramesh Mitzenmacher Warren Smith Hariharan Ran Canetti Michael Molloy Joel Spencer George Havas N. Cesa-Bianchi Yoram Moses Dan Spielman Xin He Bernard Ian Munro Aravind Chazelle Dan Hirschberg S. Muthukrishnan Srinivasan Ed Coffman Mike Hutton Ashwin Nayak Angelika Steger Edith Cohen Neil Immerman Peter Neumann Cliff Stein Richard Cole Russell Shmuel Onn Alexander Impagliazzo Steve Cook Rafail Ostrovsky Stolyar Piotr Indyk Gene Janos Pach Martin Strauss Cooperman Yuval Ishai C. Bernd Sturmfels Derek Corneil Andreas Jakoby Papadimitriou Benny Sudakov Felipe Cucker Mark Jerrum Boaz Patt- Madhu Sudan Sanjoy Erich Kaltofen Shamir Ondrej Sykora Dasgupta Ravi Kannan David Peleg Christian Luc Devroye Sampath Enoch Peserico Szegedy Shlomi Dolev Kannan Erez Petrank Amnon Ta-Shma Jeff Edmonds Haim Kaplan Maurizio Pizzonia E. -

Novel Frameworks for Mining Heterogeneous and Dynamic

Novel Frameworks for Mining Heterogeneous and Dynamic Networks A dissertation submitted to the Graduate School of the University of Cincinnati in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Electrical and Computer Engineering and Computer Science of the College of Engineering by Chunsheng Fang B.E., Electrical Engineering & Information Science, June 2006 University of Science & Technology of China, Hefei, P.R.China Advisor and Committee Chair: Prof. Anca L. Ralescu November 3, 2011 Abstract Graphs serve as an important tool for discrete data representation. Recently, graph representations have made possible very powerful machine learning algorithms, such as manifold learning, kernel methods, semi-supervised learning. With the advent of large-scale real world networks, such as biological networks (disease network, drug target network, etc.), social networks (DBLP Co- authorship network, Facebook friendship, etc.), machine learning and data mining algorithms have found new application areas and have contributed to advance our understanding of proper- ties, and phenomena governing real world networks. When dealing with real world data represented as networks, two problems arise quite naturally: I) How to integrate and align the knowledge encoded in multiple and heterogeneous networks? For instance, how to find out the similar genes in co-disease and protein-protein inter- action networks? II) How to model and predict the evolution of a dynamic network? A real world exam- ple is, given N years snapshots of an evolving social network, how to build a model that can cap- ture the temporal evolution and make reliable prediction? In this dissertation, we present an innovative graph embedding framework, which identifies the key components of modeling the evolution in time of a dynamic graph. -

The Computational Worldview and the Sciences: a Report on Two Workshops

The Computational Worldview and the Sciences: a Report on Two Workshops Sanjeev Arora∗ Avrim Blumy Leonard J. Schulmanz Alistair Sinclairx Vijay V. Vazirani{ October 12, 2007 ∗Computer Science Dept, 35 Olden St, Princeton NJ 08540, [email protected] yDepartment of Computer Science, Carnegie Mellon University, Pittsburgh PA 15213-3891. [email protected] zCaltech, MC256-80, Pasadena CA 91125, [email protected] xComputer Science Division, University of California, Berkeley, CA 94720-1776. [email protected] {College of Computing, Georgia Tech, Atlanta, GA 30332-0765, [email protected] 1 Summary In many natural sciences and fields of engineering the processes being studied are computational in nature: for example, protein production in living cells, neuronal processes in the brain, or activities of economic agents as they incorporating current prices and market behavior into their strategies. We believe that viewing natural or engineered systems through the lens of their computational requirements or capabilities, made rigorous through the theory of algorithms and computational complexity, has the potential to provide important new insights into these systems. This impact on scientific methodology is distinct from and complementary to the impact computers have had and will continue to have through optimization and scientific computing. Thus the \algorithmic way of thinking" could play the role of a key enabling science of the 21st century, similar to and entwined with the role played by mathematics. For instance, within the past quarter-century, viewing quantum mechanics from a computational perspective gave rise to quantum computing, and viewing genomic sequencing as an algorithmic process rather than a wet lab process led to the fast sequencing of the human genome. -

Lecture Notes in Computer Science 1853 Edited by G

Lecture Notes in Computer Science 1853 Edited by G. Goos, J. Hartmanis and J. van Leeuwen 3 Berlin Heidelberg New York Barcelona Hong Kong London Milan Paris Singapore Tokyo Ugo Montanari José D.P. Rolim Emo Welzl (Eds.) Automata, Languages and Programming 27th International Colloquium, ICALP 2000 Geneva, Switzerland, July 9-15, 2000 Proceedings 13 Series Editors Gerhard Goos, Karlsruhe University, Germany Juris Hartmanis, Cornell University, NY, USA Jan van Leeuwen, Utrecht University, The Netherlands Volume Editors Ugo Montanari University of Pisa, Department of Computer Sciences Corso Italia, 40, 56125 Pisa, Italy E-mail: [email protected] José D.P. Rolim University of Geneva, Center for Computer Sciences 24, Rue Général Dufour, 1211 Geneva 4, Switzerland E-mail: [email protected] Emo Welzl ETH Zurich, Department of Computer Sciences 8092 Zurich, Switzerland E-mail: [email protected] Cataloging-in-Publication Data applied for Die Deutsche Bibliothek - CIP-Einheitsaufnahme Automata, languages and programming : 27th international colloquium ; proceedings / ICALP 2000, Geneva, Switzerland, July 9 - 15, 2000. Ugo Montanari . (ed.). - Berlin ; Heidelberg ; New York ; Barcelona ; Hong Kong ; London ; Milan ; Paris ; Singapore ; Tokyo : Springer, 2000 (Lecture notes in computer science ; Vol. 1853) ISBN 3-540-67715-1 CR Subject Classification (1998): F, D, C.2-3, G.1-2 ISSN 0302-9743 ISBN 3-540-67715-1 Springer-Verlag Berlin Heidelberg New York This work is subject to copyright. All rights are reserved, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, re-use of illustrations, recitation, broadcasting, reproduction on microfilms or in any other way, and storage in data banks. -

Mathematics and Computation

Mathematics and Computation Avi Wigderson March 27, 2018 1 Avi Wigderson Mathematics and Computation Draft: March 27, 2018 Dedicated to the memory of my father, Pinchas Wigderson (1921{1988), who loved people, loved puzzles, and inspired me. Ashkhabad, Turkmenistan, 1943 2 Avi Wigderson Mathematics and Computation Draft: March 27, 2018 Acknowledgments In this book I tried to present some of the knowledge and understanding I acquired in my four decades in the field. The main source of this knowledge was the Theory of Computation commu- nity, which has been my academic and social home throughout this period. The members of this wonderful community, especially my teachers, students, postdocs and collaborators, but also the speakers in numerous talks I attended, have been the source of this knowledge and understanding often far more than the books and journals I read. Ours is a generous and interactive community, whose members are happy to share their own knowledge and understanding with others, and are trained by the culture of the field to do so well. These interactions made (and still makes) learning a greatly joyful experience for me! More directly, the content and presentation in this book benefited directly by many. These are friends who carefully read earlier drafts, responded with valuable constructive comments at all levels, which made the book much better. 
For this I am grateful to Scott Aaronson, Dorit Aharonov, Noga Alon, Sanjeev Arora, Boaz Barak, Zeb Brady, Mark Braverman, Bernard Chazelle, Tom Church, Geoffroy Couteau, Andy Drucker, Ron Fagin, Yuval Filmus, Michael Forbes, Ankit Garg, Sumegha Garg, Oded Goldreich, Renan Gross, Nadia Heninger, Gil Kalai, Vickie Kearn, Pravesh Kothari, James Lee, Alex Lubotzky, Assaf Naor, Ryan O'Donnell, Toni Pitassi, Tim Roughgarden, Sasha Razborov, Mike Saks, Peter Sarnak, Susannah Shoemaker, Amir Shpilka, Alistair Sinclair, Bill Steiger, Arpita Tripathi, Salil Vadhan, Les Valiant, Thomas Vidick, BenLee Volk, Edna Wigderson, Yuval Wigderson, Ronald de Wolf, Amir Yehudayoff, Rich Zemel and David Zuckerman.