Learning Deep Architectures for Protein Structure Prediction

Recommended publications

A Bivalent Chromatin Structure Marks Key Developmental Genes in Embryonic Stem Cells

Bradley E. Bernstein,1,2,3,* Tarjei S. Mikkelsen,3,4 Xiaohui Xie,3 Michael Kamal,3 Dana J. Huebert,1 James Cuff,3 Ben Fry,3 Alex Meissner,5 Marius Wernig,5 Kathrin Plath,5 Rudolf Jaenisch,5 Alexandre Wagschal,6 Robert Feil,6 Stuart L. Schreiber,3,7 and Eric S. Lander3,5 1 Molecular Pathology Unit and Center for Cancer Research, Massachusetts General Hospital, Charlestown, MA 02129, USA 2 Department of Pathology, Harvard Medical School, Boston, MA 02115, USA 3 Broad Institute of Harvard and MIT, Cambridge, MA 02139, USA 4 Division of Health Sciences and Technology, MIT, Cambridge, MA 02139, USA 5 Whitehead Institute for Biomedical Research, MIT, Cambridge, MA 02139, USA 6 Institute of Molecular Genetics, CNRS UMR-5535 and University of Montpellier-II, Montpellier, France 7 Howard Hughes Medical Institute at the Department of Chemistry and Chemical Biology, Harvard University, Cambridge, MA 02138, USA *Contact: [email protected] DOI 10.1016/j.cell.2006.02.041 SUMMARY The most highly conserved noncoding elements (HCNEs) in mammalian genomes cluster within regions enriched for genes encoding developmentally important transcription factors (TFs). This suggests that HCNE-rich regions may contain key regulatory controls involved in development. INTRODUCTION (excerpt) ... which in turn modulate chromatin structure (Jenuwein and Allis, 2001; Margueron et al., 2005). The core histones H2A, H2B, H3, and H4 are subject to dozens of different modifications, including acetylation, methylation, and phosphorylation. Histone H3 lysine 4 (Lys4) and lysine 27 (Lys27) methylation are of particular interest as these modifications are catalyzed, respectively, by trithorax- and Polycomb-group proteins, which mediate mitotic inheritance of lineage-specific gene expression programs ...

The Bioperl Toolkit: Perl Modules for the Life Sciences

Downloaded from genome.cshlp.org on January 25, 2012 - Published by Cold Spring Harbor Laboratory Press. Jason E. Stajich, David Block, Kris Boulez, et al. Genome Res. 2002 12: 1611-1618. Access the most recent version at doi:10.1101/gr.361602. Supplemental Material: http://genome.cshlp.org/content/suppl/2002/10/20/12.10.1611.DC1.html References: This article cites 14 articles, 9 of which can be accessed free at: http://genome.cshlp.org/content/12/10/1611.full.html#ref-list-1 Article cited in: http://genome.cshlp.org/content/12/10/1611.full.html#related-urls Resource. Jason E. Stajich,1,18,19 David Block,2,18 Kris Boulez,3 Steven E. Brenner,4 Stephen A. Chervitz,5 Chris Dagdigian,6 Georg Fuellen,7 James G.R. Gilbert,8 Ian Korf,9 Hilmar Lapp,10 Heikki Lehväslaiho,11 Chad Matsalla,12 Chris J. Mungall,13 Brian I. Osborne,14 Matthew R. Pocock,8 Peter Schattner,15 Martin Senger,11 Lincoln D. Stein,16 Elia Stupka,17 Mark D. Wilkinson,2 and Ewan Birney11 1University Program in Genetics, Duke University, Durham, North Carolina 27710, USA; 2National Research Council of

Research Computing Facility: An Update from Dr. Francesca Dominici

June 20, 2013

Dear all,

We are very excited to provide you some important updates regarding the research computing facility at the Faculty of Arts and Science (FASRC) http://rc.fas.harvard.edu. Please note that we are phasing out the HSPH cluster, and if you are currently leasing nodes on the HSPH cluster we will be working with you to migrate to FASRC. We have developed a FAQ document, which is available at https://rc.fas.harvard.edu/hsph-at-fas-rc-frequently-asked-questions/ and also included in this message.

Updates:

1. 158 HSPH accounts have been opened on FASRC, enabling users to run computing jobs on the FAS High Performance Computing Cluster (HPCC), also known as Odyssey.
2. Several HSPH faculty have worked with the FASRC team to purchase data storage equipment and hardware that have been deployed at FASRC in Cambridge and linked to Odyssey via a secured network.
3. FASRC has developed personalized solutions for our faculty to transfer secure data from HSPH to FAS in accordance with data user agreements.
4. FASRC has been mentioned as a key strength in training and research grant applications from HSPH, and high impact papers have been published that previously were delayed for lack of computing power.
5. Please bookmark the web site http://rc.fas.harvard.edu/hsph-overview/ for additional and up to date information.

To access FASRC you will be charged approximately $3000 per year per account. Access for PhD and ScD students is free.

Convergent Regulatory Evolution and Loss of Flight in Paleognathous Birds

Citation: Sackton, Timothy B., Phil Grayson, Alison Cloutier, Zhirui Hu, Jun S. Liu, Nicole E. Wheeler, Paul P. Gardner, et al. 2019. Convergent Regulatory Evolution and Loss of Flight in Paleognathous Birds. Science 364 (6435): 74–78. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:39865637 Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Open Access Policy Articles, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#OAP Authors: Timothy B. Sackton* (1,2), Phil Grayson (2,3), Alison Cloutier (2,3), Zhirui Hu (4), Jun S. Liu (4), Nicole E. Wheeler (5,6), Paul P. Gardner (5,7), Julia A. Clarke (8), Allan J. Baker (9,10), Michele Clamp (1), Scott V. Edwards* (2,3) Affiliations: 1) Informatics Group, Harvard University, Cambridge, USA 2) Department of Organismic and Evolutionary Biology, Harvard University, Cambridge, USA 3) Museum of Comparative Zoology, Harvard University, Cambridge, USA 4) Department of Statistics, Harvard University, Cambridge, USA 5) School of Biological Sciences, University of Canterbury, New Zealand 6) Wellcome Sanger Institute, Wellcome Genome Campus, Cambridge, UK 7) Department of Biochemistry, University of Otago, New Zealand 8) Jackson School of Geosciences, The University of Texas at Austin, Austin, USA 9) Department of Natural History, Royal Ontario Museum, Toronto, Canada 10) Department of Ecology and Evolutionary Biology, University of Toronto, Toronto, Canada *correspondence to: TBS ([email protected]) or SVE ([email protected]) Whether convergent phenotypic evolution is driven by convergent molecular changes, in proteins or regulatory regions, is a core question in evolutionary biology.

Motif Selection Using Simulated Annealing Algorithm with Application to Identify Regulatory Elements

A thesis presented to the faculty of the Russ College of Engineering and Technology of Ohio University in partial fulfillment of the requirements for the degree Master of Science. Liang Chen, August 2018. © 2018 Liang Chen. All Rights Reserved. This thesis, titled Motif Selection Using Simulated Annealing Algorithm with Application to Identify Regulatory Elements, by LIANG CHEN has been approved for the Department of Electrical Engineering and Computer Science and the Russ College of Engineering and Technology by Lonnie Welch, Professor of Electrical Engineering and Computer Science, and Dennis Irwin, Dean, Russ College of Engineering and Technology. Abstract: CHEN, LIANG, M.S., August 2018, Computer Science Master Program. Motif Selection Using Simulated Annealing Algorithm with Application to Identify Regulatory Elements (106 pp.) Director of Thesis: Lonnie Welch. Modern research on gene regulation and disorder-related pathways uses tools such as microarrays and RNA-Seq to analyze changes in the expression levels of large sets of genes. In silico motif discovery was performed based on the gene expression profile data, which generated a large set of candidate motifs (usually hundreds or thousands of motifs). Picking a set of biologically meaningful motifs from the candidate motif set is a challenging biological and computational problem. As a computational problem it can be modeled as the motif selection problem (MSP). Building solutions for the motif selection problem will give biologists direct help in finding transcription factors (TFs) that are strongly related to specific pathways and in gaining insight into the relationships between genes.

Computational Analysis of Protein Function Within Complete Genomes

Anton James Enright, Wolfson College. A dissertation submitted to the University of Cambridge for the degree of Doctor of Philosophy. European Molecular Biology Laboratory, European Bioinformatics Institute, Wellcome Trust Genome Campus, Hinxton, Cambridge, CB10 1SD, United Kingdom. Email: [email protected] March 7, 2002. To My Parents and Kerstin. This thesis is the result of my own work and includes nothing which is the outcome of work done in collaboration except where specifically indicated in the text. This thesis does not exceed the specified length limit of 300 pages as defined by the Biology Degree Committee. This thesis has been typeset in 12pt font using LaTeX2ε according to the specifications defined by the Board of Graduate Studies and the Biology Degree Committee. Summary: Since the advent of complete genome sequencing, vast amounts of nucleotide and amino acid sequence data have been produced. These data need to be effectively analysed and verified so that they may be used for biological discovery. A significant proportion of predicted protein sequences from these complete genomes have poorly characterised or unknown functional annotations. This thesis describes a number of approaches which detail the computational analysis of amino acid sequences for the prediction and analysis of protein function within complete genomes. The first chapter is a short introduction to computational genome analysis while the second and third chapters describe how groups of related protein sequences (termed protein families) may be characterised using sequence clustering algorithms.

The Myth of Junk DNA

Jonathan Wells. Seattle: Discovery Institute Press, 2011. Description: According to a number of leading proponents of Darwin’s theory, “junk DNA” (the non-protein coding portion of DNA) provides decisive evidence for Darwinian evolution and against intelligent design, since an intelligent designer would presumably not have filled our genome with so much garbage. But in this provocative book, biologist Jonathan Wells exposes the claim that most of the genome is little more than junk as an anti-scientific myth that ignores the evidence, impedes research, and is based more on theological speculation than good science. Copyright Notice: Copyright © 2011 by Jonathan Wells. All Rights Reserved. Publisher’s Note: This book is part of a series published by the Center for Science & Culture at Discovery Institute in Seattle. Previous books include The Deniable Darwin by David Berlinski, In the Beginning and Other Essays on Intelligent Design by Granville Sewell, God and Evolution: Protestants, Catholics, and Jews Explore Darwin’s Challenge to Faith, edited by Jay Richards, and Darwin’s Conservatives: The Misguided Quest by John G. West. Library Cataloging Data: The Myth of Junk DNA by Jonathan Wells (1942– ). Illustrations by Ray Braun. 174 pages, 6 x 9 x 0.4 inches & 0.6 lb, 229 x 152 x 10 mm. & 0.26 kg. Library of Congress Control Number: 2011925471. BISAC: SCI029000 SCIENCE / Life Sciences / Genetics & Genomics. BISAC: SCI027000 SCIENCE / Life Sciences / Evolution. ISBN-13: 978-1-9365990-0-4 (paperback). Publisher Information: Discovery Institute Press, 208 Columbia Street, Seattle, WA 98104. Internet: http://www.discoveryinstitutepress.com/ Published in the United States of America on acid-free paper.

Computational Molecular Coevolution

Western University, Scholarship@Western, Electronic Thesis and Dissertation Repository, 12-13-2013. Russell J. Dickson, The University of Western Ontario. Supervisor: Dr. Gregory B. Gloor, The University of Western Ontario. Graduate Program in Biochemistry. A thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy. © Russell J. Dickson 2013. Recommended Citation: Dickson, Russell J., "Computational Molecular Coevolution" (2013). Electronic Thesis and Dissertation Repository. 1798. https://ir.lib.uwo.ca/etd/1798 (Thesis format: Integrated Article.) The School of Graduate and Postdoctoral Studies, The University of Western Ontario, London, Ontario, Canada. Abstract: A major goal in computational biochemistry is to obtain three-dimensional structure information from protein sequence. Coevolution represents a biological mechanism through which structural information can be obtained from a family of protein sequences. Evolutionary relationships within a family of protein sequences are revealed through sequence alignment. Statistical analyses of these sequence alignments reveal positions in the protein family that covary, and thus appear to be dependent on one another throughout the evolution of the protein family.
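To make the idea of covarying alignment positions concrete, here is a minimal sketch that scores a pair of alignment columns with mutual information. Mutual information is one widely used covariation statistic and is chosen here only for illustration; the excerpt above does not say which statistic the thesis uses. The function names and the toy alignment are hypothetical.

```python
import math
from collections import Counter


def mutual_information(alignment, i, j):
    """Mutual information (in bits) between columns i and j.

    `alignment` is a list of equal-length aligned sequences (hypothetical
    input format for this sketch). A value near 0 means the two columns
    vary independently; larger values mean the residues covary.
    """
    n = len(alignment)
    col_i = [seq[i] for seq in alignment]
    col_j = [seq[j] for seq in alignment]
    count_i = Counter(col_i)
    count_j = Counter(col_j)
    count_ij = Counter(zip(col_i, col_j))

    mi = 0.0
    for (a, b), count in count_ij.items():
        p_ab = count / n
        p_a = count_i[a] / n
        p_b = count_j[b] / n
        mi += p_ab * math.log2(p_ab / (p_a * p_b))
    return mi


# Toy alignment (made up): columns 1 and 2 change together (K with L,
# R with I), column 0 is fully conserved, and column 3 varies
# independently of column 1.
toy_alignment = ["AKLE", "AKLD", "ARIE", "ARID"]

covarying = mutual_information(toy_alignment, 1, 2)
conserved = mutual_information(toy_alignment, 0, 1)
independent = mutual_information(toy_alignment, 1, 3)
```

In this toy case the covarying pair scores higher than either the conserved or the independent pair. Real analyses typically also correct for phylogenetic relatedness and sampling bias, which this sketch does not attempt.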

An Efficient Algorithm for Large-Scale Detection of Protein Families

© 2002 Oxford University Press. Nucleic Acids Research, 2002, Vol. 30, No. 7, 1575–1584. An efficient algorithm for large-scale detection of protein families. A. J. Enright*, S. Van Dongen1 and C. A. Ouzounis. Computational Genomics Group, The European Bioinformatics Institute, EMBL Cambridge Outstation, Cambridge CB10 1SD, UK and 1Centrum voor Wiskunde en Informatica, Kruislaan 413, NL-1098 SJ Amsterdam, The Netherlands. Received December 14, 2001; Revised and Accepted February 6, 2002. ABSTRACT Detection of protein families in large databases is one of the principal research objectives in structural and functional genomics. Protein family classification can significantly contribute to the delineation of functional diversity of homologous proteins, the prediction of function based on domain architecture or the presence of sequence motifs as well as comparative genomics, providing valuable evolutionary insights. We present a novel approach called TRIBE-MCL for rapid and accurate clustering of protein sequences into families. The method relies on the Markov cluster (MCL) algorithm for the assign- INTRODUCTION (excerpt) ... algorithms should take into account all similarity relationships in a given arbitrary set of sequences, a process that is defined as ‘sequence clustering’ (7). This approach is usually based on grouping homologous proteins together via a similarity measure obtained from direct sequence comparison. Ideally, the resulting clusters should correspond to protein families, whose members are related by a common evolutionary history (8). Well characterised proteins within a family can hence allow one to reliably assign functions to family members whose functions are not known or not well understood (7). The detection of protein families is also instrumental in the field of comparative genomics (9). Families may be specific to certain taxonomic groups or widespread across all domains of life (10), facts that can provide evolutionary insights into the underlying biology of organisms (11).

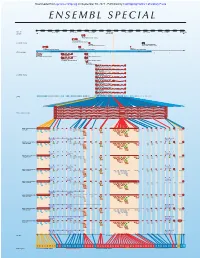

ENSEMBL Special: An Overview of Ensembl

Downloaded from genome.cshlp.org on September 30, 2021 - Published by Cold Spring Harbor Laboratory Press. ENSEMBL SPECIAL. By Elof Axel Carlson, Professor Emeritus New York University at Stony Brook. This latest book by Elof Carlson is a first history of classical genetics, the era in which the chromosome theory of heredity was proposed and developed. Highly illustrated and based heavily on early 20th century original sources, the book traces the roots of genetics in breeding analysis and studies of cytology, evolution, and reproductive biology that began in Europe but were synthesized in the United States through new Ph.D. programs and expanded academic funding. Carlson argues that, influenced largely by new technologies and instrumentation, the life sciences progressed through incremental change rather than paradigm shifts, and he describes how molecular biology emerged from the key ideas and model systems of classical genetics. Readable and original, this narrative will interest historians and science educators as well as today’s practitioners of genetics. 2004, 332 pp., illus., index. Hardcover $45. ISBN 0-87969-675-3. CONTENTS: Introduction: What Is Classical Genetics? Part I: The Tributaries of Genetics. Part II: An American Amalgam: The Chromosome Theory of Heredity. Part III: Mendelism Takes Over: 1900-1910. Part IV: The Organism of Choice: Drosophila. Part V: Classical Genetics Permeates Biology. Part VI: Classical Genetics Examines Homo sapiens. To order or request additional information: Call: 1–800–843–4388 (Continental US and Canada), 516–422–4100 (All other locations). FAX: 516–422–4097. E-mail: [email protected] or WWW Site http://www.cshlpress.com/ Write: Cold Spring Harbor Laboratory Press, 500 Sunnyside Blvd, Woodbury, NY 11797–2924. ENSEMBL Special: An Overview of Ensembl. Ewan Birney,1,3 T.

Analyses of Deep Mammalian Sequence Alignments and Constraint Predictions for 1% of the Human Genome

Downloaded from genome.cshlp.org on October 3, 2021 - Published by Cold Spring Harbor Laboratory Press. Article. Elliott H. Margulies,2,7,8,21 Gregory M. Cooper,2,3,9 George Asimenos,2,10 Daryl J. Thomas,2,11,12 Colin N. Dewey,2,4,13 Adam Siepel,5,12 Ewan Birney,14 Damian Keefe,14 Ariel S. Schwartz,13 Minmei Hou,15 James Taylor,15 Sergey Nikolaev,16 Juan I. Montoya-Burgos,17 Ari Löytynoja,14 Simon Whelan,6,14 Fabio Pardi,14 Tim Massingham,14 James B. Brown,18 Peter Bickel,19 Ian Holmes,20 James C. Mullikin,8,21 Abel Ureta-Vidal,14 Benedict Paten,14 Eric A. Stone,9 Kate R. Rosenbloom,12 W. James Kent,11,12 NISC Comparative Sequencing Program,1,8,21 Baylor College of Medicine Human Genome Sequencing Center,1 Washington University Genome Sequencing Center,1 Broad Institute,1 UCSC Genome Browser Team,1 British Columbia Cancer Agency Genome Sciences Center,1 Stylianos E. Antonarakis,16 Serafim Batzoglou,10 Nick Goldman,14 Ross Hardison,22 David Haussler,11,12,24 Webb Miller,22 Lior Pachter,24 Eric D. Green,8,21 and Arend Sidow9,25 A key component of the ongoing ENCODE project involves rigorous comparative sequence analyses for the initially targeted 1% of the human genome. Here, we present orthologous sequence generation, alignment, and evolutionary constraint analyses of 23 mammalian species for all ENCODE targets. Alignments were generated using four different methods; comparisons of these methods reveal large-scale consistency but substantial differences in terms of small genomic rearrangements, sensitivity (sequence coverage), and specificity (alignment accuracy).

CV Burkhard Rost TUM Technical University of Munich Affiliation: Columbia University

CV BURKHARD ROST. TUM Informatics/Bioinformatics i12, Boltzmannstrasse 3 (Rm 01.09.052), 85748 Garching/München, Germany & Dept. Biochemistry & Molecular Biophysics, Columbia University, New York, USA. Email: [email protected] Web: www.rostlab.org Tel: +49-89-289-17-811 Fax: +49-89-289-19-414 Photo: © Eckert & Heddergott, TUM.

Highlights:
• 208 invited talks in 37 countries (incl. TedX)
• 272 publications (212 peer-review, 183 first/last author)
• 2019/03: >38,500 citations, h-index=91 (Google)
• PredictProtein 1st Internet server in mol. biol. (since 1992)
• 8 years ISCB President (International Society for Computational Biology)
• 207 trained (27% female, 55% foreigners from 37 nations on 6 continents)
• co-published with over 984 scientists

Brief narrative: Burkhard Rost obtained his doctoral degree (Dr. rer. nat.) from the Univ. of Heidelberg (Germany) in the field of theoretical physics. He began his research working on the thermodynamical properties of spin glasses and brain-like artificial neural networks. A short project on peace/arms control research sketched simple, non-intrusive sensor networks to monitor aircraft (1988-1990). He entered the field of molecular biology at the European Molecular Biology Laboratory (EMBL, Heidelberg, Germany, 1990-1995), spent a year at the European Bioinformatics Institute (EBI, Hinxton, Cambridgeshire, England, 1995), returned to the EMBL (1996-1998), joined the company LION Biosciences for a brief interim (1998), became faculty in the Medical School of Columbia University in 1998, and joined the TUM Munich to become an Alexander von Humboldt professor in 2009.