The Problems and Challenges of Managing Crowd Sourced Audio-Visual Evidence

Load more

Recommended publications

-

The Cat's Whisker

cerdd gymunedol cymru community music THE CAT’S WHISKER wales Community Music Wales Summer Newsletter July 2009 Welcome ! Complete Control Music Reaches New Heights to the first CMW The Cat’s Whisker In May 2009 CMW was named as a recording opportunities, possible joint sponsor of the Reach the Heights releases, gigs, support and advice. We will be bringing you the latest project. This major project has This project works with a wide through the Community Music been awarded £27 million from the range of young people from many Wales new quarterly newsletter. European Social Fund. It aims to raise different musical genres, from singer This will be a way to stay in touch the skills and aspirations of young songwriters to bands to DJs. CCM and a place to find out about people across West Wales and the pairs young people with mentors who upcoming events and the latest Valleys to help them build a brighter have worked professionally within the news from the world of CMW. future by staying in education or music industry to provide specialised finding a job or training. It will target face to face mentoring. It targets Over the past year CMW has support locally to improve confidence, disaffected young people who may undergone a number of changes, decision-making and basic skills. not reach their full potential through amongst them, my appointment as traditional learning, and through the new Director last September. The Deputy First Minister Ieuan Wyn recognising and encouraging their I am primarily responsible for Jones said “Reach the Heights is a musical talents seeks to improve the effective creative and artistic major investment in the future of our their sense of worth, self confidence, management of the charity and will young people. -

Ian Watkins and B and P

Case No: 62CA1726112 The Law Courts, Cathays Park, Cardiff CF10 3PG Date: 18 December 2013 Before: THE HONOURABLE MR JUSTICE ROYCE - - - - - - - - - - - - - - - - - - - - - Between: THE QUEEN - v - IAN WATKINS AND B AND P SENTENCING REMARKS ............................. R V Watkins and P and B. Sentencing remarks. Mr Justice Royce : 1. Those who have appeared in these Courts at the Bar or on the Bench over many years see and hear a large number of horrific cases. This case however breaks new ground. 2. Any decent person looking at and listening to the material here will experience shock; revulsion; anger and incredulity. What you three did plumbed new depths of depravity. 3. You Watkins achieved fame and success as the lead singer of the Lostprophets. You had many fawning fans. That gave you power. You knew you could use that power to induce young female fans to help satisfy your apparently insatiable lust and to take part in the sexual abuse of their young children. Away from the highlights of your public performances lay a dark and sinister side. 4. What is the background ? Count 18 dates back to March 2007. You met TT after a Lostprophets concert when she was a 16 year old virgin. The prospect of taking her virginity excited you. You got her to dress in a schoolgirl’s outfit and you videoed her and you having oral, vaginal and anal sex. You asked her whether she enjoyed being your underage slut. At the end you urinated over her face and told her to drink it. That gives some insight into your attitude to young females at that time over 5 years before the counts relating to B and P. -

Song Pack Listing

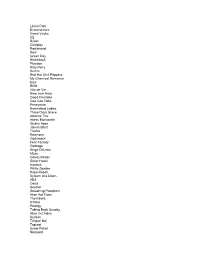

TRACK LISTING BY TITLE Packs 1-86 Kwizoke Karaoke listings available - tel: 01204 387410 - Title Artist Number "F" You` Lily Allen 66260 'S Wonderful Diana Krall 65083 0 Interest` Jason Mraz 13920 1 2 Step Ciara Ft Missy Elliot. 63899 1000 Miles From Nowhere` Dwight Yoakam 65663 1234 Plain White T's 66239 15 Step Radiohead 65473 18 Til I Die` Bryan Adams 64013 19 Something` Mark Willis 14327 1973` James Blunt 65436 1985` Bowling For Soup 14226 20 Flight Rock Various Artists 66108 21 Guns Green Day 66148 2468 Motorway Tom Robinson 65710 25 Minutes` Michael Learns To Rock 66643 4 In The Morning` Gwen Stefani 65429 455 Rocket Kathy Mattea 66292 4Ever` The Veronicas 64132 5 Colours In Her Hair` Mcfly 13868 505 Arctic Monkeys 65336 7 Things` Miley Cirus [Hannah Montana] 65965 96 Quite Bitter Beings` Cky [Camp Kill Yourself] 13724 A Beautiful Lie` 30 Seconds To Mars 65535 A Bell Will Ring Oasis 64043 A Better Place To Be` Harry Chapin 12417 A Big Hunk O' Love Elvis Presley 2551 A Boy From Nowhere` Tom Jones 12737 A Boy Named Sue Johnny Cash 4633 A Certain Smile Johnny Mathis 6401 A Daisy A Day Judd Strunk 65794 A Day In The Life Beatles 1882 A Design For Life` Manic Street Preachers 4493 A Different Beat` Boyzone 4867 A Different Corner George Michael 2326 A Drop In The Ocean Ron Pope 65655 A Fairytale Of New York` Pogues & Kirsty Mccoll 5860 A Favor House Coheed And Cambria 64258 A Foggy Day In London Town Michael Buble 63921 A Fool Such As I Elvis Presley 1053 A Gentleman's Excuse Me Fish 2838 A Girl Like You Edwyn Collins 2349 A Girl Like -

Karaoke Mietsystem Songlist

Karaoke Mietsystem Songlist Ein Karaokesystem der Firma Showtronic Solutions AG in Zusammenarbeit mit Karafun. Karaoke-Katalog Update vom: 13/10/2020 Singen Sie online auf www.karafun.de Gesamter Katalog TOP 50 Shallow - A Star is Born Take Me Home, Country Roads - John Denver Skandal im Sperrbezirk - Spider Murphy Gang Griechischer Wein - Udo Jürgens Verdammt, Ich Lieb' Dich - Matthias Reim Dancing Queen - ABBA Dance Monkey - Tones and I Breaking Free - High School Musical In The Ghetto - Elvis Presley Angels - Robbie Williams Hulapalu - Andreas Gabalier Someone Like You - Adele 99 Luftballons - Nena Tage wie diese - Die Toten Hosen Ring of Fire - Johnny Cash Lemon Tree - Fool's Garden Ohne Dich (schlaf' ich heut' nacht nicht ein) - You Are the Reason - Calum Scott Perfect - Ed Sheeran Münchener Freiheit Stand by Me - Ben E. King Im Wagen Vor Mir - Henry Valentino And Uschi Let It Go - Idina Menzel Can You Feel The Love Tonight - The Lion King Atemlos durch die Nacht - Helene Fischer Roller - Apache 207 Someone You Loved - Lewis Capaldi I Want It That Way - Backstreet Boys Über Sieben Brücken Musst Du Gehn - Peter Maffay Summer Of '69 - Bryan Adams Cordula grün - Die Draufgänger Tequila - The Champs ...Baby One More Time - Britney Spears All of Me - John Legend Barbie Girl - Aqua Chasing Cars - Snow Patrol My Way - Frank Sinatra Hallelujah - Alexandra Burke Aber Bitte Mit Sahne - Udo Jürgens Bohemian Rhapsody - Queen Wannabe - Spice Girls Schrei nach Liebe - Die Ärzte Can't Help Falling In Love - Elvis Presley Country Roads - Hermes House Band Westerland - Die Ärzte Warum hast du nicht nein gesagt - Roland Kaiser Ich war noch niemals in New York - Ich War Noch Marmor, Stein Und Eisen Bricht - Drafi Deutscher Zombie - The Cranberries Niemals In New York Ich wollte nie erwachsen sein (Nessajas Lied) - Don't Stop Believing - Journey EXPLICIT Kann Texte enthalten, die nicht für Kinder und Jugendliche geeignet sind. -

Music Globally Protected Marks List (GPML) Music Brands & Music Artists

Music Globally Protected Marks List (GPML) Music Brands & Music Artists © 2012 - DotMusic Limited (.MUSIC™). All Rights Reserved. DotMusic reserves the right to modify this Document .This Document cannot be distributed, modified or reproduced in whole or in part without the prior expressed permission of DotMusic. 1 Disclaimer: This GPML Document is subject to change. Only artists exceeding 1 million units in sales of global digital and physical units are eligible for inclusion in the GPML. Brands are eligible if they are globally-recognized and have been mentioned in established music trade publications. Please provide DotMusic with evidence that such criteria is met at [email protected] if you would like your artist name of brand name to be included in the DotMusic GPML. GLOBALLY PROTECTED MARKS LIST (GPML) - MUSIC ARTISTS DOTMUSIC (.MUSIC) ? and the Mysterians 10 Years 10,000 Maniacs © 2012 - DotMusic Limited (.MUSIC™). All Rights Reserved. DotMusic reserves the right to modify this Document .This Document 10cc can not be distributed, modified or reproduced in whole or in part 12 Stones without the prior expressed permission of DotMusic. Visit 13th Floor Elevators www.music.us 1910 Fruitgum Co. 2 Unlimited Disclaimer: This GPML Document is subject to change. Only artists exceeding 1 million units in sales of global digital and physical units are eligible for inclusion in the GPML. 3 Doors Down Brands are eligible if they are globally-recognized and have been mentioned in 30 Seconds to Mars established music trade publications. Please -

Led Zeppelin R.E.M. Queen Feist the Cure Coldplay the Beatles The

Jay-Z and Linkin Park System of a Down Guano Apes Godsmack 30 Seconds to Mars My Chemical Romance From First to Last Disturbed Chevelle Ra Fall Out Boy Three Days Grace Sick Puppies Clawfinger 10 Years Seether Breaking Benjamin Hoobastank Lostprophets Funeral for a Friend Staind Trapt Clutch Papa Roach Sevendust Eddie Vedder Limp Bizkit Primus Gavin Rossdale Chris Cornell Soundgarden Blind Melon Linkin Park P.O.D. Thousand Foot Krutch The Afters Casting Crowns The Offspring Serj Tankian Steven Curtis Chapman Michael W. Smith Rage Against the Machine Evanescence Deftones Hawk Nelson Rebecca St. James Faith No More Skunk Anansie In Flames As I Lay Dying Bullet for My Valentine Incubus The Mars Volta Theory of a Deadman Hypocrisy Mr. Bungle The Dillinger Escape Plan Meshuggah Dark Tranquillity Opeth Red Hot Chili Peppers Ohio Players Beastie Boys Cypress Hill Dr. Dre The Haunted Bad Brains Dead Kennedys The Exploited Eminem Pearl Jam Minor Threat Snoop Dogg Makaveli Ja Rule Tool Porcupine Tree Riverside Satyricon Ulver Burzum Darkthrone Monty Python Foo Fighters Tenacious D Flight of the Conchords Amon Amarth Audioslave Raffi Dimmu Borgir Immortal Nickelback Puddle of Mudd Bloodhound Gang Emperor Gamma Ray Demons & Wizards Apocalyptica Velvet Revolver Manowar Slayer Megadeth Avantasia Metallica Paradise Lost Dream Theater Temple of the Dog Nightwish Cradle of Filth Edguy Ayreon Trans-Siberian Orchestra After Forever Edenbridge The Cramps Napalm Death Epica Kamelot Firewind At Vance Misfits Within Temptation The Gathering Danzig Sepultura Kreator -

Cultural Profile Resource: Wales

Cultural Profile Resource: Wales A resource for aged care professionals Birgit Heaney Dip. 13/11/2016 A resource for aged care professionals Table of Contents Introduction ....................................................................................................................................................................... 3 Location and Demographic ............................................................................................................................................... 4 Everyday Life ................................................................................................................................................................... 5 Etiquette ............................................................................................................................................................................ 5 Cultural Stereotype ........................................................................................................................................................... 6 Family ............................................................................................................................................................................... 8 Marriage, Family and Kinship .......................................................................................................................................... 8 Personal Hygiene ........................................................................................................................................................... -

Issue 184.Pmd

email: [email protected] website: nightshift.oxfordmusic.net Free every month. NIGHTSHIFT Issue 184 November Oxford’s Music Magazine 2010 Oxford’s Greatest Metal Bands Ever Revealed! photos: rphimagies The METAL!issue featuring Desert Storm Sextodecimo JOR Sevenchurch Dedlok Taste My Eyes and many more NIGHTSHIFT: PO Box 312, Kidlington, OX5 1ZU. Phone: 01865 372255 NEWNEWSS Nightshift: PO Box 312, Kidlington, OX5 1ZU Phone: 01865 372255 email: [email protected] Online: nightshift.oxfordmusic.net COMMON ROOM is a two-day mini-festival taking place at the Jericho Tavern over the weekend of 4th-5th December. Organised by Back & To The Left, the event features sets from a host of local bands, including Dead Jerichos, The Epstein, Borderville, Alphabet Backwards, Huck & the Handsome MOTOWN LEGENDS MARTHA REEVES & THE VANDELLAS play Fee, The Yarns, Band of Hope, at the O2 Academy on Sunday 12th December. Reeves initially retired Spring Offensive, Our Lost Infantry, from performing in 1972 due to illness and had until recently been a Damn Vandals, Minor Coles, The member of Detroit’s city council. In nine years between 1963-72 the trio Scholars, Samuel Zasada, The had 26 chart hits, including the classic `Dancing In The Streets’, `Jimmy Deputees, Message To Bears, Above Mack’ and `Heatwave’. Tickets for the show are on sale now, priced £20, Us The Waves, The Gullivers, The from the Academy box office an online at www.o2academyoxford.co.uk. DIVE DIVE have signed a deal with Moulettes, Toliesel, Sonny Liston, Xtra Mile Records, home to Frank Cat Matador and Treecreeper. Early Turner, with whom they are bird tickets, priced £8 for both days, on Friday 19th November, with be released and the campaign bandmates, and fellow Oxford stars A are on sale now from support from Empty Vessels plus culminates with the release of ‘Fine Silent Film. -

Media® JUNE 1, 2002 / VOLUME 20 / ISSUE 23 / £3.95 / EUROS 6.5

Music Media® JUNE 1, 2002 / VOLUME 20 / ISSUE 23 / £3.95 / EUROS 6.5 THE MUCH ANTICIPATED NEW ALBUM 10-06-02 INCLUDES THE SINGLE 'HERE TO STAY' Avukr,m',L,[1] www.korntv.com wwwamymusweumpecun IMMORTam. AmericanRadioHistory.Com System Of A Down AerialscontainsThe forthcoming previously single unreleased released and July exclusive 1 Iostprophetscontent.Soldwww.systemofadown.com out AtEuropean radio now. tour. theFollowing lostprophets massive are thefakesoundofprogressnow success on tour in thethroughout UK and Europe.the US, featuredCatch on huge the new O'Neill single sportwear on"thefakesoundofprogress", MTVwww.lostprophets.com competitionEurope during running May. CreedOne Last Breath TheCommercialwww.creednet.com new single release from fromAmerica's June 17.biggest band. AmericanRadioHistory.Com TearMTVDrowning Europe Away Network Pool Priority! Incubuswww.drowningpool.comCommercialOn tour in Europe release now from with June Ozzfest 10. and own headlining shows. CommercialEuropean release festivals fromAre in JuneAugust.You 17.In? www.sonymusiceurope.cornSONY MUSIC EUROPE www.enjoyincubus.com Music Mediae JUNE 1, 2002 / VOLUME 20 / ISSUE 23 / £3.95 / EUROS 6.5 AmericanRadioHistory.Com T p nTA T_C ROCK Fpn"' THE SINGLE THE ALBUM A label owned by Poko Rekords Oy 71e um!010111, e -e- Number 1in Finland for 4 weeks Number 1in Finland Certified gold Certified gold, soon to become platinum Poko Rekords Oy, P.O. Box 483, FIN -33101 Tampere, Finland I Tel: +358 3 2136 800 I Fax: +358 3 2133 732 I Email: [email protected] I Website: www.poko.fi I www.pariskills.com Two rriot girls and a boy present: Album A label owned by Poko Rekords Oy \member of 7lit' EMI Cji0//p "..rriot" AmericanRadioHistory.Com UPFRONT Rock'snolonger by Emmanuel Legrand, Music & Media editor -in -chief Welcome to Music & Media's firstspecial inahard place issue of the year-an issue that should rock The fact that Ozzy your socks off. -

Linkin Park Evanescence Vama Veche U2 Byron Coldplay

Linkin Park Evanescence Vama Veche U2 Byron Coldplay Radiohead Korn Green Day Nickelback Placebo Katy Perry Kumm Red Hot Chili Peppers My Chemical Romance Eels REM Vita de Vie Nine Inch Nails Good Charlotte Goo Goo Dolls Pennywise Barenaked Ladies Three Days Grace Alkaline Trio Alanis Morissette Guano Apes James Blunt Travka Reamonn Godsmack Fear Factory Garbage Kings Of Leon Muse Gandul Matei Sister Hazel Incubus White Zombie Papa Roach System of a Down AB4 Oasis Seether Smashing Pumpkins Alien Ant Farm The Killers Ill Nino Prodigy Taking Back Sunday Alice in Chains Kutless Timpuri Noi Taproot Snow Patrol Nonpoint Dashboard Confessional The Cure The Cranberries Stereophonics Blink 182 Phish Blur Rage Against the Machine Gorillaz Pulp Bowling For Soup Citizen Cope Manic Street Preachers Luna Amara Nirvana The Verve Breaking Benjamin Chevelle Modest Mouse Jeff Buckley Beck Arctic Monkeys Bloodhound Gang Sevendust Coma Lostprophets Keane Blue October 30 Seconds to Mars Suede Collective Soul Kill Hannah Foo Fighters The Cult Feeder Veruca Salt Skunk Anansie 3 Doors Down Deftones Sugar Ray Counting Crows Mushroomhead Electric Six Filter Lynyrd Skynyrd Biffy Clyro Death Cab For Cutie Nick Cave and the Bad Seeds The White Stripes James Morrison Texas Crazy Town Mudvayne Placebo Crash Test Dummies Poets Of The Fall Jason Mraz Linkin Park Sum 41 Grimus Guster A Perfect Circle Tool The Strokes Flyleaf Chris Cornell Smile Empty Soul Audioslave Nirvana Gogol Bordello Bloc Party Thousand Foot Krutch Brand New Razorlight Puddle Of Mudd Sonic Youth -

'We Are Examining Pupils Too Much'

JOBS PROPERTY DIRECTORY FAMILY NOTICES TRAVEL DATING BOOK AN AD BUYSELL BUY A PHOTO COOKIE POLICY SUBSCRIPTIONS View our FREE mobile Subscribe and save up and tablet apps to 30% Most read Live feeds What's on News Rugby Football Business Fun Stuff In Your Area TRENDING LETTERS .WALES CARDIFF 10K NATIONAL TRUST WALES HEALTH CHECK WALES SCHOOL RATINGS Sport Homes Showbiz Food & Drink News Local News ‘We are examining pupils too much’ Feb 11, 2008 09:11 By WalesOnline THE head of Britain’s most famous public school has called for a dramatic overhaul of the exams system. Share Share Tweet +1 Email THE head of Britain’s most famous public school has called for a dramatic overhaul of the exams system. Tony Little, headmaster of Eton College, said the number of GCSEs teenagers take should be slashed by half to no more than five or six. He condemned the prevailing culture of assessment that dominates education and argued for more room to be given to broader courses that are not externally examined. open in browser PRO version Are you a developer? Try out the HTML to PDF API pdfcrowd.com Mr Little said, “There is a very good case to look again and ask the question, ‘are we .Wales examining too much?’ I think we are. The .cymru or .wales domain “We have got into a habit of assessing everything that young people do. It is seen names - showcase a business often by young people themselves as a virtue that they have 11 or 12 GCSEs. in Wales on a global scale “Not so long ago it would be quite normal for young people to take five or six O- levels and a range of other courses that were not examined.” He suggested limiting the number of GCSEs that pupils can take. -

(12) Patent Application Publication (10) Pub. No.: US 2009/0248.614 A1 Bhagwan Et Al

US 20090248.614A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2009/0248.614 A1 Bhagwan et al. (43) Pub. Date: Oct. 1, 2009 (54) SYSTEMAND METHOD FOR Related U.S. Application Data CONSTRUCTING TARGETED RANKING FROMMULTIPLE INFORMATION SOURCES (60) Provisional application No. 61/041,128, filed on Mar. 31, 2008. (75) Inventors: Varun Bhagwan, San Jose, CA O O (US); Tyrone Wilberforce Andre Publication Classification Grandison, San Jose, CA (US); (51) Int. Cl. Daniel Frederick Gruhl, San Jose, G06F 7/30 (2006.01) CA (US); Jan Hendrik Pieper, San Jose, CA (US) (52) U.S. Cl. ............... 707/1: 707/E17.005: 707/E17.134 Correspondence Address: (57) ABSTRACT VAN N. NGUY IBM CORPORATION ALMADEN RESEARCH Embodiments of the invention provide a system and method CENTER 9 for determining preferences from information mashups and, INTELLECTUAL PROPERTY LAW DEPT in particular, a system and method for constructing a ranked C4TAAU2B 650 HARRY ROAD list from multiple sources. In an exemplary embodiment, the SAN JOSE CA 95120-6099 (US) system and method tunably combines multiple ranked lists by 9 computing a score for each item within the list, wherein the (73) Assignee: INTERNATIONAL BUSINESS score is a function of the associated rank of the item within the MACHINES CORPORATION list. In one exemplary embodiment, the function is equal to Armonk, NY (US) s 1/(n(1/p)), where p is a tuning parameter that enables selec s tion between responsiveness in the combined ranking to one (21) Appl. No.: 12/195,098 candidate ranked highly in one source versus responsiveness in the combined ranking to a candidate with lower but broader (22) Filed: Aug.