Building a Security Appliance Based on FreeBSD

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Introduction to the FreeBSD World

Introduction to the FreeBSD World, Advanced Course. Netstudent, http://netstudent.polito.it. E. Richiardone [email protected], May 2009. CC-by http://creativecommons.org/licenses/by/2.5/it/

The FreeBSD project - 1
· It is an open software project, partly funded
· Its goal is to maintain and develop the FreeBSD operating system
· It first appeared on CD-ROM as FreeBSD 1.0 in 1993
· It derives from a patchkit for 386BSD and inherits code from the 1977 Berkeley version of UNIX
· Legal problems slowed it down; release 2.0 followed in 1995 with royalty-free code
· Since release 5.0 (2003) it has had the structure it has today
· Available for 32- and 64-bit x86, ia64, MIPS, ppc, sparc...
· The mascot (Beastie) was born in 1984

The FreeBSD project - 2
· Heir to 4.4BSD (it is the same people...)
· Stable system; steady development; very clear, tidy and well-commented code
· Well-maintained official documentation
· Very permissive license, which often attracts companies for commercial projects:
· occasionally outside parties contribute from-scratch implementations (e.g. Intel, GEOM, atheros, NDISwrapper, ZFS)
· sometimes they do not (e.g. Windows NT)
· Simplification of many traditional UNIX features

What it is
The FreeBSD project includes:
· A base system
· Bootloader, kernel, modules, base libraries, base commands and utilities, traditional services
· Complete sources in /usr/src (~500MB)
· It is already fairly complete (e.g. ipfw, ppp, bind, ...)
· A management system for additional software
· Ports and packages
· Documentation, support channels, development tools
· e.g. Handbook,

GELI Boot: Booting from Encrypted Disks on FreeBSD

GELI Boot: Booting from Encrypted Disks on FreeBSD. Allan Jude, ScaleEngine Inc. [email protected] twitter: @allanjude

Introduction: Allan Jude
● 13 Years as FreeBSD Server Admin
● FreeBSD src/doc committer (focus: ZFS, bhyve, ucl, xo)
● Co-Author of “FreeBSD Mastery: ZFS” and “FreeBSD Mastery: Advanced ZFS” with Michael W. Lucas (For sale in the hallway)
● Architect of the ScaleEngine CDN (HTTP and Video)
● Host of BSDNow.tv & TechSNAP.tv Podcasts
● Use ZFS for large collections of videos, extremely large website caches, mirrors of PC-BSD pkgs and RaspBSD
● Single Handedly Manage Over 1000TB of ZFS Storage

Overview
● Do a lot of work with ZFS
● Helped build the ZFS bits of the installer
● Integrated ZFS Boot Environments
● Created ZFS Boot Env. Menu
● ZFS Boot Env. do not work with GELI
● Booting from GELI encrypted pool requires creating an unencrypted “boot pool” with the kernel and GELI module
● Boot Environments are awesome, you should use them too

I Have Written A Thing
● I am a very novice C programmer
● Implemented a minimal version of GELI in the gpt{,zfs}boot (UFS and ZFS) bootcodes
● Took a lot of time to understand the existing bootcode and how it works
● Took a lot of learning about C
● The existing boot code is terrible, and needs much love, too much copy-pasta
● Had to navigate many obstacles
● but, it works!

How Do Computers Even Work?
● BIOS reads the 512-byte MBR
● Consists of a 446-byte bootstrap program and the partition table (4 entries)
● This bootstrap is then executed (boot0.S)
● It examines the partition table and finds the active partition, reads the first 512 bytes
● This is boot1.
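The MBR layout described in the slides (446 bytes of bootstrap code followed by a four-entry partition table) can be illustrated with a short Python sketch. This is not the boot0 code from the talk. The function names, the dictionary keys, and the demo sector are hypothetical, and the 16-byte entry layout and the trailing 0x55AA signature come from the conventional MBR format rather than from the slides.

```python
import struct

SECTOR_SIZE = 512      # the BIOS reads one 512-byte sector
BOOTSTRAP_SIZE = 446   # bootstrap program, as stated in the slides
ENTRY_SIZE = 16        # conventional size of one partition entry (assumption)
NUM_ENTRIES = 4        # four entries, as stated in the slides
ACTIVE_FLAG = 0x80     # conventional "active/bootable" status byte (assumption)


def parse_mbr(sector: bytes):
    """Split an MBR sector into bootstrap code and partition entries."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly 512 bytes")
    if sector[510:512] != b"\x55\xaa":
        raise ValueError("missing 0x55AA boot signature")
    bootstrap = sector[:BOOTSTRAP_SIZE]
    entries = []
    for i in range(NUM_ENTRIES):
        start = BOOTSTRAP_SIZE + i * ENTRY_SIZE
        raw = sector[start:start + ENTRY_SIZE]
        # status(1) CHS(3, skipped) type(1) CHS(3, skipped) LBA start(4) count(4)
        status, ptype, lba_start, count = struct.unpack("<B3xB3xII", raw)
        entries.append({
            "index": i,
            "active": status == ACTIVE_FLAG,
            "type": ptype,
            "lba_start": lba_start,
            "sectors": count,
        })
    return bootstrap, entries


def find_active(entries):
    """Return the first entry flagged active, or None if there is none."""
    for entry in entries:
        if entry["active"]:
            return entry
    return None


def build_demo_sector() -> bytes:
    """Build a synthetic MBR with one active entry in the second slot."""
    sector = bytearray(SECTOR_SIZE)
    # 0xA5 is the MBR partition type conventionally used for FreeBSD.
    entry = struct.pack("<B3xB3xII", ACTIVE_FLAG, 0xA5, 63, 2048)
    offset = BOOTSTRAP_SIZE + ENTRY_SIZE
    sector[offset:offset + ENTRY_SIZE] = entry
    sector[510:512] = b"\x55\xaa"
    return bytes(sector)


if __name__ == "__main__":
    bootstrap, entries = parse_mbr(build_demo_sector())
    print(len(bootstrap), find_active(entries))
```

The sketch stops at locating the active partition. Reading that partition's first 512 bytes (boot1 in the slides) would need access to a real disk image.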

Institutionalizing FreeBSD Isolated and Virtualized Hosts Using bsdinstall(8), zfs(8) and nfsd(8)

Institutionalizing FreeBSD Isolated and Virtualized Hosts Using bsdinstall(8), zfs(8) and nfsd(8). [email protected] @MichaelDexter, BSDCan 2018

Jails and bhyve… FreeBSD’s had Isolation since 2000 and Virtualization since 2014. Why are they still strangers?

Integrating as first-class features. This example, but this is not FreeBSD-exclusive.

jail(8) and bhyve(8) “guests”: Application Binary Interface vs. Instruction Set Architecture

bsdinstall(8): the FreeBSD installer. zfs(8): the best file system/volume manager available. nfsd(8): the Network File System.

Broad Motivations: Virtualization! Containers! Docker! Zones! Droplets! More more more!

My Motivations
2003: Jails to mitigate “RPM Hell”
2011: “bhyve sounds interesting...”
2017: Mitigating Regression Hell
2018: OpenZFS EVERYWHERE

A Tale of Two Regressions. Listen up.

Regression One: FreeBSD Commit r324161, “MFV r323796: fix memory leak in [ZFS] g_bio zone introduced in r320452”
Bug: r320452: June 28th, 2017
Fix: r324162: October 1st, 2017
3,710 Commits and 3 Months Later (June 28th through October 1st)
BUT: July 27th, FreeNAS MFC. Slips into FreeNAS 11.1, released December 13th. Fixed in FreeNAS January 18th.
3 Months in FreeBSD HEAD, 36 Days

GELI(8), FreeBSD System Manager's Manual: geli

GELI(8) FreeBSD System Manager’s Manual GELI(8)

NAME
geli - control utility for cryptographic GEOM class

SYNOPSIS
To compile GEOM ELI into your kernel, place the following lines in your kernel configuration file:

    device crypto
    options GEOM_ELI

Alternately, to load the GEOM ELI module at boot time, place the following line in your loader.conf(5):

    geom_eli_load="YES"

Usage of the geli(8) utility:

    geli init [-bPv] [-a algo] [-i iterations] [-K newkeyfile] [-l keylen] [-s sectorsize] prov
    geli label - an alias for init
    geli attach [-dpv] [-k keyfile] prov
    geli detach [-fl] prov ...
    geli stop - an alias for detach
    geli onetime [-d] [-a algo] [-l keylen] [-s sectorsize] prov ...
    geli setkey [-pPv] [-i iterations] [-k keyfile] [-K newkeyfile] [-n keyno] prov
    geli delkey [-afv] [-n keyno] prov
    geli kill [-av] [prov ...]
    geli backup [-v] prov file
    geli restore [-v] file prov
    geli clear [-v] prov ...
    geli dump [-v] prov ...
    geli list
    geli status
    geli load
    geli unload

DESCRIPTION
The geli utility is used to configure encryption on GEOM providers. The following is a list of the most important features:
• Utilizes the crypto(9) framework, so when there is crypto hardware available, geli will make use of it automatically.
• Supports many cryptographic algorithms (currently AES, Blowfish and 3DES).
• Can create a key from a couple of components (user entered passphrase, random bits from a file, etc.).
• Allows encryption of the root partition: the user will be asked for the passphrase before the root file system is mounted.
• The passphrase of the user is strengthened with: B. Kaliski, PKCS #5: Password-Based Cryptography Specification, Version 2.0.
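The passphrase strengthening mentioned in the last feature refers to PKCS #5 v2.0, whose key derivation function is PBKDF2. The sketch below uses Python's standard `hashlib.pbkdf2_hmac` to show the general idea. It is not geli's implementation: the function name is hypothetical, and the hash, salt size, iteration count and key length are illustrative assumptions, not geli's actual parameters.

```python
import hashlib
import os


def strengthen_passphrase(passphrase: str, salt: bytes, iterations: int,
                          key_len: int = 64, hash_name: str = "sha512") -> bytes:
    """Derive key material from a passphrase with PBKDF2 (PKCS #5 v2.0).

    All defaults here are illustrative assumptions, not geli's real settings.
    """
    return hashlib.pbkdf2_hmac(
        hash_name,
        passphrase.encode("utf-8"),
        salt,
        iterations,
        dklen=key_len,
    )


if __name__ == "__main__":
    salt = os.urandom(64)  # a random salt is stored alongside the encrypted data
    key = strengthen_passphrase("correct horse battery staple", salt, 100_000)
    print(len(key), "bytes of derived key material")
```

A higher iteration count makes each passphrase guess more expensive for an attacker, at the cost of a slower attach. That is the trade-off the `-i iterations` option in the synopsis exposes.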

Full Disk Encryption

Full Disk Encryption. David Klaftenegger, Department of Information Technology, Uppsala University, Sweden. 22 March 2019, Cryptoparty.

Outline: Background, Software, LUKS, Questions

Caveat Auditor
• this talk contains opinions
• my opinions, not the university’s
• nor do I claim to be an expert
• ... so expect some imprecision and errors

What’s the problem? Why encrypt data?
• important to you (that I can’t see it)
• protect in case of device loss? theft? police? nation state attackers?

My choices
• loss / theft
• broken device
• selling device
• singular access by evil maid

ZFS Boot Environments Reloaded (NLUUG)

ZFS Boot Environments Reloaded, NLUUG. Sławomir Wojciech Wojtczak [email protected] vermaden.wordpress.com twitter.com/vermaden https://is.gd/BECTL

Intro

What is ZFS Boot Environment? What it is:

It is a bootable clone/snapshot of the working system.
● In ZFS terminology it is a clone of the snapshot: ZFS dataset → ZFS dataset@snapshot → ZFS clone (origin=dataset@snapshot)
● In ZFS (as everywhere) a snapshot is read-only.
● In ZFS a clone can be mounted read-write (and you can boot from it).
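The dataset → snapshot → clone chain can be sketched as the two `zfs` commands that produce it. The helper below only builds the argument lists and does not run anything. Its name and the dataset, snapshot and boot-environment names are hypothetical. It covers only the snapshot and clone steps, not what is needed to actually boot from the clone, and it is not the tooling presented in the talk.

```python
def boot_environment_commands(source: str, be_name: str, snapshot: str):
    """Return the zfs commands for dataset -> dataset@snapshot -> clone.

    source:   existing dataset, e.g. "zroot/ROOT/default" (hypothetical name)
    be_name:  name of the new clone, created next to the source dataset
    snapshot: snapshot label to create on the source
    """
    if "/" not in source:
        raise ValueError("source must be a dataset below a pool, e.g. pool/ROOT/name")
    parent = source.rsplit("/", 1)[0]
    snap = f"{source}@{snapshot}"      # the read-only snapshot
    clone = f"{parent}/{be_name}"      # the writable clone with origin=snap
    return [
        ["zfs", "snapshot", snap],
        ["zfs", "clone", snap, clone],
    ]


if __name__ == "__main__":
    for cmd in boot_environment_commands("zroot/ROOT/default", "pre-upgrade", "2020-01-01"):
        print(" ".join(cmd))
```

Returning argument lists instead of executing them keeps the sketch safe to run anywhere. On a real system the lists could be passed to `subprocess.run`, with root privileges.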

Introduction to the FreeBSD World: Advanced Course

Introduction to the FreeBSD World, Advanced Course
• Structure
• Installation
• Configuration
• The ports
• Management
• Netstudent http://netstudent.polito.it
• E. Richiardone [email protected]
• November 2012
• CC-by http://creativecommons.org/licenses/by/3.0/it/

The FreeBSD project - 1
• It is an open software project
• Its goal is to maintain and develop the FreeBSD operating system
• It first appeared on CD-ROM as FreeBSD 1.0 in 1993
• It derives from a patchkit for 386BSD and inherits code from the 1977 Berkeley version of UNIX
• Legal problems slowed it down; release 2.0 followed in 1995 with royalty-free code
• Since release 4.0 (2000) it has had the structure it has today
• Available for 32- and 64-bit x86, ia64, MIPS, ppc, sparc...
• The mascot (Beastie) was born in 1984

The FreeBSD project - 2
• Heir to 4.4BSD (it is the same people...)
• Stable system; steady development; very clear, tidy and well-commented code
• Well-maintained official documentation
• Very permissive license, which often attracts companies for commercial projects:
• occasionally projects contribute from-scratch implementations (e.g. Intel, GEOM, NDISwrapper, ZFS, GNU/Linux emulation)
• Simplification of many traditional UNIX features

What it is
The FreeBSD project includes:
• A base system
• Bootloader, kernel, modules, base libraries, base commands and utilities, traditional services
• Complete sources in /usr/src (~500MB)
• It is already complete (e.g. ipfw, ppp, bind, ...)
• A management system for additional software
• Ports and packages
• Documentation, support channels, development tools

FreeBSD Enterprise Storage (Polish BSD User Group, 2020/02/11)

FreeBSD Enterprise Storage. Polish BSD User Group, 2020/02/11. Sławomir Wojciech Wojtczak [email protected] vermaden.wordpress.com twitter.com/vermaden bsd.network/@vermaden https://is.gd/bsdstg

What is Enterprise?

What is Enterprise Storage?
The wikipedia.org/wiki/enterprise_storage page says nothing about enterprise. It just redirects to the wikipedia.org/wiki/data_storage page. The other wikipedia.org/wiki/computer_data_storage page does the same. The wikipedia.org/wiki/enterprise page is just a meta page with links.

Common Characteristics of Enterprise Storage
● Category that includes services/products designed for large organizations.
● Can handle large volumes of data and large numbers of simultaneous users.
● Involves centralized storage repositories such as SAN or NAS devices.
● Requires more time and experience/expertise to set up and operate.
● Generally costs more than consumer or small business storage devices.
● Generally offers higher reliability/availability/scalability.

EnterpriCe or EnterpriSe?
DuckDuckGo does not provide a search results count. A Google search for the word enterprice gives ~ 1 )00 000 results. A Google search for the word enterprise gives ~ 1 000 000 000 results (1000 times more).
● Most dictionaries send you from the word enterprice to the term enterprise.
● Given the PRICE of many enterprise solutions it could be enterPRICE ...
● ... or enterpri$e as well.

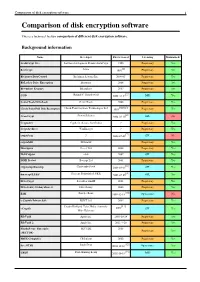

Comparison of Disk Encryption Software

Comparison of disk encryption software

This is a technical feature comparison of different disk encryption software.

Background information

| Name | Developer | First released | Licensing | Maintained? |
|---|---|---|---|---|
| ArchiCrypt Live | Softwaredevelopment Remus ArchiCrypt | 1998 | Proprietary | Yes |
| BestCrypt [1] | Jetico | 1993 | Proprietary | Yes |
| BitArmor DataControl | BitArmor Systems Inc. | 2008-05 | Proprietary | Yes |
| BitLocker Drive Encryption | Microsoft | 2006 | Proprietary | Yes |
| Bloombase Keyparc | Bloombase | 2007 | Proprietary | Yes |
| CGD [2] | Roland C. Dowdeswell | 2002-10-04 | BSD | Yes |
| CenterTools DriveLock | CenterTools | 2008 | Proprietary | Yes |
| Check Point Full Disk Encryption [3][4][5] | Check Point Software Technologies Ltd | 1999 | Proprietary | Yes |
| CrossCrypt [6] | Steven Scherrer | 2004-02-10 | GPL | No |
| Cryptainer | Cypherix (Secure-Soft India) | ? | Proprietary | Yes |
| CryptArchiver | WinEncrypt | ? | Proprietary | Yes |
| cryptoloop [7] | ? | 2003-07-02 | GPL | No |
| cryptoMill | SEAhawk | | Proprietary | Yes |
| Discryptor | Cosect Ltd. | 2008 | Proprietary | Yes |
| DiskCryptor | ntldr | 2007 | GPL | Yes |
| DISK Protect | Becrypt Ltd | 2001 | Proprietary | Yes |
| cryptsetup/dmsetup [8] | Christophe Saout | 2004-03-11 | GPL | Yes |
| dm-crypt/LUKS [9] | Clemens Fruhwirth (LUKS) | 2005-02-05 | GPL | Yes |
| DriveCrypt | SecurStar GmbH | 2001 | Proprietary | Yes |
| DriveSentry GoAnywhere 2 | DriveSentry | 2008 | Proprietary | Yes |
| E4M [10] | Paul Le Roux | 1998-12-18 | Open source | No |
| e-Capsule Private Safe | EISST Ltd. | 2005 | Proprietary | Yes |
| eCryptfs [11] | Dustin Kirkland, Tyler Hicks (formerly Mike Halcrow) | 2005 | GPL | Yes |
| FileVault | Apple Inc. | 2003-10-24 | Proprietary | Yes |
| FileVault 2 | Apple Inc. | 2011-7-20 | Proprietary | |

FreeNAS® 11.2-U3 User Guide

FreeNAS® 11.2-U3 User Guide, March 2019 Edition

FreeNAS® is © 2011-2019 iXsystems. FreeNAS® and the FreeNAS® logo are registered trademarks of iXsystems. FreeBSD® is a registered trademark of the FreeBSD Foundation. Written by users of the FreeNAS® network-attached storage operating system. Version 11.2. Copyright © 2011-2019 iXsystems (https://www.ixsystems.com/)

CONTENTS
Welcome
Typographic Conventions
1 Introduction
1.1 New Features in 11.2
1.1.1 RELEASE-U1
1.1.2 U2
1.1.3 U3
1.2 Path and Name Lengths
1.3 Hardware Recommendations
1.3.1 RAM
1.3.2 The Operating System Device
1.3.3 Storage Disks and Controllers
1.3.4 Network Interfaces
1.4 Getting Started with ZFS
2 Installing and Upgrading
2.1 Getting FreeNAS®
2.2 Preparing the Media

Seven Ways to Increase Security in a New FreeBSD Installation, by Mariusz Zaborski

Seven Ways to Increase Security in a New FreeBSD Installation, BY MARIUSZ ZABORSKI

FreeBSD can be used as a desktop or as a server operating system. When setting up new boxes, it is crucial to choose the most secure configuration. We have a lot of data on our computers, and it all needs to be protected. This article describes seven configuration improvements that everyone should remember when configuring a new FreeBSD box.

1. Full Disk Encryption

With a fresh installation, it is important to decide about hard drive encryption, particularly since it may be challenging to add later. The purpose of disk encryption is to protect data stored on the system. We store a lot of personal and business data on our computers, and most of the time, we do not want that to be read by unauthorized people. Our laptop may be stolen, and if so, it should be assumed that a thief might gain access to almost every document, photo, and every site we are logged in to through our web browser. Some people may also try to tamper with or read our data when we are far away from our computer. We recommend encrypting all systems, especially servers or personal computers. Encryption of hard disks is relatively inexpensive and the advantages are significant.

In FreeBSD, there are three main ways to encrypt storage:
• GBDE,
• ZFS native encryption,
• GELI.

Geom Based Disk Encryption (GBDE) is the oldest disk encryption mechanism in FreeBSD, but it is currently neglected by developers. GBDE supports only AES-CBC with a 128-bit key length and uses a separate key for each write, which may introduce some CPU overhead.

Open-Source Solutions for Preserving Data Security and

Fachhochschule Hannover, Faculty IV - Business and Computer Science, Applied Computer Science degree program

MASTER'S THESIS: Open-Source Solutions for Preserving Data Security and Plausible Deniability in Typical Notebook Scenarios. Jussi Salzwedel, September 2009

Participants
First examiner: Prof. Dr. rer. nat. Josef von Helden, Fachhochschule Hannover, Ricklinger Stadtweg 120, 30459 Hannover. E-mail: [email protected] Telephone: 05 11 / 92 96 - 15 00
Second examiner: Prof. Dr. rer. nat. Carsten Kleiner, Fachhochschule Hannover, Ricklinger Stadtweg 120, 30459 Hannover. E-mail: [email protected] Telephone: 05 11 / 92 96 - 18 35
Author: Jussi Salzwedel. E-mail: [email protected]

Declaration
I hereby declare that I wrote the submitted master's thesis independently and without outside help, that I used no sources or aids other than those I have indicated, and that I have marked as such all passages taken verbatim or in substance from the works used. Place, date, signature.

Contents
1 Introduction
1.1 Introductory remarks
1.2 Motivation
1.3 Typographic conventions
1.4 Scope
2 Use cases
2.1 A1: Notebook goes missing
2.2 A2: Notebook goes missing and is found after the loss
2.3 A3: Using the notebook without disclosing sensitive data
3 Security requirements
3.1 S0: Open source
3.2 S1: Ensuring confidentiality
3.3 S2: Verifiability of integrity
3.4 S3: Plausible deniability
4 Theoretical foundations
4.1 Encryption methods
4.1.1 Advanced Encryption Standard
4.1.2 Blowfish
4.1.3 Camellia
4.1.4 CAST5