Interactive Non-Photorealistic

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Graphic Design & Illustration Using Adobe Illustrator CC

Graphic Design & Illustration Using Adobe Illustrator CC Dear Candidate, In preparation for the Graphic Design & Illustration Using Adobe Illustrator CC certification exam, we’ve put together a set of practice materials and example exam items for you to review. What you’ll find in this packet are: • Topic areas and objectives for the exam. • Links to practice tutorials and files. • Practice exam items. We’ve assembled excerpted material from the Adobe Illustrator CC Learn and Support page, and the Visual Design curriculum to highlight a few of the more challenging techniques covered on the exam. You can work through these technical guides with the provided image files (provided separately). Additionally, we’ve included the certification objectives so that you are aware of the elements that are covered on the exam. Finally, we’ve included practice exam items to give you a feel for some of the items. These materials are meant to help you familiarize yourself with the areas of the exam so are not comprehensive across all the objectives. Thank you, Adobe Education Adobe, the Adobe logo, Adobe Photoshop and Creative Cloud are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. All other trademarks are the property of their respective owners. © 2017 Adobe Systems Incorporated. All rights reserved. 
Graphic Design & Illustration Using Adobe Illustrator CC Adobe Certified Associate in Graphic Design & Illustration Using Adobe Illustrator CC (2015) Exam Structure The following lists the topic areas for the exam: • Setting project requirements • Understanding Digital Graphics and Illustrations • Understanding Adobe Illustrator • Creating Digital Graphics and Illustrations Using Adobe Illustrator • Archive, Export, and Publish Graphics Using Adobe Illustrator Number of Questions and Time • 40 questions • 50 minutes Exam Objectives Domain 1.0 Setting Project Requirements 1.1 Identify the purpose, audience, and audience needs for preparing graphics and illustrations. -

2.7 Labeling a Picture or a Diagram

BPS Curriculum Framework > Informational/Explanatory Text > Grade 2 > Drafting and Revising > Labeling a picture or diagram. 2.7 Labeling a picture or a diagram. Learning Objective: Students will label pictures and diagrams from the outdoor classroom. Mentor Texts: “Volcanoes”; “Bears, Bears, Bears”; “Apples”; “The Pumpkin Book”; “The Kids Horse Book”, Sylvia Funston. Connection: We have been noticing the beautiful pictures and helpful diagrams in our mentor text for “all-about” books. We have even added pictures and diagrams to our own writing to really give our readers a better idea of what our subject looks like, but there is still one more thing we need to think about. We need to be sure our readers understand what they are looking at. Teaching: Labeling our pictures gives our readers a better understanding of what they are looking at. Our labels help the reader know exactly what they are seeing. It may tell the time of the year, the location, the size, or the specific parts of the subject they are looking at. Let’s look at The “Saddle Up!” Section in the “Kids book of Horses” and see how the author has used labels to teach us the parts of a saddle. Try It: Think about something in the classroom and tell your partner what you might have to label if you were going to illustrate this object. Share out with class. Instructions to students for Independent Outdoor Writing: 1. Today, when we go out to the outdoor classroom we are going to choose an object to illustrate. It can be from our classroom list or anything else you see outside (a boat in the harbor, a truck or car going by, a small animal scampering away, or items on the playground: a slide or climbing frame. -

A Non-Photorealistic Lighting Model for Automatic Technical Illustration

A Non-Photorealistic Lighting Model For Automatic Technical Illustration. Amy Gooch, Bruce Gooch, Peter Shirley, Elaine Cohen. Department of Computer Science, University of Utah, http://www.cs.utah.edu/ Abstract: Phong-shaded 3D imagery does not provide geometric information of the same richness as human-drawn technical illustrations. A non-photorealistic lighting model is presented that attempts to narrow this gap. The model is based on practice in traditional technical illustration, where the lighting model uses both luminance and changes in hue to indicate surface orientation, reserving extreme lights and darks for edge lines and highlights. The lighting model allows shading to occur only in mid-tones so that edge lines and highlights remain visually prominent. In addition, we show how this lighting model is modified when portraying models of metal objects. These illustration methods give a clearer picture of shape, structure, and material composition than traditional computer graphics methods. CR Categories: I.3.0 [Computer Graphics]: General; I.3.6 [Computer Graphics]: Methodology and Techniques. Keywords: illustration, non-photorealistic rendering, silhouettes, lighting models, tone, color, shading. Figure 1: The non-photorealistic cool (blue) to warm (tan) transition on the skin of the garlic in this non-technical setting is an example of the technique automated in this paper for technical illustrations. Colored pencil drawing by Susan Ashurst. 1 Introduction: The advent of photography and computers has not replaced artists, [...] dynamic range shading is needed for the interior. As artists have discovered, adding a somewhat artificial hue shift to shading helps imply shape without requiring a large dynamic range. -
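The cool-to-warm idea in the abstract above can be illustrated with a small sketch. This is not the paper's exact formula: the function name `cool_to_warm`, the two RGB constants, and the choice to map surfaces facing the light to the warm tone are all assumptions made for illustration. It only shows how a hue shift between two mid-tones can encode surface orientation without using extreme lights or darks.

```python
import numpy as np

# Illustrative mid-tone colors (assumed values, not taken from the paper).
COOL = np.array([0.35, 0.45, 0.75])  # bluish tone for surfaces facing away
WARM = np.array([0.85, 0.75, 0.50])  # tan tone for surfaces facing the light


def cool_to_warm(normal, light_dir, cool=COOL, warm=WARM):
    """Blend between a cool and a warm color based on surface orientation."""
    n = np.asarray(normal, dtype=float)
    l = np.asarray(light_dir, dtype=float)
    n = n / np.linalg.norm(n)
    l = l / np.linalg.norm(l)
    # Map the cosine of the angle from [-1, 1] to a blend weight in [0, 1].
    t = (1.0 + float(np.dot(n, l))) / 2.0
    return t * warm + (1.0 - t) * cool
```

Because the blend weight never clamps to zero, surfaces turned away from the light still vary in color, which is the property that distinguishes this kind of shading from a clamped diffuse term.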

CT.ENG CAD Drafting/Engineering Graphics

CT.ENG CAD Drafting/Engineering Graphics Essential Discipline Goals -Develop and apply the technical competency and related academic skills that allow for economic independence and career satisfaction. -Acquire the essential learnings and values that foster continued education throughout life. -Demonstrate the ability to communicate, solve problems, work individually and in teams, and apply information effectively. -Develop technological literacy and the ability to adapt to future change. Standards Indicators CT.ENG.05 Communicate graphically through sketches of multi-view and pictorial drawings that meet industry standards. CT.ENG.05.01 Read and apply the rules for sketching in relation to proportion, placement of the views, and drawing medium needed CT.ENG.05.02 Select necessary views for the problem CT.ENG.05.03 Use blocking technique for size, shape, and details CT.ENG.05.04 Apply surface shading techniques where needed CT.ENG.05.05 Identify the uses of sketches in industry CT.ENG.05.06 Identify and describe the terms used in sketching CT.ENG.10 Produce multi-view orthographic projections to industry standards. 
CT.ENG.10.01 Define and apply the terms related to multi-view drawings CT.ENG.10.02 Apply the rules for orthographic projection CT.ENG.10.03 Review and analyze the working drawing problem and specifications CT.ENG.10.04 Visualize and select the necessary views CT.ENG.10.05 Identify the types of lines, lettering, and drawing medium needed CT.ENG.10.06 Solve fractional, decimal, and metric equations as needed CT.ENG.10.06 Use concepts related to units of measurement CT.ENG.10.07 Analyze and identify the need for sectional and/or auxiliary views CT.ENG.10.08 Define and apply the rules for sections and auxiliary views CT.ENG.10.09 Visualize and draw geometric figures in two dimens CT.ENG.10.10 Describe, compare and classify geometric figures CT.ENG.10.11 Apply properties and relationships of circles to solve circle problems CT.ENG.15 Produce development layouts of various shaped objects to industry standards. -

Pen-And-Ink Illustrations Painterly Rendering Cartoon Shading Technical Illustrations

CSCI 420 Computer Graphics Lecture 24 Non-Photorealistic Rendering Pen-and-ink Illustrations Painterly Rendering Cartoon Shading Technical Illustrations Jernej Barbic University of Southern California 1 Goals of Computer Graphics • Traditional: Photorealism • Sometimes, we want more – Cartoons – Artistic expression in paint, pen-and-ink – Technical illustrations – Scientific visualization [Lecture next week] cartoon shading 2 Non-Photorealistic Rendering “A means of creating imagery that does not aspire to realism” - Stuart Green Cassidy Curtis 1998 David Gainey 3 Non-photorealistic Rendering Also called: • Expressive graphics • Artistic rendering • Non-realistic graphics Source: ATI • Art-based rendering • Psychographics 4 Some NPR Categories • Pen-and-Ink illustration – Techniques: cross-hatching, outlines, line art, etc. • Painterly rendering – Styles: impressionist, expressionist, pointillist, etc. • Cartoons – Effects: cartoon shading, distortion, etc. • Technical illustrations – Characteristics: Matte shading, edge lines, etc. • Scientific visualization – Methods: splatting, hedgehogs, etc. 5 Outline • Pen-and-Ink Illustrations • Painterly Rendering • Cartoon Shading • Technical Illustrations 6 Hue • Perception of “distinct” colors by humans • Red • Green • Blue • Yellow Source: Wikipedia Hue Scale 7 Tone • Perception of “brightness” of a color by humans • Also called lightness (scale: darker to lighter) • Important in NPR Source: Wikipedia 8 Pen-and-Ink Illustrations Winkenbach and Salesin 1994 9 Pen-and-Ink Illustrations • Strokes -
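The hue and tone slides above can be made concrete with a short sketch using Python's standard `colorsys` module. The helper name `hue_and_tone` is hypothetical, and HLS lightness is used only as a rough stand-in for perceived tone; the slides do not prescribe a particular color model.

```python
import colorsys


def hue_and_tone(rgb):
    """Return (hue in degrees, lightness in [0, 1]) for an RGB triple in [0, 1]."""
    h, l, _s = colorsys.rgb_to_hls(*rgb)
    return h * 360.0, l


# Three reds that share a hue but differ in tone (darker to lighter).
dark_red = hue_and_tone((0.5, 0.0, 0.0))
pure_red = hue_and_tone((1.0, 0.0, 0.0))
light_red = hue_and_tone((1.0, 0.5, 0.5))
```

Separating the two quantities this way is what lets an NPR shader vary hue and tone independently.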

More Guns, More Crime

More Guns, More Crime Author(s): Mark Duggan Source: Journal of Political Economy, Vol. 109, No. 5 (October 2001), pp. 1086-1114 Published by: The University of Chicago Press Stable URL: http://www.jstor.org/stable/10.1086/322833 Accessed: 03-04-2018 19:37 UTC JSTOR is a not-for-profit service that helps scholars, researchers, and students discover, use, and build upon a wide range of content in a trusted digital archive. We use information technology and tools to increase productivity and facilitate new forms of scholarship. For more information about JSTOR, please contact [email protected]. Your use of the JSTOR archive indicates your acceptance of the Terms & Conditions of Use, available at http://about.jstor.org/terms The University of Chicago Press is collaborating with JSTOR to digitize, preserve and extend access to Journal of Political Economy This content downloaded from 153.90.149.52 on Tue, 03 Apr 2018 19:37:56 UTC All use subject to http://about.jstor.org/terms More Guns, More Crime Mark Duggan University of Chicago and National Bureau of Economic Research This paper examines the relationship between gun ownership and crime. Previous research has suffered from a lack of reliable data on gun ownership. I exploit a unique data set to reliably estimate annual rates of gun ownership at both the state and the county levels during the past two decades. My findings demonstrate that changes in gun ownership are significantly positively related to changes in the homicide rate, with this relationship driven almost entirely by an impact of gun ownership on murders in which a gun is used. -

Graphics Formats and Tools

Graphics formats and tools [Images to be received] Stand DRG. The different types of graphics format: Graphics formats can be classified broadly in 3 different groups: vector, raster (or bitmap), and page description language (PDL). Vector formats: In a vector format (revisable) a line is defined by 2 points, and the text can be edited. Raster (bitmap) format: A raster (bitmap) format is like a photograph; the number of dots/cm defines the quality of the drawing. Page description language (PDL) formats: A page description language is a programming language that describes the appearance of a printed page at a higher level than an actual output bitmap. Adobe’s PostScript (.ps), Encapsulated PostScript (.eps) and Portable Document Format (.pdf) are some of the best known page description languages. Encapsulated PostScript (.eps) is commonly used for graphics. It can contain both unstructured vector information as well as raster (bitmap) data. Since it comprises a mixture of data, its quality and usability are variable. Background: Since the invention of computing, or more precisely since the creation of computer-assisted drawing (CAD), two main professions have evolved: desktop publishing (DTP), developed principally on Macintosh, and computer-assisted drawing or design (CAD), developed principally on IBM (International Business Machines). Use of DTP applications: Used greatly in the fields of marketing, journalism and industrial design, DTP applications fulfil the needs of various professions: from sketches and mock-ups to fashion design, interior design, coachwork (body work) design, web page design, etc. -
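The vector versus raster distinction above can be sketched in a few lines. In this hypothetical example the "vector" line is just its two endpoints in centimetres, and `rasterize_line` (an assumed name, using naive point sampling rather than any particular file format's method) turns it into a grid whose size depends on the chosen dots/cm. The endpoints are assumed to lie inside the page.

```python
def rasterize_line(p0, p1, width_cm, height_cm, dots_per_cm):
    """Sample a two-point line onto a grid of width*dpcm by height*dpcm dots."""
    cols = round(width_cm * dots_per_cm)
    rows = round(height_cm * dots_per_cm)
    grid = [[0] * cols for _ in range(rows)]
    steps = max(cols, rows) * 2  # oversample so no dot along the line is skipped
    for i in range(steps + 1):
        t = i / steps
        x = p0[0] + t * (p1[0] - p0[0])
        y = p0[1] + t * (p1[1] - p0[1])
        c = min(int(x * dots_per_cm), cols - 1)
        r = min(int(y * dots_per_cm), rows - 1)
        grid[r][c] = 1
    return grid


# The same vector description rendered at two resolutions.
line = ((0.0, 0.0), (2.0, 2.0))
coarse = rasterize_line(*line, width_cm=2, height_cm=2, dots_per_cm=2)
fine = rasterize_line(*line, width_cm=2, height_cm=2, dots_per_cm=4)
```

The two endpoints stay the same in both calls; only the raster output changes with resolution, which is why the vector form remains editable while the bitmap's quality is fixed by its dots/cm.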

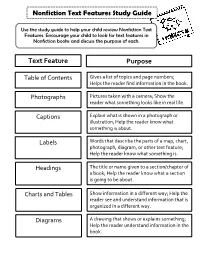

Nonfiction Text Features Study Guide

Nonfiction Text Features Study Guide. Use the study guide to help your child review Nonfiction Text Features. Encourage your child to look for text features in Nonfiction books and discuss the purpose of each.
Text Feature: Purpose
Table of Contents: Gives a list of topics and page numbers; Helps the reader find information in the book.
Photographs: Pictures taken with a camera; Show the reader what something looks like in real life.
Captions: Explain what is shown in a photograph or illustration; Help the reader know what something is about.
Labels: Words that describe the parts of a map, chart, photograph, diagram, or other text feature; Help the reader know what something is.
Headings: The title or name given to a section/chapter of a book; Help the reader know what a section is going to be about.
Charts and Tables: Show information in a different way; Help the reader see and understand information that is organized in a different way.
Diagrams: A drawing that shows or explains something; Help the reader understand information in the book.
Textboxes: A box or some other shape that contains text; Show the reader that information is important or interesting.
Cutaways: A picture of what something looks like on the inside or from another point of view; Help the reader see all parts of something.
Types of Print: Print can be bold, different colors, fonts, and sizes; Show the reader that something is important or interesting.
Close Ups: Photographs that have been zoomed in or enlarged to help the reader see something better. -

Architectural and Engineering Design Department (AEDD) South Portland, Maine 04106 Title:Technical Illustration Catalog Number

Architectural and Engineering Design Department (AEDD) South Portland, Maine 04106 Title: Technical Illustration Catalog Number: AEDD-205 Credit Hours: 3 Total Contact Hours: 60 Lecture: 30 Lab: 30 Instructor: Meridith Comeau Phone: 7415779 email: [email protected] Course Syllabus Course Description This comprehensive course covers technical and perspective forms of three-dimensional drawing, one and two point perspective, shade and shadow, color, and rendering. Extensive sketching, a thorough understanding of technical drawing/graphic concepts, and hands-on experience promote the development of artistic talent as it relates to architectural engineering design. Prerequisite(s): AEDD-100 or AEDD-105 Course Objectives 1. Generate accurate axonometric and isometric drawings. 2. Generate exploded views of assemblies from working drawings. 3. Generate one and two point perspective drawings from working drawings. 4. Integrate the effects of light, shade, and shadow. 5. Generate full-color renderings from working drawings and photographs. Topical Outline of Instruction 1. Overview of course, explanation of examples, and the introduction of sketching techniques. 2. Line technique and values, depiction of surface textures. 3. Introduction of drawing mediums. 4. Generation of axonometric drawings. 5. Generation of isometric drawings. 6. Introduction of one point perspective drawing. 7. Introduction of two point perspective drawing. 8. Introduction of light and shadow. 9. Introduction of shading techniques. 10. Introduction of rendering techniques. Course Requirements 1. Active attendance and participation. 2. Generation of all assigned drawings. 3. Development of a portfolio. Student Evaluation and Grading Work will be evaluated on content, quality, and timeliness. Homework/Sketching/In-class = 30% Critique = 20% Portfolio = 40% Attendance = 10% I use the course portal to post and grade all assignments. -

Illustration Degree Offered: Bachelor of Fine Arts

Illustration Degree Offered: Bachelor of Fine Arts Minors Offered: • Art • Art History • Graphic Design • Illustration • Painting • Sculpture • Theatre Arts The BFA in Illustration focuses on developing drawing skills and their application in a professional environment, including editorial illustration, children's book illustration, web-site development, and character design for animation. Illustration at Daemen: • Demonstrate a high level of accomplishment with materials related to the graphic arts. • Visually present a narrative voice through individual compositions or series. • Work within a variety of media to explore editorial or sequential imagery. • Develop an editorial voice to support campaigns, storylines, or public initiatives. Illustration Emphasis: The BFA in Illustration at Daemen College combines the creative process of a fine artist with the problem solving techniques and communication skills of a commercial designer. Students are taught to brainstorm solutions, generate new ideas, and explore the materials and techniques best suited to present an idea. By graduation, the Illustration student will have experience in editorial, sequential, computer, packaging and storyboard illustration. Illustration studio courses are supported by substantive drawing instruction, as well as fundamental graphic design concepts. The program's focus on exploration and process prepares students to develop a portfolio to successfully acquire freelance illustration work, or a full-time position in the publishing or digital communications industry. Faculty: The Illustration faculty work in a variety of professional situations by providing illustrations to magazines, poster campaigns, and websites. Each maintains a freelance career while simultaneously working with students at Daemen in the development of projects and exhibits. 
4103 Suggested Course Sequence for Illustration: 4897 10/16 For more information, contact Christian Brandjes in the Department of Visual and Performing Arts at [email protected] or 716-839-8463. -

Ellie Rastian Product Designer 017675131412

[email protected] www.65ping.studio 017675131412 Ellie Rastian, Product Designer. Experience: dunnhumby Germany GmbH, 2018 March - 2020 July, Berlin, Germany, Web designer: Starting as a visual designer, I worked on e-commerce projects related to European Market. Soon, I showed my skills as a UX/UI designer and was assigned to design the internal website for the Creative Services Team. I worked with the UX team and CS team to develop the website. Saatchi & Saatchi, 2015 March - 2016 June, Kuala Lumpur, Malaysia, Visual Designer: Involved in ideation and execution for ATL & BTL advertising campaigns, proposals and pitches. Clients: MINI Malaysia, Bank Simpanan Nasional (BSN). Freelancer, 2014 July - Present, Visual Designer, UX Designer, Illustrator: I have spent over seven years crafting and delivering unique digital experiences for global companies and startups such as MINI, Sam Media, Elixir Berlin. Khalij Fars Online, 2008 April - 2010 September, Booshehr, Iran, Financial Accountant. Certification: Nielsen Norman Group (2019), UX Certified; Scrum.org (2020), Professional Scrum Master; University of Minnesota, Coursera (2018 - 2019), User Interface Design Specialization. Education: Malaysian Institute of Art (2014 - 2016), Graphic Design; Shiraz University (2008 - 2010), Bachelor of Business Administration (BBA), Accounting and Finance. Language: English, full professional proficiency; German, limited working proficiency, B1; Persian, full professional proficiency; Malay, elementary proficiency. Skills: Interaction Design, Visual Design, Information [...] Design Tools: Sketch, InVision, -

Methodology for Producing a Hand-Drawn Thematic City Map

Master Thesis: Methodology for Producing a Hand-Drawn Thematic City Map, submitted by: Alika C. Jensen, born on: 27.08.1992 in Dayton, Ohio, USA, submitted for the academic degree of Master of Science (M.Sc.). Date of Submission: 16.10.2017. Supervisors: Prof. Dipl.-Phys. Dr.-Ing. habil. Dirk Burghardt, Technische Universität Dresden; Univ.Prof. Mag.rer.nat. Dr.rer.nat. Georg Gartner, Technische Universität Wien. Statement of Authorship: Herewith I declare that I am the sole author of the thesis named „Methodology for Producing a Hand-Drawn Thematic City Map“ which has been submitted to the study commission of geosciences today. I have fully referenced the ideas and work of others, whether published or unpublished. Literal or analogous citations are clearly marked as such. Dresden, 16.10.2017 Signature Alika C. Jensen. Contents: Title; Statement of Authorship; Contents; Figures; Terminology; 1 Introduction