How an Honor Code Can Make the Difference During Data Collection

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

1Guitar PDF Songs Index

Material on Guitar Website Reference Beginning Guitar Music Guitar Cover Beginning Chords Fingerpicking Bass Runs for Guitar Guitar Christmas Song List Guitar Care Guitar PDF Song Index 1/4/2017 Good Reader Web Downloads to Goodreader How to Use Goodreader Downloading Files to the iPad from iTunes Saving Your Internet Passwords Corrected Guitar and PDF 509 Songs 1/4/2017 A Bushel and a Peck Bad Moon Rising A White Sport Coat Ballad of Davy Crockett All I Ask of You Ballad of Green Berets All My Ex’s Live in Texas Battle Hymn of Aging All My Lovin’ Be Our Guest All My Trials Beautiful Brown Eyes Always On My Mind Because of You Am I That Easy to Forget Beep Beep Amanda - bass runs Beer for My Horses + tab Amazing Grace - D Begin the Beguine A America the Beautiful Besame Mucho American Pie Beyond the Reef Amor Big Rock Candy Mountain And I Love Her Blame It On Bossa Nova And I Love You So Blowin’ in the Wind Annie’s Song Blue Bayou April Love Blue Eyes Crying in the Rain - D, C Aquarius Blue Blue Skies Are You Lonesome Tonight Blueberry Hill Around the World in 80 Days Born to Lose As Tears Go By Both Sides Now Ashokan Farewell Breaking Up Is Hard to Do Autumn Leaves Bridge Over Troubled Water Bring Me Sunshine Moon Baby Blue D, A Bright Lights Big City Back Home Again Bus Stop Bad, Bad Leroy Brown By the Time I Get to Phoenix Bye Bye Love Dream A Little Dream of Me Edelweiss Cab Driver Eight Days A Week Can’t Help Falling El Condor Pasa + tab Can’t Smile Without You Elvira D, C, A Careless Love Enjoy Yourself Charade Eres Tu Chinese Happy -

WS Folk Riot Booklet

1 playing “cover” songs as diverse and influential Meanwhile, due to our leftist leanings and omni- 10,000 Watts of Folk as the Statler Brothers’ “Flowers on the Wall,” presence in the Village, activist Abbie Hoffman 1. I AIN’T KISSING YOU (0:54) by Trixie A. Balm met with we three Squares and co-wrote a theme VANGUARD STUDIOS, NYC (aka Lauren Agnelli) Alas, that deal fell through. though the song for his new live radio show, “Radio Free September 1985 sessions remain, with Tom, Lauren, Bruce and Billy U.S.A.”: heard here for the first time since the playing “cover” songs as diverse and influential debut show back in 1986 at the Village Gate. By 1985, we Washington Squares, having worked, as the Statler Brothers’ “Flowers on the Wall,” Vanguard, an important folk label during the ‘50s sang and played our way through the ‘80’s Richard Hell’s “Love Comes in Spurts,” Lou Reed’s At last, in 1987, Gold Castle/Polydor records who and ‘60s was sold in 1985. Vanguard sold off their Greenwich Village folk scene fray, were ready to “Sweet Jane,” and Johnny Thunders’ “Chinese Rocks.” DID sign us to a deal found the perfect sound classical collection and reissued their folk and then record. The record company interest was there though producer Mitch Easter (of the group Let’s started looking for new acts. With a bunch of well and soon serious recording contracts would dangle Having somewhat mastered those formative nuggets, Active— he also recorded REM’s initial sessions) known original Vanguard producers in the control room: before our fresh (very fresh!) young smirks. -

Rca Goes Canadian!

Volume 4, No. 13 Week of November 22nd , 1965 1 RC A GOES CANADIAN! •GIORGI HA!~ ~~~ r Company Limited This is the fourth in a series of articles from the leaders of the Canadian music industry. In these articles the people at the helm of the industry will express their outlooks and their 9pinions regarding the domestic industry. In the weeks to come, we hope to print comments from every Canadian-minded record company. NEW NATIONA L ISM CANADIAN ARTISTS That sudden upsurge of nationalism took For example, take the show-stoppin g, some people completely by surprise, even bill-topping Canadian dance group. Les in this country. Canadians, traditionally Feux-Follets are probably better kn own in modest people whose West Coast seldom London, England than London, Ontario. knew what their East Coast was doing, There's no question at all about their in were all at once flourishing flags and ask ternational status - but how a bout their ing awkward questions about a national national one? This is a Canadian achieve identity. It was all most confusing. ment and the people of Moose J ;'lW can be But to the Canadian music business just as proud as tr. ~ people of Montreal. to fi nd and promote the talent in this coun (where a little modesty goes a very long With our new nati:mal ism, there is no try. With a new la be l , we can re-release way) this new-found pride in home-grown longer a limited mar>.et for talent in either artists such as Colette Boky, whose re products was the best bit of news since language. -

Assar, EMI BMI Country Awards Win ASCAP Country Honors Honor Writers, Publishers

Follesé, Vassar, EMI BMI Country Awards Win ASCAP Country Honors Honor Writers, Publishers BY PHYLLIS STARK Nashville. Accepting the award were hall of BY DEBORAH EVANS PRICE Brian Wilson, Earle Hagen, and Alabama. NASHVILLE -Keith Follesé took home fame member E.W. Wendell and museum NASHVILLE -Lee Ann Womack's chart- topping Warner -Tamerlane executive VP /GM Tim ASCAP's top award -songwriter of the year - director Kyle Young. hit "I Hope You Dance" was named song of the Wipperman took the stage to accept this year's at the company's 39th annual Country Music Alabama's Randy Owen presented veter- year at the 49th annual BMI Country Awards. publisher of the year honor. The veteran pub- Awards held Nov. 3 at Nashville's Opryland an producer and Spark Entertainment/VFR Toby Keith, Mary Green, and Aimee Mayo shared lishing house netted top prize by accumulat- Hotel. Follesé is the writer of hits "The Way Records managing director of creative songwriter of the year honors, and Warner - ing the highest percentage of copyright own- You Love Me," "Smile," and numerous others. Harold Shedd with ASCAP's Lifetime Tamerlane was named publisher of the year. ership in award songs by placing 11 songs on He previously performed and recorded for Achievement Award. Shedd is best -known BMI president/CEO Frances W. Preston and the most -performed list. A &M as a duo with his wife, Adrienne. for producing Alabama, K.T. Oslin, Shania VP Paul Corbin presented citations honoring the Additional publishers collecting three or Arista Nashville artist Phil Vassar was Twain, Reba McEntire, Toby Keith, and nu- writers and publishers of the past year's 50 most - more awards were Careers -BMG Music Pub- named ASCAP's songwriter /artist of the year merous others. -

Menus for State Dinners During the Carter Administration

Menus for State Dinners during the Carter Administration President Jose Lopez Portillio of Mexico February 14, 1977 Dinner: Shrimp Gumbo Soup Corn Sticks Paul Mason Rare Sherry Supreme of Capon in White Grape Sauce Saffron Rice Asparagus Tips in Butter Charles Krug Gamay Beaujolais Hearts of Lettuce Salad Trappist Cheese Schramsberg Blanc de Blanc Burnt Almond Ice Cream Ring Butterscotch Sauce Cookies Demitasse Entertainment: Rudolf Serkin Program Prelude and Fugue in E minor, Felix Mendelssohn Sonata in F minor, Op. 57, Ludwig van Beethoven (“Appassionata”) Allegro assai Andante con moto (variazioni); Allegro ma non troppo-Presto Prime Minister Pierre Trudeau of Canada February 21, 1977 Dinner: Alaskan King Crab in Herb Sauce Saint Michelle Chenin Blanc Roast Stuffed Saddle of Lamb Timbale of Spinach Glazed Carrots Louis Martini Cabernet Sauvignon Watercress and Mushroom Salad Wisconsin Blue Cheese Beaulieu Extra Dry Orange Sherbet Ambrosia Cookies Demitasse Entertainment: The Young Columbians (19 Students from the Columbia School of Theatrical Arts, Inc., in Columbia Maryland) In 30 minutes, they cause American History to unfold through classic songs and dances from colonial days to the present. U.S. Marine Band will play selections from American Broadway musicals and movies in the foyer during dinner. U.S. Army Strings will stroll through the Dining Room during dessert. A Marine Corps harpist will provide music in the Diplomatic Reception Room where guests arrive. Prime Minister Rabin of Israel March 7, 1977 Dinner: Cold Cucumber Soup Bread Sticks Baked Stripped Bass Eggplant Braised Celery Charles Krug Johannisberg Riesling Hearts of Palm and Watercress Vinaigrette Almaden Blanc de Blancs Macedoine of Fresh Fruit Macaroons Entertainment: The Alexandria Quartet will perform a brief musical interlude in the Dining Room following the toast. -

Some of Our Karaoke Songs

Some of our Karaoke songs ARTIST SONG MF CODE TRACK 10 Years Wasteland SC3460 07 10 Years Wasteland SC3460 15 100 Proof Aged Somebody's Been SC8333 09 10000 Maniacs Because The Night SC8113 05 10000 Maniacs Candy Everybody Wants DK82 04 10000 Maniacs More Than This SC8390 06 10000 Maniacs These Are The Days SC8466 14 10000 Maniacs Trouble Me SC8439 03 10cc Donna SF090 15 10cc Dreadlock Holiday ZKH20 10 10cc Good Morning Judge XPK2-09 15 10cc I'm Mandy SF079 03 10cc I'm Not In Love SFD701-6 05 10cc Rubber Bullets SF071 01 10cc Things We Do For Love ZKH07 07 10cc Wall Street Shuffle SFMW814 01 112 Dance With Me SC8726 09 112 It's Over Now SC3238 07 112 U Already Know SC3442 02 112 (Vocal) U Already Know SC3442 10 1910 Fruitgum Simon Says SFGD047 10 1927 Compulsory Hero SFHH02-5 10 1975 Chocolate SF326 13 1975 City SF329 16 1975 Love Me SF358 13 1975 Robbers SF341 12 1975 Sex MRH109 18 1975 Somebody Else SF367 13 1975 Sound SF361 08 1975 The City MRH106 06 1975 Ugh SF360 09 2 4 Family Lean On Me ET021 09 2 Evisa Oh La La La SF114 10 2 Live Crew Do Wah Diddy Diddy SC8700 11 2 Live Crew We Want Some Pussy SC8700 08 2 Pac California Love SF049 04 2 Pac California Love SC8875 08 2 Pac Thugz Mansion SC8805 01 2 Play Feat Careless Whisper MRH015-2 12 2 Unlimited No Limit SFD901-3 11 2 Unlimited No Limits SF006 05 20 Fingers Short Dick Man SC8169 07 21st Century 21st Century Girls SF140 10 Just a small selection of our songs Page 1 Some of our Karaoke songs ARTIST SONG MF CODE TRACK 2k Fierce Sweet Love SF159 04 3 Doors Down Away From The Sun SC8865 -

Blake Shelton

Country Update BILLBOARD.COM/NEWSLETTERS SEPTEMBER 28, 2020 | PAGE 1 OF 20 INSIDE BILLBOARD COUNTRY UPDATE [email protected] Brice, Urban ‘Speed’ Country Songwriters Sure Play A Mean To Chart Peaks >page 4 Pinball As Genre Strikes A New Chord Willie Nelson’s Country music, we’ve been told for years, is three chords and The “weird,” or unsafe, chords often heighten the sense of Virtual Farm Aid the truth. tension and release that makes music work. The major seventh >page 10 But in the current environment, the genre’s key, signature guitar intro in Old Dominion’s new single, “Never Be Sorry,” sound may be undergoing a minor adjustment. spikes a standard chord with a hint of dissonance. Dan + Shay’s “I guess now it’s nine chords and the truth,” says Old “I Should Probably Go To Bed” uses a major three chord, injecting Dominion’s Trevor Rosen with a laugh. a sharp note from outside the key signature that practically begs Dan, Shay, Combs Nine might be on the high side, but the truth is that country for resolution. The hook of Devin Dawson’s “I Got a Truck” Roll Sevens is growing more experimental ends in the first chorus with >page 11 in its chord structures. him singing a note that’s not Standard three-note triads actually in the underlying are subtly giving way to more chord, creating a discomfort complex four-note chords, and that matches the plot. Danielle Two Tenilles songwriters are increasingly Bradbery’s “Never Have I Take Trophies likely to inject a flat or a Ever” momentarily threads DAWSON DAN + SHAY TURSI >page 11 sharp into a chord that bends an Eastern-flavored pentatonic it ever so slightly out of the scale into a typical major-scale key signature. -

Ricky Roche Request List

RICKY ROCHE REQUEST LIST ABBA S.O.S. Barenaked Ladies Beck WATERLOO BRIAN WILSON DEAD MELODIES Bryan Adams ONE WEEK GUESS I’M DOING FINE EVERYTHING I DO (I DO FOR YOU) Bay City Rollers LOST CAUSE SUMMER OF '69 I ONLY WANT TO BE WITH YOU TROPICALIA THIS TIME Beach Boys Harry Belafonte Aerosmith ANGEL DISNEY GIRLS (1957) JUMP IN THE LINE CRYIN’ GOD ONLY KNOWS The Bee Gees A-Ha TAKE ON ME HELP ME RHONDA HOW DEEP IS YOUR LOVE Air Supply ALL OUT OF LOVE SLOOP JOHN B I STARTED A JOKE Alice In Chains NO EXCUSES WOULDN’T IT BE NICE MASSACHUSETTS WOULD? The Beatles NY MINING DISASTER 1941 The Allman Bros ACROSS THE UNIVERSE TO LOVE SOMEBODY AINT WASTIN TIME NO MORE BLACKBIRD WORDS BLUE SKY * MELISSA DEAR PRUDENCE Bellamy Brothers MIDNIGHT RIDER FOOL ON THE HILL LET YOUR LOVE FLOW America HERE COMES THE SUN Tony Bennett BECAUSE OF YOU SISTER GOLDEN HAIR HERE THERE & EVERYWHERE Ben Folds / Ben Folds Five VENTURA HIGHWAY HEY JUDE ARMY The Animals HEY YOU’VE GOT TO BATTLE OF WHO DONT LET ME BE HIDE YOUR LOVE AWAY BRICK COULD CARE LESS MISUNDERSTOOD I WILL FRED JONES part 2 HOUSE OF THE RISING SUN I’LL BE BACK THE LUCKIEST Adam Ant I’LL FOLLOW THE SUN MAGIC GOODY TWO SHOES I’M A LOSER SELFLESS COLD & COMPOSED Marc Anthony I NEED TO KNOW I’M LOOKING THROUGH YOU SONG FOR THE DUMPED Louis Armstrong I’M ONLY SLEEPING YOUR REDNECK PAST WHAT A WONDERFUL WORLD IT’S ONLY LOVE Beyonce SINGLE LADIES Asia HEAT OF THE MOMENT I’VE JUST SEEN A FACE Big Country IN A BIG COUNTRY SMILE HAS LEFT YOUR EYES IN MY LIFE Big Star THIRTEEN Rick Astley JULIA Stephen Bishop -

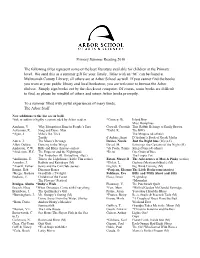

Primary Summer Reading 2010 the Following Titles Represent Some

Primary Summer Reading 2010 The following titles represent some of the best literature available for children at the Primary level. We send this as a summer gift for your family. Titles with an “M” can be found at Multnomah County Library; all others are at Arbor School as well. If you cannot find the books you want at your public library and local bookstore, you are welcome to browse the Arbor shelves. Simply sign books out by the check-out computer. Of course, some books are difficult to find, so please be mindful of others and return Arbor books promptly. To a summer filled with joyful experiences of many kinds, The Arbor Staff New additions to the list are in bold. *title or author is highly recommended by Arbor readers *Cooney, B. Island Boy Miss Rumphius Aardema, V. Why Mosquitoes Buzz in People’s Ears Crowell, Cressida That Rabbit Belongs to Emily Brown Ackerman, K. Song and Dance Man *Dahl, R. The BFG *Agee, J. Milo’s Hat Trick The Minpins (& others) Terrific d’Aulaire, Ingri D’Aulaire’s Book of Greek Myths Aiken, J. The Moon’s Revenge Davies, Nicola Just the Right Size (M) (n.f.) Allen, Debbie Dancing in the Wings Davol, M. Batwings the Curtains of the Night (M) Anderson, C.W. Billy and Blaze (horses--series) *de Paola, Tomie Strega Nona (& others) *Anderson, H.C. The Emperor and the Nightingale *Demi One Grain of Rice The Tinderbox (B. Ibatoulline, illus.) The Empty Pot *Ardizzone, E. Tim to the Lighthouse (Little Tim series) Eaton, Maxwell The Adventures of Max & Pinky (series) Arnosky, J. -

Karaoke Songs

Artist Title 311 All Mixed Up 311 Hey You 311 Love Song 702 Where Are My Girls At? Bruno Mars Mary You (Hed) Planet Earth Bartender 10 Years Wasteland 10,000 Maniacs More Than This 10,000 Maniacs These Are Days 100 Proof Aged In Soul Somebody's Been Sleeping 2 Live Crew Me So Horny 2 Live Crew We Want Some P*ssy 2 Pistols & Ray J. You Know Me 20 Fingers Short Dick Man 3 Doors Down Away From The Sun 3 Doors Down Be Like That 3 Doors Down Duck And Run 3 Doors Down Here By Me 3 Doors Down Here Without You 3 Doors Down It's Not My Time 3 Doors Down Kryptonite 3 Doors Down Landing In London 3 Doors Down Let Me Go 3 Doors Down Live For Today 3 Doors Down Loser 3 Doors Down When I'm Gone 3 Doors Down When You're Young 30 Seconds To Mars Closer To The Edge 30 Seconds To Mars The Kill 311 Down 38 Special Caught Up In You 38 Special Hold On Loosely 38 Special Rockin' Into The Night 38 Special Second Chance 3LW I Do 3OH!3 Don’t Trust Me 3oh!3 Starstrukk 3oh3 & Katy Perry Star Strukk 4 PM Sukiyaki 50 Cent 21 Questions 50 Cent Amusement Park 50 Cent Candy Shop 50 Cent If I Can't 50 Cent In Da Club 50 Cent P.I.M.P. 50 Cent Straight To The Bank 50 Cent Wanksta 5th Dimension Aquarius 5th Dimension Goodmorning Starshine 5th Dimension Last Night I Didn't Get To Sleep At All 5th Dimension Stoned Soul Picnic 8 Stops 7 Question Everything 98 Degrees I Do Cherish You 98 Degrees Invisible Man 98 Degrees The Hardest Thing A Day To Remember All Signs Point To Lauderdale A Day To Remember It's Complicated A Fine Frenzy Almost Lover A Flock Of Seagulls I Ran A Girl -

A History and a Claim

College of Saint Benedict and Saint John's University DigitalCommons@CSB/SJU Forum Lectures Forum 11-29-2018 Rock and roll’s first decade - - a history and a claim Thomas Woodward College of Saint Benedict/Saint John's University, [email protected] Follow this and additional works at: https://digitalcommons.csbsju.edu/forum_lectures Part of the History Commons, and the Music Commons Recommended Citation Woodward, Thomas, "Rock and roll’s first decade - - a history and a claim" (2018). Forum Lectures. 391. https://digitalcommons.csbsju.edu/forum_lectures/391 This Presentation is brought to you for free and open access by DigitalCommons@CSB/SJU. It has been accepted for inclusion in Forum Lectures by an authorized administrator of DigitalCommons@CSB/SJU. For more information, please contact [email protected]. Rock and Roll’s First Decade A History and a Claim NOTE Rock and roll’s source • Alan Freed’s “river of music which has absorbed many streams . all contributing to the big beat” • Ragtime • Jump Blues of the ’40s • Gospel • Jazz • Blues • Rhythm and Blues • Country • A stew (meat, vegetables, bay leaf) of different types of music • Rock continues to roll along, add ingredients, evolve • Hip-hop; sampling Key Words: Rhythm and blues; big beat • Louis Jordan, top R&B artist of the 1940s • Is You Is or Is You Ain’t (Ma Baby) 1944 • Choo Choo Ch’Boogie 1946 18 weeks at R&B #1; reached #7 on pop charts • Ain’t Nobody Here but Us Chickens 1946 R&B #1 • Saturday Night Fish Fry 1949 R&B #1 • Cab Calloway • The Honeydripper 1946 #3 -

Record Dedicated to Serving the Needs of the Music & Record Industry

' record Dedicated To Serving The Needs Of The Music & Record Industry Vol. 21, No. 995 July 2, 1966 In the opinion of the editors, this week the following records are the WHO IN SINGLE PICKS OF THE WEEK I(IIII(IIIII III(IIIC"Ilil lll it¡ ( IIIIIiIII II Zo1Y`OT IÍ - pnlihl THE WORLD . irti'.i.bIIII.JRIIIIIIWIIIIllllll 45 TON 902 THIS DOOR SWINGS BOTH WAYS ." MOTHERS TITTLE HELPER THE ROLLING STONES Cynical rock entry, "Mother's Nancy Sinatra sings about how Herman's Hermits' "This Door Little Helper," is about taking not great it is to be "Friday's Swings Both Ways" is a cute the easy way in modern living. Child." Bitter song emotionally philosophical ditty with plenty The Rolling Stones will continue sung by the gal, who just gets of pep. Another hit for the HH's to cause comment and sell better and better (Reprise 0491). in the easy -to-take side (MGM (London 902). 13548). SLEEPERS OF THE WEEK A Fast and funky new side from "Summer Kisses" is a summer Joe Hinton has a big r/ballad the Fuller Four. Group has "The song that should break through with brass section extra loud. Magic Touch" and could turn for the Floyd and Jerry duo. A Joe wails away with what should this into gold. Bobby and others nifty one to listen and dance to be enough to cause much action in raring to go r/r form (Mus- (Presta 1006). at the counter (Back Beat 565). tang 3018). .111.111".11.S OE THE 1I 'JI';Eti PIN..k DAN MOWN COUNTRY London Records' Rolling Stones Kicked Off Their U.S.