A Critical Engagement with Clark's Account of The

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Play Hard, Play Well! Tallahassee Duplicate Bridge Games ––– a Tribute to Paul! by Robert S

Play Hard, Play Well! ––– A Tribute to Paul! by Robert S. Todd

Paul Soloway, the all time leading ACBL-masterpoint winner, and a true “Gentleman of Bridge” passed away in November. Paul was an inspiration to players of all ages and skill levels. He truly enjoyed the game whenever he played it – young or old, sick or well, club game or World Championship! My fondest memory of Paul is from the 2005 World Championships in Estoril, Portugal. I was there kibitzing and writing about the Championships – my first.

Dealer: West. Vul. Vs NV. IMPs.
♠ A6543 ♥ -- ♦ J943 ♣ 9872
♠ KQJ1098 ♥ 942 ♦ K ♣ A43
The auction goes…

All we have to do is ruff the ♦9, return to dummy with a trump, and use the ♦J to discard one ♣ loser. Did you find that very nice line of play? If so, you joined the Japanese declarer from the 2001 Bermuda Bowl in being fooled by a Master – Paul Soloway! When you ruff the ♦9, LHO plays the ♦6. LHO’s diamond holding was ♦1062. The complete hand was A6543

Bridge Club Schedule

Tallahassee Duplicate Bridge Games. TDBC Contact: Steve Whitaker (850) 222-5797. http://home.earthlink.net/~judykb/tdbc.html. Senior Center • 1400 North Monroe Street. Monday 6:30 pm; Tuesday 1:30 pm; Wednesday 6:00 pm (Novice); Thursday 6:30 pm (Special Games Only). Westminster Oaks: Friday 1:00 pm; Sunday 1:30 pm. Bridge Classes: Contact Steve Whitaker, (850) 222-5797.

Panama City Beach Duplicate Bridge Games. Senior Center, Corner of Lyndell Ave & Hutchinson Blvd. Monday 6:00 pm (non-sanctioned); Tuesday 10:00 am (sanctioned); Thursday 10:00 am (sanctioned).

Panama City Duplicate Bridge Club Games

Building Bridges Across Financial Communities

Islamic Finance Project. BUILDING BRIDGES ACROSS FINANCIAL COMMUNITIES: The Global Financial Crisis, Social Responsibility, and Faith-Based Finance. S. Nazim Ali, Editor.

It has long been said that Islamic Finance needs its own innovative blueprints to follow the pulse of the industry. Discussions on Islamic Finance as an alternative to conventional finance or a competitor have reached a new milestone: recognition of their cooperation. As the field of Islamic Finance expands and diversifies, so does its ability to cross new venues and cultivate partnerships.

The Ninth Harvard University Forum, entitled “Building Bridges Across Financial Communities,” sought to explore these opportunities: What lessons on social responsibility can organizations find in Islamic Finance and other faith traditions? What can conventional banks and Islamic Finance institutions learn from each other, particularly after the financial crisis?

This volume, a selection of 11 papers presented at the Ninth Forum sponsored by the Islamic Finance Project at Harvard Law School, investigates these questions through original research on best practices across and between industries and faith traditions. Essays consider diverse topics ranging from the influence of religion on corporate social responsibility and individual financial choices to the parameters of hedging and portfolio diversification. As our writers suggest, there is reason to be optimistic about the future

Copyright Future Copyright Freedom: Marking the 40 Year Anniversary of the Commencement of Australia's Copyright Act 1968

COPYRIGHT FUTURE COPYRIGHT FREEDOM: Marking the 40th Anniversary of the Commencement of Australia’s Copyright Act 1968. Edited by Brian Fitzgerald and Benedict Atkinson, Queensland University of Technology.

COPYRIGHT. Published 2011 by SYDNEY UNIVERSITY PRESS, University of Sydney NSW 2006 Australia, sydney.edu.au/sup. Publication date: March 2011. © Individual authors 2011. © Sydney University Press 2011. ISBN 978–1–920899–71–4.

National Library of Australia Cataloguing-in-Publication entry. Title: Copyright future copyright freedom: marking the 40th anniversary of the commencement of Australia’s Copyright Act 1968 / edited by Brian Fitzgerald and Benedict Atkinson. ISBN: 9781920899714 (pbk.) Notes: Includes bibliographical references and index. Subjects: Australia. Copyright Act 1968. Copyright--Australia. Intellectual property--Australia. Other Authors/Contributors: Fitzgerald, Brian F. Atkinson, Benedict. Dewey Number: 346.940482.

The digital version of this book is also available electronically through the Sydney eScholarship Repository at: ses.library.usyd.edu.au and The Queensland University of Technology ePrints Repository at: eprints.qut.edu.au. Printed in Australia.

ACKNOWLEDGEMENTS. Cover image and design by Elliott Bledsoe. Cover Image credits: ‘USB Flash Drive’ by Ambuj Saxena, flic.kr/ambuj/345356294; ‘cassette para post’ by gabriel “gab” pinto, flic.kr/gabreal/2522599543; untitled by ‘Playingwithbrushes’, flic.krcom/playingwithpsp/3025911763; ‘iPod’s’ by Yeray Hernández, flic.kr/yerahg/498315719; ‘Presentation: Free Content for

The Cut out Girl Covered in Mementos

The Cut Out Girl

ALSO BY BART VAN ES: Shakespeare’s Comedies; Shakespeare in Company; A Critical Companion to Spenser Studies; Spenser’s Forms of History

The Cut Out Girl. BART VAN ES. Penguin Press, New York.

PENGUIN PRESS. An imprint of Penguin Random House LLC, 375 Hudson Street, New York, New York 10014. penguin.com. Copyright © 2018 by Bart van Es. Penguin supports copyright. Copyright fuels creativity, encourages diverse voices, promotes free speech, and creates a vibrant culture. Thank you for buying an authorized edition of this book and for complying with copyright laws by not reproducing, scanning, or distributing any part of it in any form without permission.

NTSB/AAB-88/05 PB88-916905 ~- Title and Subtitle Aircraft Accident Briefs - Brief Format U.S

JACK R. HUNT LIBRARY, DAYTONA BEACH, FLORIDA 32014 • 904-239-6595

TECHNICAL REPORT DOCUMENTATION PAGE
1. Report No.: NTSB/AAB-88/05
2. Government Accession No.: PB88-916905
3. Recipient's Catalog No.
4. Title and Subtitle: Aircraft Accident Briefs - Brief Format, U.S. Civil and Foreign Aviation, Calendar Year 1987 - Issue Number 1
6. Performing Organization Code
7. Author(s)
8. Performing Organization Report No.
9. Performing Organization Name and Address: Bureau of Field Operations, National Transportation Safety Board, Washington, D.C. 20594
10. Work Unit No.
11. Contract or Grant No.
12. Sponsoring Agency Name and Address: NATIONAL TRANSPORTATION SAFETY BOARD, Washington, D.C. 20594
13. Type of Report and Period Covered: Approximately 200 General Aviation and Air Carrier Accidents Occurring in 1986 in Brief Format
14. Sponsoring Agency Code
15. Supplementary Notes
16. Abstract: This publication contains selected aircraft accident reports in Brief Format occurring in U.S. civil and foreign aviation operations during Calendar Year 1987. Approximately 200 General Aviation and Air Carrier accidents contained in this publication represent a random selection. This publication is issued irregularly, normally eighteen times each year. The Brief Format represents the facts, conditions, circumstances and probable cause(s) for each accident. File Numbers: 0001 through 0200
17. Key Words: Aviation accident, probable cause, findings, certificate/rating, injuries, type of accident, type operating certificate, flight conducted under, accident occurred during, aircraft damage, basic weather
18. Distribution Statement: This document is available to the public through the National Technical Information Service, Springfield, Virginia 22161
19. Security Classification
20. Security Classification
21. No.

2017-18 St. Raphael CCW Newletters

St. Raphael’s Council of Catholic Women. Our Lady of Good Counsel, Pray for Us. 99th Edition CCW Newsletter, www.st-raphaels.com/ccw, September/October 2017.

St. Raphael’s Fall Festival: October 27-29th

From Father Tim, Our Pastor. Dear CCW members: Welcome back to another new year! The summer sure did go by fast. Later this month we will also welcome back the first day of fall and hopefully cooler weather soon thereafter. Anglo-Saxon Christians of old used to call September Halig-monath, meaning “holy month,” because of all the holy days crowded together, like the Nativity of the Blessed Virgin Mary (September 8th), the Exaltation of the Holy Cross (September 14th), and the Feast of the Archangels Michael, Gabriel, and Raphael, our patron (September 29th). I hope that this month will also be a holy month for you as the CCW begins their meetings and activities again.

CCW President’s Message. Welcome back to all our Council members. I especially want to welcome all our new members. I look forward to meeting many of you soon. We have a faith filled year planned with lots of fun events for everyone. There is something for everyone to help with. Each of you has a special talent or gift. I would especially like to encourage our school moms to help us find ways to help you. I'm hoping some of you can meet together and brainstorm

St. Raphael Catholic Church CCW OFFICERS. President: Denise DeBord. First Vice President: Susan Ugan. Second Vice President: Sandy Pennington. Treasurer: Judy Geier. Corresponding Secretary

How to Use This Songfinder

as of 3.14.2016

How To Use This Songfinder: We’ve indexed all the songs from 26 volumes of Real Books. Simply find the song title you’d like to play, then cross-reference the numbers in parentheses with the Key. For instance, the song “Ac-cent-tchu-ate the Positive” can be found in both The Real Book Volume III and The Real Vocal Book Volume II.

KEY
Unless otherwise marked, books are for C instruments. For more product details, please visit www.halleonard.com/realbook.

01. The Real Book – Volume I: C Instruments/00240221; Bb Instruments/00240224; Eb Instruments/00240225; BC Instruments/00240226; Mini C Instruments/00240292; Mini Bb Instruments/00240339; CD-ROM C Instruments/00451087; C Instruments with Play-Along Tracks Flash Drive/00110604
02. The Real Book – Volume II: C Instruments/00240222; Bb Instruments/00240227; Eb Instruments/00240228; BC Instruments/00240229; Mini C Instruments/00240293; CD-ROM C Instruments/00451088
03. The Real Book – Volume III: C Instruments/00240233; Bb Instruments/00240284
10. The Charlie Parker Real Book (The Bird Book)/00240358
11. The Duke Ellington Real Book/00240235
12. The Bud Powell Real Book/00240331
13. The Real Christmas Book – 2nd Edition: C Instruments/00240306; Bb Instruments/00240345; Eb Instruments/00240346; BC Instruments/00240347
14. The Real Rock Book/00240313
15. The Real Rock Book – Volume II/00240323
16. The Real Tab Book – Volume I/00240359
17. The Real Bluegrass Book/00310910
18. The Real Dixieland Book/00240355
19. The Real Latin Book/00240348
20. The Real Worship Book/00240317
21. The Real Blues Book/00240264
22.

1953 Zone Phone President: Mr.

GEOLOGICAL NEWS LETTER OFFICIAL PUBLICATION OF THE PORTLAND, OREGON Vol. 19. GEOLOGICAL NEWS-LETTER, Official Publication of the Geological Society of the Oregon Country, 703 Times Building, Portland 4, Oregon. POSTMASTER: Return Postage Guaranteed. PROPERTY OF STATE DEP'T OF GEOLOGY & MINERAL INDUSTRIES.

Officers of Executive Board, 1952 - 1953 (Zone, Phone)
President: Mr. Norris !J. Stone, 1645'1 S .l'. Glenmorrie Drive, Oswego, BL 1.-lj
Vice-Pres: Mr. Raymond L. Baldwin, 4804 S.W. Laurelwood Drive, 1, CH 145•
Secretary: Mrs. Johanna Simon, 7006 S.E. 21st Avenue, 2, EN 051,S
Treasurer: Mr. Robert F. Wilbur, 2020 S.E. Salmon Street, 15, V2, 72~
Directors: Mr. Rudolph Erickson (1955), Mr. E. Cleveland Johnson (1954), Mr. Louis E. Oberson (1953), Mr. A. D. Vance (1953), Mr. Ford E. Wilson (1954)

Staff of Geological News Letter
Editor Emeritus: Mr. Orrin i>. Stanley, 2601 S.E. 49th Avenue, 6, VE 125C
Editor: Mrs. Rudolph Erickson, ?..49 S.W. Glenmorrie Drive, Oswego, BL 1-lC
Asst. Editor: Mrs. Nay R. Bushby, 1202 S.W. Cardinell Drive, 1, CA 212;
Assoc. Editors: Mr. Phil Brogan, 11+26 Harmon Blvd., Bend, 266-; F. H. Libbey; Ford E. Wilson; A. D. Vance; Mrs. Albert Keen
l"lc,ra Editors: Leo F. Simon, Mrs. Leonard M. Buoy
Business Manager: Raymond L. Baldwin, 4804 S.W. Laurelwood Drive, 1

SOCIETY OBJECTIVES: To provide facilities for members of the Society to study Geology, particularly the geology of the Oregon Country.

From the Quarterdeck Stingray Point Regatta

August, 2000. FBYC Web Site: http://FBYC.tripod.com

From the Quarterdeck, by Mike Karn, Commodore. I need to clone myself. The club is in high gear preparing to host three major events in the next few weeks. There will be major racing and major parties this month! Start with the Lightning North American Junior, Women’s and Masters Championship being planned by Ron Buchannan, Debbie Cycotte and Bob Wardwell. Should be great! The Annual Regatta follows immediately after with a large cast of planners working their parts. The 61st running promises lots of boats and well run courses. The big regatta, Stingray Point, is almost here and things are

planning a Captains Choice in August, and the lively Onancock Cruise is around the corner. This has grown quickly to become a very popular event. Make plans now so you won’t be left out! Flush with success of Junior Week and the Leukemia Cup the membership scattered in July to race, cruise and vacation all over! The Juniors went on the road led by Anna and Cam to meet others, test their skills and explore other sailing venues in the Bay. Cruisers went exploring north. One Design racers enjoyed the coolish summer we’ve had and traveled to compete at clubs near and far.

Stingray Point Regatta: Notice of Race. Two Races Saturday, One Race Sunday. Classes: PHRF A/B/C/D Spinnaker, MORC, PHRF Non-Spinnaker. If sufficient yachts are registered the classes listed above may be subdivided, one-design classes added or classes combined. Trophies given for Daily, Overalls, Brent Halsey Jr. Memorial Trophy, Potts Team Challenge Trophy, and Sportsmanship Award. Five Star Dinner by Chef Maxwell, Saturday Night Dance, Mount Gay Rum and Hats! So come join the fastest

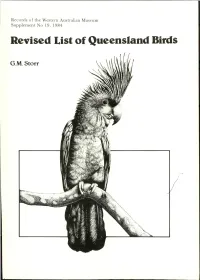

Revised List of Queensland Birds

Records of the Western Australian Museum, Supplement No. 19, 1984. Revised List of Queensland Birds. G. M. Storr. Perth 1984.

World List Abbreviation: Rec. West. Aust. Mus. Suppl. no. 19. Cover: Palm Cockatoo (Probosciger aterrimus), drawn by Jill Hollis. © Western Australian Museum 1984. ISBN 0 7244 8765 4. Printed and Published by the Western Australian Museum, Francis Street, Perth 6000, Western Australia.

TABLE OF CONTENTS: Introduction; List of birds; Gazetteer

INTRODUCTION. In 1967 I began to search the literature for information on Queensland birds: their distribution, ecological status, relative abundance, habitat preferences, breeding season, movements and taxonomy. In addition much unpublished information was received from Mrs H.B. Gill, Messrs J.R. Ford, S.A. Parker, R.L. Pink, R.K. Carruthers, L. Neilsen, D. Howe, C.A.C. Cameron, Bro. Matthew Heron, Dr D.L. Serventy and the late W.E. Alexander. These data formed the basis of the List of Queensland birds (Storr 1973, Spec. Publs West. Aust. Mus. No. 5). During the last decade the increase in our knowledge of Queensland birds has been such as to warrant a re-writing of the List. Much of this progress has been due to three things: (1) survey work by J.R. Ford, A. Greensmith and N.C.H. Reid in central Queensland and southern Cape York Peninsula (Ford et al. 1981, Sunbird 11: 58-70), (2) research into the higher categories of classification, especially C.G.

REMEMBERING the SPACE AGE ISBN 978-0-16-081723-6 For Sale by the Superintendent of Documents, U.S.

REMEMBERING the SPACE AGE. ISBN 978-0-16-081723-6. For sale by the Superintendent of Documents, U.S. Government Printing Office. Internet: bookstore.gpo.gov. Phone: toll free (866) 512-1800; DC area (202) 512-1800. Fax: (202) 512-2104. Mail: Stop IDCC, Washington, DC 20402-0001.

REMEMBERING the SPACE AGE. Steven J. Dick, Editor. National Aeronautics and Space Administration, Office of External Relations, History Division, Washington, DC, 2008. NASA SP-2008-4703.

Library of Congress Cataloging-in-Publication Data. Remembering the Space Age / Steven J. Dick, editor. p. cm. Includes bibliographical references. 1. Astronautics--History--20th century. I. Dick, Steven J. TL788.5.R46 2008 629.4’109045--dc22 2008019448

CONTENTS: Acknowledgments; Introduction

Landscape Was Changed-Expect 16.5. Abattoir Jobs Hinge on Report 9.6

N.M.H. INDEX 1979

ABATTOIRS
Brucellosis disease at abattoir unreported 19.1.
Day will decide on abattoirs 2.2.
Abattoir to get new meat chillers 24.2.
Abattoir odour task for Maitland Council 28.2.
Farley plan 11.4.
Meat union want abattoir reopened. 21.4.
Farley abattoir ruled out 10.5.
Abattoir improvements programme expected 11.5.
Abattoir saga 11.5.
Farley abattoir idea still alive 15.5.
Smaller Farley plan opposed 23.5.
Abattoir leasing may follow huge loss 24.5.
Maitland to stop odours from abattoirs 24.5.
The abattoir problems 28.5.
Resident group maintains policy on abattoir 29.5.
No open talks on abattoir yet 30.5.
Shorter week fear at Maitland abattoir 31.5.
Stock shortage hits boards 1.6.

ABORIGINES (Cont)
Arukun vote returns five 4.4.
Rock ruling 5.4.
Aboriginal land claim rejected 6.4.
P.M. given Rock petition 9.4.
'Splendid experiment' gets off ground 9.4.
Aborigine 'work ethic' wanted 10.4.
Ranch evicts Aborigines 10.4.
Aborigines worse off : Sth. Africa 17.4.
Qld. Aborigines feel betrayed 19.4.
U.S. envoy to meet upset Aborigines 27.4.
N.T. land act to change 28.4.
Experts find relics in black camp site 3.5.
Refuge fund 'directive' attacked 5.5.
Young promises blacks support 11.5.
Advisory council nominees sought 14.5.
$132,000 aid for outback children 14.5.
Tradition ended by death 14.5.
Fire custom theory backs Aborigines 15.5.
Awabakal body prepares five-year plan 16.5.
Landscape was changed-expect 16.5.