Secure Boot and Remote Attestation in the Sanctum Processor

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

A Superscalar Out-of-Order x86 Soft Processor for FPGA

Henry Wong, University of Toronto, Intel. June 5, 2019, Stanford University EE380.

Hi!
● CPU architect, Intel Hillsboro
● Ph.D., University of Toronto
● Today: x86 OoO processor for FPGA (Ph.D. work)
– Motivation
– High-level design and results
– Microarchitecture details and some circuits

FPGA: Field-Programmable Gate Array
● Is a digital circuit (logic gates and wires)
● Is field-programmable (at power-on, not in the fab)
● Pre-fab everything you’ll ever need
– 20x area, 20x delay cost
– Circuit building blocks are somewhat bigger than logic gates
[Figure: array of 6-LUT blocks]

FPGA Soft Processors
● FPGA systems often have software components
– Often running on a soft processor
● Need more performance?
– Parallel code and hardware accelerators need effort
– Less effort if soft processors got faster

Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes

Updated for Intel® Quartus® Prime Design Suite: 18.1.1 Standard Edition. RN-01080-18.1.1.0 | 2019.04.17

Contents
1. Intel® Quartus® Prime Design Suite Version 18.1 Update Release Notes
2. Issues Addressed in Update 1
2.1. Intel Quartus Prime Pro Edition Software
2.2. Intel Quartus Prime Standard Edition Software
2.3. IP and IP Cores
2.4. DSP Builder for Intel FPGAs
2.5. Intel High Level Synthesis Compiler
2.6. Intel FPGA SDK for OpenCL*
3. Issues Addressed in Update 2
3.1. Intel Quartus Prime Pro Edition Software
3.2. IP and IP Cores
3.3. Intel FPGA SDK for OpenCL

Intel® Industrial IoT Workshop: Security for Industrial Platforms

Intel® Industrial IoT workshop: Security for industrial platforms. Gopi K. Agrawal, Security Architect, IOTG Technical Sales & Marketing, Intel Corporation.

Legal. © 2018 Intel Corporation. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. No computer system can be absolutely secure. Check with your system manufacturer or retailer or learn more at www.intel.com. Intel and the Intel logo are trademarks of Intel Corporation in the U.S. and/or other countries. *Other names and brands may be claimed as the property of others.

Demystifying Internet of Things Security: Successful IoT Device/Edge and Platform Security Deployment

Sunil Cheruvu (Chandler, AZ, USA), Anil Kumar (Chandler, AZ, USA), Ned Smith (Beaverton, OR, USA), David M. Wheeler (Gilbert, AZ, USA). ISBN-13 (pbk): 978-1-4842-2895-1. ISBN-13 (electronic): 978-1-4842-2896-8. https://doi.org/10.1007/978-1-4842-2896-8

Copyright © 2020 by The Editor(s) (if applicable) and The Author(s). This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed.

Open Access. This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this book are included in the book’s Creative Commons license, unless indicated otherwise in a credit line to the material.

(10) Patent No.: US 9,037,807 B2

US009037807B2
(12) United States Patent: Vorbach
(10) Patent No.: US 9,037,807 B2
(45) Date of Patent: May 19, 2015
(54) PROCESSOR ARRANGEMENT ON A CHIP INCLUDING DATA PROCESSING, MEMORY, AND INTERFACE ELEMENTS
(75) Inventor: Martin Vorbach, Munich (DE)
(73) Assignee: srecinologies AG,
(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days.
(21) Appl. No.: 12/944,068
(22) Filed: Nov. 11, 2010
(65) Prior Publication Data: US 2011/0060942 A1, Mar. 10, 2011

Related U.S. Application Data
(60) Division of application No. 12/496,012, filed on Jul. 1, 2009, now abandoned, which is a continuation of application No. 10/471,061, filed as application No.

Priority data listed on the page:
Sep. 17, 2001 (DE) 101 45 792
Sep. 17, 2001 (DE) 101 45 795
Sep. 19, 2001 (DE) 101 46 132
Sep. 30, 2001 (WO) PCT/EP01/11299
Oct. 8, 2001 (WO) PCT/EP01/11593
Nov. 5, 2001 (DE) 101 54 259
Nov. 5, 2001 (DE) 101 54 260
Dec. 14, 2001 (EP) 01129923
Jan. 18, 2002 (EP) 02001331
Jan. 19, 2002 (DE) 102 02 044
Jan. 20, 2002 (DE) 102 02 175
Feb. 15, 2002 (DE) 102 02 653
Feb. 18, 2002 (DE) 102 06 856
Feb. 18, 2002 (DE) 102 06 857
Feb. 21, 2002 (DE) 102 07 224
Feb. 21, 2002 (DE) 102 07 225
Feb. 21, 2002 (DE) 102 07 226

(51) Int. Cl.: G06F 3/4 (2006.01); G06F 11/20 (2006.01); G06F 3/16 (2006.01); G06F 12/00 (2006.01)

Intel® Stratix® 10 General Purpose I/O User Guide

Updated for Intel® Quartus® Prime Design Suite: 21.2. UG-S10GPIO | 2021.07.07

Contents
1. Intel® Stratix® 10 I/O Overview
1.1. Intel Stratix 10 I/O and Differential I/O Buffers
1.2. Intel Stratix 10 I/O Migration Support
2. Intel Stratix 10 I/O Architecture and Features
2.1. I/O Standards and Voltage Levels in Intel Stratix 10 Devices
2.1.1. Intel Stratix 10 I/O Standards Support
2.1.2. Intel Stratix 10 I/O Standards Voltage Support
2.2. I/O Element Structure in Intel Stratix 10 Devices
2.2.1. I/O Bank Architecture in Intel Stratix 10 Devices
2.2.2. I/O Buffer and Registers in Intel Stratix 10 Devices
2.3. Programmable IOE Features in Intel Stratix 10 Devices
2.3.1. Programmable Output Slew Rate Control
2.3.2. Programmable IOE Delay

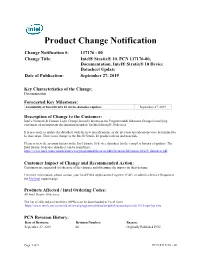

Product Change Notification

Change Notification #: 117176 - 00
Change Title: Intel® Stratix® 10, PCN 117176-00, Documentation, Intel® Stratix® 10 Device Datasheet Update
Date of Publication: September 27, 2019
Key Characteristics of the Change: Documentation
Forecasted Key Milestones: Availability of Intel Stratix 10 device datasheet update: September 27, 2019

Description of Change to the Customer: Intel’s Network & Custom Logic Group (formerly known as the Programmable Solutions Group) is notifying customers of an important documentation update for Intel Stratix® 10 devices. It is necessary to update the datasheet with the new specifications, as the previous specifications were determined to be inaccurate. There is no change to the Intel® Stratix 10 product silicon and materials. Please review the revision history in the Intel Stratix 10 device datasheet for the complete history of updates. The Intel Stratix 10 device datasheet can be found here: https://www.intel.com/content/dam/www/programmable/us/en/pdfs/literature/hb/stratix-10/s10_datasheet.pdf

Customer Impact of Change and Recommended Action: Customers are requested to take note of the changes and determine the impact on their designs. For more information, please contact your local Field Applications Engineer (FAE) or submit a Service Request at the My Intel support page.

Products Affected / Intel Ordering Codes: All Intel Stratix 10 devices. The list of affected part numbers (OPNs) can be downloaded in Excel form: https://www.intel.com/content/dam/www/programmable/us/en/pdfs/literature/pcn/adv1915-opn-list.xlsx

PCN Revision History: Date of Revision: September 27, 2019. Revision Number: 00. Reason: Originally Published.

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS.

CHERI Concentrate: Practical Compressed Capabilities

Jonathan Woodruff, Alexandre Joannou, Hongyan Xia, Anthony Fox, Robert Norton, Thomas Bauereiss, David Chisnall, Brooks Davis, Khilan Gudka, Nathaniel W. Filardo, A. Theodore Markettos, Michael Roe, Peter G. Neumann, Robert N. M. Watson, Simon W. Moore

Abstract: We present CHERI Concentrate, a new fat-pointer compression scheme applied to CHERI, the most developed capability-pointer system at present. Capability fat pointers are a primary candidate to enforce fine-grained and non-bypassable security properties in future computer systems, although increased pointer size can severely affect performance. Thus, several proposals for capability compression have been suggested elsewhere that do not support legacy instruction sets, ignore features critical to the existing software base, and also introduce design inefficiencies to RISC-style processor pipelines. CHERI Concentrate improves on the state-of-the-art region-encoding efficiency, solves important pipeline problems, and eases semantic restrictions of compressed encoding, allowing it to protect a full legacy software stack. We present the first quantitative analysis of compiled capability code, which we use to guide the design of the encoding format. We analyze and extend logic from the open-source CHERI prototype processor design on FPGA to demonstrate encoding efficiency, minimize delay of pointer arithmetic, and eliminate additional load-to-use delay. To verify correctness of our proposed high-performance logic, we present a HOL4 machine-checked proof of the decode and pointer-modify operations. Finally, we measure a 50% to 75% reduction in L2 misses for many compiled C-language benchmarks running under a commodity operating system using compressed 128-bit and 64-bit formats, demonstrating both compatibility with and increased performance over the uncompressed, 256-bit format.

Intel® Xeon® Scalable Processors (3rd Gen)

Performance made flexible. 3rd Gen Intel® Xeon® Scalable Platform Technology Preview. Lisa Spelman, Corporate Vice President, Xeon and Memory Group, Data Platforms Group, Intel Corporation.

Unmatched Portfolio of Hardware, Software and Solutions: Move Faster, Store More, Process Everything; Optimized Software and System-Level Solutions.

Announcing Today: Intel’s Latest Data Center Portfolio Targeting 3rd Gen Intel® Xeon® Scalable processors
● Intel® Optane™ SSD P5800X: Fastest SSD on the planet
● 3rd Gen Intel® Xeon® Scalable processor: Intel’s highest performing server CPU with built-in AI and security solutions
● Intel® Optane™ Persistent Memory 200 series: Up to 6TB memory per socket + Native data persistence
● Intel® Ethernet E810-2CQDA2: Up to 200GbE per PCIe 4.0 slot for bandwidth-intensive workloads
● Intel® Agilex™ FPGA: Industry leading FPGA logic performance and performance/watt
● Intel® SSD D5-P5316: First PCIe 4.0 144-Layer QLC 3D NAND; enables up to 1PB storage in 1U
● Optimized Solutions: >500 Partner Solutions

World’s Most Broadly Deployed Data Center Processor: From the cloud to the intelligent edge
● >1B Xeon processor cores deployed in the cloud since 2013 [1]
● >50M Intel® Xeon® Scalable processors shipped
● >800 cloud providers with Intel® Xeon® processor-based servers deployed [1]
Intel® Xeon® Scalable Processors are the Foundation for Multi-Cloud Environments
[1] Source: Intel internal estimate using IDC Public Cloud Services Tracker and Intel’s Internal Cloud Tracker, as of March 1, 2021.

Why Customers Choose

Application Specific Programmable Processors for Reconfigurable Self-Powered Devices

Teemu Nyländen. APPLICATION SPECIFIC PROGRAMMABLE PROCESSORS FOR RECONFIGURABLE SELF-POWERED DEVICES. ACTA UNIVERSITATIS OULUENSIS C Technica 651, OULU 2018. UNIVERSITY OF OULU GRADUATE SCHOOL; UNIVERSITY OF OULU, FACULTY OF INFORMATION TECHNOLOGY AND ELECTRICAL ENGINEERING.

UNIVERSITY OF OULU, P.O. Box 8000, FI-90014 UNIVERSITY OF OULU, FINLAND.

Listed on the cover: University Lecturer Tuomo Glumoff; University Lecturer Santeri Palviainen; Postdoctoral research fellow Sanna Taskila; Professor Olli Vuolteenaho; University Lecturer Veli-Matti Ulvinen; Planning Director Pertti Tikkanen; Professor Jari Juga; University Lecturer Anu Soikkeli; Professor Olli Vuolteenaho; Publications Editor Kirsti Nurkkala.

ISBN 978-952-62-1874-8 (Paperback), ISBN 978-952-62-1875-5 (PDF), ISSN 0355-3213 (Print), ISSN 1796-2226 (Online).

Academic dissertation to be presented, with the assent of the Doctoral Training Committee of Technology and Natural Sciences of the University of Oulu, for public defence in the Wetteri auditorium (IT115), Linnanmaa, on 7 May 2018, at 12 noon. UNIVERSITY OF OULU, OULU 2018.

Copyright © 2018 Acta Univ. Oul. C 651, 2018. Supervised by Professor Olli Silvén. Reviewed by Professor Leonel Sousa and Doctor John McAllister. Cover Design: Raimo Ahonen. JUVENES PRINT, TAMPERE 2018.

Nyländen, Teemu, Application specific programmable processors for reconfigurable self-powered devices. University of Oulu Graduate School; University of Oulu, Faculty of Information Technology and Electrical Engineering. Acta Univ.

Partner Directory Wind River Partner Program

PARTNER DIRECTORY: WIND RIVER PARTNER PROGRAM

The Internet of Things (IoT), cloud computing, and Network Functions Virtualization are but some of the market forces at play today. These forces impact Wind River® customers in markets ranging from aerospace and defense to consumer, networking to automotive, and industrial to medical. The Wind River® edge-to-cloud portfolio of products is ideally suited to address the emerging needs of IoT, from the secure and managed intelligent devices at the edge to the gateway, into the critical network infrastructure, and up into the cloud. Wind River offers cross-architecture support. We are proud to partner with leading companies across various industries to help our mutual customers ease integration challenges; shorten development times; and provide greater functionality to their devices, systems, and networks for building IoT. With more than 200 members and still growing, Wind River has one of the embedded software industry’s largest ecosystems to complement its comprehensive portfolio. Please use this guide as a resource to identify companies that can help with your development across markets. For updates, browse our online Partner Directory.

MARKET FOCUS

For an alphabetical listing of all members of the Wind River Partner Program, please see the Partner Index on page 139.

Partners listed: *Clavister, Cloudera, *Dell, *EnterpriseWeb

PCI Express High Performance Reference Design

AN-456-1.4 Application Note

The PCI Express High-Performance Reference Design highlights the performance of the Altera® Stratix® V Hard IP for PCI Express and IP Compiler for PCI Express™ MegaCore® functions. The design includes a high-performance chaining direct memory access (DMA) that transfers data between the internal memory of the Arria® II GX, Cyclone® IV GX, Stratix® IV GX, or Stratix V FPGA and the system memory. The reference design includes a Windows XP-based software application that sets up the DMA transfers. The software application also measures and displays the performance achieved for the transfers. This reference design enables you to evaluate the performance of the PCI Express protocol in an Arria II GX, Cyclone IV GX, Stratix IV GX, or Stratix V device.

Altera offers the PCI Express MegaCore function in both hard IP and soft IP implementations. The hard IP implementation is available as a root port or endpoint. Depending on the device used, the hard IP implementation is compliant with PCI Express Base Specification 1.1, 2.0, or 3.0. The soft IP implementation is available only as an endpoint. It is compliant with PCI Express Base Specification 1.0a or 1.1.

The remainder of this application note includes a tutorial on calculating the throughput of the PCI Express MegaCore function and instructions for running the chaining DMA design example. The chaining DMA in this reference design is the chaining DMA example generated by the PCI Express Compiler. This example is explained in detail in the Stratix V Hard IP for PCI Express User Guide for Stratix V devices and the PCI Express Compiler User Guide for earlier devices.