Some New Multi-Step Derivative-Free Iterative Methods for Solving Nonlinear Equations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Curriculum Vitae

CURRICULUM VITAE

PERSONAL INFORMATION
Name: Lubna Abid Ali
Nationality: Pakistani
Marital Status: Married
E-mail Address: [email protected]

EDUCATION
Ph.D International Relations 2003 Quaid-i-Azam University, Islamabad
Ph.D Middle East Studies Institute Research Work Columbia University 1994-1995 New York, U.S.A
Ph.D Political Science Course Work Washington University 1995-1996 St. Louis, U.S.A
M.Phil International Relations 1990 Quaid-i-Azam University, Islamabad
M.Sc International Relations 1975-1978 Quaid-i-Azam University, Islamabad
B.A Economics & Psychology 1975 Queen Mary College, Punjab University, Lahore

HONORS & AWARDS
1. Learning Innovation Workshop on Strategic Planning, Higher Education Commission, Islamabad 2011
2. Ambassador for Peace, Universal Peace Federation, United Nations, New York, U.S.A 2011
3. International Peace Award, Institute of Peace and Development (INSPAD), Brussels 2008
4. International Scholar Award, Institute of International Education, USA 1996
5. Gold Medal, Topped Q.A.U in M.Sc International Relations 1978
6. Gold Medal, Topped Punjab University in B.A 1975
7. Gold Medal, Topped Lahore Board in F.A 1972

CAREER DEVELOPMENT
Professor/HOD, Department of International Relations, 2017-To Date, National Defence University, Islamabad
Professor, School of Politics and International Relations, 2011-2014, Quaid-i-Azam University, Islamabad
Associate Professor, Department of International Relations, 2005-2011, Quaid-i-Azam University, Islamabad
Assistant Professor, Department of International Relations, 1994-2005, Quaid-i-Azam University, Islamabad
Visiting Fellow, Department of Political Science, 1995-1996, Washington University, St. Louis, U.S.A
Permanent Lecturer, Department of International Relations, 1987-1994, Quaid-i-Azam University, Islamabad
Visiting Scholar, Department of International Relations, 1984-1987, Quaid-i-Azam University, Islamabad

ADMINISTRATIVE EXPERIENCE
2010-2012 Director, School of Politics and International Relations, Quaid-i-Azam University, Islamabad.

Profile at Google Scholar

Dr Ihtsham Ul Haq Padda
Head of Department, HEC Approved Supervisor, Department of Economics, Federal Urdu University for Arts Science and Technology (FUUAST), Islamabad, Pakistan
Email: [email protected]
Profile at Google Scholar: https://scholar.google.com.pk/citations?hl=en&user=U9UiEuIAAAAJ&view_op=list_works&sortby=pubdate

Articles
1. IUH Padda, M Asim, What Determines Compliance with Cleaner Production? An Appraisal of the Tanning Industry in Sialkot, Pakistan, Environmental Science and Pollution Research, https://doi.org/10.1007/s11356 (2018)
2. IUH Padda; M Khan; TN Khan, The Impact of Government Expenditures on Human Welfare: An Empirical Analysis for Pakistan, University of Wah Journal of Social Sciences and Humanities, 01(1), (Accepted) (2018)
3. IUH Padda; A Hameed, Estimating Multidimensional Poverty Levels in Rural Pakistan: A Contribution to Sustainable Development Policies, Journal of Cleaner Production, 197, 435-442, (2018), [ISSN 0959-6526]
4. Y. Javed; IUH Padda; W Akram, An Analysis of Inclusive Growth for South Asia, Journal of Education & Social Sciences, 6(1): 110-122, (2018), [ISSN: 2410-5767 (Online) ISSN: 2414-8091]
5. R. Yasmeen; M. Hafeez; IUH Padda, Trade Balance and Terms of Trade Relationship: Evidence from Pakistan, Pakistan Journal of Applied Economics, Accepted, (2018) [ISSN: 0254-9204]
6. F. Safdar, IUH Padda, Impact of Institutions on Budget Deficit: The Case of Pakistan, NUML, International Journal of Business and Management, 12 (1), 77-88. (2017) [ISSN: 2410-5392]
7. M. Umer, IUH Padda, Linkage between Economic and Government Activities: Evidence for Wagner’s Law from Pakistan, Pakistan Business Review 18 (3), 752-773, (2016)
8. Husnain, et.

Research Output in Natural Sciences and Agriculture during 2010

Research Output in Natural Sciences and Agriculture during 2010 (revised 9/12/2011)

| University Name | Research Papers |
|---|---|
| University of Karachi, Karachi | 342 |
| University of Agriculture, Faisalabad | 299 |
| Govt. College University, Lahore | 233 |
| University of The Punjab, Lahore | 217 |
| University of Sargodha, Sargodha | 174 |
| COMSATS Institute of Information Technology, Islamabad | 148 |
| University of Peshawar, Peshawar | 137 |
| Bahauddin Zakariya University, Multan | 114 |
| KPK Agricultural University, Peshawar | 92 |
| National University of Science & Technology, Islamabad | 83 |
| University of Sind, Jamshoro | 79 |
| PMAS University of Arid Agriculture, Rawalpindi | 77 |
| Islamia University, Bahawalpur | 68 |
| Pakistan Institute of Engg & Applied Sciences, Islamabad | 67 |
| Govt. College University, Faisalabad | 60 |
| Kohat University of Science & Technology, Kohat | 55 |
| Federal Urdu University of Arts, Science & Technology, Islamabad | 53 |
| University of Engineering & Technology, Lahore | 52 |
| Hazara University, Dodhial | 46 |
| University of Baluchistan, Quetta | 40 |
| Lahore University of Management Sciences, Lahore | 38 |
| International Islamic University, Islamabad | 38 |
| Gomal University, D.I.Khan | 37 |
| Lahore College for Women University, Lahore | 35 |
| GIK Institute of Engineering Science & Technology, Topi | 33 |
| University of Malakand, Chakdara | 33 |
| University of Azad Jammu & Kashmir | 32 |
| Quaid-e-Azam University, Islamabad | 28 |
| Allama Iqbal Open University, Islamabad | 27 |
| Aga Khan University, Karachi | 23 |
| Abdul Wali Khan University, Mardan | 22 |
| University of Veterinary & Animal Sciences, Lahore | 17 |
| University of Gujrat, Gujrat | 16 |

Fatima Jinnah Women

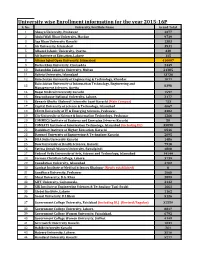

University Wise Enrollment Information for the Year 2015-16P

University wise Enrollment information for the year 2015-16P

| S. No. | University/Institute Name | Grand Total |
|---|---|---|
| 1 | Abasyn University, Peshawar | 4377 |
| 2 | Abdul Wali Khan University, Mardan | 9739 |
| 3 | Aga Khan University Karachi | 1383 |
| 4 | Air University, Islamabad | 3531 |
| 5 | Alhamd Islamic University, Quetta. | 338 |
| 6 | Ali Institute of Education, Lahore | 115 |
| 8 | Allama Iqbal Open University, Islamabad | 416607 |
| 9 | Bacha Khan University, Charsadda | 2449 |
| 10 | Bahauddin Zakariya University, Multan | 21385 |
| 11 | Bahria University, Islamabad | 13736 |
| 12 | Balochistan University of Engineering & Technology, Khuzdar | 1071 |
| 13 | Balochistan University of Information Technology, Engineering and Management Sciences, Quetta | 8398 |
| 14 | Baqai Medical University Karachi | 1597 |
| 15 | Beaconhouse National University, Lahore. | 2177 |
| 16 | Benazir Bhutto Shaheed University Lyari Karachi (Main Campus) | 753 |
| 17 | Capital University of Science & Technology, Islamabad | 4067 |
| 18 | CECOS University of IT & Emerging Sciences, Peshawar. | 3382 |
| 19 | City University of Science & Information Technology, Peshawar | 1266 |
| 20 | COMMECS Institute of Business and Emerging Sciences Karachi | 50 |
| 21 | COMSATS Institute of Information Technology, Islamabad (including DL) | 35890 |
| 22 | Dadabhoy Institute of Higher Education, Karachi | 6546 |
| 23 | Dawood University of Engineering & Technology Karachi | 2095 |
| 24 | DHA Suffa University Karachi | 1486 |
| 25 | Dow University of Health Sciences, Karachi | 7918 |
| 26 | Fatima Jinnah Women University, Rawalpindi | 4808 |
| 27 | Federal Urdu University of Arts, Science and Technology, Islamabad | 14144 |
| 28 | Forman Christian College, Lahore. | 3739 |
| 29 | Foundation University, Islamabad | 4702 |
| 30 | Gambat Institute of Medical Sciences Khairpur (Newly established) | 0 |
| 31 | Gandhara University, Peshawar | 1068 |
| 32 | Ghazi University, D.G. Khan | 2899 |
| 33 | GIFT University, Gujranwala. | 2132 |
| 34 | GIK Institute of Engineering Sciences & Technology Topi-Swabi | 1661 |
| 35 | Global Institute, Lahore | 1162 |
| 36 | Gomal University, D.I.Khan | 5126 |
| 37 | Government College University, Faisalabad (including DL) (Revised/Regular) | 32559 |

38 Government College University, Lahore.

Sports Directory for Public and Private Universities

Sports Directory for Public and Private Universities

Public Sector Universities

Federal:

1. Allama Iqbal Open University, Islamabad
Name of Sports Officer: There is no sports officer in this university, as per information received from the university. Designation: Office Phone No: Cell No: E-mail: Mailing Address:

2. Bahria University, Islamabad
Name of Sports Officer: Malik Bashir Ahmad. Designation: Dy. Director Sports. Office Phone No: 051-9261279. Cell No: 0331-2221333. E-mail: [email protected] Mailing Address: Bahria University, Naval Complex, E-8, Shangrila Road, Islamabad
Name of Sports Officer: Mirza Tasleem. Designation: Sports Officer. Office Phone No: 051-9261279. Cell No: E-mail: [email protected] Mailing Address: as above

3. International Islamic University, Islamabad
Name of Sports Officer: Muhammad Khalid Chaudhry. Designation: Sports Officer. Office Phone No: 051-9019656. Cell No: 0333-5120533. E-mail: [email protected] Mailing Address: Sports Officer, IIUI, H-12, Islamabad

4. National University of Modern Languages (NUML), Islamabad
Name of Sports Officer: No Sports Officer. Designation: Office Phone No: 051-9257646. Cell No: E-mail: Mailing Address: Sector H-9, Islamabad
Name of Sports Officer: Saeed Ahmed. Designation: Demonstrator. Office Phone No: 051-9257646. Cell No: 0335-5794434. E-mail: [email protected] Mailing Address: as above

5. Quaid-e-Azam University, Islamabad
Name of Sports Officer: M. Safdar Ali. Designation: Dy. Director Sports. Office Phone No: 051-90642173. Cell No: 0333-6359863. E-mail: [email protected] Mailing Address: Quaid-e-Azam University, Islamabad

6. National University of Sciences and Technology, Islamabad
Name of Sports Officer: Mrs.

Curriculum of Civil Engineering BS/BE MS/ME

CURRICULUM OF CIVIL ENGINEERING BS/BE MS/ME (Revised 2012)

HIGHER EDUCATION COMMISSION, ISLAMABAD

CURRICULUM DIVISION, HEC
Prof. Dr. Syed Sohail H. Naqvi, Executive Director
Mr. Muhammad Javed Khan, Adviser (Academic)
Malik Arshad Mahmood, Director (Curri)
Dr. M. Tahir Ali Shah, Deputy Director (Curri)
Mr. Farrukh Raza, Asst. Director (Curri)
Mr. Abdul Fatah Bhatti, Asst. Director (Curri)
Composed by: Mr. Zulfiqar Ali, HEC, Islamabad

CONTENTS
1. Introduction
2. Framework for 4-year in BS/BE in Civil Engineering
3. Scheme of Studies of Civil Engineering for BS/BE level
4. Details of Courses for BS/BE Civil Engineering
5. Course Outline for MS/ME in Structural Engineering
6. Course Outline for MS/ME in Hydraulics & Irrigation Engineering
7. Course Outline for MS/ME in Geo-Technical Engineering
8. Course Outline for MS/ME in Transportation Engineering
9. Recommendations
10. Annexure - A

PREFACE
The curriculum of a subject is described as the throbbing pulse of a nation. By viewing the curriculum, one can judge a nation's stage of development and the pace of its socio-economic development. With the advent of new technology, the world has turned into a global village. In view of the tremendous research taking place the world over, new ideas and information pour in like a stream of fresh water, making it imperative to update the curricula at regular intervals to introduce the latest developments and innovations in the relevant field of knowledge. In exercise of the powers conferred under Section 3 Sub-Section 2 (ii) of Act of Parliament No.

Minutes of the 28th Meeting of the General Council of the National Computing Education Accreditation Council, HEC, Islamabad

Minutes of the 28th Meeting of the General Council of the National Computing Education Accreditation Council, HEC, Islamabad

The 28th meeting of NCEAC (National Computing Education Accreditation Council) was held on Thursday, 15th March 2018 in Learning & Innovation Hall, HEC, H-8/1, Islamabad.

The following attended:
1. Dr Mohammad Ayub Alvi, Chairperson NCEAC
2. Dr Shoab Ahmad Khan, Vice Chairperson, NCEAC
3. Dr Aasia Khanum, Associate Professor, Forman Christian College, Lahore
4. Dr Faisal Ahmad Khan Kakar, Dean (Faculty of Sciences) BUITEMS, Quetta
5. Dr Jamil Ahmad, Vice Chancellor, Kohat University of Science & Technology, Kohat
6. Dr Manzoor Ilahi Tamimy, Associate Professor, CIIT, Islamabad
7. Dr Muhammad Ali Maud, Professor (CS & E Department), UET, Lahore
8. Dr Muhammad Jamil Sawar, Consultant, MTBC, Rawalpindi
9. Dr Sayeed Ghani, Associate Dean, IBA, Karachi
10. Dr Zartash Afzal Uzmi, Assoc. Prof., LUMS, Lahore
11. Mr Fahad Saeed Warsi, VC Engineer, Ministry of IT, Sindh
12. Mr Raza Sukhera, PM (IT/Internal Audit), Ministry of IT, Islamabad
13. Ms Humaira Qudus, Deputy Director, QAA, HEC
14. Mr Naseem Haider, Ass. Electronic Advisor, Ministry of Sci. & Tech., Islamabad
15. Mr Sajid Iqbal, Manager Domestic Business, PSEB, Islamabad

The following could not attend:
1. Dr Riaz Ahmad, Advisor, QAA, HEC
2. Chairman, Punjab IT Board, Lahore
3. President PASHA, Karachi
4. Secretary Ministry of Education, Gilgit-Baltistan
5. Secretary Information Technology Dept. Govt. of Balochistan, Quetta
6. Director, IT Dept. Govt. of KPK, Peshawar

The meeting of the General Council started with recitation of verses from the Holy Koran by Dr Manzoor Ilahi Tamimy. The Chairperson NCEAC welcomed the members and thanked them for attending the meeting.

University of Wah Annual Report 2015

UNIVERSITY OF WAH ANNUAL REPORT 2015

BISMILLAH IR-RAHMAN IR-RAHIM
“(I start) in the name of Allah, the Most Beneficent, the Most Merciful”

Table of Contents
Message from the Chancellor
Message from the Chairman Board of Governors
Message from the Vice Chancellor
Brief History of the University
Year in Review
University Management
Academic Activities
Academic Seminars / Conferences / Workshops
Research and Development
Quality Assurance
Faculty Development
Students Enrolment
University Building Economies

UOH Spotlight

Spotlight 2018
July - Dec 2018 | Volume 5 | Issue 2
The University of Haripur
Restoring Hope; Building Community

© 2018 – The University of Haripur. All rights reserved. All material appearing in this document is copyrighted and may be reproduced with permission. Any reproduction in full or in part of this publication must credit "The University of Haripur" as the copyright owner.

Document Produced by: Zia-ur-Rehman (Manager, UAC)
Edited, Rephrased and Formatted by: Zia-ur-Rehman (Manager, UAC)
Designed by: Zia-ur-Rehman (Manager, UAC)
Printed by: Gul Awan Printers, Islamabad.

EDITORIAL TEAM FOR DEVELOPING, DESIGNING & PRINTING OF SPOTLIGHT
Dr. Abid Farid, Vice Chancellor-UOH (Patron in Chief, Spotlight)
Salman Khan (Additional Director, P&D)
Zia-ur-Rehman (Manager, UAC)
Mudassar Ali Khan (Deputy Manager, UAC)

Editor’s Message
Dear Readers, I hope all of you are doing well. First of all, let me thank you for taking time out from your busy schedule to read the latest issue of Spotlight that is in your hands. This issue of Spotlight presents a brief description of various academic, research, co-curricular and extra-curricular activities during the last six months at University of Haripur.

... acknowledging the outstanding contributions of students, faculty and staff. No organization can successfully survive without the support of community, and we are thankful to our community for their continuous support and hope that they will continue to support us in future also.

Program Book

PROGRAM BOOK
International Conference on Innovation in Teaching and Learning (ICITL-2016)
April 25-26, 2016
Organized By: Department of Education, International Islamic University, in Collaboration with IRD & Higher Education Commission Pakistan
Venue: Old Campus, Quaid-e-Azam Auditorium, Faisal Mosque, Islamabad

Message of Rector
We, in the International Islamic University, Islamabad, consider ourselves privileged to be associated with this unique centre of learning in the Muslim world which strives to combine the essentials of the Islamic faith with the best of modern knowledge. I believe that quality of teaching is the most important factor which promotes academic excellence of any educational institution. I am really excited to welcome you to this exceptional academic event, the “International Conference on Innovation in Teaching and Learning (ICITL-2016)”. ICITL will provide a forum to the academicians, professionals and researchers to re-shape their knowledge about teaching and learning along with an intellectual and international linkage atmosphere. It will focus on the latest trends, issues and innovations in teaching and learning. I do hope that important issues will be discussed, analyzed and questioned during the International Conference on Innovation in Teaching and Learning.
Prof. Dr. Masoom Yasinzai
Rector, International Islamic University Islamabad

Message of President
International Islamic University, Islamabad, is in the process of embarking on a new phase in the history of the University. We are busy in making preparations for an expansion plan both in terms of education as well as in terms of physical development. The main task of the educational institutions is to provide quality education (teaching and research).

University of Engineering & Technology

Affiliated with University of Engineering & Technology (UET) Taxila
Accredited by Pakistan Engineering Council (PEC) under OBE System

VISION
“To be a quality institute committed to excellence for producing professional graduates and potential leaders to serve the humanity and contribute to socioeconomic development through their knowledge and skills.”

MISSION
“To deploy the best possible teaching practices and pursue excellence to produce professional graduates in an ethical environment for the development of prosperous society.”

Core values
• Outcome-Based Education
• Teamwork
• Discipline
• Integrity

1. Message from the Chairman
2. Message from the Principal
3. Organizational Setup
4. Introduction
5. Civil Engineering Departments
6. Electrical Engineering Department
7. Mechanical Engineering Department
8. Basic Sciences & Humanities Department
9. Teaching and Examinations
10. Migration
11. Students Discipline Rules

It is a well-known fact that Engineering and Technology is advancing very swiftly in this modern age, and that has completely revolutionized the industrial, agricultural and commercial sectors. Keeping in view the fast global technological advancements, we are committed to provide quality engineering and technical education.

... education in their chosen fields to serve their homeland, society and the nation as a whole. In order to create an enchanting, pleasant and learning environment, the purpose-built college building houses state-of-art laboratories, library, classrooms, sports facilities etc. We have enrolled highly qualified,

... desirous of seeking admission in B.Sc. Engineering Programs, information about the courses offered by Swedish College of Engineering and Technology, Wah Cantt. In addition, the information about the selection procedure, available seats, rules and procedures are also briefly stated here. We believe in strict

5th Ranking of Pakistani Higher Education Institutions (HEIs) 2015

HIGHER EDUCATION COMMISSION
5th Ranking of Pakistani Higher Education Institutions (HEIs) 2015
Announced: 23.2.2016

Objective
To create a culture of competition among the Higher Education Institutions (HEIs); to encourage HEIs to compete at the international level and improve the quality and standards of education; and to serve as a tool for HEIs' self-assessment of their performance for improvement.

Introduction
Rankings provide a complete picture to stakeholders such as researchers, students, the business community, parents and industry, allowing them to compare institutions according to the parameters they need, such as quality and research. For the development and progress of any country, quality of higher education is the key factor. HEIs are considered to be the originators of change and progress of nations. In order to strengthen the quality of higher education in Pakistan, the Higher Education Commission (HEC) has taken various initiatives to bring the HEIs of Pakistan on par with international standards. Ranking is one of the measures used to scale the success of the HEIs' efforts to achieve international competitiveness in education, research and innovation. Ranking is a debatable subject all over the world, in spite of the difficulties associated with it. This is the 5th ranking of Pakistani universities being announced by the Higher Education Commission of Pakistan. The previous four rankings were announced in 2006, 2012, 2013 and 2015.

Methodology
The methodologies for ranking have improved over time in the light of feedback received from HEIs, making HEC's ranking more compatible with global rankings. There is no change in the ranking criteria for the current year (2015); the criteria are the same as those used for the 2014 ranking.