The Mont-Blanc Approach Towards Exascale Prof

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Supercomputing Resources in BSC, RES and PRACE

www.bsc.es Supercomputing Resources in BSC, RES and PRACE. Sergi Girona, BSC-CNS. Barcelona, 23 September 2015. ICTS 2014, a step forward for the RES. Past RES members and resources:
BSC-CNS (MareNostrum): Processor: 6,112 8-core Intel SandyBridge EP E5-2670/1600 20M 2.6GHz, 84 Xeon Phi 5110P; Memory: 100.8 TB; Disk: 2000 TB; Network: Infiniband FDR10.
IAC (LaPalma II): Processor: 1,024 IBM PowerPC 970 2.3GHz; Memory: 2 TB; Disk: 14 + 10 TB; Network: Myrinet.
Universitat de València (Tirant II): Processor: 2,048 IBM PowerPC 970 2.3GHz; Memory: 2 TB; Disk: 56 + 40 TB; Network: Myrinet.
BSC-CNS (MinoTauro): Processor: 256 M2090 NVIDIA GPU, 256 Intel E5649 2.53 GHz 6-core; Memory: 3 TB; Network: Infiniband QDR.
Gobierno de Islas Canarias - ITC (Atlante): Processor: 336 PowerPC 970 2.3GHz; Memory: 672 GB; Disk: 3 + 90 TB; Network: Myrinet.
BSC-CNS (Altix): Processor: SMP 128 cores; Memory: 1.5 TB.
UPM (Magerit II): Processor: 3,920 (245x16) Power7 3.3GHz; Memory: 8700 GB; Disk: 190 TB; Network: Infiniband QDR.
Universidad de Málaga (Picasso): Processor: 82 AMD Opteron 6176, 96 Intel E5-2670, 56 Intel E7-4870, 32 GPUs Nvidia Tesla M2075; Memory: 21 TB; Disk: 600 TB Lustre + 260 TB; Network: Infiniband.
Universidad de Cantabria (Altamira II): Processor: 316 Intel Xeon CPU E5-2670 2.6GHz; Memory: 10 TB; Disk: 14 TB; Network: Infiniband.
Universidad de Zaragoza (CaesaraugustaII): Processor: 3072 AMD Opteron 6272 2.1GHz; Memory: 12.5 TB; Disk: 36 TB; Network: Infiniband.
New nodes. New RES members and HPC resources: CESGA (FINIS TERRAE II) FCSCL (Caléndula) Peak Performance: 256 Tflops Peak Performance: -

RES Updates, Resources and Access / European HPC Ecosystem

RES updates, resources and access. European HPC ecosystem. Sergi Girona, RES Coordinator. RES: HPC Services for Spain. • The RES was created in 2006. • It is coordinated by the Barcelona Supercomputing Center (BSC-CNS). • It forms part of the Spanish “Map of Unique Scientific and Technical Infrastructures” (ICTS). RES is made up of 12 institutions and 13 supercomputers: BSC MareNostrum, CESGA FinisTerrae, CSUC Pirineus & Canigo, BSC MinoTauro, UV Tirant, UC Altamira, UPM Magerit, CénitS Lusitania & SandyBridge, UMA Picasso, IAC La Palma, UZ CaesarAugusta, SCAYLE Caléndula, UAM Cibeles. [Figure: performance of each supercomputer in TFlop/s, logarithmic scale from 1 to 1000.] • Objective: coordinate and manage high performance computing services to promote the progress of excellent science and innovation in Spain. • It offers HPC services for non-profit, open R&D purposes. • Since 2006, it has granted more than 1,000 million CPU hours to 2,473 research activities. Research areas, hours granted per area: Astronomy, space and earth sciences (AECT) 23%; Mathematics, physics and engineering (FI) 30%; Life and health sciences (BCV) 19%; Chemistry and materials sciences (QCM) 28%. RES supercomputers: BSC (MareNostrum 4): 165888 cores, 11400 Tflops; main processors: Intel(R) Xeon(R) Platinum 8160; memory: 390 TB; disk: 14 PB. UPM (Magerit II): 3920 cores, 103 Tflops; main processors: IBM Power7 3.3 GHz; memory: 7840 GB; disk: 1728 TB. UMA (Picasso): 4016 cores, 84 Tflops; main processors: Intel SandyBridge-EP E5-2670; memory: 22400 GB; disk: 720 TB. UV (Tirant 3): 5376 cores, 111.8 Tflops -

La Difusió D

WARNING. Access to this thesis is subject to acceptance of the following conditions of use: Dissemination of this thesis through the TDX service (www.tesisenxarxa.net) has been authorized by the holders of the intellectual property rights solely for private use within research and teaching activities. Reproduction for profit is not authorized, nor is dissemination or provision from a site outside the TDX service. Presenting its content in a window or frame outside TDX (framing) is not authorized. This reservation of rights covers both the abstract presenting the thesis and its contents. When using or citing parts of the thesis, the name of the author must be indicated. The notice is repeated in Spanish with the same conditions for dissemination through the TDR service (www.tesisenred.net). -

Sistema De Recuperación Automática De Un Supercomputador Con Arquitectura De Cluster

Universidad Politécnica de Madrid, Facultad de Informática. Final-year project: Sistema de recuperación automática de un supercomputador con arquitectura de cluster (automatic recovery system for a supercomputer with a cluster architecture). Author: Juan Morales del Olmo. Supervisors: Pedro de Miguel Anasagasti, Óscar Cubo Medina. Madrid, September 2008. This document was typeset with LaTeX. Design by Óscar Cubo Medina. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 2.5 license. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/2.5/ or send a letter to Creative Commons, 559 Nathan Abbott Way, Stanford, California 94305, USA. "Ideas do not last long. Something must be done with them." Santiago Ramón y Cajal. To everyone I neglected during my degree. Synopsis: The continual growth in the computing needs of the scientific community is causing supercomputing centres to proliferate around the world. For some years now, the trend has been to build these machines with a cluster architecture. The UPM has one such computer: Magerit, the second most powerful supercomputer in Spain, which is hosted at CeSViMa and reaches 16 TFLOPS. The compute nodes of a system like this work intensively almost without rest, so they frequently develop problems. Node repair tasks consume a great deal of the CeSViMa administration team's time, and there are no tools to speed up this work. The aim of this project is to give Magerit a degree of autonomy so that it can recover its compute nodes automatically, without intervention from the system administrators. -

Computational Astrophysics Computer Architectures Alexander Knebe, Universidad Autonoma De Madrid Computational Astrophysics Computer Architectures

Computational Astrophysics: Computer Architectures. Alexander Knebe, Universidad Autonoma de Madrid. Topics: architectures, real machines, computing concepts, parallel programming. Architectures: a serial machine consists of a CPU and RAM. CPU: very primitive commands, obtained from compilers or interpreters of higher-level languages. Cycle chain: • fetch: get instruction and/or data from memory • decode: store instruction and/or data in register • execute: perform instruction. Arithmetical/logical instructions: +, -, *, /, bitshift, if. -
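The fetch, decode, execute cycle described in this excerpt can be illustrated with a toy interpreter. This is a minimal sketch, not taken from the slides: the function name `run`, the tuple instruction format, the opcode names, and the register names are all hypothetical choices made for illustration.

```python
# Toy serial machine: a program counter, a register file, and a program in "memory".
# Instruction format (hypothetical): (opcode, dest, a, b).

def run(program):
    """Execute a list of instruction tuples and return the final registers."""
    regs = {}
    pc = 0  # program counter: index of the next instruction in memory
    while pc < len(program):
        instruction = program[pc]         # fetch: get the instruction from memory
        opcode, dest, a, b = instruction  # decode: split into operation and operands
        pc += 1
        # execute: perform the arithmetical/logical instruction
        if opcode == "load":
            regs[dest] = a                # a is an immediate value
        elif opcode == "add":
            regs[dest] = regs[a] + regs[b]
        elif opcode == "sub":
            regs[dest] = regs[a] - regs[b]
        elif opcode == "mul":
            regs[dest] = regs[a] * regs[b]
        elif opcode == "shl":
            regs[dest] = regs[a] << b     # bitshift left by an immediate amount
        elif opcode == "jump_if_zero":
            if regs[a] == 0:              # the "if" instruction: conditional jump
                pc = b
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return regs


program = [
    ("load", "r0", 6, None),
    ("load", "r1", 7, None),
    ("mul", "r2", "r0", "r1"),
    ("shl", "r3", "r2", 1),
]
result = run(program)
print(result["r2"], result["r3"])
```

Each loop iteration is one pass through the cycle chain; a real CPU does the same with binary-encoded instructions and hardware registers rather than tuples and a dictionary.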

BSC-Microsoft Research Centre Overview Osman Unsal

BSC-Microsoft Research Centre Overview. Osman Unsal, Moscow, 11 June 2009. Outline: • Barcelona Supercomputing Center • BSC-Microsoft Research Centre. Barcelona Supercomputing Center. • Mission: investigate, develop and manage technology to facilitate the advancement of science. • Objectives: operate the national supercomputing facility; R&D in supercomputing and computer architecture; collaborate in R&D e-Science. • Consortium: the Spanish Government (MEC), the Catalonian Government (DURSI), the Technical University of Catalonia (UPC). Location. MareNostrum (2006): 10240 PowerPC 970 cores; 2560 JS21 2.3 GHz; 20 TB of memory; 8 GB per node; 380 TB storage capacity; 3 networks (Myrinet, Gigabit, 10/100 Ethernet); operating system: Linux 2.6 (SuSE). Spanish Supercomputing Network: Magerit, Altamira, CaesarAugusta, LaPalma, Picasso, Tirant. Access Committee and resource utilization. • Resource distribution: 20% internal use, 80% assigned via the Access Committee. • Unique Access Committee: 44 Spanish researchers; Core Team; 4 panels (Astrophysics, Space, and Earth Sciences; Biomedicine and Life Sciences; Physics and Engineering; Chemistry and New Materials); new applications every four months. [Figures: requests received and granted; total thousands of hours requested, granted (class A) and used, per period from 2006 P2 to 2008 P1; use by area of science.] Committee renewed -

Numerical Simulations of Massive Separated Flows: Flow Over a Stalled NACA0012 Airfoil

Numerical simulations of massive separated flows: flow over a stalled NACA0012 airfoil. A. Oliva, I. Rodríguez, O. Lehmkuhl, R. Borrell, A. Baez, C.D. Pérez-Segarra. Centre Tecnològic de Transferència de Calor, ETSEIAT - UPC. 7th RES Users' Conference, Barcelona, September 13th, 2013. Contents: 1 Previous work on DNS/LES of bluff bodies; 2 Numerical details; 3 Computational details (pre-processing, solving the problem, post-processing); 4 Results. Turbulent flow past bluff bodies. The turbulence problem. Motivations & objectives: Advance the understanding of the physics of turbulent flows. Gain insight into the mechanism of the shear-layer transition and its influence on the wake characteristics and on the unsteady forces on the bluff body surface. Contribute to the improvement of SGS modelling of complex flows by assessing the performance of models suitable for unstructured grids and complex geometries at high Re, as typically encountered in industrial applications. Previous results (1/2). Project: Direct Numerical Simulation of turbulent flows in complex geometries using unstructured meshes. Flow around a circular cylinder. Refs: FI-2008-2-0037 and FI-2008-3-0021. -

High Performance Computing for Electron Dynamics in Complex

Optimisation of the first principle code Octopus for massive parallel architectures: application to light harvesting complexes. Thesis Dissertation, April 23, 2015. Joseba Alberdi-Rodriguez ([email protected]). Supervisors: Dr. Javier Muguerza Rivero, Department of Computer Architecture and Technology, Faculty of Computer Science; Prof. Angel Rubio Secades, Department of Materials Physics, Faculty of Chemistry. eman ta zabal zazu. Universidad del País Vasco / Euskal Herriko Unibertsitatea. Servicio Editorial de la Universidad del País Vasco (UPV/EHU) - Euskal Herriko Unibertsitateko (UPV/EHU) Argitalpen Zerbitzua - University of the Basque Country (UPV/EHU) Press - ISBN: 978-84-9082-371-2. Knows no end Jakintzak ez duelako inoiz mugarik Familiari. Abstract: Computer simulation has become a powerful technique for assisting scientists in developing novel insights into the basic phenomena underlying a wide variety of complex physical systems. The work reported in this thesis is concerned with the use of massively parallel computers to simulate the fundamental features at the electronic structure level that control the initial stages of harvesting and transfer of solar energy in green plants, which initiate the photosynthetic process. Currently available supercomputer facilities offer the possibility of using hundreds of thousands of computing cores. However, obtaining a linear speed-up from HPC systems is far from trivial. Thus, great effort must be devoted to understanding the nature of the scientific code, the methods of parallel execution, data communication requirements in multi-process calculations, the efficient use of available memory, etc. This thesis deals with all of these themes, with a clear objective in mind: the electronic structure simulation of complete macro-molecular complexes, namely the Light Harvesting Complex II, with the aim of understanding its physical behaviour. -

MAP of Unique Scientific and Technical Infrastructures (ICTS)

MAP OF UNIQUE SCIENTIFIC AND TECHNICAL INFRASTRUCTURES (ICTS). GOBIERNO DE ESPAÑA, MINISTERIO DE ECONOMÍA Y COMPETITIVIDAD. FOREWORD
Spanish science and technology have reached a considerable level of excellence over the last three decades. The number of researchers has multiplied, we are now able to attract and retain talent, centres have been created for highly-competitive research, and companies have emerged that are capable of tackling projects that require cutting-edge technology. Our position as tenth in the world in scientific production, eighth if we consider the journals with the greatest impact, reflects the quality of our science. The growth that has characterised Spanish R&D over the last thirty years would not have been possible without facilities and infrastruc-
The Ministry of Economy and Competitiveness has promoted, in conjunction with the Autonomous Communities, an updated ICTS Map approved on 7 October 2014 by the Council of Scientific, Technological, and Innovation Policy, a coordination body for scientific and technological research in Spain. Thanks to a productive collaboration with the Autonomous Communities, we have joined forces to foster ICTS capacities, avoid redundancies, and boost their industrial use. Criteria of maximum scientific, technology, and innovation quality, submitting infrastructures to independent assessment by top-level international experts, have been taken into account to create this map.
Spain is a country of science, technology, and innovation. We participate in many of the most important global infrastructure sites and we are capable of competing for large international projects. Reading through the pages of this book will allow you to get an idea of our scientific and technological capabilities. I invite you to get to know our Unique Scientific and Technical Infrastructures, the ICTS, products of the effort of the scientific and technological community. Carmen Vela Olmo -

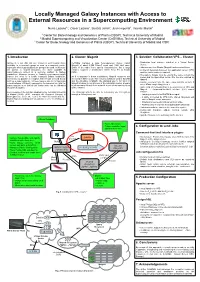

Locally Managed Galaxy Instances with Access to External Resources in a Supercomputing Environment

Locally Managed Galaxy Instances with Access to External Resources in a Supercomputing Environment. Nuria Lozano1,2, Oscar Lozano2, Beatriz Jorrin1, Juan Imperial3, Vicente Martin2. 1 Center for Biotechnology and Genomics of Plants (CBGP), Technical University of Madrid. 2 Madrid Supercomputing and Visualization Center (CeSViMa), Technical University of Madrid. 3 Center for Biotechnology and Genomics of Plants (CBGP), Technical University of Madrid and CSIC.
1. Introduction. Galaxy is a tool that can use resources well beyond those available to a research group or even to a research center, especially when huge datasets are going to be used. In this case, access to shared resources, like those available in supercomputing centers, is a welcome addition to Galaxy's capabilities. However, privacy or flexibility requirements might impose the need for a locally managed Galaxy installation, something not possible in a typical, batch-mode oriented facility
2. Cluster: Magerit. CeSViMa manages a large heterogeneous cluster, called Magerit, of about 4,000 Power7 cores and 1,000 Intel ones. Some of the nodes have special characteristics, like a large memory footprint or accelerators (either Xeon Phi or Nvidia K20x). As is standard in these installations, Magerit resources are accessed in batch mode. The resource manager used is SLURM
3. Solution: Collaboration VPS - Cluster.
● Production local instance installed in a Virtual Private Server.
● Jobs are sent to a cluster (Magerit) and executed there.
● A shared filesystem between the application server (VPS) and the cluster nodes is required.
● The path to Galaxy must be exactly the same on both the nodes and the application server. It is the one required by Magerit. -

Models, Methods and Tools for Availability Assessment of IT-Services and Business Processes

Models, Methods and Tools for Availability Assessment of IT-Services and Business Processes. Dr.-Ing. Nikola Milanovic. Habilitation thesis at Faculty IV - Electrical Engineering and Computer Science, Technische Universität Berlin. Subject area: Computer Science. Opening of the procedure: 15 July 2009. Award of the teaching qualification: 19 May 2010. Certificate issued: 19 May 2010. Reviewers: Prof. Dr. Volker Markl (Technische Universität Berlin), Prof. Dr. Miroslaw Malek (Humboldt Universität zu Berlin), Prof. Dr. Alexander Reinefeld (Humboldt Universität zu Berlin). Berlin, 2010. D 83. Abstract: In a world where on-demand and trustworthy service delivery is one of the main preconditions for successful business, service and business process availability is of paramount importance and cannot be compromised. For that reason, service availability is a central focus of IT operations management research and practice. This work presents foundations, models, methods and tools that can be used for comprehensive service and business process availability assessment and management. As many terms in this emerging field are used colloquially, Chapter 2 provides detailed background and definitions. The problem of service availability assessment and management is interdisciplinary, combining the fields of fault-tolerance, service oriented architecture and business process management. Another role of this chapter is to make the text accessible to readers with different backgrounds. After the context of service availability has been introduced, Chapter 3 presents existing models for availability and performability assessment.
The emphasis is on formal availability models, such as combinatorial (e.g., reliability block diagrams, fault trees) and state-space (e.g., Markov chains, Petri net) models, but qualitative models (e.g., maturity models such as ITIL or CobiT or standards such as ISO 27002 and ISO 12207) are also covered, albeit with limited scope as they are not the primary focus of this work. -

Introduction to OpenMP

Introduction to OpenMP. HW & SW for High Performance Computing. Master's Degree in Advanced Computing for Science and Engineering, FIM - 2012/13. Vicente Martín, v0.0. Contents: ● Overview: Architectures and Tools. ● OpenMP: the place of OpenMP among other HPC programming paradigms; memory and execution model; environment; directives. [Figure: taxonomy of HPC programming paradigms: OpenMP, UPC, HPF, MPI.] Example: cc-NUMA. Silicon Graphics Origin 2000: two processors sharing a common hierarchical memory. Caches are kept coherent (cc). The global shared memory space is unique. Access to memory banks physically located on remote boards goes through an interconnection network. The worst-case access time to local, on-board memory is 318 ns. If the machine has 64 nodes, the worst-case access time is 1067 ns. Example: multicomputer. Tarzan: nodes 5-6 are four-processor SMPs (PPC 604e); nodes 1-4 are single-processor Power2 SC. Each node of the IBM SP2 is a workstation with its own operating system controlling its separate address space. Access to a memory position belonging to another node requires communication between two independent operating systems through explicit message passing, in this case helped by specialised hardware: the High Performance Switch. Machines available for practice sessions: ● Magerit: multicomputer. Cluster architecture made up of 245 nodes with 16 Power7 cores each. Linux SLE. 8 TB RAM / 200 TB disk under GPFS. ● Triqui 1-2-3-4: Intel SMP, 8 CPUs (2 quad-cores). Linux (main machine for OpenMP). CeSViMa: Magerit. MPI practice sessions (limited OpenMP). Sustained performance >70 Tflops. Multicomputer: Linux SLE. Infiniband QDR: MPI interprocess communication. Gigabit Ethernet: file system (GPFS) + management. 245 compute nodes, 16 Power7 cores each.