User Interaction in Hybrid Multi-Display Environments

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Before the FEDERAL TRADE COMMISSION Washington, DC 20580

Before the FEDERAL TRADE COMMISSION Washington, DC 20580 In the Matter of ) ) Request for Public Comment on the ) P104503 Federal Trade Commission’s ) Implementation of the Children’s Online ) Privacy Protection Rule ) COMMENTS OF The Center for Digital Democracy, American Academy of Child and Adolescent Psychiatry, American Academy of Pediatrics, Benton Foundation, Berkeley Media Studies Group, Campaign for a Commercial-Free Childhood, Center for Science in the Public Interest, Children Now, Consumer Action, Consumer Federation of America, Consumer Watchdog, Consumers Union, National Consumers League, Privacy Rights Clearinghouse, Public Health Institute, U.S. PIRG, and World Privacy Forum Of Counsel: Angela J. Campbell, Guilherme Roschke Pamela Hartka Institute for Public Representation James Kleier, Jr. Georgetown University Law Center Raquel Kellert 600 New Jersey Avenue, NW Andrew Lichtenberg Washington, DC 20001 Ari Meltzer (202) 662-9535 Georgetown Law Students Attorneys for CDD et al. June 30, 2010 SUMMARY The Center for Digital Democracy, American Academy of Child and Adolescent Psychiatry, American Academy of Pediatrics, Benton Foundation, Berkeley Media Studies Group, Campaign for a Commercial-Free Childhood, Center for Science in the Public Interest, Children Now, Consumer Action, Consumer Federation of America, Consumer Watchdog, Consumers Union, National Consumers League, Privacy Rights Clearinghouse, Public Health Institute, U.S. PIRG, and World Privacy Forum are pleased that the FTC has begun a comprehensive review of its children’s privacy regulations. In general, the Children’s Online Privacy Protection Act (COPPA) and the FTC rules implementing it have helped to protect the privacy and safety of children online. Recent developments in technology and marketing practices require that the COPPA rules be updated and clarified. -

Chapter 2: Windows 7

Chapter 2: Windows 7 When you delete a file, a. A copy of the file will be sent to the desktop b. You send the file to the Recycle Bin. c. The file will not be affected. d. A copy of the file will be stored in your active folder. Which of the following statements is correct about arrange icon on desktop. a. Icons on desktop can be arranged by name. b. Icons on desktop can be arranged by type. c. Icons on desktop can be arranged by size. d. All of the above. Which of the following statements is correct about opening control panel. a. You can open control panel from windows explorer. b. You can open control panel from start menu. c. You can open control panel from my computer. d. All of the above. The documents that is located in start menu store. a. The last 15 files that you have open. b. The last 15 files that you have delete. c. The last 15 files that you have copy. d. None of the above. The desktop is: a. An example of a hardware device. b. A folder. c. A file d. A window. The Shutdown icon on start menu means: a. Close all windows. b. Close the current windows. c. Close your computer. d. None of the above. To open a minimized window, you can click on the:- a. window’s button on the body of the taskbar. b. maximized button on the title bar. c. restore button on the title bar. d. all of the above. -

The Video Game Industry an Industry Analysis, from a VC Perspective

The Video Game Industry An Industry Analysis, from a VC Perspective Nik Shah T’05 MBA Fellows Project March 11, 2005 Hanover, NH The Video Game Industry An Industry Analysis, from a VC Perspective Authors: Nik Shah • The video game industry is poised for significant growth, but [email protected] many sectors have already matured. Video games are a large and Tuck Class of 2005 growing market. However, within it, there are only selected portions that contain venture capital investment opportunities. Our analysis Charles Haigh [email protected] highlights these sectors, which are interesting for reasons including Tuck Class of 2005 significant technological change, high growth rates, new product development and lack of a clear market leader. • The opportunity lies in non-core products and services. We believe that the core hardware and game software markets are fairly mature and require intensive capital investment and strong technology knowledge for success. The best markets for investment are those that provide valuable new products and services to game developers, publishers and gamers themselves. These are the areas that will build out the industry as it undergoes significant growth. A Quick Snapshot of Our Identified Areas of Interest • Online Games and Platforms. Few online games have historically been venture funded and most are subject to the same “hit or miss” market adoption as console games, but as this segment grows, an opportunity for leading technology publishers and platforms will emerge. New developers will use these technologies to enable the faster and cheaper production of online games. The developers of new online games also present an opportunity as new methods of gameplay and game genres are explored. -

B426 Ethernet Communication Module B426 Ethernet Communication Module

Intrusion Alarm Systems | B426 Ethernet Communication Module B426 Ethernet Communication Module www.boschsecurity.com u Full two-way IP event reporting with remote control panel programming support u 10/100 Base-T Ethernet communication for IPv6 and IPv4 networks u NIST-FIPS197 Certified for 128-bit to 256-bit AES Encrypted Line Security u Plug and Play installation, including UPnP service to enable remote programming behind firewalls u Advanced configuration by browser, RPS, or A-Link The Conettix Ethernet Communication Modules are 1 four-wire powered SDI, SDI2, and Option bus devices 2 4 that provides two-way communication with compatible control panels over IPv4 or IPv6 Ethernet networks. 3 Typical applications include: • Reporting and path supervision to a Conettix 12 Communications Receiver/Gateway. • Remote administration and control with Remote 11 5 Programming Software or A-Link. 9 • Connection to building automation and integration applications. 10 8 6 System overview 7 The modules (B426/B426-M) are built for a wide variety of secure commercial and industrial applications. Flexible end-to-end path supervision, AES encryption, and anti-substitution features make the Callout ᅳ Description Callout ᅳ Description modules desirable for high security and fire monitoring 1 ᅳ Compatible Bosch control 7 ᅳ Conettix D6100i applications. Use the modules as stand-alone paths or panel Communications Receiver/ with another communication technology. Gateway and/or Conettix D6600 Communications Receiver/ Gateway (Conettix D6600 Communications -

The Control Panel and Settings in Windows 10 Most Programs and Apps Have Settings Specific to That Program

GGCS Introduction to Windows 10 Part 3: The Control Panel and Settings in Windows 10 Most programs and apps have settings specific to that program. For example, in a word processor such as Microsoft Word there are settings for margins, fonts, tabs, etc. If you have another word processor, it can have different settings for margins, fonts, etc. These specific settings only affect one program. The settings in the Control Panel and in Settings are more general and affect the whole computer and peripherals such as the mouse, keyboard, monitor and printers. For example, if you switch the right and left buttons on the mouse in the Control Panel or in Settings, they are switched for everything you click on. If you change the resolution of the monitor, it is changed for the desktop, menus, Word, Internet Explorer and Edge, etc. How to display the Control Panel 1. Right-click the Windows Start button or press the Windows key on the keyboard + X. 2. Click “Control Panel” on the popup menu as shown in the first screen capture. In Windows 10, many of the settings that once were in the Control Panel have moved to Settings. However, there are often links in Settings that take you back to the Control Panel and many other settings that still only exist in the Control Panel. Settings versus Control Panel is an evolving part of Windows design that started with Windows 8. It is not clear at this time whether the Control Panel will eventually go away or whether it will simply be used less frequently by most users. -

B9512G Control Panels B9512G Control Panels

Intrusion Alarm Systems | B9512G Control Panels B9512G Control Panels www.boschsecurity.com u Fully integrated intrusion, fire, and access control allows users to interface with one system instead of three u Provides up to 599 points using a combination of hardwired or wireless devices for installation flexibility, and up to 32 areas and 32 doors for up to 2,000 users u On-board Ethernet port for Conettix IP alarm communication and remote programming, compatible with modern IP networks including IPv6/ IPv4, Auto-IP, and Universal Plug and Play u Installer-friendly features for simple installation and communications, including plug-in PSTN and cellular communication modules u Remote Security Control app which allows users to control their security systems - and view system cameras - remotely from mobile devices such as phones and tablets The B9512G Control Panel and the B8512G Control • Monitor alarm points for intruder, gas, or fire alarms. Panel are the new premier commercial control panels • Program all system functions local or remote using from Bosch. B9512G control panels integrate Remote Programming Software (RPS) or the Installer intrusion, fire, and access control providing one simple Services Portal programming tool (available in user interface for all systems. Europe, Middle East, Africa, and China) or by using With the ability to adapt to large and small basic programming through the keypad. applications, the B9512G provides up to 599 • Add up to 32 doors of access control using the optional B901 Access Control Module. Optionally use individually identified points that can be split into 32 the D9210C Access Control Interface Module for up areas. -

How to Open Control Panel in Windows 10 Way 1: Open It in the Start Menu

Course Name : O Level(B4-Ist sem.) Subject : ITT&NB Topic : Control Panel Date : 27-03-20 Control Panel The Control Panel is a component of Microsoft Windows that provides the ability to view and change system settings. It consists of a set of applets that include adding or removing hardware and software, controlling user accounts, changing accessibility options, and accessing networking settings. How to open Control Panel in Windows 10 Way 1: Open it in the Start Menu. Click the bottom-left Start button to open the Start Menu, type control panel in the search box and select Control Panel in the results. Way 2: Access Control Panel from the Quick Access Menu. Press Windows+X or right-tap the lower-left corner to open the Quick Access Menu, and then choose Control Panel in it. Way 3: Go to Control Panel through the Settings Panel. Open the Settings Panel by Windows+I, and tap Control Panel on it. Way 4: Open Control Panel in the File Explorer. Click the File Explorer icon on the taskbar, select Desktop and double-tap Control Panel. Way 5: Open the program via Run. Press Windows+R to open the Run dialog, enter control panel in the empty box and click OK. Changing System Date and Time Step 1: Click the bottom-right clock icon on the taskbar, and select Date and time settings. Or we can right click the clock icon, click Adjust data /time. Step 2: As the Date and time Windows opens, we can turn off Set time automatically. Step 3: In the Date and Time Settings window, respectively change date and time, and then tap OK to confirm the changes. -

Licensing Guide

Licensing Guide Plesk licenses, editions and standard features ............................................................... 2 Plesk Onyx – Special Editions (2018) .................................................................................. 4 Plesk Onyx Licensing on Hyperscalers ............................................................................... 4 Extra Features, Feature Packs and Extensions ................................................................ 5 Available Plesk Feature Packs ............................................................................................ 6 Plesk-developed extensions ............................................................................................... 8 Third-party premium extensions.................................................................................... 14 Plesk licenses, editions and standard features Plesk uses a simple, flexible license model with loads of options: 1) Server-based licenses – example: Plesk licenses a. Installation on dedicated servers (also known as physical servers) b. Installation on virtual servers (also known as virtual private servers or VPS) 2) You can buy all our licenses on a monthly/annual basis - or in discounted bundles. You can end this license at any time and it renews automatically through our licensing servers. 3) All three editions of our server-based licenses present a number of core features: a. Plesk Web Admin Edition For Web & IT Admins who manage sites for an employer, business, or themselves. If you need simple -

A Case Study on Merger of Skype and Microsoft

European Journal of Business, Economics and Accountancy Vol. 8, No. 1, 2020 ISSN 2056-6018 VALUATION OF TARGET FIRMS IN MERGERS AND ACQUISITIONS: A CASE STUDY ON MERGER OF SKYPE AND MICROSOFT Nguyen Vuong Bang Tam Thu Dau Mot University VIETNAM [email protected] ABSTRACT Mergers and acquisitions have become the most popular used methods of growth for the company and it’s one of the best ways to make a shortcut to get the success. They create the larger potential market share and open it up to a more diversified market, increase competitive advantage against competitors. It also allows firms to operate more efficiently and benefit both competition and consumers. However, there are also many cases that the synergy between acquiring company and acquired company failed. The most common reason is to not create synergy between both of them. In recent months, the merger between Microsoft and Skype is a very hot topic of analysts and viewers…etc. This acquisition presents a big opportunity for both firms, Skype give Microsoft a boost in the enterprise collaboration. To exchange for this synergy, Microsoft paid $8.5 billion in cash for Skype, the firm is not yet profitable. Skype revenue totaling $860 million last year and operating profit of $264 million, the company lost $6.9 million overall, according to documents filed with the SEC. Is that a good deal for Microsoft? Many analysts have different point of view but most of them have negative perspective. Research was to provide the analysis of Skype’s intrinsic value with an optimistic view of point about Skype’s future, Microsoft overpaid for Skype. -

(BHC) Will Be Held Friday, September 25, 2020 10:00 AM - 12:00 PM

BALDWIN HILLS CONSERVANCY NOTICE OF PUBLIC MEETING The meeting of the Baldwin Hills Conservancy (BHC) will be held Friday, September 25, 2020 10:00 AM - 12:00 PM Pursuant to Executive Order N-29-20 issued by Governor Gavin Newsom on March 17, 2020, certain provisions of the Bagley Keene Open Meeting Act are suspended due to a State of Emergency in response to the COVID-19 pandemic. Consistent with the Executive Order, this public meeting will be conducted by teleconference and internet, with no public locations. Members of the public may dial into the teleconference and or join the meeting online at Zoom. Please click the link below to join the webinar: https://ca-water-gov.zoom.us/j/91443309377 Or Telephone, Dial: USA 214 765 0479 USA 8882780296 (US Toll Free) Conference code: 596019 Materials for the meeting will be available at the Conservancy website on the Meetings & Notices tab in advance of the meeting date. 10:00 AM - CALL TO ORDER – Keshia Sexton, Chair MEETING AGENDA PUBLIC COMMENTS ON AGENDA OR NON-AGENDA ITEMS SHOULD BE SUBMITTED BEFORE ROLL CALL Public Comment and Time Limits: Members of the public can make comments in advance by emailing [email protected] or during the meeting by following the moderator’s directions on how to indicate their interest in speaking. Public comment will be taken prior to action on agenda items and at the end of the meeting for non-agenda items. Individuals wishing to comment will be allowed up to three minutes to speak. Speaking times may be reduced depending upon the number of speakers. -

Quick Start Guide

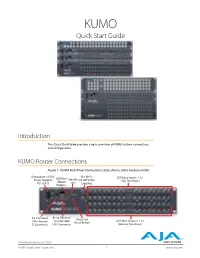

KUMO Quick Start Guide Introduction This Quick Start Guide provides a basic overview of KUMO system connections and configuration. KUMO Router Connections Figure 1. KUMO Rear Panel Connections (3232 shown, other models similar) Redundant 12 VDC REF BNCs USB Port SDI Video Inputs 1-32 Power Supplies Identify Ext Ref Video (Top Two Rows) PS1 & PS2 (Newer LED Looping KUMOs) RS-422 Serial RJ-45 Ethernet Recessed SDI Video Outputs 1-32 9 Pin Female 10/100/1000 Reset Button D Connector LAN Connector (Bottom Two Rows) Published January 30, 2020 KUMO Quick Start Guide v4.6 1 www.aja.com PS 1 & PS 2 Power Connectors Power to the KUMO unit is supplied by an external power supply module that accepts a 110-220VAC, 50/60Hz power input and supplies +12 VDC to KUMO via connector PS1 or PS2. One power supply is provided, and it may be connected to either of the two power connectors. An optional second power supply can provide redundancy to help protect against outages. IMPORTANT: The power connector has a latch, similar to an Ethernet connector. Depress the latch (facing the outside edge of the KUMO device) before disconnecting the power cable from the unit. Power Loss Recovery If KUMO experiences a loss of power, when power is restored the router returns to the previous state of all source to destination crosspoints, and all configured source and destination names are retained. If a KUMO control panel configured with a KUMO router loses power, when power is restored the control panel’s configuration is retained, and button tallies will return to their previous states. -

Game Developers Conference Europe Wrap, New Women’S Group Forms, Licensed to Steal Super Genre Break Out, and More

>> PRODUCT REVIEWS SPEEDTREE RT 1.7 * SPACEPILOT OCTOBER 2005 THE LEADING GAME INDUSTRY MAGAZINE >>POSTMORTEM >>WALKING THE PLANK >>INNER PRODUCT ART & ARTIFICE IN DANIEL JAMES ON DEBUG? RELEASE? RESIDENT EVIL 4 CASUAL MMO GOLD LET’S DEVELOP! Thanks to our publishers for helping us create a new world of video games. GameTapTM and many of the video game industry’s leading publishers have joined together to create a new world where you can play hundreds of the greatest games right from your broadband-connected PC. It’s gaming freedom like never before. START PLAYING AT GAMETAP.COM TM & © 2005 Turner Broadcasting System, Inc. A Time Warner Company. Patent Pending. All Rights Reserved. GTP1-05-116-104_mstrA_v2.indd 1 9/7/05 10:58:02 PM []CONTENTS OCTOBER 2005 VOLUME 12, NUMBER 9 FEATURES 11 TOP 20 PUBLISHERS Who’s the top dog on the publishing block? Ranked by their revenues, the quality of the games they release, developer ratings, and other factors pertinent to serious professionals, our annual Top 20 list calls attention to the definitive movers and shakers in the publishing world. 11 By Tristan Donovan 21 INTERVIEW: A PIRATE’S LIFE What do pirates, cowboys, and massively multiplayer online games have in common? They all have Daniel James on their side. CEO of Three Rings, James’ mission has been to create an addictive MMO (or two) that has the pick-up-put- down rhythm of a casual game. In this interview, James discusses the barriers to distributing and charging for such 21 games, the beauty of the web, and the trouble with executables.