Visual Menu Techniques Gilles Bailly, Éric Lecolinet, Laurence Nigay

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Class 715 Data Processing: Presentation Processing of Document, Operator Interface Processing, and Screen Saver Display Processing

CLASS 715 DATA PROCESSING: PRESENTATION PROCESSING OF DOCUMENT, OPERATOR INTERFACE PROCESSING, AND SCREEN SAVER DISPLAY PROCESSING

200 PRESENTATION PROCESSING OF DOCUMENT
201 .Integration of diverse media
202 ..Authoring diverse media presentation
203 ..Synchronization of presentation
204 ..Presentation attribute (e.g., layout, etc.)
205 .Hypermedia
206 ..Hyperlink organization (e.g., grouping, bookmarking, etc.)
207 ..Hyperlink display attribute (e.g., color, shape, etc.)
208 ..Hyperlink editing (e.g., link authoring, rerouting, etc.)
209 .Compound document
210 ..Matching application process to displayed media
211 .Drawing
212 .Spreadsheet
213 ..Alternative scenario management
214 ..Having dimension greater than two
215 ..Including graph or chart of spreadsheet information
216 ..Cell protection
236 ..Stylesheet based markup language transformation/translation (e.g., to a published format using stylesheet, etc.)
237 ..Markup language syntax validation
238 ..Accommodating varying screen size
239 ..Conversion from one markup language to another (e.g., XML to HTML or utilizing an intermediate format, etc.)
240 ..Frames
241 ..Placemark-based indexing
242 ..Structured document compression
243 .Layout
244 ..Spacing control
245 ...Horizontal spacing
246 ..Area designation
247 ..Boundary processing
248 ..Format information in separate file
249 ..Format transformation
250 ..Detecting format code change
251 ..Pagination

Organizing Windows Desktop/Workspace

Organizing Windows Desktop/Workspace

Instructions: Below are the different places in Windows that you may want to customize. On your lab computer, go ahead and set up the environment in different ways to see how you’d like to customize your work computer.

Start Menu and Taskbar
● Size: Click on the Start icon (bottom left). As you move your mouse to the edges of the Start Menu window, your mouse icon will change to a resize icon. Click and drag the mouse to the desired Start Menu size.
● Open the Start Menu and pin apps to the Start Menu or Taskbar: find the app in the list, right-click it, and select “Pin to Start” or “More” -> “Pin to Taskbar”, OR click and drag the icon to the Tiles section.
● Drop Tiles on top of each other to create folders of apps.
● Right-click on a Tile (for example, the Weather Tile) to resize it (maybe for apps you use more often) or to turn on live tiles, which update automatically in the Tile (not available for all Tiles).
● Right-click applications in the Taskbar to view “jump lists” for certain applications, which can show recently used documents, visited websites, or other application options.
● If you prefer using the keyboard for opening apps, you probably won’t need to customize the Start Menu. Simply hit the Windows key and start typing the name of the application to open, then hit Enter when it is highlighted. As you repeat the same searches, the most used apps will show up as the first selection.

Toga Documentation Release 0.2.15

Toga Documentation, Release 0.2.15
Russell Keith-Magee, Aug 14, 2017

Contents
1 Table of contents: 1.1 Tutorial, 1.2 How-to guides, 1.3 Reference, 1.4 Background
2 Community: 2.1 Tutorials, 2.2 How-to Guides, 2.3 Reference, 2.4 Background, 2.5 About the project

Toga is a Python native, OS native, cross platform GUI toolkit. Toga consists of a library of base components with a shared interface to simplify platform-agnostic GUI development. Toga is available on Mac OS, Windows, Linux (GTK), and mobile platforms such as Android and iOS.

CHAPTER 1 Table of contents
Tutorial: Get started with a hands-on introduction to pytest for beginners
How-to guides: Guides and recipes for common problems and tasks
Reference: Technical reference - commands, modules, classes, methods
Background: Explanation and discussion of key topics and concepts

CHAPTER 2 Community
Toga is part of the BeeWare suite. You can talk to the community through:
• @pybeeware on Twitter

Citrix VDI—Opening and Closing

How To: Citrix VDI - Opening and Closing

To provide better and more efficient administration of the GIS Citrix environment, a new means of access is being implemented. Once this new process is in place, the current means of GIS Citrix access will no longer be available. Users can access multiple programs via the new Citrix VDI (Virtual Desktop Infrastructure). This exercise explains how to: 1) access the new Citrix VDI environment, 2) log in to ArcFM, 3) retrieve a stored display, and 4) properly exit the system.

Access Citrix VDI
1. Open an Internet Explorer window.
2. Type the following URL: http://citrix.cpsenergy.com
3. Press Enter on your keyboard. Note: Consider saving the URL as a favorite, such as “GIS Citrix Receiver.”
4. When the Citrix application opens, select DESKTOPS from the top menu bar.
5. Click on Details to create the Prod VDI-Corp icon as a Favorite.
6. Click “Add to Favorites” then click “Open” to continue.
7. Use your NT username and password to sign in. The Prod VDI-Corp Desktop will then open and resemble the following image.
Notes: If your mouse pointer is not showing in the VDI screen, simply click into the area and the pointer will appear. If an MSN.com website loads, close it. At the top of the Desktop is the XenDesktop toolbar.
8. Select and review the XenDesktop toolbar. A variety of tool tasks will appear. Select “Home” to minimize the VDI and return to the local desktop home screen.
Note: An initial DPI screen resolution setting is required for first-time users.

WIMP Interfaces for Emerging Display Environments

Graz University of Technology, Institute for Computer Graphics and Vision. Dissertation: WIMP Interfaces for Emerging Display Environments. Manuela Waldner. Graz, Austria, June 2011. Thesis supervisor: Prof. Dr. Dieter Schmalstieg. Referee: Prof. Dr. Andreas Butz. To Martin.

Abstract: With the availability of affordable large-scale monitors and powerful projector hardware, an increasing variety of display configurations can be found in our everyday environments, such as office spaces and meeting rooms. These emerging display environments combine conventional monitors and projected displays of different size, resolution, and orientation into a common interaction space. However, the commonly used WIMP (windows, icons, menus, and pointers) user interface metaphor is still based on a single pointer operating multiple overlapping windows on a single, rectangular screen. This simple concept cannot easily capture the complexity of heterogeneous display settings. As a result, the user cannot facilitate the full potential of emerging display environments using these interfaces. The focus of this thesis is to push the boundaries of conventional WIMP interfaces to enhance information management in emerging display environments. Existing and functional interfaces are extended to incorporate knowledge from two layers: the physical environment and the content of the individual windows. The thesis first addresses the technical infrastructure to construct spatially aware multi-display environments and irregular displays. Based on this infrastructure, novel WIMP interaction and information presentation techniques are demonstrated, exploiting the system's knowledge of the environment and the window content.
These techniques cover two areas: spatially-aware cross-display navigation techniques for efficient information access on remote displays and window management techniques incorporating knowledge of display form factors and window content to support information discovery, manipulation, and sharing.

Tracking Menus

Tracking Menus
George Fitzmaurice, Azam Khan, Robert Pieké, Bill Buxton, Gordon Kurtenbach
Alias|wavefront, 210 King Street East, Toronto, Ontario M5A 1J7, Canada
E-mail: {gf | akhan | rpieke | gordo}@aw.sgi.com; [email protected]

ABSTRACT
We describe a new type of graphical user interface widget, known as a “tracking menu.” A tracking menu consists of a cluster of graphical buttons, and as with traditional menus, the cursor can be moved within the menu to select and interact with items. However, unlike traditional menus, when the cursor hits the edge of the menu, the menu moves to continue tracking the cursor. Thus, the menu always stays under the cursor and close at hand.

In this paper we define the behavior of tracking menus, show unique affordances of the widget, present a variety of examples, and discuss design characteristics. We examine one tracking menu design in detail, reporting on usability studies and our experience integrating the technique into a …

In keyboard-based systems, alternate ways of switching between tools (keyboard accelerator techniques) are typically provided to reduce travel time. For example, in Adobe Photoshop, a very popular feature is an accelerator technique in which the system switches from the current tool to the pan tool when the user depresses the space bar key. Thus, trips to and from the tool palette are not necessary to use the panning tool.

In pen-only systems, there is no keyboard available and therefore other techniques are required to reduce travel time. A pen-barrel button could be used to switch tools but these buttons are often mistakenly pressed, causing an error, or are very awkward to press.

Sample Paper Title

Marking the City: Interactions in Multiple Space Scales in Virtual Reality
Marina Lima Medeiros

Fig. 1. Desktop Menu in Following VR Viewer mode while the HMD user creates a Marker with Spatial Menu and Radial Menu options.

Abstract: This article gives an overview of an immersive VR application to explore, analyze, and mark large-scale photogrammetric 3D models. The objective is to interrogate the effectiveness of the proposed navigation process and annotation tools for spatial understanding. Due to the number of interactions, different kinds of menus were necessary: a desktop coordination menu, a radial menu attached to the controller, and a 3D spatial menu for creating markers. Besides the menus, the navigation tasks through different perception space scales required a great number of interaction metaphors, patterns, and techniques, displaying the complexity of the user experience in Virtual Reality for understanding and analyzing urban digital twins. The interactions allowed by the user interface were then analysed and classified according to a theoretical background and were tried out in preliminary tests with end users. Although designed for the particular needs of the army, the tools and interactions can be adapted for city model exploration and urban planning. As a future step of the research, a usability study will be performed to test the performance of the interface and to gather more end-user feedback.

Index Terms: Immersive User Interface, Interaction pattern, Interaction technique, Digital Urban Twins.

1 INTRODUCTION
Digital twins’ technologies for simulating cities are a powerful tool for planners and stakeholders. They are especially important for the development of smart cities.

… understanding. This research proposes an immersive VR application with navigation options through different space scales and annotation …

Design Patterns and Frameworks for Developing WIMP+ User Interfaces

Design Patterns and Frameworks for Developing WIMP+ User Interfaces

Dissertation approved by the Department of Computer Science (Fachbereich Informatik) of the Technische Universität Darmstadt for the academic degree of Doktor-Ingenieurin (Dr.-Ing.), submitted by Yongmei Wu, M.Sc., born in Guizhou, China. Referees: Prof. Dr.-Ing. Hans-Jürgen Hoffmann, Prof. Robert J.K. Jacob, Ph.D. Date of submission: 09.10.2001. Date of oral examination: 11.12.2001. D17, Darmstädter Dissertation 2001.

Abstract: This work investigates models and tools that support the development of a kind of future user interface that is partially built upon the WIMP (Windows, Icons, Menus, and Pointing device: the mouse) interaction techniques and devices, and is able to observe and leverage at least one controlled process under the supervision of its user(s). In this thesis, they are called WIMP+ user interfaces. A large variety of applications deal with WIMP+ user interfaces, e.g., robot control, telecommunication, car driver assistant systems, distributed multi-user database systems, automation rail systems, etc. First, the work studies the evolution of user interfaces, deduces the innovative functions of future user interfaces, and defines WIMP+ user interfaces. Then, it investigates high-level models for user interface realization. Since the most promising user-centered design methodology is a newly emerging model, it still lacks a modeling methodology and rules to support the concrete development process. Therefore, in this work, a universal modeling methodology, which picks up the design pattern application, is researched and used to structure different low-level user interface models. A framework, named Hot-UCDP, is proposed for aiding the development process.

Augmented Reality In-Situation Menu of 3D Models

Augmented Reality In-Situation Menu of 3D Models
Thuong N. Hoang
Wearable Computer Lab – University of South Australia
[email protected]

Abstract
We present a design and implementation of an in-situation menu system for loading and visualizing 3D models in the physical world context. The menu system uses 3D objects as menu items, and is directly placed within the context of the augmented environment, to support visualizing and placing 3D models into the augmented world. The menu system employs a collection of techniques for the placement of 3D models in one of three relative coordinate systems: head, body, and world. The in-situ menu also serves as a 3D model selection/placement tool and a collaborative feature between an outdoor AR user and a desktop user. The in-situ menu system extends the current Tinmith menu and modeling system.

… range of innovative 3D modeling techniques, including construction of 3D geometry using carving planes [2] and constructive solid geometry with boolean operators for creating complex 3D objects [3].

Pinch gloves are the main input devices for Tinmith, with fiducial markers placed on the thumbs for the control of two cursors. Tinmith hand cursors are detected if the user brings the markers into view of the video camera, located on top of the HMD helmet. The conductive pads on the fingers and palms of the pinch gloves are used for menu command execution. The command menu system is located on both left and right lower corners of the display with 10 options available at any time, each of which is mapped directly to the fingers on the corresponding pinch …

Basic Computer Lesson

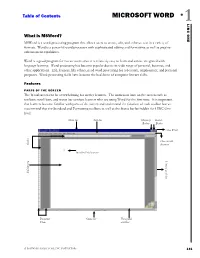

Table of Contents

MICROSOFT WORD
A SOFTWARE GUIDE FOR LINC INSTRUCTORS

What is MSWord?
MSWord is a word-processing program that allows users to create, edit, and enhance text in a variety of formats. Word is a powerful word processor with sophisticated editing and formatting as well as graphic-enhancement capabilities. Word is a good program for novice users since it is relatively easy to learn and can be integrated with language learning. Word processing has become popular due to its wide range of personal, business, and other applications. ESL learners, like others, need word processing for job search, employment, and personal purposes. Word-processing skills have become the backbone of computer literacy skills.

Features

PARTS OF THE SCREEN
The Word screen can be overwhelming for novice learners. The numerous bars on the screen such as toolbars, scroll bars, and status bar confuse learners who are using Word for the first time. It is important that learners become familiar with parts of the screen and understand the function of each toolbar, but we recommend that the Standard and Formatting toolbars as well as the Status bar be hidden for LINC One level.

[Figure: the Word screen with labeled parts: Menu bar, Title bar, Minimize button, Restore button, Close Word, Close current document, Rulers, Insertion point (cursor), Vertical scroll bar, Editing area, Document Views, Status bar, Horizontal scroll bar]

Hiding Standard toolbar, Formatting toolbar, and Status bar:
• To hide the Standard toolbar, click View | Toolbars on the Menu bar. Check off Standard.
• To hide the Formatting toolbar, click View | Toolbars on the Menu bar.

The BIAS Soundscape Planning Tool for Underwater Continuous Low Frequency Sound

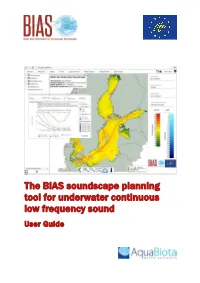

The BIAS soundscape planning tool for underwater continuous low frequency sound: User Guide

BIAS - Baltic Sea Information on the Acoustic Soundscape

The EU LIFE+ project Baltic Sea Information on the Acoustic Soundscape (BIAS) started in September 2012 to support a regional implementation of underwater noise in the Baltic Sea, in line with the EU roadmap for the Marine Strategy Framework Directive (MSFD) and the general recognition that a regional handling of Descriptor 11 is advantageous, or even necessary, for regions such as the Baltic Sea. BIAS was directed exclusively towards the MSFD descriptor criterion 11.2, Continuous low frequency sound, and aimed at establishing a regional implementation plan for this sound category with regional standards, methodologies, and tools allowing for cross-border handling of acoustic data and the associated results. The project was the first to include all phases of implementation of a joint monitoring programme across national borders. One year of sound measurements was performed in 2014 by six nations at 36 locations across the Baltic Sea. The measurements, as well as the post-processing of the measurement data, were subject to standard field procedures, quality control, and signal processing routines, all established within BIAS based on the recommendations of the Technical Subgroup on Underwater Noise (TSG-Noise). The measured data were used to model soundscape maps for continuous low-frequency noise in the project area, providing the first views of the Baltic Sea soundscape and its variation on a monthly basis. In parallel, a GIS-based online soundscape planning tool was designed for handling and visualizing both the measured data and the modelled soundscape maps.

Flexible Interfaces: Future Developments for Post-WIMP Interfaces Benjamin Bressolette, Michel Beaudouin-Lafon

Flexible interfaces: future developments for post-WIMP interfaces
Benjamin Bressolette, Michel Beaudouin-Lafon

To cite this version: Benjamin Bressolette, Michel Beaudouin-Lafon. Flexible interfaces: future developments for post-WIMP interfaces. CMMR 2019 - 14th International Symposium on Computer Music Multidisciplinary Research, Oct 2019, Marseille, France. hal-02435177

HAL Id: hal-02435177
https://hal.archives-ouvertes.fr/hal-02435177
Submitted on 15 Jan 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Benjamin Bressolette and Michel Beaudouin-Lafon
LRI, Université Paris-Sud, CNRS, Inria, Université Paris-Saclay, Orsay, France
[email protected]

Abstract. Most current interfaces on desktop computers, tablets or smartphones are based on visual information. In particular, graphical user interfaces on computers are based on files, folders, windows, and icons, manipulated on a virtual desktop. This article presents an ongoing project on multimodal interfaces, which may lead to promising improvements for computers' interfaces. These interfaces intend to ease the interaction with computers for blind or visually impaired people. We plan to interview blind or visually impaired users, to understand how they currently use computers, and how a new kind of flexible interface can be designed to meet their needs.