Swarms Are Hell: Warfare As an Anti-Transhuman Choice

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Posthuman Rights: Dimensions of Transhuman Worlds

Evans, W. (2015). Posthuman Rights: Dimensions of Transhuman Worlds. Revista Teknokultura, Vol. 12(2), 373-384. Received: 29-04-2015 Open peer review Accepted: 12-07-2015 http://revistas.ucm.es/index.php/TEKN/pages/view/opr-49072 Posthuman Rights: Dimensions of Transhuman Worlds Derechos posthumanos: Dimensiones de los mundos transhumanos Woody Evans Texas Woman’s University, USA [email protected] ABSTRACT There are at least three dimensions to rights. We may have and lack freedom to 1) be, 2) do, and 3) have. These dimensions reformulate Locke’s categories, and are further complicated by placing them within the context of domains such as natural or civil rights. Here the question of the origins of rights is not addressed, but issues concerning how we may contextualize them are discussed. Within the framework developed, this paper makes use of Actor-Network Theory and Enlightenment values to examine the multidimensionality and appropriateness of animal rights and human rights for posthumans. The core position here is that rights may be universal and constant, but they can only be accessed within a matrix of relative cultural dimensions. This will be true for posthumans, and their rights will be relative to human rights and dependent on human and posthuman responsibilities. http://dx.doi.org/10.5209/rev_TK.2015.v12.n2.49072 ISSN: 1549 2230 KEYWORDS transhumanism, human rights, natural rights, animal rights, civil rights, technology, political philosophy. -

Transhumanism, Metaphysics, and the Posthuman God

Journal of Medicine and Philosophy, 35: 700–720, 2010 doi:10.1093/jmp/jhq047 Advance Access publication on November 18, 2010 Transhumanism, Metaphysics, and the Posthuman God JEFFREY P. BISHOP* Saint Louis University, St. Louis, Missouri, USA *Address correspondence to: Jeffrey P. Bishop, MD, PhD, Albert Gnaegi Center for Health Care Ethics, Saint Louis University, 3545 Lafayette Avenue, Suite 527, St. Louis, MO 63104, USA. E-mail: [email protected] After describing Heidegger’s critique of metaphysics as ontotheology, I unpack the metaphysical assumptions of several transhumanist philosophers. I claim that they deploy an ontology of power and that they also deploy a kind of theology, as Heidegger meant it. I also describe the way in which this metaphysics begets its own politics and ethics. In order to transcend the human condition, they must transgress the human. Keywords: Heidegger, metaphysics, ontology, ontotheology, technology, theology, transhumanism Everywhere we remain unfree and chained to technology, whether we passionately affirm or deny it. —Martin Heidegger I. INTRODUCTION Transhumanism is an intellectual and cultural movement, whose proponents declare themselves to be heirs of humanism and Enlightenment philosophy (Bostrom, 2005a, 203). Nick Bostrom defines transhumanism as: 1) The intellectual and cultural movement that affirms the possibility and desirability of fundamentally improving the human condition through applied reason, especially by developing and making widely available technologies to eliminate aging and to greatly enhance human intellectual, physical, and psychological capacities. 2) The study of the ramifications, promises, and potential dangers of technologies that will enable us to overcome fundamental human limitations, and the related study of the ethical matters involved in developing and using such technologies (Bostrom, 2003, 2). -

Human Enhancements and Voting: Towards a Declaration of Rights and Responsibilities of Beings

philosophies Article Human Enhancements and Voting: Towards a Declaration of Rights and Responsibilities of Beings S. J. Blodgett-Ford 1,2 1 Boston College Law School, Boston College, Newton Centre, MA 02459, USA; [email protected] 2 GTC Law Group PC & Affiliates, Westwood, MA 02090, USA Abstract: The phenomenon and ethics of “voting” will be explored in the context of human enhancements. “Voting” will be examined for enhanced humans with moderate and extreme enhancements. Existing patterns of discrimination in voting around the globe could continue substantially “as is” for those with moderate enhancements. For extreme enhancements, voting rights could be challenged if the very humanity of the enhanced was in doubt. Humans who were not enhanced could also be disenfranchised if certain enhancements become prevalent. Voting will be examined using a theory of engagement articulated by Professor Sophie Loidolt that emphasizes the importance of legitimization and justification by “facing the appeal of the other” to determine what is “right” from a phenomenological first-person perspective. Seeking inspiration from the Universal Declaration of Human Rights (UDHR) of 1948, voting rights and responsibilities will be re-framed from a foundational working hypothesis that all enhanced and non-enhanced humans should have a right to vote directly. Representative voting will be considered as an admittedly imperfect alternative or additional option. The framework in which voting occurs, as well as the processes, temporal cadence, and role of voting, requires the participation from as diverse a group of humans as possible. Voting rights delivered by fiat to enhanced or non-enhanced humans who were excluded from participation in the design and ratification of the governance structure is not legitimate. Citation: Blodgett-Ford, S.J. Human Enhancements and Voting: Towards a Declaration of Rights and -

From Transgender to Transhuman Marriage and Family in a Transgendered World the Freedom of Form

Copyright © 2011 Martine Rothblatt All Rights Reserved. Printed in the U.S.A. Edited by Nickolas Mayer Cover Design by Greg Berkowitz ISBN-13: 978-0615489421 ISBN-10: 0615489427 eBook ISBN: 978-1-61914-309-8 For Bina CONTENTS __________________________ Forward by Museum of Tolerance Historian Harold Brackman, Ph.D. Preface to the Second Edition Preface to the First Edition Acknowledgements 1. Billions of Sexes What is Male and Female? Are Genitals But the Tip of the Iceberg? Thought Patterns New Feminist Thinking Scientific Developments Transgenderism The Apartheid of Sex Persona Creatus 2. We Are Not Our Genitals: The Continuum of Sex When Sex Began The Genesis of Gender Jealousy Egotism Testosterone Liberation The Sex of An Avatar 3. Law and Sex Women’s Work or a Man’s Job Marriage and Morality Looking for Sex Olympic Masquerade Counting Cyberfolks 4. Justice and Gender: The Milestones Ahead Love and Marriage Government and Sex The Bathroom Bugaboo Papering a Transhuman 5. Science and Sex What Society Needs from Gender Science The Inconsistency of Sex Opportunities Under the Rainbow of Gender Elements of Sexual Identity Identity and Behavior; Sex and Tissue Where Did My Sex Come From? Is Consciousness Like Pornography? 6. Talking and Thinking About Sex Sex on the Mind The Human Uncertainty Principle Bio-Cyber-Ethics 7. Sex and Sex Beyond Gay or Straight Multisexuality Cybersex Is There Transhuman Joy Without Orgasmic Sex? 8. From Transgender to Transhuman Marriage and Family in a Transgendered World The Freedom of Form Epilogue Afterword FORWARD __________________________ by Museum of Tolerance Historian Harold Brackman, Ph.D. -

GURPS Classic Dinosaurs

GURPS® Dinosaurs and Other Prehistoric Creatures. By Stephen Dedman, with a foreword by Dr. Jack Horner. STEVE JACKSON GAMES. TYRANT KINGS! Giganotosaurus, the largest carnivore ever to walk the Earth ... Packs of Deinonychus, the “terrible claws” ... Triceratops, armed with shield and spears ... 65-ton Brachiosaurus, tall as a four-story building ... Ankylosaurus, the living tank ... the fearsome Tyrannosaurus rex ... cunning Troodons ... the deadly Utahraptor ... Their fossil bones inspired myths of dragons and other monsters. Their images still terrify us today. Visit their world – or have them visit yours. GURPS Dinosaurs includes: A detailed bestiary of the world before human history, with more than 100 dinosaurs, plus pterosaurs, sea monsters, other reptiles, and prehistoric birds and mammals. A chronology of life on Earth, from the Cambrian explosion to the Ice Ages. Character creation and detailed roleplaying information for early hominids and humans, from Australopithecus to Cro-Magnon, including advantages, disadvantages and skills. Maps and background material for the world of the dinosaurs. Plot and adventure ideas for Time Travel, Supers, Horror, Cliffhangers, Atomic Horror, Space, Survivors, Fantasy, Cyberpunk, and even caveman slapstick campaigns! Written by Stephen Dedman. Edited by Steve Jackson, Lillian Butler and Susan Pinsonneault. Cover by Paul Koroshetz. Illustrated by Scott Cooper, Russell Hawley and Pat Ortega. ISBN 1-55634-293-4 SJG01795 6508 Printed in the U.S.A. -

Superhuman, Transhuman, Post/Human: Mapping the Production and Reception of the Posthuman Body

Superhuman, Transhuman, Post/Human: Mapping the Production and Reception of the Posthuman Body Scott Jeffery Thesis submitted for the Degree of Doctor of Philosophy School of Applied Social Science, University of Stirling, Scotland, UK September 2013 Declaration I declare that none of the work contained within this thesis has been submitted for any other degree at any other university. The contents found within this thesis have been composed by the candidate Scott Jeffery. ACKNOWLEDGEMENTS Thank you, first of all, to my supervisors Dr Ian McIntosh and Dr Sharon Wright for their support and patience. To my brother, for allowing me to turn several of his kitchens into offices. And to R and R. For everything. ABSTRACT The figure of the cyborg, or more latterly, the posthuman body has been an increasingly familiar presence in a number of academic disciplines. The majority of such studies have focused on popular culture, particularly the depiction of the posthuman in science-fiction, fantasy and horror. To date however, few studies have focused on the posthuman and the comic book superhero, despite their evident corporeality, and none have questioned comics’ readers about their responses to the posthuman body. This thesis presents a cultural history of the posthuman body in superhero comics along with the findings from twenty-five, two-hour interviews with readers. By way of literature reviews this thesis first provides a new typography of the posthuman, presenting it not as a stable bounded subject but as what Deleuze and Guattari (1987) describe as a ‘rhizome’. Within the rhizome of the posthuman body are several discursive plateaus that this thesis names Superhumanism (the representation of posthuman bodies in popular culture), Post/Humanism (a critical-theoretical stance that questions the assumptions of Humanism) and Transhumanism (the philosophy and practice of human enhancement with technology). -

Agogic Principles in Trans-Human Settings †

Proceedings Agogic Principles in Trans-Human Settings † Christian Stary Department of Business Information Systems-Communications Engineering, Johannes Kepler University, 4040 Linz, Austria; [email protected]; Tel.: +43-732-2468-4320 † Presented at the IS4SI 2017 Summit DIGITALISATION FOR A SUSTAINABLE SOCIETY, Gothenburg, Sweden, 12–16 June 2017. Published: 8 June 2017 Abstract: This contribution proposes a learning approach to system design in a transhuman era. Understanding transhuman settings as systems of co-creation and co-evolution, development processes can be informed by learning principles. A development framework is proposed and scenarios of intervention are sketched. Illustrating the application of agogic principles sets the stage for further research in this highly diverse and dynamically evolving field. Keywords: agogics; learning; trans-human; post-human; system design 1. Introduction According to More, transhumanism is about evolving intelligent life beyond its currently human form and overcoming human limitations by means of science and technology. It should be guided by life-promoting principles and values [1]. Advocating the improvement of human capacities through advanced technologies has triggered intense discussions about future IT and artefact developments, in particular since Bostrom [2,3] argued that self-emergent artificial systems could finally control the development of intelligence, and thus, human life. In that respect Kenney and Haraway [4] have argued for co-construction anticipating a variety of humanoid and artificial actors: ‘Multi-species-becoming-with, multi-species co-making, making together; sym-poiesis rather than autopoiesis’ (p. 260). Such an approach challenges system design taking into account humans and technological artefacts, as transhuman entities need to be considered at some stage of development. -

Cyborgs and Enhancement Technology

philosophies Article Cyborgs and Enhancement Technology Woodrow Barfield 1 and Alexander Williams 2,* 1 Professor Emeritus, University of Washington, Seattle, Washington, DC 98105, USA; [email protected] 2 140 BPW Club Rd., Apt E16, Carrboro, NC 27510, USA * Correspondence: [email protected]; Tel.: +1-919-548-1393 Academic Editor: Jordi Vallverdú Received: 12 October 2016; Accepted: 2 January 2017; Published: 16 January 2017 Abstract: As we move deeper into the twenty-first century there is a major trend to enhance the body with “cyborg technology”. In fact, due to medical necessity, there are currently millions of people worldwide equipped with prosthetic devices to restore lost functions, and there is a growing DIY movement to self-enhance the body to create new senses or to enhance current senses to “beyond normal” levels of performance. From prosthetic limbs, artificial heart pacers and defibrillators, implants creating brain–computer interfaces, cochlear implants, retinal prosthesis, magnets as implants, exoskeletons, and a host of other enhancement technologies, the human body is becoming more mechanical and computational and thus less biological. This trend will continue to accelerate as the body becomes transformed into an information processing technology, which ultimately will challenge one’s sense of identity and what it means to be human. This paper reviews “cyborg enhancement technologies”, with an emphasis placed on technological enhancements to the brain and the creation of new senses—the benefits of which may allow information to be directly implanted into the brain, memories to be edited, wireless brain-to-brain (i.e., thought-to-thought) communication, and a broad range of sensory information to be explored and experienced. -

From Australopithecus to Cyborgs. Are We Facing the End of Human Evolution?

Acta Bioethica 2020; 26 (2): 165-177 FROM AUSTRALOPITHECUS TO CYBORGS. ARE WE FACING THE END OF HUMAN EVOLUTION? Justo Aznar, Enrique Burguete Abstract: Social implementation of post-humanism could affect the biological evolution of living beings and especially that of humans. This paper addresses the issue from the biological and anthropological-philosophical perspectives. From the biological perspective, reference is made first to the evolution of hominids until the emergence of Homo sapiens, and secondly, to the theories of evolution with special reference to their scientific foundation and the theory of extended heredity. In the anthropological-philosophical part, the paradigm is presented according to which human consciousness, in its emancipatory zeal against biological nature, must “appropriate” the roots of its physis to transcend the human and move towards a more “perfect” entity; we also assess the theory that refers this will to the awakening of the cosmic consciousness in our conscious matter. Finally, it assesses whether this post-humanist emancipatory paradigm implies true evolution or, instead, an involution to the primitive state of nature. Keywords: theories of evolution, Australopithecus, cyborgs, post-humanism, transhumanism, somatechnics De Australopithecus a cyborgs. ¿Nos enfrentamos al final de la evolución humana? -

Open Dissertation.Pdf

The Pennsylvania State University The Graduate School TRANSHUMANISM: EVOLUTIONARY LOGIC, RHETORIC, AND THE FUTURE A Dissertation in English by Andrew Pilsch © 2011 Andrew Pilsch Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy May 2011 The dissertation of Andrew Pilsch was reviewed and approved* by the following: Richard Doyle Professor of English Dissertation Advisor, Chair of Committee Jeffrey Nealon Liberal Arts Research Professor of English Mark Morrisson Professor of English and Science, Technology, and Society Robert Yarber Distinguished Professor of Art Mark Morrisson Graduate Program Director Professor of English *Signatures are on file in the Graduate School. Abstract This project traces the discursive formation called “transhumanism” through various incarnations in twentieth century science, philosophy, and science fiction. While subject to no single, clear definition, I follow most of the major thinkers in the topic by defining transhumanism as a discourse surrounding the view of human beings as subject to ongoing evolutionary processes. Humanism, from Descartes forward, has historically viewed the human as stable; transhumanism, instead, views humans as constantly evolving and changing, whether through technological or cultural means. The degree of change, the direction of said change, and the shape the species will take in the distant future, however, are all topics upon which there is little consensus in transhuman circles. In tracing this discourse, I accomplish a number of things. First, previously disparate zones of academic inquiry–poststructural philosophy, science studies, literary modernism and postmodernism, etc.–are shown to be united by a common vocabulary when viewed from the perspective of the “evolutionary futurism” suggested by transhuman thinkers. -

The Dangers of Transhumanist Philosophies on Human and Nonhuman Beings

Iowa State University Capstones, Theses and Dissertations Graduate Theses and Dissertations 2017 Beyond Transhumanism: The Dangers of Transhumanist Philosophies on Human and Nonhuman Beings Benjamin Shane Evans Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/etd Part of the English Language and Literature Commons Recommended Citation Evans, Benjamin Shane, "Beyond Transhumanism: The Dangers of Transhumanist Philosophies on Human and Nonhuman Beings" (2017). Graduate Theses and Dissertations. 15300. https://lib.dr.iastate.edu/etd/15300 This Thesis is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Beyond Transhumanism: The dangers of Transhumanist philosophies on human and nonhuman beings by Benjamin Shane Evans A thesis submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF ARTS Major: English Programs of Study Committee: Brianna Burke, Major Professor Matthew Sivils Charissa Menifee The student author and the program of study committee are solely responsible for the content of this thesis. The Graduate College will ensure this thesis is globally accessible and will not permit alterations after a degree is conferred. Iowa State University Ames, Iowa 2017 DEDICATION The work and thoughts put into these words are forever dedicated to Charlie Thomas and Harper Amalie, and those who come next. TABLE OF CONTENTS ACKNOWLEDGMENTS INTRODUCTION CHAPTER 1. -

Building Transhuman Immortals-Revised

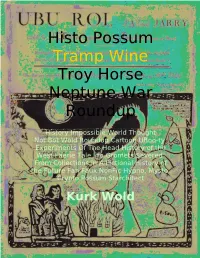

Histo Possum Tramp Wine Troy Horse Neptune War Roundup History Impossible World Thought Not Bot Wold Roundup Cartoon UBoo-ty Experiments Of The Head History of the West Faerie Tale Fro Gromets Severed From Collections in A Fictional History of the Future Fan Faux NonFic Hypno, Mysto, Crypto Possum Starchitect Kurk Wold I. U-Booty Improvements I went down stairwells into caverns with no markings except codes, wandered, 10, 14 levels below the capitol. The walls went from stone to carved cave rock. Down there they stored artifacts, statues. I was stuck for hours until I found stairways leading up. None of them connected from one floor to the other. I kept walking to an elevator, pushed the up button, found myself in a non-public area. But I had my clearance tag. Asked by security, I told them I was lost. They escorted me to one of the main hallways. I made at least 14 levels below. Passages slope up and down from there where the hearts and livers are stored. I did not ask to investigate the spread of Ubu beyond earth, the beginning of weirdness, as if weirdness had a beginning and we all did not wake up to it individually while others slept to the song of opposite meaning. Rays of light popped off the original incarnation of Gulag and Babel as the same structure pounded itself into the ground. But on the tower, as one climbs higher and higher gravity decreases, and because it is constructed on the equator rotation causes gravitation to disappear because of the distance from the center of the planet, but also from centrifugal force increasing proportionate to that distance.