Induction and Comparison

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mow!,'Mum INN Nn

mow!,'mum INN nn %AUNE 20, 1981 $2.75 R1-047-8, a.cec-s_ Q.41.001, 414 i47,>0Z tet`44S;I:47q <r, 4.. SINGLES SLEEPERS ALBUMS COMMODORES.. -LADY (YOU BRING TUBES, -DON'T WANT TO WAIT ANY- POINTER SISTERS, "BLACK & ME UP)" (prod. by Carmichael - eMORE" (prod. by Foster) (writers: WHITE." Once again,thesisters group) (writers: King -Hudson - Tubes -Foster) .Pseudo/ rving multiple lead vocals combine witt- King)(Jobete/Commodores, Foster F-ees/Boone's Tunes, Richard Perry's extra -sensory sonc ASCAP) (3:54). Shimmering BMI) (3 50Fee Waybill and the selection and snappy production :c strings and a drying rhythm sec- ganc harness their craziness long create an LP that's several singles tionbackLionelRichie,Jr.'s enoughtocreate epic drama. deep for many formats. An instant vocal soul. From the upcoming An attrEcti.e piece for AOR-pop. favoriteforsummer'31. Plane' "In the Pocket" LP. Motown 1514. Capitol 5007. P-18 (E!A) (8.98). RONNIE MILSAI3, "(There's) NO GETTIN' SPLIT ENZ, "ONE STEP AHEAD" (prod. YOKO ONO, "SEASON OF GLASS." OVER ME"(prod.byMilsap- byTickle) \rvriter:Finn)(Enz. Released to radio on tape prior to Collins)(writers:Brasfield -Ald- BMI) (2 52. Thick keyboard tex- appearing on disc, Cno's extremel ridge) {Rick Hall, ASCAP) (3:15). turesbuttressNeilFinn'slight persona and specific references tc Milsap is in a pop groove with this tenor or tit's melodic track from her late husband John Lennon have 0irresistible uptempo ballad from the new "Vlaiata- LP. An air of alreadysparkedcontroversyanci hisforthcoming LP.Hissexy, mystery acids to the appeal for discussion that's bound to escaate confident vocal steals the show. -

Elvis Presley Music

Vogue Madonna Take on Me a-ha Africa Toto Sweet Dreams (Are Made of This) Eurythmics You Make My Dreams Daryl Hall and John Oates Taited Love Soft Cell Don't You (Forget About Me) Simple Minds Heaven Is a Place on Earth Belinda Carlisle I'm Still Standing Elton John Wake Me Up Before You Go-GoWham! Blue Monday New Order Superstition Stevie Wonder Move On Up Curtis Mayfield For Once In My Life Stevie Wonder Red Red Wine UB40 Send Me On My Way Rusted Root Hungry Eyes Eric Carmen Good Vibrations The Beach Boys MMMBop Hanson Boom, Boom, Boom!! Vengaboys Relight My Fire Take That, LuLu Picture Of You Boyzone Pray Take That Shoop Salt-N-Pepa Doo Wop (That Thing) Ms Lauryn Hill One Week Barenaked Ladies In the Summertime Shaggy, Payvon Bills, Bills, Bills Destiny's Child Miami Will Smith Gonna Make You Sweat (Everbody Dance Now) C & C Music Factory Return of the Mack Mark Morrison Proud Heather Small Ironic Alanis Morissette Don't You Want Me The Human League Just Cant Get Enough Depeche Mode The Safety Dance Men Without Hats Eye of the Tiger Survivor Like a Prayer Madonna Rocket Man Elton John My Generation The Who A Little Less Conversation Elvis Presley ABC The Jackson 5 Lessons In Love Level 42 In the Air Tonight Phil Collins September Earth, Wind & Fire In Your Eyes Kylie Minogue I Want You Back The Jackson 5 Jump (For My Love) The Pointer Sisters Rock the Boat Hues Corportation Jolene Dolly Parton Never Too Much Luther Vandross Kiss Prince Karma Chameleon Culture Club Blame It On the Boogie The Jacksons Everywhere Fleetwood Mac Beat It -

Song Pack Listing

TRACK LISTING BY TITLE Packs 1-86 Kwizoke Karaoke listings available - tel: 01204 387410 - Title Artist Number "F" You` Lily Allen 66260 'S Wonderful Diana Krall 65083 0 Interest` Jason Mraz 13920 1 2 Step Ciara Ft Missy Elliot. 63899 1000 Miles From Nowhere` Dwight Yoakam 65663 1234 Plain White T's 66239 15 Step Radiohead 65473 18 Til I Die` Bryan Adams 64013 19 Something` Mark Willis 14327 1973` James Blunt 65436 1985` Bowling For Soup 14226 20 Flight Rock Various Artists 66108 21 Guns Green Day 66148 2468 Motorway Tom Robinson 65710 25 Minutes` Michael Learns To Rock 66643 4 In The Morning` Gwen Stefani 65429 455 Rocket Kathy Mattea 66292 4Ever` The Veronicas 64132 5 Colours In Her Hair` Mcfly 13868 505 Arctic Monkeys 65336 7 Things` Miley Cirus [Hannah Montana] 65965 96 Quite Bitter Beings` Cky [Camp Kill Yourself] 13724 A Beautiful Lie` 30 Seconds To Mars 65535 A Bell Will Ring Oasis 64043 A Better Place To Be` Harry Chapin 12417 A Big Hunk O' Love Elvis Presley 2551 A Boy From Nowhere` Tom Jones 12737 A Boy Named Sue Johnny Cash 4633 A Certain Smile Johnny Mathis 6401 A Daisy A Day Judd Strunk 65794 A Day In The Life Beatles 1882 A Design For Life` Manic Street Preachers 4493 A Different Beat` Boyzone 4867 A Different Corner George Michael 2326 A Drop In The Ocean Ron Pope 65655 A Fairytale Of New York` Pogues & Kirsty Mccoll 5860 A Favor House Coheed And Cambria 64258 A Foggy Day In London Town Michael Buble 63921 A Fool Such As I Elvis Presley 1053 A Gentleman's Excuse Me Fish 2838 A Girl Like You Edwyn Collins 2349 A Girl Like -

ADAM and the ANTS Adam and the Ants Were Formed in 1977 in London, England

ADAM AND THE ANTS Adam and the Ants were formed in 1977 in London, England. They existed in two incarnations. One of which lasted from 1977 until 1982 known as The Ants. This was considered their Punk era. The second incarnation known as Adam and the Ants also featured Adam Ant on vocals, but the rest of the band changed quite frequently. This would mark their shift to new wave/post-punk. They would release ten studio albums and twenty-five singles. Their hits include Stand and Deliver, Antmusic, Antrap, Prince Charming, and Kings of the Wild Frontier. A large part of their identity was the uniform Adam Ant wore on stage that consisted of blue and gold material as well as his sophisticated and dramatic stage presence. Click the band name above. ECHO AND THE BUNNYMEN Formed in Liverpool, England in 1978 post-punk/new wave band Echo and the Bunnymen consisted of Ian McCulloch (vocals, guitar), Will Sergeant (guitar), Les Pattinson (bass), and Pete de Freitas (drums). They produced thirteen studio albums and thirty singles. Their debut album Crocodiles would make it to the top twenty list in the UK. Some of their hits include Killing Moon, Bring on the Dancing Horses, The Cutter, Rescue, Back of Love, and Lips Like Sugar. A very large part of their identity was silohuettes. Their music videos and album covers often included silohuettes of the band. They also have somewhat dark undertones to their music that are conveyed through the design. Click the band name above. THE CLASH Formed in London, England in 1976, The Clash were a punk rock group consisting of Joe Strummer (vocals, guitar), Mick Jones (vocals, guitar), Paul Simonon (bass), and Topper Headon (drums). -

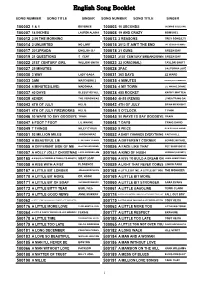

English Song Booklet

English Song Booklet SONG NUMBER SONG TITLE SINGER SONG NUMBER SONG TITLE SINGER 100002 1 & 1 BEYONCE 100003 10 SECONDS JAZMINE SULLIVAN 100007 18 INCHES LAUREN ALAINA 100008 19 AND CRAZY BOMSHEL 100012 2 IN THE MORNING 100013 2 REASONS TREY SONGZ,TI 100014 2 UNLIMITED NO LIMIT 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ 100017 2012PRADA ENGLISH DJ 100018 21 GUNS GREEN DAY 100019 21 QUESTIONS 5 CENT 100021 21ST CENTURY BREAKDOWN GREEN DAY 100022 21ST CENTURY GIRL WILLOW SMITH 100023 22 (ORIGINAL) TAYLOR SWIFT 100027 25 MINUTES 100028 2PAC CALIFORNIA LOVE 100030 3 WAY LADY GAGA 100031 365 DAYS ZZ WARD 100033 3AM MATCHBOX 2 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE 100034 4 MINUTES(LIVE) MADONNA 100036 4 MY TOWN LIL WAYNE,DRAKE 100037 40 DAYS BLESSTHEFALL 100038 455 ROCKET KATHY MATTEA 100039 4EVER THE VERONICAS 100040 4H55 (REMIX) LYNDA TRANG DAI 100043 4TH OF JULY KELIS 100042 4TH OF JULY BRIAN MCKNIGHT 100041 4TH OF JULY FIREWORKS KELIS 100044 5 O'CLOCK T PAIN 100046 50 WAYS TO SAY GOODBYE TRAIN 100045 50 WAYS TO SAY GOODBYE TRAIN 100047 6 FOOT 7 FOOT LIL WAYNE 100048 7 DAYS CRAIG DAVID 100049 7 THINGS MILEY CYRUS 100050 9 PIECE RICK ROSS,LIL WAYNE 100051 93 MILLION MILES JASON MRAZ 100052 A BABY CHANGES EVERYTHING FAITH HILL 100053 A BEAUTIFUL LIE 3 SECONDS TO MARS 100054 A DIFFERENT CORNER GEORGE MICHAEL 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND 100056 A FACE LIKE THAT PET SHOP BOYS 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM 500164 A KIND OF HUSH HERMAN'S HERMITS 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG 100058 A KISS WITH A FIST FLORENCE 100059 A LIGHT THAT NEVER COMES LINKIN PARK 500167 A LITTLE BIT LONGER JONAS BROTHERS 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES 500170 A LITTLE BIT MORE DR. -

Top 40 Singles Top 40 Albums

22 February 1981 CHART #272 Top 40 Singles Top 40 Albums The Tide Is High The Bridge Greatest Hits Clues 1 Blondie 21 Deane Waretini 1 Anne Murray 21 Robert Palmer Last week 1 / 6 weeks FESTIVAL Last week 26 / 4 weeks CBS Last week 1 / 5 weeks Platinum / EMI Last week 36 / 5 weeks FESTIVAL Gotta Pull Myself Together Amigo Flesh And Blood Music By Candlelight 2 The Nolans 22 Black Slate 2 Roxy Music 22 Gheorghe Zamfir Last week 4 / 6 weeks CBS Last week 30 / 3 weeks POLYGRAM Last week 3 / 24 weeks Platinum / POLYGRAM Last week 33 / 43 weeks Platinum / POLYGRAM Could I Have This Dance Don't Make Waves Double Fantasy Lost In Love 3 Anne Murray 23 The Nolans 3 John Lennon & Yoko Ono 23 Air Supply Last week 2 / 10 weeks EMI Last week 42 / 17 weeks CBS Last week 4 / 10 weeks Platinum / WEA Last week 27 / 5 weeks EMI Shaddap You Face Passion Zenyatta Mondatta Sandinista 4 Joe Dolce 24 Rod Stewart 4 The Police 24 The Clash Last week 3 / 8 weeks POLYGRAM Last week 16 / 9 weeks WEA Last week 8 / 10 weeks Gold / FESTIVAL Last week 11 / 6 weeks CBS Woman Hungry Heart Chameleon Give Me The Night 5 John Lennon 25 Bruce Springsteen 5 David Bowie 25 George Benson Last week 8 / 2 weeks WEA Last week 24 / 9 weeks CBS Last week 2 / 7 weeks RCA Last week 18 / 28 weeks Platinum / WEA Tell It Like It Is I'm Coming Out Hotter Than July Crimes Of Passion 6 Heart 26 Diana Ross 6 Stevie Wonder 26 Pat Benatar Last week 11 / 5 weeks CBS Last week 28 / 11 weeks EMI Last week 5 / 11 weeks Platinum / EMI Last week 29 / 15 weeks FESTIVAL I Believe In You Hey Nineteen -

Led Zeppelin R.E.M. Queen Feist the Cure Coldplay the Beatles The

Jay-Z and Linkin Park System of a Down Guano Apes Godsmack 30 Seconds to Mars My Chemical Romance From First to Last Disturbed Chevelle Ra Fall Out Boy Three Days Grace Sick Puppies Clawfinger 10 Years Seether Breaking Benjamin Hoobastank Lostprophets Funeral for a Friend Staind Trapt Clutch Papa Roach Sevendust Eddie Vedder Limp Bizkit Primus Gavin Rossdale Chris Cornell Soundgarden Blind Melon Linkin Park P.O.D. Thousand Foot Krutch The Afters Casting Crowns The Offspring Serj Tankian Steven Curtis Chapman Michael W. Smith Rage Against the Machine Evanescence Deftones Hawk Nelson Rebecca St. James Faith No More Skunk Anansie In Flames As I Lay Dying Bullet for My Valentine Incubus The Mars Volta Theory of a Deadman Hypocrisy Mr. Bungle The Dillinger Escape Plan Meshuggah Dark Tranquillity Opeth Red Hot Chili Peppers Ohio Players Beastie Boys Cypress Hill Dr. Dre The Haunted Bad Brains Dead Kennedys The Exploited Eminem Pearl Jam Minor Threat Snoop Dogg Makaveli Ja Rule Tool Porcupine Tree Riverside Satyricon Ulver Burzum Darkthrone Monty Python Foo Fighters Tenacious D Flight of the Conchords Amon Amarth Audioslave Raffi Dimmu Borgir Immortal Nickelback Puddle of Mudd Bloodhound Gang Emperor Gamma Ray Demons & Wizards Apocalyptica Velvet Revolver Manowar Slayer Megadeth Avantasia Metallica Paradise Lost Dream Theater Temple of the Dog Nightwish Cradle of Filth Edguy Ayreon Trans-Siberian Orchestra After Forever Edenbridge The Cramps Napalm Death Epica Kamelot Firewind At Vance Misfits Within Temptation The Gathering Danzig Sepultura Kreator -

Songs by Artist

YouStarKaraoke.com Songs by Artist 602-752-0274 Title Title Title 1 Giant Leap 1975 3 Doors Down My Culture City Let Me Be Myself (Wvocal) 10 Years 1985 Let Me Go Beautiful Bowling For Soup Live For Today Through The Iris 1999 Man United Squad Loser Through The Iris (Wvocal) Lift It High (All About Belief) Road I'm On Wasteland 2 Live Crew The Road I'm On 10,000 MANIACS Do Wah Diddy Diddy When I M Gone Candy Everybody Wants Doo Wah Diddy When I'm Gone Like The Weather Me So Horny When You're Young More Than This We Want Some PUSSY When You're Young (Wvocal) These Are The Days 2 Pac 3 Doors Down & Bob Seger Trouble Me California Love Landing In London 100 Proof Aged In Soul Changes 3 Doors Down Wvocal Somebody's Been Sleeping Dear Mama Every Time You Go (Wvocal) 100 Years How Do You Want It When You're Young (Wvocal) Five For Fighting Thugz Mansion 3 Doors Down 10000 Maniacs Until The End Of Time Road I'm On Because The Night 2 Pac & Eminem Road I'm On, The 101 Dalmations One Day At A Time 3 LW Cruella De Vil 2 Pac & Eric Will No More (Baby I'ma Do Right) 10CC Do For Love 3 Of A Kind Donna 2 Unlimited Baby Cakes Dreadlock Holiday No Limits 3 Of Hearts I'm Mandy 20 Fingers Arizona Rain I'm Not In Love Short Dick Man Christmas Shoes Rubber Bullets 21St Century Girls Love Is Enough Things We Do For Love, The 21St Century Girls 3 Oh! 3 Wall Street Shuffle 2Pac Don't Trust Me We Do For Love California Love (Original 3 Sl 10CCC Version) Take It Easy I'm Not In Love 3 Colours Red 3 Three Doors Down 112 Beautiful Day Here Without You Come See Me -

SHSU Video Archive Basic Inventory List Department of Library Science

SHSU Video Archive Basic Inventory List Department of Library Science A & E: The Songmakers Collection, Volume One – Hitmakers: The Teens Who Stole Pop Music. c2001. A & E: The Songmakers Collection, Volume One – Dionne Warwick: Don’t Make Me Over. c2001. A & E: The Songmakers Collection, Volume Two – Bobby Darin. c2001. A & E: The Songmakers Collection, Volume Two – [1] Leiber & Stoller; [2] Burt Bacharach. c2001. A & E Top 10. Show #109 – Fads, with commercial blacks. Broadcast 11/18/99. (Weller Grossman Productions) A & E, USA, Channel 13-Houston Segments. Sally Cruikshank cartoon, Jukeboxes, Popular Culture Collection – Jesse Jones Library Abbott & Costello In Hollywood. c1945. ABC News Nightline: John Lennon Murdered; Tuesday, December 9, 1980. (MPI Home Video) ABC News Nightline: Porn Rock; September 14, 1985. Interview with Frank Zappa and Donny Osmond. Abe Lincoln In Illinois. 1939. Raymond Massey, Gene Lockhart, Ruth Gordon. John Ford, director. (Nostalgia Merchant) The Abominable Dr. Phibes. 1971. Vincent Price, Joseph Cotton. Above The Rim. 1994. Duane Martin, Tupac Shakur, Leon. (New Line) Abraham Lincoln. 1930. Walter Huston, Una Merkel. D.W. Griffith, director. (KVC Entertaiment) Absolute Power. 1996. Clint Eastwood, Gene Hackman, Laura Linney. (Castle Rock Entertainment) The Abyss, Part 1 [Wide Screen Edition]. 1989. Ed Harris. (20th Century Fox) The Abyss, Part 2 [Wide Screen Edition]. 1989. Ed Harris. (20th Century Fox) The Abyss. 1989. (20th Century Fox) Includes: [1] documentary; [2] scripts. The Abyss. 1989. (20th Century Fox) Includes: scripts; special materials. The Abyss. 1989. (20th Century Fox) Includes: special features – I. The Abyss. 1989. (20th Century Fox) Includes: special features – II. Academy Award Winners: Animated Short Films. -

Artist 5Th Dimension, the 5Th Dimension, the a Flock of Seagulls

Artist 5th Dimension, The 5th Dimension, The A Flock of Seagulls AC/DC Ackerman, William Adam and the Ants Adam Ant Adam Ant Adam Ant Adams, Bryan Adams, Bryan Adams, Ryan & the Cardinals Aerosmith Aerosmith Alice in Chains Allman, Duane Amazing Rhythm Aces America America April Wine Arcadia Archies, The Asia Asleep at the Wheel Association, The Association, The Atlanta Rhythm Section Atlanta Rhythm Section Atlanta Rhythm Section Atlanta Rhythm Section Atlanta Rhythm Section Atlanta Rhythm Section Atlanta Rhythm Section Autry, Gene Axe Axton, Hoyt Axton, Hoyt !1 Bachman Turner Overdrive Bad Company Bad Company Bad Company Bad English Badlands Badlands Band, The Bare, Bobby Bay City Rollers Beach Boys, The Beach Boys, The Beach Boys, The Beach Boys, The Beach Boys, The Beach Boys, The Beatles, The Beatles, The Beatles, The Beatles, The Beaverteeth Bennett, Tony Benson, George Bent Left Big Audio Dynamite II Billy Squier Bishop, Elvin Bishop, Elvin Bishop, Stephen Black N Blue Black Sabbath Blind Faith Bloodrock Bloodrock Bloodrock Bloodstone Blue Nile, The Blue Oyster Cult !2 Blue Ridge Rangers Blues Brothers Blues Brothers Blues Brothers Blues Pills Blues Pills Bob Marley and the Wailers Bob Wills and His Texas Playboys BoDeans Bonham Bonoff, Karla Boston Boston Boston Boston Symphony Orchestra Bowie, David Braddock, Bobby Brickell, Edie & New Bohemians Briley, Martin Britny Fox Brown, Clarence Gatemouth Brown, Toni & Garthwaite, Terry Browne, Jackson Browne, Jackson Browne, Jackson Browne, Jackson Browne, Jackson Browne, Jackson Bruce -

The Stone Roses Music Current Affairs Culture 1980

Chronology: Pre-Stone Roses THE STONE ROSES MUSIC CURRENT AFFAIRS CULTURE 1980 Art created by John 45s: David Bowie, Ashes Cinema: Squire in this year: to Ashes; Joy Division, The Empire Strikes Back; N/K Love Will Tear Us Apart; Raging Bull; Superman II; Michael Jackson, She's Fame; Airplane!; The Out of My Life; Visage, Elephant Man; The Fade to Grey; Bruce Shining; The Blues Springsteen, Hungry Brothers; Dressed to Kill; Heart; AC/DC, You Shook Nine to Five; Flash Me All Night Long; The Gordon; Heaven's Gate; Clash, Bankrobber; The Caddyshack; Friday the Jam, Going Underground; 13th; The Long Good Pink Floyd, Another Brick Friday; Ordinary People. in the Wall (Part II); The d. Alfred Hitchcock (Apr Police, Don't Stand So 29), Peter Sellers (Jul 24), Close To Me; Blondie, Steve McQueen (Nov 7), Atomic & The Tide Is High; Mae West (Nov 22), Madness, Baggy Trousers; George Raft (Nov 24), Kelly Marie, Feels Like I'm Raoul Walsh (Dec 31). In Love; The Specials, Too Much Too Young; Dexy's Fiction: Midnight Runners, Geno; Frederick Forsyth, The The Pretenders, Talk of Devil's Alternative; L. Ron the Town; Bob Marley and Hubbard, Battlefield Earth; the Wailers, Could You Be Umberto Eco, The Name Loved; Tom Petty and the of the Rose; Robert Heartbreakers, Here Ludlum, The Bourne Comes My Girl; Diana Identity. Ross, Upside Down; Pop Musik, M; Roxy Music, Non-fiction: Over You; Paul Carl Sagan, Cosmos. McCartney, Coming Up. d. Jean-Paul Sartre (Apr LPs: Adam and the Ants, 15). Kings of the Wild Frontier; Talking Heads, Remain in TV / Media: Light; Queen, The Game; Millions of viewers tune Genesis, Duke; The into the U.S. -

2 Column Indented

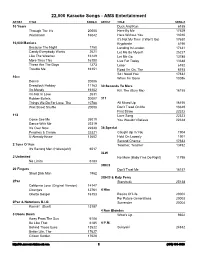

22,000 Karaoke Songs - AMS Entertainment ARTIST TITLE SONG # ARTIST TITLE SONG # 10 Years Duck And Run 6188 Through The Iris 20005 Here By Me 17629 Wasteland 16042 Here Without You 13010 It's Not My Time (I Won't Go) 17630 10,000 Maniacs Kryptonite 6190 Because The Night 1750 Landing In London 17631 Candy Everybody Wants 2621 Let Me Be Myself 25227 Like The Weather 16149 Let Me Go 13785 More Than This 16150 Live For Today 13648 These Are The Days 1273 Loser 6192 Trouble Me 16151 Road I'm On, The 6193 So I Need You 17632 10cc When I'm Gone 13086 Donna 20006 Dreadlock Holiday 11163 30 Seconds To Mars I'm Mandy 16152 Kill, The (Bury Me) 16155 I'm Not In Love 2631 Rubber Bullets 20007 311 Things We Do For Love, The 10788 All Mixed Up 16156 Wall Street Shuffle 20008 Don't Tread On Me 13649 First Straw 22322 112 Love Song 22323 Come See Me 25019 You Wouldn't Believe 22324 Dance With Me 22319 It's Over Now 22320 38 Special Peaches & Cream 22321 Caught Up In You 1904 U Already Know 13602 Hold On Loosely 1901 Second Chance 17633 2 Tons O' Fun Teacher, Teacher 13492 It's Raining Men (Hallelujah!) 6017 3LW 2 Unlimited No More (Baby I'ma Do Right) 11795 No Limits 6183 3Oh!3 20 Fingers Don't Trust Me 16157 Short Dick Man 1962 3OH!3 & Katy Perry 2Pac Starstrukk 25138 California Love (Original Version) 14147 Changes 12761 4 Him Ghetto Gospel 16153 Basics Of Life 20002 For Future Generations 20003 2Pac & Notorious B.I.G.