University of Oklahoma Graduate College


Recommended publications


Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany

University of Vermont ScholarWorks @ UVM UVM Honors College Senior Theses Undergraduate Theses 2018 Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany William Peter Fitz University of Vermont Follow this and additional works at: https://scholarworks.uvm.edu/hcoltheses Recommended Citation Fitz, William Peter, "Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany" (2018). UVM Honors College Senior Theses. 275. https://scholarworks.uvm.edu/hcoltheses/275 This Honors College Thesis is brought to you for free and open access by the Undergraduate Theses at ScholarWorks @ UVM. It has been accepted for inclusion in UVM Honors College Senior Theses by an authorized administrator of ScholarWorks @ UVM. For more information, please contact [email protected]. REACTIONARY POSTMODERNISM? NEOLIBERALISM, MULTICULTURALISM, THE INTERNET, AND THE IDEOLOGY OF THE NEW FAR RIGHT IN GERMANY A Thesis Presented by William Peter Fitz to The Faculty of the College of Arts and Sciences of The University of Vermont In Partial Fulfilment of the Requirements For the Degree of Bachelor of Arts In European Studies with Honors December 2018 Defense Date: December 4th, 2018 Thesis Committee: Alan E. Steinweis, Ph.D., Advisor Susanna Schrafstetter, Ph.D., Chairperson Adriana Borra, M.A. Table of Contents Introduction 1 Chapter One: Neoliberalism and Xenophobia 17 Chapter Two: Multiculturalism and Cultural Identity 52 Chapter Three: The Philosophy of the New Right 84 Chapter Four: The Internet and Meme Warfare 116 Conclusion 149 Bibliography 166 1 “Perhaps one will view the rise of the Alternative for Germany in the foreseeable future as inevitable, as a portent for major changes, one that is as necessary as it was predictable. -

New Modernism(S)

New Modernism(s) BEN DUVALL Intro: Surfaces and Signs; The Typography of Utopia/Dystopia; The Hyperlinked Sign; The Aesthetics of Refusal. Intro: Surfaces and Signs What can be said about graphic design, about the manner in which its artifact exists? We know that graphic design is a manipulation of certain elements in order to communicate, specifically typography and image, but in order to be brought together, these elements must exist on the same plane: the surface. If, as semioticians have said, typography and images are signs in and of themselves, then the surface is the locus for the application of sign systems. Based on this, we arrive at a simple equation: surface + sign = a work of graphic design. As students and practitioners of this kind of “surface curation,” the way these elements are functioning currently should be of great interest to us. Can we say that they are operating in fundamentally different ways from the way they did under modernism? Even differently than under postmodernism? Perhaps the way the surface and sign are treated is what distinguishes these cultural epochs from one another. We are confronted with what Roland Barthes defined as a Text, a site of interacting and open signs, and therefore, a site of reader interpretation and of semiotic play.1 This is of utmost importance, the treatment of the signs within a Text is how we interpret, [Figure: SIGNIFIER + SIGNIFIED = SIGN. Signified: ideas represented. Sign: unit of meaning. Signifier: physical form of an idea, e.g.] -

Connections Between Gilles Lipovetsky's Hypermodern Times and Post-Soviet Russian Cinema James M

Communication and Theater Association of Minnesota Journal Volume 36 Article 2 January 2009 "Brother," Enjoy Your Hypermodernity! Connections between Gilles Lipovetsky's Hypermodern Times and Post-Soviet Russian Cinema James M. Brandon Hillsdale College, [email protected] Follow this and additional works at: https://cornerstone.lib.mnsu.edu/ctamj Part of the Film and Media Studies Commons, and the Soviet and Post-Soviet Studies Commons Recommended Citation Brandon, J. (2009). "Brother," Enjoy Your Hypermodernity! Connections between Gilles Lipovetsky's Hypermodern Times and Post-Soviet Russian Cinema. Communication and Theater Association of Minnesota Journal, 36, 7-22. This General Interest is brought to you for free and open access by Cornerstone: A Collection of Scholarly and Creative Works for Minnesota State University, Mankato. It has been accepted for inclusion in Communication and Theater Association of Minnesota Journal by an authorized editor of Cornerstone: A Collection of Scholarly and Creative Works for Minnesota State University, Mankato. Brandon: "Brother," Enjoy Your Hypermodernity! Connections between Gilles CTAMJ Summer 2009 7 “Brother,” Enjoy your Hypermodernity! Connections between Gilles Lipovetsky’s Hypermodern Times and Post-Soviet Russian Cinema James M. Brandon Associate Professor [email protected] Department of Theatre and Speech Hillsdale College Hillsdale, MI ABSTRACT In prominent French social philosopher Gilles Lipovetsky’s Hypermodern Times (2005), the author asserts that the world has entered the period of hypermodernity, a time where the primary concepts of modernity are taken to their extreme conclusions. The conditions Lipovetsky described were already manifesting in a number of post-Soviet Russian films. In the tradition of Slavoj Zizek’s Enjoy Your Symptom (1992), this essay utilizes a number of post-Soviet Russian films to explicate Lipovetsky’s philosophy, while also using Lipovetsky’s ideas to explicate the films. -

In Defense of Cyberterrorism: an Argument for Anticipating Cyber-Attacks

IN DEFENSE OF CYBERTERRORISM: AN ARGUMENT FOR ANTICIPATING CYBER-ATTACKS Susan W. Brenner Marc D. Goodman The September 11, 2001, terrorist attacks on the United States brought the notion of terrorism as a clear and present danger into the consciousness of the American people. In order to predict what might follow these shocking attacks, it is necessary to examine the ideologies and motives of their perpetrators, and the methodologies that terrorists utilize. The focus of this article is on how Al-Qa'ida and other Islamic fundamentalist groups can use cyberspace and technology to continue to wage war against the United States, its allies and its foreign interests. Contending that cyberspace will become an increasingly essential terrorist tool, the author examines four key issues surrounding cyberterrorism. The first is a survey of conventional methods of "physical" terrorism, and their inherent shortcomings. Next, a discussion of cyberspace reveals its potential advantages as a secure, borderless, anonymous, and structured delivery method for terrorism. Third, the author offers several cyberterrorism scenarios. Relating several examples of both actual and potential syntactic and semantic attacks, instigated individually or in combination, the author conveys their damaging political and economic impact. Finally, the author addresses the inevitable inquiry into why cyberspace has not been used to its full potential by would-be terrorists. Separately considering foreign and domestic terrorists, it becomes evident that the aims of terrorists must shift from the gross infliction of panic, death and destruction to the crippling of key information systems before cyberattacks will take precedence over physical attacks. However, given that terrorist groups such as Al Qa'ida are highly intelligent, well-funded, and globally coordinated, the possibility of attacks via cyberspace should make America increasingly vigilant. -

Zombies—A Pop Culture Resource for Public Health Awareness Melissa Nasiruddin, Monique Halabi, Alexander Dao, Kyle Chen, and Brandon Brown

Zombies: A Pop Culture Resource for Public Health Awareness Melissa Nasiruddin, Monique Halabi, Alexander Dao, Kyle Chen, and Brandon Brown Sitting at his laboratory bench, a scientist adds mutation after mutation to a strand of rabies virus RNA, unaware that in a few short days, an outbreak of this very mutation would destroy society as we know it. It could be called “Zombie Rabies,” a moniker befitting of the next Hollywood blockbuster, or, in this case, a representation of the debate over whether a zombie apocalypse, manufactured by genetically modifying one or more diseases like rabies, could be more than just fiction. Fear of the unknown has long been a psychological driving force for curiosity, and the concept of a zombie apocalypse has become popular in modern society. This article explores the utility of zombies to capitalize on the benefits of spreading public health awareness through the use of relatable popular culture tools and scientific explanations for fictional [...] secreted by puffer fish that can trigger paralysis or death-like symptoms, could be primary ingredients. Which toxins are used in the zombie powders specifically, however, is still a matter of contention among academics (4). Once the sorcerer has split the body and soul, he stores the ti-bon anj, the manifestation of awareness and memory, in a special bottle. Inside the container, that part of the soul is known as the zombi astral. With the zombi astral in his possession, the sorcerer retains complete control of the victim’s spiritually dead body, now known as the zombi cadavre. The zombi cadavre remains a slave to the will of the sorcerer through continued poisoning or spell work (1). In fact, the only way a zombi can be freed from its slavery is if the spell jar containing its ti-bon anj is broken, or if it ingests salt or meat. -

New York State Thoroughbred Breeding and Development Fund Corporation

NEW YORK STATE THOROUGHBRED BREEDING AND DEVELOPMENT FUND CORPORATION Report for the Year 2008 NEW YORK STATE THOROUGHBRED BREEDING AND DEVELOPMENT FUND CORPORATION SARATOGA SPA STATE PARK 19 ROOSEVELT DRIVE-SUITE 250 SARATOGA SPRINGS, NY 12866 Since 1973 PHONE (518) 580-0100 FAX (518) 580-0500 WEB SITE http://www.nybreds.com DIRECTORS EXECUTIVE DIRECTOR John D. Sabini, Chairman Martin G. Kinsella and Chairman of the NYS Racing & Wagering Board Patrick Hooker, Commissioner NYS Dept. Of Agriculture and Markets COMPTROLLER John A. Tesiero, Jr., Chairman William D. McCabe, Jr. NYS Racing Commission Harry D. Snyder, Commissioner REGISTRAR NYS Racing Commission Joseph G. McMahon, Member Barbara C. Devine Phillip Trowbridge, Member William B. Wilmot, DVM, Member Howard C. Nolan, Jr., Member WEBSITE & ADVERTISING Edward F. Kelly, Member COORDINATOR James Zito June 2009 To: The Honorable David A. Paterson and Members of the New York State Legislature As I present this annual report for 2008 on behalf of the New York State Thoroughbred Breeding and Development Fund Board of Directors, having just been installed as Chairman in the past month, I wish to reflect on the profound loss the New York racing community experienced in October 2008 with the passing of Lorraine Power Tharp, who so ably served the Fund as its Chairwoman. Her dedication to the Fund was consistent with her lifetime of tireless commitment to a variety of civic and professional organizations here in New York. She will long be remembered not only as a role model for women involved in the practice of law but also as a forceful advocate for the humane treatment of all animals. -

America's Defense Meltdown

AMERICA’S DEFENSE MELTDOWN ★ ★ ★ Pentagon Reform for President Obama and the New Congress 13 non-partisan Pentagon insiders, retired military officers & defense specialists speak out The World Security Institute’s Center for Defense Information (CDI) provides expert analysis on various components of U.S. national security, international security and defense policy. CDI promotes wide-ranging discussion and debate on security issues such as nuclear weapons, space security, missile defense, small arms and military transformation. CDI is an independent monitor of the Pentagon and Armed Forces, conducting research and analyzing military spending, policies and weapon systems. It is comprised of retired senior government officials and former military officers, as well as experienced defense analysts. Funded exclusively by public donations and foundation grants, CDI does not seek or accept Pentagon money or military industry funding. CDI makes its military analyses available to Congress, the media and the public through a variety of services and publications, and also provides assistance to the federal government and armed services upon request. The views expressed in CDI publications are those of the authors. World Security Institute’s Center for Defense Information 1779 Massachusetts Avenue, NW Washington, D.C. 20036-2109 © 2008 Center for Defense Information ISBN-10: 1-932019-33-2 ISBN-13: 978-1-932019-33-9 America’s Defense Meltdown PENTAGON REFORM FOR PRESIDENT OBAMA AND THE NEW CONGRESS 13 non-partisan Pentagon insiders, retired military officers & defense specialists speak out Edited by Winslow T. Wheeler Washington, D.C. November 2008 ABOUT THE AUTHORS Thomas Christie began his career in the Department of Defense and related positions in 1955. -

The Emergence and Development of the Sentient Zombie: Zombie

The Emergence and Development of the Sentient Zombie: Zombie Monstrosity in Postmodern and Posthuman Gothic Kelly Gardner Submitted in partial fulfilment of the requirements for the award of Doctor of Philosophy Division of Literature and Languages University of Stirling 31st October 2015 1 Abstract “If you’ve never woken up from a car accident to discover that your wife is dead and you’re an animated rotting corpse, then you probably won’t understand.” (S. G. Browne, Breathers: A Zombie’s Lament) The zombie narrative has seen an increasing trend towards the emergence of a zombie sentience. The intention of this thesis is to examine the cultural framework that has informed the contemporary figure of the zombie, with specific attention directed towards the role of the thinking, conscious or sentient zombie. This examination will include an exploration of the zombie’s folkloric origin, prior to the naming of the figure in 1819, as well as the Haitian appropriation and reproduction of the figure as a representation of Haitian identity. The destructive nature of the zombie, this thesis argues, sees itself intrinsically linked to the notion of apocalypse; however, through a consideration of Frank Kermode’s A Sense of an Ending, the second chapter of this thesis will propose that the zombie need not represent an apocalypse that brings devastation upon humanity, but rather one that functions to alter perceptions of ‘humanity’ itself. The third chapter of this thesis explores the use of the term “braaaaiiinnss” as the epitomised zombie voice in the figure’s development as an effective threat within zombie-themed videogames. -

A Portrayal of Gender and a Description of Gender Roles in Selected American Modern and Postmodern Plays

East Tennessee State University Digital Commons @ East Tennessee State University Electronic Theses and Dissertations Student Works 5-2002 A Portrayal of Gender and a Description of Gender Roles in Selected American Modern and Postmodern Plays. Bonny Ball Copenhaver East Tennessee State University Follow this and additional works at: https://dc.etsu.edu/etd Part of the English Language and Literature Commons, and the Feminist, Gender, and Sexuality Studies Commons Recommended Citation Copenhaver, Bonny Ball, "A Portrayal of Gender and a Description of Gender Roles in Selected American Modern and Postmodern Plays." (2002). Electronic Theses and Dissertations. Paper 632. https://dc.etsu.edu/etd/632 This Dissertation - Open Access is brought to you for free and open access by the Student Works at Digital Commons @ East Tennessee State University. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ East Tennessee State University. For more information, please contact [email protected]. The Portrayal of Gender and a Description of Gender Roles in Selected American Modern and Postmodern Plays A dissertation presented to the Faculty of the Department of Educational Leadership and Policy Analysis East Tennessee State University In partial fulfillment of the requirements for the degree Doctor of Education in Educational Leadership and Policy Analysis by Bonny Ball Copenhaver May 2002 Dr. W. Hal Knight, Chair Dr. Jack Branscomb Dr. Nancy Dishner Dr. Russell West Keywords: Gender Roles, Feminism, Modernism, Postmodernism, American Theatre, Robbins, Glaspell, O'Neill, Miller, Williams, Hansbury, Kennedy, Wasserstein, Shange, Wilson, Mamet, Vogel ABSTRACT The Portrayal of Gender and a Description of Gender Roles in Selected American Modern and Postmodern Plays by Bonny Ball Copenhaver The purpose of this study was to describe how gender was portrayed and to determine how gender roles were depicted and defined in a selection of Modern and Postmodern American plays. -

Zombie Apocalypse: Engaging Students in Environmental Health

Journal of University Teaching & Learning Practice Volume 15 | Issue 2 Article 4 2018 Zombie Apocalypse: Engaging Students In Environmental Health And Increasing Scientific Literacy Through The Use Of Cultural Hooks And Authentic Challenge Based Learning Strategies Harriet Whiley Flinders University, [email protected] Donald Houston Flinders University, [email protected] Anna Smith Flinders University, [email protected] Kirstin Ross Flinders University, [email protected] Follow this and additional works at: http://ro.uow.edu.au/jutlp Recommended Citation Whiley, Harriet; Houston, Donald; Smith, Anna; and Ross, Kirstin, Zombie Apocalypse: Engaging Students In Environmental Health And Increasing Scientific Literacy Through The Use Of Cultural Hooks And Authentic Challenge Based Learning Strategies, Journal of University Teaching & Learning Practice, 15(2), 2018. Available at: http://ro.uow.edu.au/jutlp/vol15/iss2/4 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Zombie Apocalypse: Engaging Students In Environmental Health And Increasing Scientific Literacy Through The Use Of Cultural Hooks And Authentic Challenge Based Learning Strategies Abstract Environmental Health (EH) is an essential profession for protecting human health and yet as a discipline it is under-recognised, overlooked and misunderstood. Too few students undertake EH studies, culminating in a dearth of qualified Environmental Health Officers (EHOs) in Australia. A major deterrent to students enrolling in EH courses is a lack of appreciation of the relevance to their own lives. This is symptomatic of a wider problem of scientific literacy: the relevance gap and how to bridge it. -

Using Postmodernism in Drama and Theatre Making David Porter AS/A2

Using postmodernism in drama and theatre making AS/A2 David Porter. David Porter is former Head of Performing Arts at Kirkley High School, Lowestoft, teacher and one-time children’s theatre performer. Freelance writer, blogger and editor, he is a senior assessor for A level performance studies, IGCSE drama moderator and GCSE drama examiner. Introduction We live in an increasingly ‘cross-genre’ environment where things are mixed, sampled and mashed up. History, time, roles, arts, technology and our cultural and social contexts are in flux. Postmodernism expresses many of the ways in which this is happening. As the reformed A levels come on stream, postmodernism may appear to have little place except in BTECs. However, performing arts in general and drama in particular require knowledge and understanding of wider contexts, reinterpretations, devising and study of texts from different periods. Exploring postmodernism is an excellent way to open up a world of artistic interest, exploration, experiment and mash-ups for students who are 16 and over. Although different in details, the Drama and Theatre A level specifications offered for teaching from 2016 (first exam in 2018) are similar in intention and breadth of study. All require exploration of some predetermined and some centre-chosen texts, a variety of leading practitioners, and devising and reinterpretations. Using postmodernism broadens students’ viewpoints, ideas and practical experiences of using art forms to express material. Exam preparation aside, this scheme serves as an -

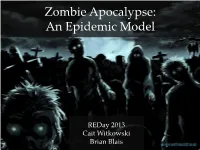

Zombie Apocalypse: an Epidemic Model

Zombie Apocalypse: An Epidemic Model. REDay 2013. Cait Witkowski, Brian Blais.

Overview: • Our interest • Zombie basics • Epidemic (SIR) model • Munz et al., 2009 • Modifications

What is a zombie? • “Undead” • Eat human flesh • Infect healthy • Difficult to kill (destroy brain). (Photo credit: thewalkingdeadstrream.net)

What is a zombie? Main dynamics: 1. How you become a zombie: Sick, Bitten, Die. 2. How you get rid of zombies: Cure, Death.

Epidemic Model: • Outbreak • Spread • Control • Vaccination

Epidemic Model: Susceptible (S), Infected (I), Recovered (R); together they make up the total population. (Photo credit: http://whatthehealthmag.wordpress.com)

Flow from S to I at a constant rate: (change in quantity S) = -(constant), so S' = -θ and I' = +θ.

Epidemic Model, flow from I to R at rate ζI: I' = -ζI, R' = +ζI.

Epidemic Model, flow from S to I at rate βSI: S' = -βSI, I' = +βSI.

Epidemic Model, full chain S → I → R with rates βSI and ζI: S' = -βSI, I' = +βSI - ζI, R' = +ζI.

Epidemic Model: Example of SIR dynamics for influenza (Sebastian Bonhoeffer, SIR models of epidemics).

Epidemic Model, Modifications: • Death rates • Latent periods (SEIS) • Ability to recover (SEIR) • Ability to become susceptible again (SIRS)

“When Zombies Attack!: Mathematical Modelling of an Outbreak Zombie Infection” Munz, Hudea, Imad, Smith (2009). Goals: • Model a zombie attack, using biological assumptions based on popular zombie movies • Determine equilibria and their stability • Illustrate the outcome with numerical solutions • Introduce epidemic modeling with fun example

“When Zombies Attack!: Mathematical Modelling of an Outbreak Zombie Infection” Munz, Hudea, Imad,
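The three SIR equations from the slides (S' = -βSI, I' = +βSI - ζI, R' = +ζI) can be stepped forward numerically. The following is a minimal sketch, not the presenters' code: it assumes a simple forward-Euler scheme, populations expressed as fractions of the total, and purely illustrative parameter values (β = 0.5, ζ = 0.1) that do not come from the slides. The function name `simulate_sir` is hypothetical.

```python
def simulate_sir(s0, i0, r0, beta, zeta, dt=0.01, steps=5000):
    """Integrate S' = -beta*S*I, I' = beta*S*I - zeta*I, R' = zeta*I.

    Uses forward Euler with a fixed step dt (an assumption made for
    simplicity; the slides do not name a numerical method).
    Returns a list of (time, S, I, R) tuples.
    """
    s, i, r = float(s0), float(i0), float(r0)
    history = [(0.0, s, i, r)]
    for n in range(1, steps + 1):
        new_infections = beta * s * i * dt  # flow S -> I during this step
        removals = zeta * i * dt            # flow I -> R during this step
        s -= new_infections
        i += new_infections - removals
        r += removals
        history.append((n * dt, s, i, r))
    return history


if __name__ == "__main__":
    # Illustrative values only: 1% initially infected, beta and zeta assumed.
    run = simulate_sir(0.99, 0.01, 0.0, beta=0.5, zeta=0.1)
    peak_time, _, peak_i, _ = max(run, key=lambda row: row[2])
    print(f"peak infected fraction {peak_i:.3f} at t = {peak_time:.2f}")
    print(f"final S, I, R = {run[-1][1]:.3f}, {run[-1][2]:.3f}, {run[-1][3]:.3f}")
```

Because every amount removed from one compartment is added to the next, S + I + R stays constant throughout the run, which matches the "Total Pop" picture in the slides. A smaller `dt` or a higher-order integrator would give a more accurate trajectory.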