Multimedia 1 Contents

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Desktop Migration and Administration Guide

Red Hat Enterprise Linux 7 Desktop Migration and Administration Guide. GNOME 3 desktop migration planning, deployment, configuration, and administration in RHEL 7. Last Updated: 2021-05-05.

Marie Doleželová, Red Hat Customer Content Services, [email protected]; Petr Kovář, Red Hat Customer Content Services, [email protected]; Jana Heves, Red Hat Customer Content Services.

Legal Notice: Copyright © 2018 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

mDNS/DNS-SD Tutorial

In this tutorial, we describe how to use mDNS/DNS-SD on a Raspberry Pi. mDNS/DNS-SD is a protocol for service discovery in a local area network. It is standardized in RFCs 6762 [1] and 6763 [2]. The protocol is also known under Apple's Bonjour trademark, or as Zeroconf. On Linux, it is implemented in the avahi package.

[1] http://tools.ietf.org/html/rfc6762
[2] http://tools.ietf.org/html/rfc6763

About mDNS/DNS-SD

There are several freely available implementations of mDNS/DNS-SD:

1. avahi – Linux implementation (http://www.avahi.org/)
2. jmDNS – Java implementation (http://jmdns.sourceforge.net/)
3. Bonjour – Mac OS (installed by default)
4. Bonjour – Windows (https://support.apple.com/kb/DL999?locale=en_US)

During this course, we will use only avahi. However, all of the aforementioned implementations are compatible with one another.

Avahi installation

avahi is available as a package for Raspbian. Install it with:

sudo apt-get install avahi-daemon avahi-utils

Avahi usage

avahi-daemon is the main process responsible for the proper operation of the protocol. It handles interface configuration and network messaging. A user can control the daemon with command line utilities or via D-Bus. This document describes the former option; for the latter, see http://www.avahi.org/wiki/Bindings.

Publishing services

avahi-publish-service is the command for publishing services. The syntax is:

avahi-publish-service SERVICE-NAME _APPLICATION-PROTOCOL._TRANSPORT-PROTOCOL PORT "DESCRIPTION" --sub SUBPROTOCOL

For instance, the command:

avahi-publish-service light _coap._udp 5683 "/mylight" --sub _floor1._sub._coap._udp

will publish a service named 'light', which uses the CoAP protocol over UDP on port 5683.
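The publishing syntax described in the tutorial excerpt can be sketched as a small Python helper that assembles the argument list for avahi-publish-service. This is only an illustration under stated assumptions: the function name build_publish_command and its parameters are hypothetical and not part of avahi, the argument order simply mirrors the tutorial's syntax line, and the subtype label format is inferred from the tutorial's single example. The helper only builds the command; it does not run it.

```python
import shlex
from typing import List, Optional


def build_publish_command(
    name: str,
    app_protocol: str,
    transport: str,
    port: int,
    description: str,
    subtype: Optional[str] = None,
) -> List[str]:
    """Assemble arguments in the order given by the tutorial's syntax line.

    Hypothetical helper for illustration; not part of avahi itself.
    """
    if transport not in ("udp", "tcp"):
        raise ValueError("transport must be 'udp' or 'tcp'")
    if not 0 < port < 65536:
        raise ValueError("port must be in 1..65535")

    # DNS-SD service types have the form _<application>._<transport>
    service_type = f"_{app_protocol}._{transport}"
    command = ["avahi-publish-service", name, service_type, str(port), description]

    if subtype is not None:
        # Assumption: label format copied from the tutorial's example,
        # i.e. _<subtype>._sub._<application>._<transport>
        command += ["--sub", f"_{subtype}._sub.{service_type}"]
    return command


# Rebuild the tutorial's example command for the 'light' CoAP service.
command = build_publish_command("light", "coap", "udp", 5683, "/mylight", subtype="floor1")
print(shlex.join(command))
```

On a machine with avahi-utils installed, the resulting list could be passed to subprocess.run; building a list instead of a shell string avoids quoting problems when a service name or description contains spaces.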

106 INKSCAPE – FREE VECTOR GRAPHICS EDITOR Grabareva A

INKSCAPE – FREE VECTOR GRAPHICS EDITOR. Grabareva A. Supervisor: Voevodina M. E-mail: [email protected], [email protected] Kharkiv, О.М. Beketov National University of Urban Economy in Kharkiv

Inkscape is a powerful, cross-platform, free and open-source vector graphics editor that is competitive in many respects and uses the SVG format as its main working standard. It is convenient for creating both artistic and technical illustrations. Inkscape is an analogue of graphics editors such as Corel Draw, Adobe Illustrator, Xara X and Freehand.

Intended use:
- illustrations for office circulars, presentations, creation of logos, business cards, posters;
- technical illustrations (diagrams, graphics, etc.);
- vector graphics for high-quality printing (with preliminary import of SVG into Scribus);
- web graphics, from banners to site layouts, icons for applications and website buttons;
- graphics for games.

Main characteristics of Inkscape:
- the program is free and distributed under the GNU General Public License;
- cross-platform;
- the program supports the following document formats: import covers almost all popular and frequently used formats (SVG, JPEG, GIF, BMP, EPS, PDF, PNG, ICO) and many additional ones, such as SVGZ, EMF, PostScript, AI, Dia, Sketch, TIFF, XPM, WMF, WPG, GGR, ANI, CUR, PCX, PNM, RAS, TGA, WBMP, XBM; for export, the main formats are PNG and SVG, with many additional ones: EPS, PostScript, PDF, Dia, AI, Sketch, POV-Ray, LaTeX, OpenDocument Draw, GPL, EMF, POV, DXF;
- there is support for layers;

State of Linux Audio in 2009 Linux Plumbers Conference 2009

State of Linux Audio in 2009. Linux Plumbers Conference 2009. Lennart Poettering, [email protected], September 2009.

Who Am I? Software Engineer at Red Hat, Inc. Developer of PulseAudio, Avahi and a few other Free Software projects. http://0pointer.de/lennart/ [email protected] IRC: mezcalero

Perspective

So, what happened since last LPC?

RIP: EsounD is officially gone.

RIP: OSS is officially gone. (at least on Fedora)

Audio API Guide: http://0pointer.de/blog/projects/guide-to-sound-apis

We now use realtime scheduling on the desktop by default. We also make use of high-resolution timers on the desktop by default.

2s Buffers

We moved a couple of things into the audio server: Timer-based audio scheduling; mixing; flat volume/volume range and granularity extension; integration of volume sliders; mixer abstraction; monitoring

Mixer abstraction? Due to user-friendliness, i18n, meta data (icons, ...)

udev integration: meta data, by-path/by-id/..

Release Notes for Fedora 15

Fedora 15 Release Notes. Release Notes for Fedora 15. Edited by The Fedora Docs Team. Copyright © 2011 Red Hat, Inc. and others. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https://fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. All other trademarks are the property of their respective owners.

VNC User Guide 7 About This Guide

VNC® User Guide. Version 5.3, December 2015.

Trademarks: RealVNC, VNC and RFB are trademarks of RealVNC Limited and are protected by trademark registrations and/or pending trademark applications in the European Union, United States of America and other jurisdictions. Other trademarks are the property of their respective owners. Protected by UK patent 2481870; US patent 8760366.

Copyright: Copyright © RealVNC Limited, 2002-2015. All rights reserved. No part of this documentation may be reproduced in any form or by any means or be used to make any derivative work (including translation, transformation or adaptation) without explicit written consent of RealVNC.

Confidentiality: All information contained in this document is provided in commercial confidence for the sole purpose of use by an authorized user in conjunction with RealVNC products. The pages of this document shall not be copied, published, or disclosed wholly or in part to any party without RealVNC’s prior permission in writing, and shall be held in safe custody. These obligations shall not apply to information which is published or becomes known legitimately from some source other than RealVNC.

Contact: RealVNC Limited, Betjeman House, 104 Hills Road, Cambridge CB2 1LQ, United Kingdom. www.realvnc.com

Contents
About This Guide
Chapter 1: Introduction
Principles of VNC remote control
Getting two computers ready to use
Connectivity and feature matrix
What to read next
Chapter 2: Getting Connected
Step 1: Ensure VNC Server is running on the host computer
Step 2: Start VNC

Camcorder Multimedia Framework with Linux and GStreamer

Camcorder multimedia framework with Linux and GStreamer. W. H. Lee, E. K. Kim, J. J. Lee, S. H. Kim, S. S. Park. SWL, Samsung Electronics. [email protected]

Abstract: Along with recent rapid technical advances, user expectations for multimedia devices have been changed from basic functions to many intelligent features. In order to meet such requirements, the product requires not only a powerful hardware platform, but also a software framework based on appropriate OS, such as Linux, supporting many rich development features. In this paper, a camcorder framework is introduced that is designed and implemented by making use of open source middleware in Linux. Many potential developers can be referred to this multimedia framework for camcorder and other similar product development. The overall framework architecture as well as communication mechanisms are described in detail. Furthermore, many methods implemented to improve the system performance are addressed as well.

[Figure 1: Architecture diagram of camcorder multimedia framework. Labels: Application Layer (Applications); Middleware Layer (Multimedia Middleware, Sequencer, Graphics UI, Connectivity, DVD FS, GStreamer); OSAL; HAL; OS Layer (Linux Kernel, Device Drivers, Software codecs); Hardware Layer (Camcorder hardware platform).]

The three software layers on any hardware platform are application, middleware, and OS. The architecture and functional operation of each layer is discussed. Additionally, some design and implementation issues are addressed from the perspective of system performance. The overall software architecture of a multimedia framework is described in Section 2. The framework design and its operation are introduced in detail in Section 3.

1 Introduction: It has recently become very popular to use the internet to

RZ/G Verified Linux Package for 64Bit Kernel Version 1.0.5-RT R01TU0278EJ0105 Rev

RZ/G Verified Linux Package for 64bit kernel, Version 1.0.5-RT. R01TU0278EJ0105 Rev. 1.05. Component list. Aug. 31, 2020.

Components listed below are installed to the rootfs which is used for booting target boards by building the Verified Linux Package according to the Release Note. Each image name corresponds to the target name used when running bitbake commands such as "bitbake core-image-weston". Column "weston (Gecko)" corresponds to the building procedure for HTML5 described in the Release Note for HTML5. Versions of some packages installed for HTML5 are different from the other images. Where the version of a specific package installed for HTML5 is different, it is written as "(Gecko: x.x.x)" in column "version".

Columns: package; images (minimal, bsp, weston, qt, weston (Gecko)); version

acl ✓ 2.2.52
adwaita-icon-theme-symbolic ✓ ✓ ✓ 3.24.0
alsa-conf ✓ ✓ ✓ ✓ 1.1.4.1
alsa-plugins-pulseaudio-conf ✓ 1.1.4
alsa-states ✓ ✓ ✓ ✓ 0.2.0
alsa-tools ✓ ✓ ✓ 1.1.3
alsa-utils ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-aconnect ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-alsactl ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-alsaloop ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-alsamixer ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-alsatplg ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-alsaucm ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-amixer ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-aplay ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-aseqdump ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-aseqnet ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-iecset ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-midi ✓ ✓ ✓ ✓ 1.1.4
alsa-utils-speakertest ✓ ✓ ✓ ✓ 1.1.4
at ✓ 3.1.20
attr ✓ 2.4.47
audio-init ✓ ✓ ✓ ✓ 1.0
avahi-daemon ✓ ✓ ✓ 0.6.32
avahi-locale-en-gb ✓ ✓ ✓ 0.6.32
base-files ✓ ✓ ✓ ✓ ✓ 3.0.14
base-files-dev ✓ ✓ ✓ 3.0.14
base-passwd ✓ ✓ ✓ ✓ ✓ 3.5.29
bash ✓ ✓ ✓ ✓ ✓ 3.2.57
bash-dev ✓ ✓ ✓ 3.2.57
bayer2raw ✓ ✓ ✓ 1.0
bc ✓ 1.06
bc-dev ✓ 1.06
bluez5 ✓ ✓ ✓ ✓ 5.46
bluez-alsa ✓ ✓ ✓ ✓ 1.0
bt-fw ✓ ✓ ✓ ✓ 8.7.1+git0+0ee619b598
busybox ✓ ✓ ✓ ✓ ✓ 1.30.1 (Gecko: 1.22.0)
busybox-hwclock ✓ ✓ ✓ ✓ ✓ 1.30.1 (Gecko: 1.22.0)
busybox-udhcpc ✓ ✓ ✓ ✓ ✓ 1.30.1 (Gecko: 1.22.0)
bzip2 ✓ ✓ ✓ 1.0.6

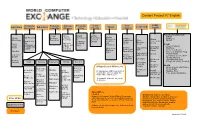

Content Project V7 English

Content Project V7 English Public Computer Entrepre- Climate Program- Solar Water Tech Leadership Agriculture Recycling Education neurship Change ming Energy Literacy Health Potential and Growth CD3W Videos BFOIT CD3W IEARN We Recycle Business Lessons Introduction Resources CD3W CD3W Resources Getting Where GirlRising Resources Resources Readings to on Solar Started with Class UNGEI How to Set Computer Energy on Water There Is No and Ubuntu Manage- Doctor Peace Up Nat’l Geo Program- 10.04 ment STEM Computer a Climate.gov ming Sanitation Corps UNESCO Khan Academy Books Refurbishing CLEAN Getting Empowering Center and more UN Lesson HIV/AIDS WikiSlice Plans on Started with Girls Library Ck-12 Textbooks Adrodok Water OpenOffice Intro to Computer Prog Empowering Medline Thinkersmith Vegetable How to Girls-School Computer Sci in a Box Garden Assemble a Computer Ubuntu Manual Life Skills Childhood LibreOffice Guides Healthy Illness Harvest Math Science Reference Writing Soc.Sci. Promoting Comp. for Class. Guidesl Powerful Health for People Health Wikipedia and Wikibooks Children Hesperian Health Guides Khan Video BlueMall Medline Learning Volunteer- Teach AIDS Khan Video Lessons Basic 20 Gigabytes with thousands of Health Medline Plus Lessons Spelling Center ism Activities for Language searchable articles using the Pop. Council Activities Ck-12 Kiwix offline wiki-reader Primary Gr. Ck-12 Textbooks Dictionary Common World Map Textbooks Sense Project HIV Toolkit Wiki HowTo Thousands of books for youth WikiSlice Compositio of all ages Unesco -Animals -

The GNOME Desktop Comes to HP-UX

GNOME on HP-UX Stormy Peters Hewlett-Packard Company 970-898-7277 [email protected] THE GNOME DESKTOP COMES TO HP-UX by Stormy Peters, Jim Leth, and Aaron Weber At the Linux World Expo in San Jose last August, a consortium of companies, including Hewlett-Packard, inaugurated the GNOME Foundation to further the goals of the GNOME project. An organization of open-source software developers, the GNOME project is the major force behind the GNOME desktop: a powerful, open-source desktop environment with an intuitive user interface, a component-based architecture, and an outstanding set of applications for both developers and users. The GNOME Foundation will provide resources to coordinate releases, determine future project directions, and promote GNOME through communication and press releases. At the same conference in San Jose, Hewlett-Packard also announced that GNOME would become the default HP-UX desktop environment. This will enhance the user experience on HP-UX, providing a full feature set and access to new applications, and also will allow commonality of desktops across different vendors' implementations of UNIX and Linux. HP will provide transition tools for migrating users from CDE to GNOME, and support for GNOME will be available from HP. Those users who wish to remain with CDE will continue to be supported. Hewlett-Packard, working with Ximian, Inc. (formerly known as Helix Code), will be providing the GNOME desktop on HP-UX. Ximian is an open-source desktop company that currently employs many of the original and current developers of GNOME, including Miguel de Icaza. They have developed and contributed applications such as Evolution and Red Carpet to GNOME. -

LibreOffice 10th Anniversary Digital Sovereignty & Open

LibreOffice 10th Anniversary: Digital Sovereignty & Open Document Standards. Italo Vignoli.

10 Years / 20 Years
July 19, 2000: Sun announces OpenOffice.org
Sept 28, 2010: OpenOffice.org community announces LibreOffice

Timeline
35 years since Marco Börries releases the first version of StarWriter in 1985
2009: Oracle acquires Sun

OpenOffice vs LibreOffice

SEPTEMBER 28, 2010: OpenOffice.org Community announces The Document Foundation. The community of volunteers developing and promoting OpenOffice.org sets up an independent Foundation to drive the further growth of the project. The brand "LibreOffice" has been chosen for the software going forward.

Gamalielsson, J. and Lundell, B. (2011) Open Source communities for long-term maintenance of digital assets: what is offered for ODF & OOXML?, in Hammouda, I. and Lundell, B. (Eds.) Proceedings of SOS 2011: Towards Sustainable Open Source, Tampere University of Technology, Tampere, ISBN 978-952-15-2718-0, ISSN 1797-836X.

LibreOffice 3.3 at FOSDEM 2011: Roadmap to the Future …
• Time based, six-monthly release train …
• Synchronised with the Linux distributions cadence
• i.e. a normal Free Software project
• Rapid fire (monthly) bug-fix release on stable branch

The Document Foundation Founding Principles: COPYLEFT LICENSE, NO CONTRIBUTOR AGREEMENT, MERITOCRACY, COMMUNITY GOVERNANCE, VENDOR INDEPENDENCE

LibreOffice Main Asset
Incredible Easy Hacks
Huge Mentoring Effort

[Chart: Cumulative Number of LibreOffice New Code Committers (New Hackers); y-axis 0 to 600; x-axis by month starting Sep 10]

Ubuntu Server Guide Basic Installation Preparing to Install

Ubuntu Server Guide

Welcome to the Ubuntu Server Guide! This site includes information on using Ubuntu Server for the latest LTS release, Ubuntu 20.04 LTS (Focal Fossa). For an offline version as well as versions for previous releases see below.

Improving the Documentation

If you find any errors or have suggestions for improvements to pages, please use the link at the bottom of each topic titled: “Help improve this document in the forum.” This link will take you to the Server Discourse forum for the specific page you are viewing. There you can share your comments or let us know about bugs with any page.

PDFs and Previous Releases

Below are links to the previous Ubuntu Server release server guides as well as an offline copy of the current version of this site: Ubuntu 20.04 LTS (Focal Fossa): PDF; Ubuntu 18.04 LTS (Bionic Beaver): Web and PDF; Ubuntu 16.04 LTS (Xenial Xerus): Web and PDF.

Support

There are a couple of different ways that the Ubuntu Server edition is supported: commercial support and community support. The main commercial support (and development funding) is available from Canonical, Ltd. They supply reasonably-priced support contracts on a per-desktop or per-server basis. For more information see the Ubuntu Advantage page. Community support is also provided by dedicated individuals and companies that wish to make Ubuntu the best distribution possible. Support is provided through multiple mailing lists, IRC channels, forums, blogs, wikis, etc. The large amount of information available can be overwhelming, but a good search engine query can usually provide an answer to your questions.