Learning Polytrees

Recommended publications

-

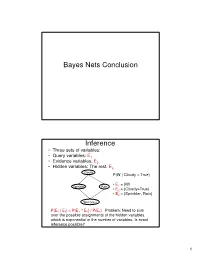

Bayes Nets Conclusion Inference

Bayes Nets Conclusion Inference

Three sets of variables:

- Query variables: $E_1$
- Evidence variables: $E_2$
- Hidden variables: the rest, $E_3$

Example (network over Cloudy, Sprinkler, Rain, Wet Grass): for the query $P(W \mid \text{Cloudy} = \text{True})$, $E_1 = \{W\}$, $E_2 = \{\text{Cloudy} = \text{True}\}$, $E_3 = \{\text{Sprinkler}, \text{Rain}\}$.

$$P(E_1 \mid E_2) = \frac{P(E_1 \wedge E_2)}{P(E_2)}$$

Problem: we need to sum over the possible assignments of the hidden variables, which is exponential in the number of variables. Is exact inference possible?

A Simple Case (network over A, B, C, D)

Suppose that we want to compute $P(D = d)$ from this network. Compute $P(D = d)$ by summing the joint probability over all possible values of the remaining variables A, B, and C:

$$P(D = d) = \sum_{a,b,c} P(A = a, B = b, C = c, D = d)$$

Decompose the joint by using the fact that it is the product of terms of the form $P(X \mid \text{Parents}(X))$:

$$P(D = d) = \sum_{a,b,c} P(D = d \mid C = c)\, P(C = c \mid B = b)\, P(B = b \mid A = a)\, P(A = a)$$

We can avoid computing the sum for all possible triplets (A, B, C) by distributing the sums inside the product:

$$P(D = d) = \sum_{c} P(D = d \mid C = c) \sum_{b} P(C = c \mid B = b) \sum_{a} P(B = b \mid A = a)\, P(A = a)$$

The innermost term $\sum_{a} P(B = b \mid A = a)\, P(A = a)$ depends only on b and can be written as a 2-valued function $f_A(b)$:

$$P(D = d) = \sum_{c} P(D = d \mid C = c) \sum_{b} P(C = c \mid B = b)\, f_A(b)$$

The term $\sum_{b} P(C = c \mid B = b)\, f_A(b)$ depends only on c and can be written as a 2-valued function $f_B(c)$ ….
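The elimination order in the excerpt above can be checked numerically. The following is a minimal sketch, assuming binary variables and a chain in which each variable's only parent is its predecessor (as the factorization implies). The probability tables and the function names `brute_force` and `eliminate` are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative values only).
p_a = [0.6, 0.4]                          # p_a[a] = P(A=a)
p_b_given_a = [[0.7, 0.3], [0.2, 0.8]]    # p_b_given_a[a][b] = P(B=b | A=a)
p_c_given_b = [[0.9, 0.1], [0.4, 0.6]]    # p_c_given_b[b][c] = P(C=c | B=b)
p_d_given_c = [[0.5, 0.5], [0.1, 0.9]]    # p_d_given_c[c][d] = P(D=d | C=c)


def brute_force(d):
    """Sum the full joint over every triplet (a, b, c)."""
    return sum(
        p_d_given_c[c][d] * p_c_given_b[b][c] * p_b_given_a[a][b] * p_a[a]
        for a, b, c in product(range(2), repeat=3)
    )


def eliminate(d):
    """Push the sums inside the product, eliminating A, then B, then C."""
    # f_a[b] = sum_a P(B=b | A=a) P(A=a)
    f_a = [sum(p_b_given_a[a][b] * p_a[a] for a in range(2)) for b in range(2)]
    # f_b[c] = sum_b P(C=c | B=b) f_a[b]
    f_b = [sum(p_c_given_b[b][c] * f_a[b] for b in range(2)) for c in range(2)]
    # P(D=d) = sum_c P(D=d | C=c) f_b[c]
    return sum(p_d_given_c[c][d] * f_b[c] for c in range(2))


for d in range(2):
    print(d, brute_force(d), eliminate(d))
```

Both functions should agree up to floating-point error. The second one touches each table once instead of enumerating all triplets, which is the saving the excerpt describes.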

Matroid Theory

MATROID THEORY. Hayley Hillman.

Contents: 1. Introduction to Matroids (1.1. Basic Graph Theory; 1.2. Basic Linear Algebra). 2. Bases (2.1. An Example in Linear Algebra; 2.2. An Example in Graph Theory). 3. Rank Function (3.1. The Rank Function in Graph Theory; 3.2. The Rank Function in Linear Algebra). 4. Independent Sets (4.1. Independent Sets in Graph Theory; 4.2. Independent Sets in Linear Algebra). 5. Cycles (5.1. Cycles in Graph Theory; 5.2. Cycles in Linear Algebra). 6. Vertex-Edge Incidence Matrix. References.

1. Introduction to Matroids. A matroid is a structure that generalizes the properties of independence. Relevant applications are found in graph theory and linear algebra. There are several ways to define a matroid, each related to the concept of independence. This paper will focus on the definitions of a matroid in terms of bases, the rank function, independent sets and cycles. Throughout this paper, we observe how both graphs and matrices can be viewed as matroids. Then we translate graph theory to linear algebra, and vice versa, using the language of matroids to facilitate our discussion. Many proofs for the properties of each definition of a matroid have been omitted from this paper, but you may find complete proofs in Oxley [2], Whitney [3], and Wilson [4]. The four definitions of a matroid introduced in this paper are equivalent to each other.

Tree Structures

Tree Structures (CSci 1112 – Algorithms and Data Structures, A. Bellaachia)

Definitions:

- A tree is a connected acyclic graph.
- A disconnected acyclic graph is called a forest.
- As a digraph, a tree is connected and has these properties:
  - There is exactly one node (the root) with in-degree 0.
  - All other nodes have in-degree 1.
  - A leaf is a node with out-degree 0.
  - There is exactly one path from the root to any leaf.
- The degree of a tree is the maximum out-degree of the nodes in the tree.
- If (X, Y) is a path, X is an ancestor of Y, and Y is a descendant of X.

Level of a node: levels are numbered from the root downward, starting at either 0 or 1 (figure: levels 0 or 1, 1 or 2, 2 or 3, 3 or 4).

Height or depth:

- The depth of a node is the number of edges from the root to the node.
- The root node has depth zero.
- The height of a node is the number of edges from the node to the deepest leaf.
- The height of a tree is the height of its root; it is the maximum level of any node in the tree.
- Leaf nodes have height zero.
- A tree with only a single node (hence both a root and a leaf) has depth and height zero.
- An empty tree (a tree with no nodes) has depth and height −1.
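The depth and height definitions above translate directly into code. This is a minimal sketch, assuming a rooted tree stored as a dictionary from each node to its list of children. That representation, the sample tree, and the names `node_height`, `node_depths` and `tree_height` are assumptions made for illustration, not part of the course notes.

```python
def node_height(children, node):
    """Number of edges from `node` down to its deepest leaf (a leaf has height 0)."""
    kids = children.get(node, [])
    if not kids:
        return 0
    return 1 + max(node_height(children, kid) for kid in kids)


def node_depths(children, root):
    """Map every node to its number of edges from the root (the root has depth 0)."""
    depths = {root: 0}
    stack = [root]
    while stack:
        node = stack.pop()
        for kid in children.get(node, []):
            depths[kid] = depths[node] + 1
            stack.append(kid)
    return depths


def tree_height(children, root=None):
    """Height of the whole tree; an empty tree (no root) has height -1."""
    return -1 if root is None else node_height(children, root)


# Hypothetical tree: Root has children X and Z; X has the single child Y.
sample = {"Root": ["X", "Z"], "X": ["Y"]}
print(tree_height(sample, "Root"))          # height of the tree
print(node_depths(sample, "Root")["Y"])     # depth of Y
print(tree_height({}))                      # empty tree
```

With depths counted from zero, the height of the tree equals the largest depth of any node, which matches the "maximum level" remark in the notes.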

A Simple Linear-Time Algorithm for Finding Path-Decompositions of Small Width

A Simple Linear-Time Algorithm for Finding Path-Decompositions of Small Width. Kevin Cattell, Michael J. Dinneen, Michael R. Fellows. Department of Computer Science, University of Victoria, Victoria, B.C., Canada V8W 3P6. Information Processing Letters 57 (1996) 197–203.

Outline:

1. Introduction: Motivation; History
2. Preliminary Definitions: Boundaried graphs; Path-decompositions; Topological tree obstructions
3. Pathwidth Algorithm: Main result; Linear-time algorithm; Proof of correctness; Other results

Motivation. Pathwidth is related to several VLSI layout problems: vertex separation (link), gate matrix layout, edge search number, ... Usefulness of bounded treewidth in:

- the study of graph minors (Robertson and Seymour)
- input restrictions for many NP-complete problems (fixed-parameter complexity)

History. The general problem(s) are NP-complete. Input: graph G, integer t. Question: is tree/path-width(G) ≤ t? Algorithmic development (fixed t):

- $O(n^2)$ nonconstructive treewidth algorithm by Robertson and Seymour (1986)
- $O(n^{t+2})$ treewidth algorithm due to Arnborg, Corneil and Proskurowski (1987)
- $O(n \log n)$ treewidth algorithm due to Reed (1992)
- $O(2^{t^2} n)$ treewidth algorithm due to Bodlaender (1993)
- $O(n \log^2 n)$ pathwidth algorithm due to Ellis, Sudborough and Turner (1994)

Matroids You Have Known

MATHEMATICS MAGAZINE. Matroids You Have Known. David L. Neel, Seattle University, Seattle, Washington 98122. Nancy Ann Neudauer, Pacific University, Forest Grove, Oregon 97116, nancy@pacificu.edu.

"Anyone who has worked with matroids has come away with the conviction that matroids are one of the richest and most useful ideas of our day." (Gian Carlo Rota [10])

Why matroids? Have you noticed hidden connections between seemingly unrelated mathematical ideas? Strange that finding roots of polynomials can tell us important things about how to solve certain ordinary differential equations, or that computing a determinant would have anything to do with finding solutions to a linear system of equations. But this is one of the charming features of mathematics: that disparate objects share similar traits. Properties like independence appear in many contexts. Do you find independence everywhere you look? In 1933, three Harvard Junior Fellows unified this recurring theme in mathematics by defining a new mathematical object that they dubbed matroid [4]. Matroids are everywhere, if only we knew how to look.

What led those junior fellows to matroids? The same thing that will lead us: matroids arise from shared behaviors of vector spaces and graphs. We explore this natural motivation for the matroid through two examples and consider how properties of independence surface. We first consider the two matroids arising from these examples, and later introduce three more that are probably less familiar. Delving deeper, we can find matroids in arrangements of hyperplanes, configurations of points, and geometric lattices, if your tastes run in that direction.

Incremental Dynamic Construction of Layered Polytree Networks

Incremental Dynamic Construction of Layered Polytree Networks. Keung-Chi Ng and Tod S. Levitt, IET Inc., 14 Research Way, Suite 3, E. Setauket, NY 11733.

Abstract. Certain classes of problems, including perceptual data understanding, robotics, discovery, and learning, can be represented as incremental, dynamically constructed belief networks. These automatically constructed networks can be dynamically extended and modified as evidence of new individuals becomes available. The main result of this paper is the incremental extension of the singly connected polytree network in such a way that the network retains its singly connected polytree structure after the changes. The algorithm is deterministic and is guaranteed to have a complexity of single node addition that is at most of order proportional to the number of nodes (or size) of the network.

Many exact probabilistic inference algorithms have been developed and refined. Among the earlier methods, the polytree algorithm (Kim and Pearl 1983, Pearl 1986) can efficiently update singly connected belief networks (or polytrees); however, it does not work (without modification) on multiply connected networks. In a singly connected belief network, there is at most one path (in the undirected sense) between any pair of nodes; in a multiply connected belief network, on the other hand, there is at least one pair of nodes that has more than one path between them. Despite the fact that the general updating problem for multiply connected networks is NP-hard (Cooper 1990), many propagation algorithms have been applied successfully to multiply connected networks, especially on networks that are sparsely connected. These methods include clustering (also known as node aggregation) (Chang and Fung 1989, Pearl 1988), node elimination
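The singly connected condition described above (at most one undirected path between any pair of nodes) is equivalent to the undirected skeleton of the network having no cycle. The sketch below tests that with a union-find structure. It illustrates the definition only and is not the incremental algorithm of the paper; the function name `is_singly_connected` and the two sample networks are assumptions for illustration.

```python
def is_singly_connected(nodes, edges):
    """Return True if no pair of nodes is joined by two undirected paths.

    `edges` is a list of directed arcs (parent, child); direction is ignored,
    so the check is that the undirected skeleton is a forest.
    """
    parent = {node: node for node in nodes}

    def find(node):
        # Follow parent links to the set representative, halving the path.
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    for u, v in edges:
        root_u, root_v = find(u), find(v)
        if root_u == root_v:
            # u and v were already connected, so this arc closes a second path.
            return False
        parent[root_u] = root_v
    return True


# A chain is a polytree; the diamond-shaped network is multiply connected.
chain = [("A", "B"), ("B", "C"), ("C", "D")]
diamond = [("Cloudy", "Sprinkler"), ("Cloudy", "Rain"),
           ("Sprinkler", "WetGrass"), ("Rain", "WetGrass")]
print(is_singly_connected("ABCD", chain))
print(is_singly_connected(["Cloudy", "Sprinkler", "Rain", "WetGrass"], diamond))
```

A node with several parents is still allowed, as long as those parents are not otherwise connected; that is what separates a polytree from a rooted tree.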

A Framework for the Evaluation and Management of Network Centrality

A Framework for the Evaluation and Management of Network Centrality. Vatche Ishakian, Dóra Erdős, Evimaria Terzi, Azer Bestavros.

Abstract. Network-analysis literature is rich in node-centrality measures that quantify the centrality of a node as a function of the (shortest) paths of the network that go through it. Existing work focuses on defining instances of such measures and designing algorithms for the specific combinatorial problems that arise for each instance. In this work, we propose a unifying definition of centrality that subsumes all path-counting based centrality definitions: e.g., stress, betweenness or paths centrality. We also define a generic algorithm for computing this generalized centrality measure for every node and every group of nodes in the network. Next, we define two optimization problems: k-Group Centrality Maximization and k-Edge Centrality Boosting. In the former, the task is to identify the subset of k nodes

… total number of shortest paths that go through it. Ever since, researchers have proposed different measures of centrality, as well as algorithms for computing them [1, 2, 4, 10, 11]. The common characteristic of these measures is that they quantify a node's centrality by computing the number (or the fraction) of (shortest) paths that go through that node. For example, in a network where packets propagate through nodes, a node with high centrality is one that "sees" (and potentially controls) most of the traffic.

In many applications, the centrality of a single node is not as important as the centrality of a group of nodes. For example, in a network of lobbyists, one might want to measure the combined centrality of a particular group of lobbyists.

Lowest Common Ancestors in Trees and Directed Acyclic Graphs

Lowest Common Ancestors in Trees and Directed Acyclic Graphs. Michael A. Bender, Martín Farach-Colton, Giridhar Pemmasani, Steven Skiena, Pavel Sumazin.

We study the problem of finding lowest common ancestors (LCA) in trees and directed acyclic graphs (DAGs). Specifically, we extend the LCA problem to DAGs and study the LCA variants that arise in this general setting. We begin with a clear exposition of Berkman and Vishkin's simple optimal algorithm for LCA in trees. The ideas presented are not novel theoretical contributions, but they lay the foundation for our work on LCA problems in DAGs. We present an algorithm that finds all-pairs-representative LCA in DAGs in $\tilde{O}(n^{2.688})$ operations, provide a transitive-closure lower bound for the all-pairs-representative-LCA problem, and develop an LCA-existence algorithm that preprocesses the DAG in transitive-closure time. We also present a suboptimal but practical $O(n^3)$ algorithm for all-pairs-representative LCA in DAGs that uses ideas from the optimal algorithms in trees and DAGs. Our results reveal a close relationship between the LCA, all-pairs-shortest-path, and transitive-closure problems. We conclude the paper with a short experimental study of LCA algorithms in trees and DAGs. Our experiments and source code demonstrate the elegance of the preprocessing-query algorithms for LCA in trees. We show that for most trees the suboptimal $\Theta(n \log n)$-preprocessing, $\Theta(1)$-query algorithm should be preferred, and demonstrate that our proposed $O(n^3)$ algorithm for all-pairs-representative LCA in DAGs performs well in both low and high density DAGs.

Trees

Trees. A tree is a graph which is (a) connected and (b) has no cycles (acyclic).

Lemma 1. Let the components of $G$ be $C_1, C_2, \ldots, C_r$, and let $\omega(G)$ denote the number of components. Suppose $e = (u, v) \notin E$, $u \in C_i$, $v \in C_j$.

(a) $i = j \Rightarrow \omega(G + e) = \omega(G)$.

(b) $i \neq j \Rightarrow \omega(G + e) = \omega(G) - 1$.

Proof. Every path $P$ in $G + e$ which is not in $G$ must contain $e$. Also, $\omega(G + e) \le \omega(G)$. Suppose $(x = u_0, u_1, \ldots, u_k = u, u_{k+1} = v, \ldots, u_\ell = y)$ is a path in $G + e$ that uses $e$. Then clearly $x \in C_i$ and $y \in C_j$. (a) follows as now no new relations $x \sim y$ are added. (b) The only possible new relations $x \sim y$ are for $x \in C_i$ and $y \in C_j$. But $u \sim v$ in $G + e$, and so $C_i \cup C_j$ becomes the (only) new component. $\square$

Lemma 2. $G = (V, E)$ is acyclic (a forest) with (tree) components $C_1, C_2, \ldots, C_k$, and $|V| = n$. Let $e = (u, v) \notin E$, $u \in C_i$, $v \in C_j$.

(a) $i = j \Rightarrow G + e$ contains a cycle.

(b) $i \neq j \Rightarrow G + e$ is acyclic and has one less component.

(c) $G$ has $n - k$ edges.

Proof. (a) $u, v \in C_i$ implies there exists a path $(u = u_0, u_1, \ldots, u_\ell = v)$ in $G$. So $G + e$ contains the cycle $u_0, u_1, \ldots, u_\ell, u_0$.

(b) Suppose $i \neq j$ and $G + e$ contains a cycle $C$. Then $e \in C$, else $C$ is a cycle of $G$. So $C = (u = u_0, u_1, \ldots, u_\ell = v, u_0)$. But then $G$ contains the path $(u_0, u_1, \ldots, u_\ell)$ from $u$ to $v$, a contradiction.
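The two lemmas can be checked on a small example by counting connected components before and after adding an edge. The following is a minimal sketch, assuming vertices numbered 0 to n−1 and an undirected edge list. The helper `count_components` and the sample forest are hypothetical and serve only as illustration.

```python
from collections import deque


def count_components(n, edges):
    """Count connected components of an undirected graph on vertices 0..n-1."""
    adjacency = [[] for _ in range(n)]
    for u, v in edges:
        adjacency[u].append(v)
        adjacency[v].append(u)

    seen = [False] * n
    components = 0
    for start in range(n):
        if seen[start]:
            continue
        components += 1          # `start` opens a new component
        seen[start] = True
        queue = deque([start])
        while queue:             # breadth-first search over that component
            x = queue.popleft()
            for y in adjacency[x]:
                if not seen[y]:
                    seen[y] = True
                    queue.append(y)
    return components


# Hypothetical forest on 6 vertices with tree components {0,1,2}, {3,4}, {5}.
forest = [(0, 1), (1, 2), (3, 4)]
k = count_components(6, forest)
print(k, len(forest) == 6 - k)                    # Lemma 2(c): n - k edges
print(count_components(6, forest + [(0, 2)]))     # same component: count unchanged
print(count_components(6, forest + [(2, 3)]))     # different components: one fewer
```

Adding the edge (0, 2) joins two vertices of the same tree, so it leaves the component count alone and closes a cycle; adding (2, 3) merges two trees into one.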

Minimally Connected Hypergraphs

MINIMALLY CONNECTED HYPERGRAPHS. Mark Budden, Josh Hiller, and Andrew Penland. arXiv:1901.04560v1 [math.CO], 14 Jan 2019.

Abstract. Graphs and hypergraphs are foundational structures in discrete mathematics. They have many practical applications, including the rapidly developing field of bioinformatics, and more generally, biomathematics. They are also a source of interesting algorithmic problems. In this paper, we define a construction process for minimally connected r-uniform hypergraphs, which captures the intuitive notion of building a hypergraph piece-by-piece, and a numerical invariant called the tightness, which is independent of the construction process used. Using these tools, we prove some fundamental properties of minimally connected hypergraphs. We also give bounds on their chromatic numbers and provide some results involving edge colorings. We show that every connected r-uniform hypergraph contains a minimally connected spanning subhypergraph and provide a polynomial-time algorithm for identifying such a subhypergraph.

1. Introduction. Graphs and hypergraphs provide many beautiful results in discrete mathematics. They are also extremely useful in applications. Over the last six decades, graphs and their generalizations have been used for modeling many biological phenomena, ranging in scale from protein-protein interactions, individualized cancer treatments, carcinogenesis, and even complex interspecial relationships [12, 15, 16, 19, 22]. In the last few years in particular, hypergraphs have found an increasingly prominent position in the biomathematical literature, as they allow scientists and practitioners to model complex interactions between arbitrarily many actors [22]. The flexibility that makes hypergraphs such a versatile tool complicates their analysis. Because of this, many different algorithms and metrics have been developed to assist with their application [18, 23].

Plane and Planar Graphs

1 Plane and Planar Graphs

Definition 1. A graph G(V, E) is called plane if

- V is a set of points in the plane;
- E is a set of curves in the plane such that
  1. every curve contains at most two vertices and these vertices are the ends of the curve;
  2. the intersection of every two curves is either empty, or one, or two vertices of the graph.

Definition 2. A graph is called planar if it is isomorphic to a plane graph. The plane graph which is isomorphic to a given planar graph G is said to be embedded in the plane. A plane graph isomorphic to G is called its drawing.

[Figure: G is a planar graph; H is a plane graph isomorphic to G.]

The adjacency list of graph F. Is it planar?

| Vertex | Neighbors |
|---|---|
| 1 | 4, 5, 6, 8, 9, 11 |
| 2 | 9, 7, 6, 10, 3 |
| 3 | 7, 11, 8, 2 |
| 4 | 1, 5, 9, 12 |
| 5 | 1, 12, 4 |
| 6 | 1, 2, 8, 10, 12 |
| 7 | 2, 3, 9, 11 |
| 8 | 1, 11, 3, 6, 10 |
| 9 | 7, 4, 1, 2, 12 |
| 10 | 2, 6, 8 |
| 11 | 1, 3, 8, 7 |
| 12 | 9, 5, 4, 6 |

What happens if we add edge (1,12)? Or edge (7,4)?

Definition 3. A set U in a plane is called open if for every x ∈ U, all points within some distance r from x belong to U. A region is an open set U which contains a polygonal u,v-curve for every two points u, v ∈ U.

On Hamiltonian Line-Graphs

ON HAMILTONIAN LINE-GRAPHS, by Gary Chartrand.

Introduction. The line-graph L(G) of a nonempty graph G is the graph whose point set can be put in one-to-one correspondence with the line set of G in such a way that two points of L(G) are adjacent if and only if the corresponding lines of G are adjacent. In this paper graphs whose line-graphs are eulerian or hamiltonian are investigated and characterizations of these graphs are given. Furthermore, necessary and sufficient conditions are presented for iterated line-graphs to be eulerian or hamiltonian. It is shown that for any connected graph G which is not a path, there exists an iterated line-graph of G which is hamiltonian.

Some elementary results on line-graphs. In the course of the article, it will be necessary to refer to several basic facts concerning line-graphs. In this section these results are presented. All the proofs are straightforward and are therefore omitted. In addition a few definitions are given. If x is a line of a graph G joining the points u and v, written x = uv, then we define the degree of x by deg u + deg v − 2. We note that if w is the point of L(G) which corresponds to the line x, then the degree of w in L(G) equals the degree of x in G. A point or line is called odd or even depending on whether it has odd or even degree. If G is a connected graph having at least one line, then L(G) is also a connected graph.
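The degree remark above is easy to confirm on a small graph. The sketch below builds the line-graph of a simple graph given as an edge list and compares each point's degree in L(G) with deg u + deg v − 2. It assumes a simple graph (no loops or repeated lines); the helper names `line_graph` and `line_degree` and the sample graph are hypothetical.

```python
from collections import Counter
from itertools import combinations


def line_graph(edges):
    """Adjacency sets of L(G): one point per line of G, adjacent iff the lines share an endpoint."""
    lines = [frozenset(edge) for edge in edges]
    adjacency = {line: set() for line in lines}
    for x, y in combinations(lines, 2):
        if x & y:                      # the two lines have a common point
            adjacency[x].add(y)
            adjacency[y].add(x)
    return adjacency


def line_degree(edges, line):
    """Degree of the line x = uv in G, defined as deg u + deg v - 2."""
    degree = Counter(point for edge in edges for point in edge)
    u, v = line
    return degree[u] + degree[v] - 2


# Hypothetical graph: a triangle 1-2-3 with a pendant line 3-4.
g = [(1, 2), (2, 3), (3, 1), (3, 4)]
lg = line_graph(g)
for edge in g:
    print(edge, len(lg[frozenset(edge)]), line_degree(g, edge))
```

For every line, the two printed numbers should coincide: each other line at u or at v contributes exactly one neighbor in L(G), and the line itself is subtracted once at each end.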