An Algorithm for the Exact Treedepth Problem James Trimble School of Computing Science, University of Glasgow Glasgow, Scotland, UK [email protected]

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

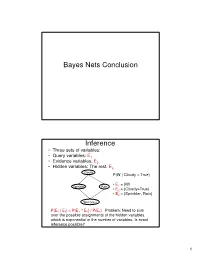

Bayes Nets Conclusion Inference

Bayes Nets Conclusion Inference • Three sets of variables: • Query variables: E1 • Evidence variables: E2 • Hidden variables: The rest, E3 Cloudy P(W | Cloudy = True) • E = {W} Sprinkler Rain 1 • E2 = {Cloudy=True} • E3 = {Sprinkler, Rain} Wet Grass P(E 1 | E 2) = P(E 1 ^ E 2) / P(E 2) Problem: Need to sum over the possible assignments of the hidden variables, which is exponential in the number of variables. Is exact inference possible? 1 A Simple Case A B C D • Suppose that we want to compute P(D = d) from this network. A Simple Case A B C D • Compute P(D = d) by summing the joint probability over all possible values of the remaining variables A, B, and C: P(D === d) === ∑∑∑ P(A === a, B === b,C === c, D === d) a,b,c 2 A Simple Case A B C D • Decompose the joint by using the fact that it is the product of terms of the form: P(X | Parents(X)) P(D === d) === ∑∑∑ P(D === d | C === c)P(C === c | B === b)P(B === b | A === a)P(A === a) a,b,c A Simple Case A B C D • We can avoid computing the sum for all possible triplets ( A,B,C) by distributing the sums inside the product P(D === d) === ∑∑∑ P(D === d | C === c)∑∑∑P(C === c | B === b)∑∑∑P(B === b| A === a)P(A === a) c b a 3 A Simple Case A B C D This term depends only on B and can be written as a 2- valued function fA(b) P(D === d) === ∑∑∑ P(D === d | C === c)∑∑∑P(C === c | B === b)∑∑∑P(B === b| A === a)P(A === a) c b a A Simple Case A B C D This term depends only on c and can be written as a 2- valued function fB(c) === === === === === === P(D d) ∑∑∑ P(D d | C c)∑∑∑P(C c | B b)f A(b) c b …. -

On Treewidth and Graph Minors

On Treewidth and Graph Minors Daniel John Harvey Submitted in total fulfilment of the requirements of the degree of Doctor of Philosophy February 2014 Department of Mathematics and Statistics The University of Melbourne Produced on archival quality paper ii Abstract Both treewidth and the Hadwiger number are key graph parameters in structural and al- gorithmic graph theory, especially in the theory of graph minors. For example, treewidth demarcates the two major cases of the Robertson and Seymour proof of Wagner's Con- jecture. Also, the Hadwiger number is the key measure of the structural complexity of a graph. In this thesis, we shall investigate these parameters on some interesting classes of graphs. The treewidth of a graph defines, in some sense, how \tree-like" the graph is. Treewidth is a key parameter in the algorithmic field of fixed-parameter tractability. In particular, on classes of bounded treewidth, certain NP-Hard problems can be solved in polynomial time. In structural graph theory, treewidth is of key interest due to its part in the stronger form of Robertson and Seymour's Graph Minor Structure Theorem. A key fact is that the treewidth of a graph is tied to the size of its largest grid minor. In fact, treewidth is tied to a large number of other graph structural parameters, which this thesis thoroughly investigates. In doing so, some of the tying functions between these results are improved. This thesis also determines exactly the treewidth of the line graph of a complete graph. This is a critical example in a recent paper of Marx, and improves on a recent result by Grohe and Marx. -

Treewidth I (Algorithms and Networks)

Treewidth Algorithms and Networks Overview • Historic introduction: Series parallel graphs • Dynamic programming on trees • Dynamic programming on series parallel graphs • Treewidth • Dynamic programming on graphs of small treewidth • Finding tree decompositions 2 Treewidth Computing the Resistance With the Laws of Ohm = + 1 1 1 1789-1854 R R1 R2 = + R R1 R2 R1 R2 R1 Two resistors in series R2 Two resistors in parallel 3 Treewidth Repeated use of the rules 6 6 5 2 2 1 7 Has resistance 4 1/6 + 1/2 = 1/(1.5) 1.5 + 1.5 + 5 = 8 1 + 7 = 8 1/8 + 1/8 = 1/4 4 Treewidth 5 6 6 5 2 2 1 7 A tree structure tree A 5 6 P S 2 P 6 P 2 7 S Treewidth 1 Carry on! • Internal structure of 6 6 5 graph can be forgotten 2 2 once we know essential information 1 7 about it! 4 ¼ + ¼ = ½ 6 Treewidth Using tree structures for solving hard problems on graphs 1 • Network is ‘series parallel graph’ • 196*, 197*: many problems that are hard for general graphs are easy for e.g.: – Trees NP-complete – Series parallel graphs • Many well-known problems Linear / polynomial time computable 7 Treewidth Weighted Independent Set • Independent set: set of vertices that are pair wise non-adjacent. • Weighted independent set – Given: Graph G=(V,E), weight w(v) for each vertex v. – Question: What is the maximum total weight of an independent set in G? • NP-complete 8 Treewidth Weighted Independent Set on Trees • On trees, this problem can be solved in linear time with dynamic programming. -

Exclusive Graph Searching Lélia Blin, Janna Burman, Nicolas Nisse

Exclusive Graph Searching Lélia Blin, Janna Burman, Nicolas Nisse To cite this version: Lélia Blin, Janna Burman, Nicolas Nisse. Exclusive Graph Searching. Algorithmica, Springer Verlag, 2017, 77 (3), pp.942-969. 10.1007/s00453-016-0124-0. hal-01266492 HAL Id: hal-01266492 https://hal.archives-ouvertes.fr/hal-01266492 Submitted on 2 Feb 2016 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Exclusive Graph Searching∗ L´eliaBlin Sorbonne Universit´es, UPMC Univ Paris 06, CNRS, Universit´ed'Evry-Val-d'Essonne. LIP6 UMR 7606, 4 place Jussieu 75005, Paris, France [email protected] Janna Burman LRI, Universit´eParis Sud, CNRS, UMR-8623, France. [email protected] Nicolas Nisse Inria, France. Univ. Nice Sophia Antipolis, CNRS, I3S, UMR 7271, Sophia Antipolis, France. [email protected] February 2, 2016 Abstract This paper tackles the well known graph searching problem, where a team of searchers aims at capturing an intruder in a network, modeled as a graph. This problem has been mainly studied for its relationship with the pathwidth of graphs. All variants of this problem assume that any node can be simultaneously occupied by several searchers. -

Tree Structures

Tree Structures Definitions: o A tree is a connected acyclic graph. o A disconnected acyclic graph is called a forest o A tree is a connected digraph with these properties: . There is exactly one node (Root) with in-degree=0 . All other nodes have in-degree=1 . A leaf is a node with out-degree=0 . There is exactly one path from the root to any leaf o The degree of a tree is the maximum out-degree of the nodes in the tree. o If (X,Y) is a path: X is an ancestor of Y, and Y is a descendant of X. Root X Y CSci 1112 – Algorithms and Data Structures, A. Bellaachia Page 1 Level of a node: Level 0 or 1 1 or 2 2 or 3 3 or 4 Height or depth: o The depth of a node is the number of edges from the root to the node. o The root node has depth zero o The height of a node is the number of edges from the node to the deepest leaf. o The height of a tree is a height of the root. o The height of the root is the height of the tree o Leaf nodes have height zero o A tree with only a single node (hence both a root and leaf) has depth and height zero. o An empty tree (tree with no nodes) has depth and height −1. o It is the maximum level of any node in the tree. CSci 1112 – Algorithms and Data Structures, A. -

A Simple Linear-Time Algorithm for Finding Path-Decompostions of Small Width

Introduction Preliminary Definitions Pathwidth Algorithm Summary A Simple Linear-Time Algorithm for Finding Path-Decompostions of Small Width Kevin Cattell Michael J. Dinneen Michael R. Fellows Department of Computer Science University of Victoria Victoria, B.C. Canada V8W 3P6 Information Processing Letters 57 (1996) 197–203 Kevin Cattell, Michael J. Dinneen, Michael R. Fellows Linear-Time Path-Decomposition Algorithm Introduction Preliminary Definitions Pathwidth Algorithm Summary Outline 1 Introduction Motivation History 2 Preliminary Definitions Boundaried graphs Path-decompositions Topological tree obstructions 3 Pathwidth Algorithm Main result Linear-time algorithm Proof of correctness Other results Kevin Cattell, Michael J. Dinneen, Michael R. Fellows Linear-Time Path-Decomposition Algorithm Introduction Preliminary Definitions Motivation Pathwidth Algorithm History Summary Motivation Pathwidth is related to several VLSI layout problems: vertex separation link gate matrix layout edge search number ... Usefullness of bounded treewidth in: study of graph minors (Robertson and Seymour) input restrictions for many NP-complete problems (fixed-parameter complexity) Kevin Cattell, Michael J. Dinneen, Michael R. Fellows Linear-Time Path-Decomposition Algorithm Introduction Preliminary Definitions Motivation Pathwidth Algorithm History Summary History General problem(s) is NP-complete Input: Graph G, integer t Question: Is tree/path-width(G) ≤ t? Algorithmic development (fixed t): O(n2) nonconstructive treewidth algorithm by Robertson and Seymour (1986) O(nt+2) treewidth algorithm due to Arnberg, Corneil and Proskurowski (1987) O(n log n) treewidth algorithm due to Reed (1992) 2 O(2t n) treewidth algorithm due to Bodlaender (1993) O(n log2 n) pathwidth algorithm due to Ellis, Sudborough and Turner (1994) Kevin Cattell, Michael J. Dinneen, Michael R. -

![Arxiv:2006.06067V2 [Math.CO] 4 Jul 2021](https://docslib.b-cdn.net/cover/6166/arxiv-2006-06067v2-math-co-4-jul-2021-416166.webp)

Arxiv:2006.06067V2 [Math.CO] 4 Jul 2021

Treewidth versus clique number. I. Graph classes with a forbidden structure∗† Cl´ement Dallard1 Martin Milaniˇc1 Kenny Storgelˇ 2 1 FAMNIT and IAM, University of Primorska, Koper, Slovenia 2 Faculty of Information Studies, Novo mesto, Slovenia [email protected] [email protected] [email protected] Treewidth is an important graph invariant, relevant for both structural and algo- rithmic reasons. A necessary condition for a graph class to have bounded treewidth is the absence of large cliques. We study graph classes closed under taking induced subgraphs in which this condition is also sufficient, which we call (tw,ω)-bounded. Such graph classes are known to have useful algorithmic applications related to variants of the clique and k-coloring problems. We consider six well-known graph containment relations: the minor, topological minor, subgraph, induced minor, in- duced topological minor, and induced subgraph relations. For each of them, we give a complete characterization of the graphs H for which the class of graphs excluding H is (tw,ω)-bounded. Our results yield an infinite family of χ-bounded induced-minor-closed graph classes and imply that the class of 1-perfectly orientable graphs is (tw,ω)-bounded, leading to linear-time algorithms for k-coloring 1-perfectly orientable graphs for every fixed k. This answers a question of Breˇsar, Hartinger, Kos, and Milaniˇc from 2018, and one of Beisegel, Chudnovsky, Gurvich, Milaniˇc, and Servatius from 2019, respectively. We also reveal some further algorithmic implications of (tw,ω)- boundedness related to list k-coloring and clique problems. In addition, we propose a question about the complexity of the Maximum Weight Independent Set prob- lem in (tw,ω)-bounded graph classes and prove that the problem is polynomial-time solvable in every class of graphs excluding a fixed star as an induced minor. -

Handout on Vertex Separators and Low Tree-Width K-Partition

Handout on vertex separators and low tree-width k-partition January 12 and 19, 2012 Given a graph G(V; E) and a set of vertices S ⊂ V , an S-flap is the set of vertices in a connected component of the graph induced on V n S. A set S is a vertex separator if no S-flap has more than n=p 2 vertices. Lipton and Tarjan showed that every planar graph has a separator of size O( n). This was generalized by Alon, Seymour and Thomas to any family of graphs that excludes some fixed (arbitrary) subgraph H as a minor. Theorem 1 There a polynomial time algorithm that given a parameter h and an n vertex graphp G(V; E) either outputs a Kh minor, or outputs a vertex separator of size at most h hn. Corollary 2 Let G(V; E) be an arbitrary graph with nop Kh minor, and let W ⊂ V . Then one can find in polynomial time a set S of at most h hn vertices such that every S-flap contains at most jW j=2 vertices from W . Proof: The proof given in class for Theorem 1 easily extends to this setting. 2 p Corollary 3 Every graph with no Kh as a minor has treewidth O(h hn). Moreover, a tree decomposition with this treewidth can be found in polynomial time. Proof: We have seen an algorithm that given a graph of treewidth p constructs a tree decomposition of treewidth 8p. Usingp Corollary 2, that algorithm can be modified to give a tree decomposition of treewidth 8h hn in our case, and do so in polynomial time. -

Discrete Mathematics Rooted Directed Path Graphs Are Leaf Powers

Discrete Mathematics 310 (2010) 897–910 Contents lists available at ScienceDirect Discrete Mathematics journal homepage: www.elsevier.com/locate/disc Rooted directed path graphs are leaf powers Andreas Brandstädt a, Christian Hundt a, Federico Mancini b, Peter Wagner a a Institut für Informatik, Universität Rostock, D-18051, Germany b Department of Informatics, University of Bergen, N-5020 Bergen, Norway article info a b s t r a c t Article history: Leaf powers are a graph class which has been introduced to model the problem of Received 11 March 2009 reconstructing phylogenetic trees. A graph G D .V ; E/ is called k-leaf power if it admits a Received in revised form 2 September 2009 k-leaf root, i.e., a tree T with leaves V such that uv is an edge in G if and only if the distance Accepted 13 October 2009 between u and v in T is at most k. Moroever, a graph is simply called leaf power if it is a Available online 30 October 2009 k-leaf power for some k 2 N. This paper characterizes leaf powers in terms of their relation to several other known graph classes. It also addresses the problem of deciding whether a Keywords: given graph is a k-leaf power. Leaf powers Leaf roots We show that the class of leaf powers coincides with fixed tolerance NeST graphs, a Graph class inclusions well-known graph class with absolutely different motivations. After this, we provide the Strongly chordal graphs largest currently known proper subclass of leaf powers, i.e, the class of rooted directed path Fixed tolerance NeST graphs graphs. -

Lowest Common Ancestors in Trees and Directed Acyclic Graphs1

Lowest Common Ancestors in Trees and Directed Acyclic Graphs1 Michael A. Bender2 3 Martín Farach-Colton4 Giridhar Pemmasani2 Steven Skiena2 5 Pavel Sumazin6 Version: We study the problem of finding lowest common ancestors (LCA) in trees and directed acyclic graphs (DAGs). Specifically, we extend the LCA problem to DAGs and study the LCA variants that arise in this general setting. We begin with a clear exposition of Berkman and Vishkin’s simple optimal algorithm for LCA in trees. The ideas presented are not novel theoretical contributions, but they lay the foundation for our work on LCA problems in DAGs. We present an algorithm that finds all-pairs-representative : LCA in DAGs in O~(n2 688 ) operations, provide a transitive-closure lower bound for the all-pairs-representative-LCA problem, and develop an LCA-existence algorithm that preprocesses the DAG in transitive-closure time. We also present a suboptimal but practical O(n3) algorithm for all-pairs-representative LCA in DAGs that uses ideas from the optimal algorithms in trees and DAGs. Our results reveal a close relationship between the LCA, all-pairs-shortest-path, and transitive-closure problems. We conclude the paper with a short experimental study of LCA algorithms in trees and DAGs. Our experiments and source code demonstrate the elegance of the preprocessing-query algorithms for LCA in trees. We show that for most trees the suboptimal Θ(n log n)-preprocessing Θ(1)-query algorithm should be preferred, and demonstrate that our proposed O(n3) algorithm for all- pairs-representative LCA in DAGs performs well in both low and high density DAGs. -

Trees a Tree Is a Graph Which Is (A) Connected and (B) Has No Cycles (Acyclic)

Trees A tree is a graph which is (a) Connected and (b) has no cycles (acyclic). 1 Lemma 1 Let the components of G be C1; C2; : : : ; Cr, Suppose e = (u; v) 2= E, u 2 Ci; v 2 Cj. (a) i = j ) !(G + e) = !(G). (b) i 6= j ) !(G + e) = !(G) − 1. (a) v u (b) u v 2 Proof Every path P in G + e which is not in G must contain e. Also, !(G + e) ≤ !(G): Suppose (x = u0; u1; : : : ; uk = u; uk+1 = v; : : : ; u` = y) is a path in G + e that uses e. Then clearly x 2 Ci and y 2 Cj. (a) follows as now no new relations x ∼ y are added. (b) Only possible new relations x ∼ y are for x 2 Ci and y 2 Cj. But u ∼ v in G + e and so Ci [ Cj becomes (only) new component. 2 3 Lemma 2 G = (V; E) is acyclic (forest) with (tree) components C1; C2; : : : ; Ck. jV j = n. e = (u; v) 2= E, u 2 Ci; v 2 Cj. (a) i = j ) G + e contains a cycle. (b) i 6= j ) G + e is acyclic and has one less com- ponent. (c) G has n − k edges. 4 (a) u; v 2 Ci implies there exists a path (u = u0; u1; : : : ; u` = v) in G. So G + e contains the cycle u0; u1; : : : ; u`; u0. u v 5 (a) v u Suppose G + e contains the cycle C. e 2 C else C is a cycle of G. C = (u = u0; u1; : : : ; u` = v; u0): But then G contains the path (u0; u1; : : : ; u`) from u to v – contradiction. -

The Size Ramsey Number of Graphs with Bounded Treewidth | SIAM

SIAM J. DISCRETE MATH. © 2021 Society for Industrial and Applied Mathematics Vol. 35, No. 1, pp. 281{293 THE SIZE RAMSEY NUMBER OF GRAPHS WITH BOUNDED TREEWIDTH\ast NINA KAMCEVy , ANITA LIEBENAUz , DAVID R. WOOD y , AND LIANA YEPREMYANx Abstract. A graph G is Ramsey for a graph H if every 2-coloring of the edges of G contains a monochromatic copy of H. We consider the following question: if H has bounded treewidth, is there a \sparse" graph G that is Ramsey for H? Two notions of sparsity are considered. Firstly, we show that if the maximum degree and treewidth of H are bounded, then there is a graph G with O(jV (H)j) edges that is Ramsey for H. This was previously only known for the smaller class of graphs H with bounded bandwidth. On the other hand, we prove that in general the treewidth of a graph G that is Ramsey for H cannot be bounded in terms of the treewidth of H alone. In fact, the latter statement is true even if the treewidth is replaced by the degeneracy and H is a tree. Key words. size Ramsey number, bounded treewidth, bounded-degree trees, Ramsey number AMS subject classifications. 05C55, 05D10, 05C05 DOI. 10.1137/20M1335790 1. Introduction. A graph G is Ramsey for a graph H, denoted by G ! H, if every 2-coloring of the edges of G contains a monochromatic copy of H. In this paper we are interested in how sparse G can be in terms of H if G ! H.