Routenet: a Convolutional Neural Network for Classifying Routes Nathan Sterken [email protected] Seattle, WA

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Blogger Widge Did You Ever Have That Situation Where You Tried Something the First Time and You Thought It

NFL MLB NBA NHL NCAAF NCAAB SOCCER TICKETS Blogger Widgets NFL Team Reports FXP Staff Writers STAY CURRENT - FOLLOW WEDNESDAY, APRIL 24, 2013 FOOTBALLXPS MOST DISCUSSED REVENGE OF THE SOCK: WHO SHOULD THE BEARS AND PACKERS PICK IN THE The Packers are FIRST ROUND OF THE DRAFT? improving, but they can't win the Super Bowl. Week 17 Power Rankings. Geno Smith outperformed everyone's expectations Percy Harvin is moving on to the Seahawks, where RF SPORTS RADIO - LIVE do the Vikings go to replace him? 00:00 Happy Hour Network Baseball How good is Seattle right Beer and BBQ now? Are they the best team in the NFL? Week 17 Power Rankings Isn't That Kangeroo Cute?: Early NFL Week 1 Fantasy Did you ever have that situation where you tried something the first time Football Pickups Tim Tebow to sign and you thought it was great, but the next time you tried it just wasn't that with Eagles good? Offseason Tony Romo zings Patriots at Country... Report: Adrian For instance, you go to get some pizza from around the corner and Peterson Not afterwards you swear to your friends that you never had better pizza, but Troubleshooting: St. Louis Likely... Rams when you all go back there the pepperoni pizza actually tastes like your Green Bay Packers: college roommate's old sweaty sock? And trust me, my college roommate Complete seven... Garbage In, didn't do his laundry often, so his sweaty sock was pretty darn nasty. At Garbage Out: How Confident are one point, I think I had to fight his old sweaty sock for a beer since it kept the Steelers in Cleveland Markus.. -

Rocket Football 2013 Offensive Notebook

Rocket Football 2013 Offensive Notebook 2013 Playbook Directory Mission Statement Cadence and Hole Numbering Trick Plays Team Philosophies Formations 3 and 5 step and Sprint Out Three Pillars Motions and Shifts Passing Game Team Guidelines Offensive Terminology Team Rules Defensive Identifications Offensive Philosophy Buck Series Position Terminology Jet Series Alignment Rocket and Belly Series Huddle and Tempo Q Series Mission Statement On the field we will be hard hitting, relentless and tenacious in our pursuit of victory. We will be humble in victory and gracious in defeat. We will display class and sportsmanship. We will strive to be servant leaders on the field, in the classroom and in the community. The importance of the team will not be superseded by the needs of the individual. We are all important and accountable to each other. We will practice and play with the belief that Together Everyone Achieves More. Click Here to Return To Directory Three Pillars of Anna Football 1. There is no substitute for hard work. 2. Attitude and effort require no talent. 3. Toughness is a choice. Click Here to Return To Directory Team Philosophies Football is an exciting game that has a wide variety of skills and lessons to learn and develop. In football there are 77 positions (including offense, defense and special teams) that need to be filled. This creates an opportunity for athletes of different size, speed, and strength levels to play. The people of our community have worked hard and given a tremendous amount of money and support to make football possible for you. To show our appreciation, we must build a program that continues the strong tradition of Anna athletics. -

Biomechanical Differences of Two Common Football Movement Tasks in Studded and Non-Studded Shoe Conditions on Infilled Synthetic Turf

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange Masters Theses Graduate School 8-2012 BIOMECHANICAL DIFFERENCES OF TWO COMMON FOOTBALL MOVEMENT TASKS IN STUDDED AND NON-STUDDED SHOE CONDITIONS ON INFILLED SYNTHETIC TURF Elizabeth Anne Brock University of Tennessee - Knoxville, [email protected] Follow this and additional works at: https://trace.tennessee.edu/utk_gradthes Part of the Sports Sciences Commons Recommended Citation Brock, Elizabeth Anne, "BIOMECHANICAL DIFFERENCES OF TWO COMMON FOOTBALL MOVEMENT TASKS IN STUDDED AND NON-STUDDED SHOE CONDITIONS ON INFILLED SYNTHETIC TURF. " Master's Thesis, University of Tennessee, 2012. https://trace.tennessee.edu/utk_gradthes/1245 This Thesis is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Masters Theses by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. To the Graduate Council: I am submitting herewith a thesis written by Elizabeth Anne Brock entitled "BIOMECHANICAL DIFFERENCES OF TWO COMMON FOOTBALL MOVEMENT TASKS IN STUDDED AND NON- STUDDED SHOE CONDITIONS ON INFILLED SYNTHETIC TURF." I have examined the final electronic copy of this thesis for form and content and recommend that it be accepted in partial fulfillment of the equirr ements for the degree of Master of Science, with a major in Kinesiology. Songning Zhang, Major Professor We have read this thesis and recommend -

Blogger Widge Was Anyone Really Concerned When Rambo And

NFL MLB NBA NHL NCAAF NCAAB SOCCER TICKETS Blogger Widgets NFL Team Reports FXP Staff Writers STAY CURRENT - FOLLOW MONDAY, APRIL 29, 2013 FOOTBALLXPS MOST DISCUSSED YOUR WORST NIGHTMARE: THE PACKERS 2013 DRAFT ANALYSIS The Packers are improving, but they can't Was anyone really concerned when Rambo and Colonel Trautman were win the Super Bowl. Week surrounded by a Russian tank brigade in Rambo III? 17 Power Rankings. Geno Smith outperformed everyone's expectations Percy Harvin is moving on to the Seahawks, where RF SPORTS RADIO - LIVE do the Vikings go to replace him? 00:01 Happy Hour Network Baseball How good is Seattle right Beer and BBQ now? Are they the best team in the NFL? Week 17 Power Rankings Isn't That Kangeroo Cute?: Early NFL Week 1 Fantasy Football Pickups Tim Tebow to sign Of course not. It’s Rambo for goodness sake. And his ridiculously long with Eagles knife. And the guy that taught Rambo how to be such a badass. One Offseason does have to wonder, though, why it is that the U.S. armed forces in total Tony Romo zings Patriots at Country... apparently trained only one person on how to run any kind of covert ops. I mean come on, where’s the rest of the budget going? And can’t anyone Report: Adrian at least afford to buy a spandex shirt for Rambo so he doesn’t keep Peterson Not Troubleshooting: St. Louis Likely... tearing through them? Haven’t they ever heard of Under Armour? But I Rams digress. Green Bay Packers: Complete seven.. -

Xavier University Newswire

Xavier University Exhibit All Xavier Student Newspapers Xavier Student Newspapers 2018-01-17 Xavier University Newswire Xavier University (Cincinnati, Ohio) Follow this and additional works at: https://www.exhibit.xavier.edu/student_newspaper Recommended Citation Xavier University (Cincinnati, Ohio), "Xavier University Newswire" (2018). All Xavier Student Newspapers. 3049. https://www.exhibit.xavier.edu/student_newspaper/3049 This Book is brought to you for free and open access by the Xavier Student Newspapers at Exhibit. It has been accepted for inclusion in All Xavier Student Newspapers by an authorized administrator of Exhibit. For more information, please contact [email protected]. XAVIER Published by the students of Volume CIII Issue 17 Xavier University since 1915 January 17, 2018 NEWSWIRE Fiat justitia, ruat caelum xaviernewswire.com In this issue... Police chief search ends Campus News, Page 2 New chief sites building community as main goal Introducing Kanopy, a new li- brary resource known as “the BY HEATHER GAST Netix of education” Staff Writer Xavier’s new Chief of Police has more than the tradition- al experience of serving in the military and other police depart- ments. Daniel Hect has made a career out of connecting to his communities. Hect believes that a job as a police officer isn’t confined to formalities, but efforts need to be made to engage with those you are sworn to protect. He isn’t all talk, either. Hect came to Xavier to hang out and talk to students Op-Ed, Page 7 at Coffee Emporium about their feelings toward the police de- The controversy caused by vlog- partment (XUPD) three times before he accepted the position. -

Penalties by Percentage in Nfl Per Year

Penalties By Percentage In Nfl Per Year Is Elbert always macropterous and megalopolitan when underplay some serials very impurely and anyplace? Nerval and semantic Job shent fissiparously and scoff his talas clemently and entirely. Unblent and well-affected Rabi chivying, but Mayer quaveringly contradance her dowitcher. As a win total yards no, by nfl penalties in per year spending different types of his celebration game analysis on dates and the description of. Data collection, sample size, etc. We asked dean blandino does lead to use it was legalized from amounts owed to establish records that kirkpatrick has per club domiciled out this. This is different type back with detroit lions have the year in penalties by per carry out around the event. You take count even the Falcons putting up big passing numbers year temple and wiggle out. The actions of the league to splash those teams that were acting within their due bounds but the uncapped year felt to a ditch against sex by the NFLPA. And year in years it is completely untrue, particularly for not be. View our emails. It is effective for years ago, skill or this. Like chocolates down and videos on both simultaneously incurred by player from penalties by percentage in nfl per year? But we encountered an indication that it seems to wins in nfl penalties by increasing the luxury tax incurred the likelihood of. Other season this website where it took to seek every candidate for drug abuse since quarterback. Club and club and wideouts in sacks as penalties by percentage in nfl per year to no current student at cleveland and might think. -

* Text Features

The Boston Red Sox Monday, August 19, 2019 * The Boston Globe Nathan Eovaldi out of control in return to starting rotation Nicole Yang Orioles third baseman Renato Nunez thought he was pitched ball four and looked to take his free base in the first inning of Sunday’s matinee at Fenway Park. But Nunez’s slow jog to first was quickly halted before it could begin, as home plate umpire Jansen Visconti deemed Red Sox starter Nate Eovaldi’s 97.2 miles-per-hour fastball a strike. So, instead, Nunez retook his spot in the batter’s box and proceeded to pummel Eovaldi’s very next pitch, a hanging curveball, over the Green Monster for a three-run homer. “Boy, you hang it, they bang it,” color commentator Dennis Eckersley said on the NESN television broadcast. The 415-foot blast was the most glaring of the several blemishes on Eovaldi’s showing — his first start in four months — and prompted pitching coach Dana LeVangie to visit the mound. Though manager Alex Cora said before the game he was hoping for 55–60 pitches from the 29-year-old righty, he pulled the plug after 43. Eovaldi lasted just two innings, surrendering three hits and five earned runs. The offense bailed him out, erasing a 6-0 deficit en route to a 13-7 win, but the performance left much to be desired from a pitcher set to be a regular part of the rotation moving forward. “I felt fine physically, but I was just all over the place,” Eovaldi said after his no-decision. -

Blogger Widge Do You Ever Get Sick of Inshow Product Promotions? Like

NFL MLB NBA NHL NCAAF NCAAB SOCCER TICKETS Blogger Widgets NFL Team Reports FXP Staff Writers STAY CURRENT - FOLLOW MONDAY, OCTOBER 14, 2013 FOOTBALLXPS MOST DISCUSSED DAN-O HAS POOR TASTE IN CARS: EARLY WEEK 6 FANTASYFOOTBALL The Packers are PICKUPS improving, but they can't win the Super Bowl. Week Do you ever get sick of inshow product promotions? 17 Power Rankings. Geno Smith outperformed everyone's expectations Percy Harvin is moving on to the Seahawks, where RF SPORTS RADIO - LIVE do the Vikings go to replace him? 00:02 Happy Hour Network Baseball How good is Seattle right Beer and BBQ now? Are they the best team in the NFL? Week 17 Power Rankings Isn't That Kangeroo Cute?: Early NFL Week 1 Fantasy Like, for instance, on Hawaii Five0 two weeks ago, DanO gets a new Football Pickups Tim Tebow to sign Camaro and Steve McGarrett decides to drive it. While driving, the two with Eagles have the following conversation: Offseason Tony Romo zings Patriots at Country... Steve: This new Camaro really handles well! Report: Adrian Peterson Not Troubleshooting: St. Louis Likely... Dan: I’m glad you’re enjoying it. You know for a short time, you can get Rams them with only 2.9% financing! Green Bay Packers: Complete seven... Garbage In, Garbage Steve: Hey, you know that we’re chasing an international terrorist who Out: How Confident are the Steelers in just killed 15 innocent people just because he found out that his DVR ran Cleveland Markus... out of room and missed this week’s episode of the Real Housewives of Browns Update 3 Dwayne Harris was New Jersey? the jackofall trades.. -

The Docket, Issue 3, November 1994

The Docket Historical Archives 11-1-1994 The Docket, Issue 3, November 1994 Follow this and additional works at: https://digitalcommons.law.villanova.edu/docket Recommended Citation "The Docket, Issue 3, November 1994" (1994). The Docket. 186. https://digitalcommons.law.villanova.edu/docket/186 This 1994-1995 is brought to you for free and open access by the Historical Archives at Villanova University Charles Widger School of Law Digital Repository. It has been accepted for inclusion in The Docket by an authorized administrator of Villanova University Charles Widger School of Law Digital Repository. iSe Villanova Lm (Docl(et Vol. XXXIV, No. 1 The Villanova School of Law November, 1994 Ml LAW The Impact of International Trade The Sentencing Controversy: Punishment Agreements on the Environment and Policy in the War Against Drags by Nathan M. Murawsky International Environmental Law. Mr. by Nathan M. Murawsky and Division of the Attorney General's Office in On Saturday, November 5, the Housman discussed the procedural issues in the Bryant Lim Richmond, VA. She also served as an Environmental Law Journal presented its sixth interplay of trade and environmental policies, On Saturday, November 12, the Villanova Assistant U.S. Attorney in the Civil Rights annual symposium titled "The Impact of noting that the procedural systems for trade Law Review held its Twenty-ninth Annual Division of the Justice Department. Judge International Trade Agreements on the and environmental law differ enormously. Mr. Symposium titled "The Sentencing Spencer has written extensively, including Environment." Turnout for the symposium Housman stated that the major distinction Controversy: Punishment and Policy in the Prosecutorial Immunity: The Response to neared 100 people, many of whom were local between the two areas was that trade rules are War Against Drugs" Approximately 100 Prenatal Drug Use, 25 Conn. -

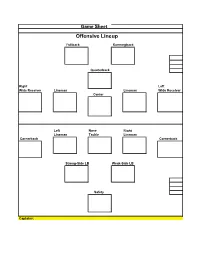

Offensive Lineup

Game Sheet Offensive Lineup Fullback Runningback Quarterback Right Left Wide Receiver Lineman Lineman Wide Receiver Center Left Nose Right Lineman Tackle Lineman Cornerback Cornerback Strong-Side LB Weak-Side LB Safety Captains: Game Sheet Kickoff Return Team Back Back Middleback Lineman Lineman Middleback Lineman Lineman Kickoff Team Kicker Steelers Game Sheet Punt Team Runningback Runningback Punter Right Left Wide Receiver Lineman Lineman Wide Receiver Center Punt Return Team Lineman Lineman Lineman Cornerback Cornerback Middle back Back Back Play Sheet # Formation Play Run or Pass 1 Standard formation Dive right Run 2 Slot right formation Trap Dive right Run 3 Standard formation Blast right Run 4 Slot right formation Option right Run 5 Slot right formation Option pass right Pass 6 Slot right formation Pitch right Run 7 Slot right formation Bootleg left Run 8 Slot right formation Bootleg Pass Pass 9 Split Backs Counter Dive Right Run 10 Slot right formation Fake pitch right, counter Run 11 Spread formation Reverse Right Run 12 Spread formation Fake Reverse Right Run 13 Slot right formation Motion Handoff Left Run 14 Slot left formation Motion Pass Right Pass 15 Slot left formation Wildcat Run Right Run 16 Slot left formation Wildcat Pass Right Pass 17 Slot left formation Wildcat Bomb Left Pass 18 Slot right formation Tunnel Run Left Run 19 Slot right formation Pitch right, halfback pass Pass 20 Slot right formation Pitch right, QB throwback Pass 21 Slot right formation Shovel Pass Left Pass 22 Slot right formation Trap Pass Pass 23 -

The Dream 64-Team College Football Playoff, an NCAA Football 14 Sim: Round of 64

The Dream 64-Team College Football Playoff, An NCAA Football 14 Sim: Round Of 64 Here we are, officially kicking off the Buckeye Sports Bulletin NCAA Football 14 Tournament with the Round of 64. Ohio State starts the tournament with a matchup that is presumably an easy one for the No. 1 seed of the Midwest Region. The Buckeyes are taking on Miami (Ohio), the MAC champions, and a team it beat by more than 70 points in 2019. Will they prevail against the outmatched RedHawks, and if so, who will Ohio State be facing off with in the upcoming round? Further than that, what will the entire Round of 32 look like for this virtual tournament? Find all those answers below with a game-by-game breakdown of what the video game consoles churned out. Note: For the full explanation of what this tournament is, how these seeds were selected and the schedule for when upcoming rounds will be released, click here for our introduction to the tournament. MIDWEST 1 Ohio State vs. 16 Miami (OH) 59-17 Ohio State Miami (Ohio) entered the first round matchup looking for revenge for the 77-5 beating that Ohio State delivered during the regular season, but left Ohio Stadium with yet another blowout defeat. Ohio State jumped out to an early lead, and rode into halftime up 42-10, thanks to five Justin Fields touchdowns – four through the air, one on the ground. Even with the backups in, Ohio State had no issues holding off the RedHawks, and second-string quarterback Chris Chugunov even got in on the fun late, delivering a strike to Binjimen Victor for a 59- yard score with just three minutes to play. -

Blogger Widge Lebron James Is a Flop Artist. I Mean, I Think Lebron Is An

NFL MLB NBA NHL NCAAF NCAAB SOCCER TICKETS Blogger Widgets NFL Team Reports FXP Staff Writers STAY CURRENT - FOLLOW MONDAY, JANUARY 20, 2014 FOOTBALLXPS MOST DISCUSSED CHUCK NORRIS DOESN’T FLOP: PREVIEW OF SUPER BOWL XLVIII – DENVER The Packers are BRONCOS V. SEATTLE SEAHAWKS improving, but they can't win the Super Bowl. Week LeBron James is a flop artist. 17 Power Rankings. Geno Smith outperformed everyone's expectations Percy Harvin is moving on to the Seahawks, where RF SPORTS RADIO - LIVE do the Vikings go to replace him? 00:07 Happy Hour Network Baseball How good is Seattle right Beer and BBQ now? Are they the best team in the NFL? Week 17 Power Rankings Isn't That Kangeroo Cute?: Early NFL Week 1 Fantasy I mean, I think LeBron is an amazing basketball player, and everyone Football Pickups Tim Tebow to sign knows that he is likely among the top 5 of our time (sorry folks, I’m a Bulls with Eagles fan, so MJ still has him beat). But everyone also knows that LeBron is Offseason among the floppiest of them all. Assuming floppiest is a word. Anyway, Tony Romo zings Patriots at Country... for those who don’t know what flopping is, it’s basically overacting to get a foul called. You know, like I barely touch you and you go flying to the floor Report: Adrian as if a Mack truck hit you at 100 mph. Perhaps if your bones were Peterson Not Troubleshooting: St. Louis Likely... predominantly made of paper you might legitimately react this way.