(ITWS) Demonstration and Validation Operational Test and Evaluation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Error Is the Feature: How to Forecast Lightning Using a Model Prediction Error [Applied Data Science Track, Category Evidential]

Christian Schön (Saarland Informatics Campus, Big Data Analytics Group), Jens Dittrich (Saarland Informatics Campus, Big Data Analytics Group), Richard Müller (German Meteorological Service, Offenbach, Germany)

ABSTRACT Despite the progress within the last decades, weather forecasting is still a challenging and computationally expensive task. Current satellite-based approaches to predict thunderstorms are usually based on the analysis of the observed brightness temperatures in different spectral channels and emit a warning if a critical threshold is reached. Recent progress in data science however demonstrates that machine learning can be successfully applied to many research fields in science, especially in areas dealing with large datasets. We therefore present a new approach to the problem of predicting thunderstorms based on machine learning. The core idea of our work is to use the error of two-dimensional optical flow algorithms applied to images of meteorological satellites as a feature for ma-

ACM Reference Format: Christian Schön, Jens Dittrich, and Richard Müller. 2019. The Error is the Feature: How to Forecast Lightning using a Model Prediction Error: [Applied Data Science Track, Category Evidential]. In Proceedings of 25th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’19). ACM, New York, NY, USA, 10 pages.

1 INTRODUCTION Weather forecasting is a very complex and challenging task requiring extremely complex models running on large supercomputers. Besides delivering forecasts for variables such as the temperature, one key task for meteorological services is the detection and prediction of severe weather conditions.

6.4 the Warning Time for Cloud-To-Ground Lightning in Isolated, Ordinary Thunderstorms Over Houston, Texas

Nathan C. Clements and Richard E. Orville, Texas A&M University, College Station, Texas

1. INTRODUCTION Lightning is a natural but destructive phenomenon that affects various locations on the earth’s surface every year. Uman (1968) defines lightning as a self-propagating atmospheric electrical discharge that results from the accumulation of positive and negative space charge, typically occurring within convective clouds. This electrical discharge can occur in two basic ways: cloud flashes and ground flashes (MacGorman and Rust 1998, p. 83). The latter affects human activity, property and life. Curran et al. (2000) find that lightning is ranked second behind flash and river flooding as causing the most deaths from any weather-related event in the United States. From 1959 to 1994, there was an average of 87 deaths per year from lightning. Curran et al. also rank the state of Texas as third in the number of fatalities from 1959 to 1994, behind Florida at number

shear and buoyancy, with small magnitudes of vertical wind shear likely to produce ordinary, convective storms. Weisman and Klemp (1986) describe the short-lived single cell storm as the most basic convective storm. Therefore, this ordinary convective storm type will be the focus of this study.

1.2 THUNDERSTORM CHARGE STRUCTURE The primary source of lightning is electric charge separated in a cloud type known as a cumulonimbus (Cb) (Uman 1987). Interactions between different types of hydrometeors within a cloud are thought to carry or transfer charges, thus creating net charge regions throughout a thunderstorm. Charge separation can either come about by inductive mechanisms (i.e. requires an electric field to induce charge on the surface of the hydrometeor) or non-inductive mechanisms (i.e.

Datasheet: Thunderstorm Local Lightning Sensor TSS928

Vaisala TSS928™ Thunderstorm Local Lightning Sensor

Vaisala TSS928™ is a local-area lightning detection sensor that can be integrated with automated surface weather observations.

Superior Performance in Local-area Lightning Tracking

Lightning-sensitive operations rely on Vaisala TSS928 sensors to provide critical local lightning information, both for meteorological applications as well as threat data, to facilitate advance warnings, initiate safety procedures, and isolate equipment with full confidence. The patented lightning algorithms of TSS928 provide the most precise ranging of any stand-alone lightning sensor available in the world today. The optical coincident requirement eliminates reporting of non-lightning events. The Vaisala Automated Lightning Alert and Risk Management (ALARM) system software is used to visualize TSS928 sensor data.

TSS928 detects:

- Optical, magnetic, and electrostatic pulses from lightning events with zero false alarms
- Cloud and cloud-to-ground lightning within 30 nautical miles (56 km)
- Cloud-to-ground lightning classified into three range intervals: 0 … 5 nmi (0 … 9 km), 5 … 10 nmi (9 … 19 km), 10 … 30 nmi (19 … 56 km)
- Cloud-to-ground lightning classified into directions: N, NE, E, SE, S, SW, W, and NW

Vaisala TSS928™ accurately reports the range and direction of cloud-to-ground lightning and provides cloud lightning counts. TSS928 can be used to integrate lightning reports with automated weather observation programs such as METAR.

Technical Data, Measurement Performance

Detection range: 30 nmi (56 km) radius from sensor location

Range resolution: Three range groups: 0 … 5 nmi (0 … 9 km), 5 … 10 nmi (9 … 19 km)

Support Services

Vaisala TSS928™ is fully supported by our Customer Support Center, Technical Service Group, and Field Service Engineering Team. Maintain optimal performance by purchasing a service agreement customized to your unique system requirements.
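The range intervals and eight compass directions listed in the datasheet excerpt can be illustrated with a short sketch. This is not Vaisala's algorithm or output format: the function names, the half-open treatment of interval boundaries (so exactly 30 nmi counts as out of range), and the 45-degree sectors centred on each compass point are all assumptions made for illustration. Only the interval limits, the direction labels, and the nautical-mile definition (1 nmi = 1.852 km) are taken as given.

```python
NMI_TO_KM = 1.852  # international nautical mile, exact by definition

# Range intervals from the datasheet excerpt, in nautical miles.
RANGE_BINS_NMI = [(0, 5), (5, 10), (10, 30)]

# Direction labels from the datasheet excerpt, clockwise from north.
OCTANTS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def classify_range(distance_nmi):
    """Return the (low, high) interval containing the distance.

    Assumption: intervals are half-open, [low, high). Distances at or
    beyond 30 nmi return None (outside the stated detection range).
    """
    if distance_nmi < 0:
        raise ValueError("distance must be non-negative")
    for low, high in RANGE_BINS_NMI:
        if distance_nmi < high:
            return (low, high)
    return None


def classify_direction(bearing_deg):
    """Map a bearing in degrees (0 = north, clockwise) to one of 8 labels.

    Assumption: each label covers a 45-degree sector centred on it.
    """
    index = int(((bearing_deg % 360) + 22.5) // 45) % 8
    return OCTANTS[index]


def bin_in_km(interval_nmi):
    """Convert an interval to whole kilometres, as the datasheet prints it."""
    low, high = interval_nmi
    return (round(low * NMI_TO_KM), round(high * NMI_TO_KM))


if __name__ == "__main__":
    strike = (7.2, 140.0)  # hypothetical strike: 7.2 nmi away, bearing 140 degrees
    interval = classify_range(strike[0])
    print(interval, bin_in_km(interval), classify_direction(strike[1]))
```

Rounding the converted limits to whole kilometres reproduces the kilometre figures printed beside each interval in the datasheet (9, 19, and 56 km).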

METAR/SPECI Reporting Changes for Snow Pellets (GS) and Hail (GR)

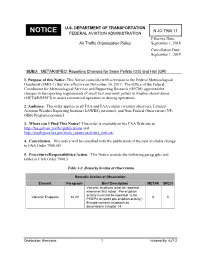

U.S. DEPARTMENT OF TRANSPORTATION, FEDERAL AVIATION ADMINISTRATION, Air Traffic Organization Policy. NOTICE N JO 7900.11. Effective Date: September 1, 2018. Cancellation Date: September 1, 2019.

SUBJ: METAR/SPECI Reporting Changes for Snow Pellets (GS) and Hail (GR)

1. Purpose of this Notice. This Notice coincides with a revision to the Federal Meteorological Handbook (FMH-1) that was effective on November 30, 2017. The Office of the Federal Coordinator for Meteorological Services and Supporting Research (OFCM) approved the changes to the reporting requirements of small hail and snow pellets in weather observations (METAR/SPECI) to assist commercial operators in deicing operations.
2. Audience. This order applies to all FAA and FAA-contract weather observers, Limited Aviation Weather Reporting Stations (LAWRS) personnel, and Non-Federal Observation (NF-OBS) Program personnel.
3. Where can I Find This Notice? This order is available on the FAA Web site at http://faa.gov/air_traffic/publications and http://employees.faa.gov/tools_resources/orders_notices/.
4. Cancellation. This notice will be cancelled with the publication of the next available change to FAA Order 7900.5D.
5. Procedures/Responsibilities/Action. This Notice amends the following paragraphs and tables in FAA Order 7900.5.

Table 3-2: Remarks Section of Observation

| Element | Paragraph | Brief Description | METAR | SPECI |
| --- | --- | --- | --- | --- |
| Volcanic Eruptions | 14.20 | Volcanic eruptions must be reported whenever first noted. Pre-eruption activity must not be reported. (Use PIREPs to report pre-eruption activity.) Encode volcanic eruptions as described in Chapter 14. | X | X |

Distribution: Electronic. Initiated By: AJT-2.

Whenever tornadoes, funnel clouds, or waterspouts begin, are in progress, end, or disappear from sight, the event should be described directly after the "RMK" element.

3.1 The 21st Century’s Airport Weather Reporting System

Patrick L. Kelly, Gary L. Stringer, Eric Tseo, DeLyle M. Ellefson, Michael Lydon and Diane Buckshnis, Coastal Environmental Systems, Seattle, Washington

1. INTRODUCTION Coastal Environmental Systems has built and is delivering a complete meteorological and RVR (combined) aviation weather monitoring system. The system is suitable for CAT I, II or III airports (or smaller) and measures and reports RVR in addition to all the meteorological parameters. The overall system is very flexible and expandable for a wide variety of aviation applications. The following paper describes this system and some of its abilities.

2. SYSTEM DESCRIPTION The system has multiple purposes:

- Display the required weather sensor data in a GUI
- Allow multiple displays to connect through a variety of means
- Allow users to have different levels of access
- Provide a Ground To Air voice output
- Provide a dial in voice output
- Feed data to other systems (where applicable)
- Provide remote maintenance monitoring via the Internet

sensor readings as well as regular hardware self-tests, such as checking the ability to communicate with a serial sensor. It calculates a variety of other parameters including cloud height, obscuration, dew point, altimeter setting, RVR and other relevant data. The following is a table of the sensors used and the manufacturers:

| Sensor | Mfg. |
| --- | --- |
| Wind Speed and Direction | RM Young & Taylor |
| Air Temperature | YSI |
| Relative Humidity | Rotronics |
| Pressure/Altimeter | Druck |
| Rainfall | Met-One |
| Precipitation ID | OSI |
| Ceilometer | Eliasson |
| Visibility | Belfort |
| Ambient Light | Belfort |
| Freezing Rain | Goodrich |
| Lightning Detection | GAI/Vaisala |
| Aspirated Temperature Shield | RM Young |

Table 1: Sensors and Manufacturers

The field station is polled by the TDAU and can be done through direct cable, short haul modem, fiber optic, UHF radio or a combination of these.

Lightning and the Space Program

National Aeronautics and Space Administration. NASA Facts.

Lightning protection systems

Kennedy Space Center operates extensive lightning protection and detection systems in order to keep its employees, the 184-foot-high space shuttle, the launch pads, payloads and processing facilities from harm. The protection systems and the detection systems incorporate equipment and personnel both at Kennedy and Cape Canaveral Air Force Station (CCAFS), located southeast of Kennedy.

Predicting lightning before it reaches Kennedy Space Center

Air Force 45th Weather Squadron – The first line of defense for lightning safety is accurately predicting when and where thunderstorms will occur. The Air Force 45th Weather Squadron provides all weather support for Kennedy/CCAFS operations, except space shuttle landings, which are supported by the National Oceanic and Atmospheric Administration

weather radar, are the primary Air Force thunderstorm surveillance tools for evaluating weather conditions that lead to the issuance and termination of lightning warnings.

The Launch Pad Lightning Warning System comprises 31 electric field mills uniformly distributed throughout Kennedy and Cape Canaveral. They serve as an early warning system for electrical charges building aloft or approaching as part of a storm system. These instruments are ground-level electric field strength monitors. Information from this warning system gives forecasters information on trends in electric field potential and the locations of highly charged clouds capable of supporting natural or triggered lightning. The data are valuable in detecting early storm electrification and the threat of triggered lightning for launch vehicles.

The Lightning Detection and Ranging system, developed by Kennedy, detects and locates lightning in three dimensions using a “time of arrival” computation on signals received at seven antennas.

JO 7900.5D Chg.1

U.S. DEPARTMENT OF TRANSPORTATION, FEDERAL AVIATION ADMINISTRATION. National Policy. CHANGE JO 7900.5D CHG 1. Effective Date: 11/29/2017

SUBJ: JO 7900.5D Surface Weather Observing

1. Purpose. This change amends practices and procedures in Surface Weather Observing and also defines the FAA Weather Observation Quality Control Program.
2. Audience. This order applies to all FAA and FAA-contract personnel, Limited Aviation Weather Reporting Stations (LAWRS) personnel, Non-Federal Observation (NF-OBS) Program personnel, as well as United States Coast Guard (USCG) personnel, as a component of the Department of Homeland Security and engaged in taking and reporting aviation surface observations.
3. Where I can find this order. This order is available on the FAA Web site at http://faa.gov/air_traffic/publications and on the MyFAA employee website at http://employees.faa.gov/tools_resources/orders_notices/.
4. Explanation of Changes. This change adds references to the new JO 7210.77, Non Federal Weather Observation Program Operation and Administration order and removes the old NF-OBS program from Appendix B. Backup procedures for manual and digital ATIS locations are prescribed. The FAA is now the certification authority for all FAA sponsored aviation weather observers. Notification procedures for the National Enterprise Management Center (NEMC) are added. Appendix B, Continuity of Service is added. Appendix L, Aviation Weather Observation Quality Control Program is also added.

PAGE CHANGE CONTROL CHART

| Remove Pages | Dated | Insert Pages | Dated |
| --- | --- | --- | --- |
| ii thru xi | 12/20/16 | ii thru xi | 11/15/17 |
| 2 | 12/20/16 | 2 | 11/15/17 |
| 5 | 12/20/17 | 5 | 11/15/17 |
| 7 | 12/20/16 | 7 | 11/15/17 |
| 12 | 12/20/16 | 12 | 11/15/17 |
| 15 | 12/20/16 | 15 | 11/15/17 |
| 19 | 12/20/16 | 19 | 11/15/17 |
| 34 | 12/20/16 | 34 | 11/15/17 |
| 43 thru 45 | 12/20/16 | 43 thru 45 | 11/15/17 |
| 138 | 12/20/16 | 138 | 11/15/17 |
| 148 | 12/20/16 | 148 | 11/15/17 |
| 152 thru 153 | 12/20/16 | 152 thru 153 | 11/15/17 |
| | | Appendix L | 11/15/17 |

Distribution: Electronic. Initiated By: AJT-2.

5.

GOES-R Lightning Detection Fact Sheet

NOAA SATELLITE AND INFORMATION SERVICE | GOES-R SERIES PROGRAM OFFICE

This fact sheet explains the lightning detection applications available from GOES-R Series satellites.

Why is it important to monitor lightning?

Rapid increases in total lightning (in-cloud and cloud-to-ground) activity often precede severe and tornadic thunderstorms. Characterizing lightning activity in storms allows forecasters to focus on intensifying storms before they produce damaging winds, hail or tornadoes. Lightning is a significant threat to life and property, and particularly hazardous for those working outdoors and participating in recreational activities (hiking, boating, golfing, etc.). Lightning is also a Global Climate Observing System essential climate variable, needed to understand and predict changes in climate. Using satellites, we can detect total lightning over vast geographic areas, both day and night, with near-uniform detection efficiency.

Lightning during a severe thunderstorm in Las Vegas, Nevada. Credit: David Lund

How do GOES-R Series satellites monitor lightning?

GOES-R Series satellites carry a Geostationary Lightning Mapper (GLM), the first operational lightning mapper flown in geostationary orbit. GLM is an optical instrument operating at near-infrared wavelengths that detects and maps total lightning activity continuously over the Americas and adjacent ocean regions. GLM data reveal convective storm development

Three Events Occurred During This Period Which Together Constitute A

7.3 METEOROLOGICAL AND OCEANIC INSTRUMENTATION AT SPACEPORT FLORIDA – OPPORTUNITIES FOR COASTAL RESEARCH

William P. Roeder, Diana L. Hajek, F. Clay Flinn, George A. Maul*, and Michael E. Fitzpatrick. 45th Weather Squadron, Patrick Air Force Base, FL. * Department of Marine and Environmental Systems, Florida Institute of Technology, Melbourne, FL

1. INTRODUCTION The east central coast of Florida has a collection of unique and dense meteorological and oceanographic sensors. Data from these instruments are routinely saved and thus represent a special resource for coastal atmospheric and oceanic research. This is especially true for thunderstorm prediction and convective processes, since central Florida is ‘Lightning Alley’ for the U.S. This is an area extremely rich in atmospheric boundary layer interactions and subsequent thunderstorm formation during the summer. Likewise, the nearby coastal waters are dynamically complex. The Gulf Stream flows nearby and can cause large horizontal sea-surface and vertical sea-to-air temperature gradients that vary in complex patterns over space and time with large meteorological and biological impacts.

2.1 Complex Space Launch Weather Requirements Weather has a large impact on many aspects of space launch, including: launch operations, ground processing operations in preparation for launch, post-launch, various special missions, and routine 24/7 weather watch and warning responsibilities.

During launch countdowns, the 45 WS forecasts and evaluates the Lightning Launch Commit Criteria (LCC) and User LCC (Hazen et al., 1995). The Lightning LCC are a set of complex rules to avoid rocket-triggered lightning (Roeder et al., 1999). All US Air Force and NASA space launches use the same Lightning LCC.

The User LCC are limits for three weather categories: 1) near surface winds so the rocket can safely clear the launch tower, 2) ceiling and visibility for

3.2 Total Lightning As an Indication of Convectively Induced Turbulence Potential in and Around Thunderstorms

Ryan Rogers*1, Lawrence Carey1, Kris Bedka2, Wayne Feltz3, Monte Bateman4, Geoffrey Stano5. 1 Atmospheric Science Department, University of Alabama-Huntsville; 2 SSAI/NASA LaRC; 3 CIMSS, University of Wisconsin-Madison; 4 USRA, Huntsville, Alabama; 5 NASA SPoRT, Huntsville, Alabama

1. INTRODUCTION Thunderstorms and their associated hazards pose a serious risk to general, commercial, and military aviation interests. The threat to aircraft from thunderstorms primarily manifests itself in three areas: icing, lightning, and turbulence. These hazards can be mitigated by avoiding thunderstorms by as wide a margin as is possible. With aviation hazard reduction in mind, the Federal Aviation Administration (FAA) provides some guidelines for pilots to follow to maintain a safe distance from thunderstorms in their 2012 publication of the Aeronautical Information Manual (AIM) in section 7-1-29 dealing with thunderstorm flying.

statistics and found that thunderstorm related flight delays cost the commercial aviation industry approximately 2 billion dollars annually in direct operating expenses. All threats to aviation associated with thunderstorms are a result of the kinetic energy contained in the thunderstorm updraft. Just the presence of lightning within a convective cell gives some indication as to the strength of the updraft. Total Lightning (IC+CG) information can reveal not only the location of the convective updraft but can also give clues as to the strength of the convection. Thunderstorms, by definition, contain lightning and the ability to detect and interpret lightning information is a valuable tool to determine which air space has increased

Lightning Enhancement Over Major Oceanic Shipping Lanes

Geophysical Research Letters, RESEARCH LETTER, 10.1002/2017GL074982

Joel A. Thornton1, Katrina S. Virts2, Robert H. Holzworth3, and Todd P. Mitchell4. 1 Department of Atmospheric Sciences, University of Washington, Seattle, Washington, USA; 2 NASA Marshall Space Flight Center, Huntsville, Alabama, USA; 3 Department of Earth and Space Sciences, University of Washington, Seattle, Washington, USA; 4 Joint Institute for the Study of the Atmosphere and Ocean, University of Washington, Seattle, Washington, USA

Key Points:

- Lightning is enhanced by about a factor of 2 directly over two of the busiest shipping lanes in the Indian Ocean and South China Sea
- Environmental factors such as convergence, sea surface temperature, or atmospheric stability do not explain the enhancement
- We hypothesize that ship exhaust particles change storm cloud microphysics, causing enhanced condensate in the mixed-phase region and lightning

Supporting Information:

- Supporting Information S1

Abstract Using 12 years of high-resolution global lightning stroke data from the World Wide Lightning Location Network (WWLLN), we show that lightning density is enhanced by up to a factor of 2 directly over shipping lanes in the northeastern Indian Ocean and the South China Sea as compared to adjacent areas with similar climatological characteristics. The lightning enhancement is most prominent during the convectively active season, November–April for the Indian Ocean and April–December in the South China Sea, and has been detectable from at least 2005 to the present. We hypothesize that emissions of aerosol particles and precursors by maritime vessel traffic lead to a microphysical enhancement of convection and storm electrification in the region of the shipping lanes.

Demanding Tactical Military

Versatile automated weather observation for Demanding Tactical Military

Hannu Kokko, B.Sc. (Eng.), Product Manager, Surface Weather Division, Vaisala Helsinki, Finland

Military forces have a universal need for automated weather stations that can be rapidly deployed and used in diverse field operations. They have also tended increasingly to use commercial-off-the-shelf (COTS) products. Vaisala’s new generation MAWS201M Tactical Meteorological Observation System meets the versatile requirements of military forces, and is a genuine COTS product.

Military forces have a universal need for rapid-deployment, automated weather stations that can be used in diverse field operations. Furthermore, they are increasingly using commercial-off-the-shelf (COTS) equipment. The challenge is to provide COTS systems that can be easily shipped, installed and field-upgraded with a variety of sensors to give full aviation support capabilities in the METAR for-

to be portable, capable of quick deployment worldwide, and operational in tactical situations in a variety of environments.

Two systems can be connected to a Windows NT-based workstation via hardwire or radio modems. The workstation displays the data numerically and graphically, codes aviation weather reports in METAR/SPECI, and archives and transmits the data for further processing.

perature and relative humidity and precipitation. In addition to the basic functions of powering and taking measurements from the sensors, the MAWS201M also processes the statistical calculations, performs data quality control, logs data into a secure Flash memory, and formats the data for output in application-specific formats.

The MAWS201M uses a 32-bit Motorola CPU, a 16-bit