A DEEP LEARNING MODEL TO PREDICT THUNDERSTORMS WITHIN 400 km² SOUTH

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Curriculum Vitae ROBERT JEFFREY TRAPP Department of Atmospheric

Curriculum Vitae ROBERT JEFFREY TRAPP, Department of Atmospheric Sciences, University of Illinois at Urbana-Champaign, 105 S. Gregory Street, Urbana, Illinois 61801, [email protected]

EDUCATION

- The University of Oklahoma, Ph.D. in Meteorology, 1994. Dissertation: Numerical Simulation of the Genesis of Tornado-Like Vortices. Principal Advisor: Prof. Brian H. Fiedler
- Texas A&M University, M.S. in Meteorology, 1989. Thesis: The Effects of Cloud Base Rotation on Microburst Dynamics-A Numerical Investigation. Principal Advisor: Prof. P. Das
- University of Missouri-Columbia, B.S. in Agriculture/Atmospheric Science, 1985

APPOINTMENTS

- Professor, University of Illinois at Urbana-Champaign, Department of Atmospheric Sciences, August 2014-current.
- Professor, Department of Earth and Atmospheric Sciences, Purdue University, August 2010-July 2014.
- Associate Professor, Department of Earth and Atmospheric Sciences, Purdue University, August 2003-August 2010
- Research Scientist, Cooperative Institute for Mesoscale Meteorological Studies, University of Oklahoma, and National Severe Storms Laboratory, July 1996-July 2003
- Visiting Scientist, National Center for Atmospheric Research, Mesoscale and Microscale Meteorology Division, August 1998-December 2002
- National Research Council Postdoctoral Research Fellow, at the National Severe Storms Laboratory, July 1994-June 1996

GRANTS AND FUNDING

- Principal Investigator, Modulation of convective-draft characteristics and subsequent tornado intensity by the environmental wind and thermodynamics within the Southeast U.S, NOAA, $287,529, 2017-2019 (pending formal approval by NOAA Grants Officer).
- Principal Investigator, Collaborative Research: Remote sensing of electrification, lightning, and mesoscale/microscale processes with adaptive ground observations during RELMPAGO, NSF-AGS, $636,947, 2017-2021.
- Co-Principal Investigator, Remote sensing of electrification, lightning, and mesoscale/microscale processes with adaptive ground observations (RELMPAGO), NSF-AGS, $23,940, 2016–2017.

3. Electrical Structure of Thunderstorm Clouds

3. Electrical Structure of Thunderstorm Clouds

Cloud Charge Structure and Mechanisms of Cloud Electrification

Figure: An isolated thundercloud in central New Mexico, with a rudimentary indication of how electric charge is thought to be distributed in and around the thundercloud, as inferred from remote and in situ observations. Adapted from Krehbiel (1986).

Figure: A vertical tripole representing the idealized gross charge structure of a thundercloud. The negative screening layer charges at the cloud top and the positive corona space charge produced at ground are ignored here.

$$E = 2E^{(-)}\cos(90^\circ - \alpha) = \frac{QH}{2\pi\varepsilon_0\,(H^2 + r^2)^{3/2}}, \qquad \cos(90^\circ - \alpha) = \sin\alpha, \qquad E^{(-)} = \frac{kQ}{R^2}, \quad k = \text{const}$$

Figure: Method of images for finding the electric field due to a negative point charge above a perfectly conducting ground at a field point located at the ground surface.

Figure: The electric field at ground due to the vertical tripole, labeled “Total”, as a function of the distance from the axis of the tripole. Also shown are the contributions to the total electric field from the three individual charges of the tripole. An upward directed electric field is defined as positive (according to the physics sign convention).

Figure, left panel (Electric Field Change Due to Negative Cloud-to-Ground Discharge; axes: Electric Field Change, kV/m, versus Distance, km): Electric field change at ground, due to the total removal of the negative charge of the vertical tripole via a cloud-to-ground discharge, as a function of distance from the axis of the tripole.

Figure, right panel (Electric Field Change Due to a Cloud Discharge; axes: Electric Field Change, kV/m, versus Distance, km): Electric field change at ground, due to the total removal of the negative and upper positive charges of the vertical tripole via a cloud discharge, as a function of distance from …
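The method-of-images expression in this excerpt can be evaluated numerically. The following is a minimal Python sketch, not taken from the source slides: the function names and the tripole charges and heights are hypothetical values chosen only for illustration, and the sign follows the excerpt's convention that an upward-directed field is positive.

```python
import math

EPS0 = 8.854e-12  # vacuum permittivity in F/m


def ground_field(q, h, r):
    """Vertical field (V/m, upward positive) at the ground surface.

    q: point charge in C, h: its height in m above a perfectly conducting
    plane, r: horizontal distance in m from the point below the charge.
    Uses the method-of-images result |E| = |q| h / (2 pi eps0 (h^2 + r^2)^(3/2)).
    A negative charge aloft gives an upward (positive) field at the ground.
    """
    return -q * h / (2.0 * math.pi * EPS0 * (h * h + r * r) ** 1.5)


# Hypothetical tripole, for illustration only: (charge in C, height in m).
TRIPOLE = [(40.0, 12_000.0), (-40.0, 7_000.0), (3.0, 2_000.0)]


def tripole_field(r, charges=TRIPOLE):
    """Total ground-level field as the sum of the individual contributions."""
    return sum(ground_field(q, h, r) for q, h in charges)


if __name__ == "__main__":
    for r_km in (0, 5, 10, 20):
        e_kv_per_m = tripole_field(r_km * 1000.0) / 1000.0
        print(f"r = {r_km:2d} km  E = {e_kv_per_m:+.2f} kV/m")
```

Under the same assumptions, the field change caused by removing one charge of the tripole is the negative of that charge's own contribution, for example `-ground_field(-40.0, 7_000.0, r)` for the negative charge.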


The Error Is the Feature: How to Forecast Lightning Using a Model Prediction Error [Applied Data Science Track, Category Evidential]

The Error is the Feature: How to Forecast Lightning using a Model Prediction Error [Applied Data Science Track, Category Evidential]. Christian Schön (Saarland Informatics Campus, Big Data Analytics Group), Jens Dittrich (Saarland Informatics Campus, Big Data Analytics Group), Richard Müller (German Meteorological Service, Offenbach, Germany).

ABSTRACT: Despite the progress within the last decades, weather forecasting is still a challenging and computationally expensive task. Current satellite-based approaches to predict thunderstorms are usually based on the analysis of the observed brightness temperatures in different spectral channels and emit a warning if a critical threshold is reached. Recent progress in data science however demonstrates that machine learning can be successfully applied to many research fields in science, especially in areas dealing with large datasets. We therefore present a new approach to the problem of predicting thunderstorms based on machine learning. The core idea of our work is to use the error of two-dimensional optical flow algorithms applied to images of meteorological satellites as a feature for machine …

ACM Reference Format: Christian Schön, Jens Dittrich, and Richard Müller. 2019. The Error is the Feature: How to Forecast Lightning using a Model Prediction Error: [Applied Data Science Track, Category Evidential]. In Proceedings of 25th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’19). ACM, New York, NY, USA, 10 pages.

1 INTRODUCTION: Weather forecasting is a very complex and challenging task requiring extremely complex models running on large supercomputers. Besides delivering forecasts for variables such as the temperature, one key task for meteorological services is the detection and prediction of severe weather conditions.

6.4 The Warning Time for Cloud-to-Ground Lightning in Isolated, Ordinary Thunderstorms over Houston, Texas

6.4 THE WARNING TIME FOR CLOUD-TO-GROUND LIGHTNING IN ISOLATED, ORDINARY THUNDERSTORMS OVER HOUSTON, TEXAS. Nathan C. Clements and Richard E. Orville, Texas A&M University, College Station, Texas

1. INTRODUCTION

Lightning is a natural but destructive phenomenon that affects various locations on the earth’s surface every year. Uman (1968) defines lightning as a self-propagating atmospheric electrical discharge that results from the accumulation of positive and negative space charge, typically occurring within convective clouds. This electrical discharge can occur in two basic ways: cloud flashes and ground flashes (MacGorman and Rust 1998, p. 83). The latter affects human activity, property and life. Curran et al. (2000) find that lightning is ranked second behind flash and river flooding as causing the most deaths from any weather-related event in the United States. From 1959 to 1994, there was an average of 87 deaths per year from lightning. Curran et al. also rank the state of Texas as third in the number of fatalities from 1959 to 1994, behind Florida at number …

… shear and buoyancy, with small magnitudes of vertical wind shear likely to produce ordinary, convective storms. Weisman and Klemp (1986) describe the short-lived single cell storm as the most basic convective storm. Therefore, this ordinary convective storm type will be the focus of this study.

1.2 THUNDERSTORM CHARGE STRUCTURE

The primary source of lightning is electric charge separated in a cloud type known as a cumulonimbus (Cb) (Uman 1987). Interactions between different types of hydrometeors within a cloud are thought to carry or transfer charges, thus creating net charge regions throughout a thunderstorm. Charge separation can either come about by inductive mechanisms (i.e. requires an electric field to induce charge on the surface of the hydrometeor) or non-inductive mechanisms (i.e. …

Climatic Information of Western Sahel (1535–1793 AD) in Original Documentary Sources

Clim. Past Discuss., 10, 3877–3900, 2014. www.clim-past-discuss.net/10/3877/2014/ doi:10.5194/cpd-10-3877-2014. © Author(s) 2014. CC Attribution 3.0 License. This discussion paper is/has been under review for the journal Climate of the Past (CP). Please refer to the corresponding final paper in CP if available.

Climatic information of Western Sahel (1535–1793 AD) in original documentary sources. V. Millán and F. S. Rodrigo, Department of Applied Physics, University of Almería, Carretera de San Urbano, s/n, 04120, Almería, Spain. Received: 11 September 2014 – Accepted: 12 September 2014 – Published: 26 September 2014. Correspondence to: F. S. Rodrigo ([email protected]). Published by Copernicus Publications on behalf of the European Geosciences Union.

Abstract: The Sahel is the semi-arid transition zone between arid Sahara and humid tropical Africa, extending approximately 10–20° N from Mauritania in the West to Sudan in the East. The African continent, one of the most vulnerable regions to climate change, is subject to frequent droughts and famine. One challenge in climate research is to isolate those aspects of climate variability that are natural from those that are related to human influences.

Datasheet: Thunderstorm Local Lightning Sensor TSS928

Thunderstorm Local Lightning Sensor TSS928™

Vaisala TSS928™ is a local-area lightning detection sensor that can be integrated with automated surface weather observations.

Superior Performance in Local-area Lightning Tracking

Lightning-sensitive operations rely on Vaisala TSS928 sensors to provide critical local lightning information, both for meteorological applications as well as threat data, to facilitate advance warnings, initiate safety procedures, and isolate equipment with full confidence. The patented lightning algorithms of TSS928 provide the most precise ranging of any stand-alone lightning sensor available in the world today. The optical coincident requirement eliminates reporting of non-lightning events. The Vaisala Automated Lightning Alert and Risk Management (ALARM) system software is used to visualize TSS928 sensor data.

TSS928 detects:

- Optical, magnetic, and electrostatic pulses from lightning events with zero false alarms
- Cloud and cloud-to-ground lightning within 30 nautical miles (56 km)
- Cloud-to-ground lightning classified into three range intervals: 0 … 5 nmi (0 … 9 km), 5 … 10 nmi (9 … 19 km), 10 … 30 nmi (19 … 56 km)
- Cloud-to-ground lightning classified into directions: N, NE, E, SE, S, SW, W, and NW

Vaisala TSS928™ accurately reports the range and direction of cloud-to-ground lightning and provides cloud lightning counts. TSS928 can be used to integrate lightning reports with automated weather observation programs such as METAR.

Technical Data: Measurement Performance

- Detection range: 30 nmi (56 km) radius from sensor location
- Range resolution: Three range groups: 0 … 5 nmi (0 … 9 km), 5 … 10 nmi (9 … 19 km), …

Support Services

Vaisala TSS928™ is fully supported by our Customer Support Center, Technical Service Group, and Field Service Engineering Team. Maintain optimal performance by purchasing a service agreement customized to your unique system requirements.
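The range intervals and eight compass directions listed in the datasheet can be illustrated with a small binning routine. This is a hedged Python sketch and not Vaisala's algorithm or API: the function names, the handling of values that fall exactly on an interval boundary, and the 45-degree sector layout centered on each compass point are all assumptions.

```python
def range_interval(distance_nmi):
    """Map a distance in nautical miles to one of the three datasheet intervals.

    Boundary handling (5 and 10 nmi fall in the nearer interval) is an
    assumption; the datasheet does not say which side is inclusive.
    Returns None beyond the stated 30 nmi detection range.
    """
    if distance_nmi < 0:
        raise ValueError("distance must be non-negative")
    if distance_nmi <= 5:
        return "0-5 nmi"
    if distance_nmi <= 10:
        return "5-10 nmi"
    if distance_nmi <= 30:
        return "10-30 nmi"
    return None


OCTANTS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def direction_octant(bearing_deg):
    """Map a bearing in degrees (clockwise from north) to an eight-point label.

    Assumes each label covers a 45-degree sector centered on its compass point.
    """
    shifted = (bearing_deg % 360.0) + 22.5
    return OCTANTS[int(shifted // 45.0) % 8]


if __name__ == "__main__":
    print(range_interval(7.5), direction_octant(225.0))
```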

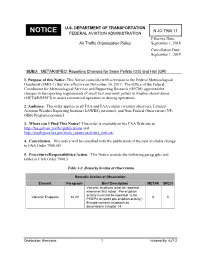

METAR/SPECI Reporting Changes for Snow Pellets (GS) and Hail (GR)

U.S. DEPARTMENT OF TRANSPORTATION, FEDERAL AVIATION ADMINISTRATION, Air Traffic Organization Policy

NOTICE N JO 7900.11. Effective Date: September 1, 2018. Cancellation Date: September 1, 2019.

SUBJ: METAR/SPECI Reporting Changes for Snow Pellets (GS) and Hail (GR)

1. Purpose of this Notice. This Notice coincides with a revision to the Federal Meteorological Handbook (FMH-1) that was effective on November 30, 2017. The Office of the Federal Coordinator for Meteorological Services and Supporting Research (OFCM) approved the changes to the reporting requirements of small hail and snow pellets in weather observations (METAR/SPECI) to assist commercial operators in deicing operations.
2. Audience. This order applies to all FAA and FAA-contract weather observers, Limited Aviation Weather Reporting Stations (LAWRS) personnel, and Non-Federal Observation (NF-OBS) Program personnel.
3. Where can I Find This Notice? This order is available on the FAA Web site at http://faa.gov/air_traffic/publications and http://employees.faa.gov/tools_resources/orders_notices/.
4. Cancellation. This notice will be cancelled with the publication of the next available change to FAA Order 7900.5D.
5. Procedures/Responsibilities/Action. This Notice amends the following paragraphs and tables in FAA Order 7900.5.

Table 3-2: Remarks Section of Observation

| Element | Paragraph | Brief Description | METAR | SPECI |
| --- | --- | --- | --- | --- |
| Volcanic Eruptions | 14.20 | Volcanic eruptions must be reported whenever first noted. Pre-eruption activity must not be reported. (Use PIREPs to report pre-eruption activity.) Encode volcanic eruptions as described in Chapter 14. | X | X |
| | | Whenever tornadoes, funnel clouds, or waterspouts begin, are in progress, end, or disappear from sight, the event should be described directly after the "RMK" element. | | |

Distribution: Electronic. Initiated By: AJT-2.

ESSENTIALS OF METEOROLOGY (7th ed.) GLOSSARY

ESSENTIALS OF METEOROLOGY (7th ed.) GLOSSARY

Chapter 1

- Aerosols: Tiny suspended solid particles (dust, smoke, etc.) or liquid droplets that enter the atmosphere from either natural or human (anthropogenic) sources, such as the burning of fossil fuels. Sulfur-containing fossil fuels, such as coal, produce sulfate aerosols.
- Air density: The ratio of the mass of a substance to the volume occupied by it. Air density is usually expressed as g/cm³ or kg/m³. Also see Density.
- Air pressure (atmospheric pressure): The pressure exerted by the mass of air above a given point, usually expressed in millibars (mb), inches of mercury (Hg) or in hectopascals (hPa).
- Atmosphere: The envelope of gases that surround a planet and are held to it by the planet's gravitational attraction. The earth's atmosphere is mainly nitrogen and oxygen.
- Carbon dioxide (CO2): A colorless, odorless gas whose concentration is about 0.039 percent (390 ppm) in a volume of air near sea level. It is a selective absorber of infrared radiation and, consequently, it is important in the earth's atmospheric greenhouse effect. Solid CO2 is called dry ice.
- Climate: The accumulation of daily and seasonal weather events over a long period of time.
- Front: The transition zone between two distinct air masses.
- Hurricane: A tropical cyclone having winds in excess of 64 knots (74 mi/hr).
- Ionosphere: An electrified region of the upper atmosphere where fairly large concentrations of ions and free electrons exist.
- Lapse rate: The rate at which an atmospheric variable (usually temperature) decreases with height. (See Environmental lapse rate.)
- Mesosphere: The atmospheric layer between the stratosphere and the thermosphere.

Severe Weather Forecasting Tip Sheet: WFO Louisville

Severe Weather Forecasting Tip Sheet: WFO Louisville

Vertical Wind Shear & SRH

- 0-6 km bulk shear > 40 kts – supercells
- 0-6 km bulk shear 20-35 kts – organized multicells
- 0-6 km bulk shear < 10-20 kts – disorganized multicells
- 0-8 km bulk shear > 52 kts – long-lived supercells
- 0-3 km bulk shear > 30-40 kts – bowing thunderstorms

SRH

- 0-3 km SRH > 150 m² s⁻² = updraft rotation becomes more likely
- 0-3 km SRH > 300-400 m² s⁻² = rotating updrafts and supercell development likely

BOTH

- 0-6 km shear < 35 kts with 0-3 km SRH > 150 m² s⁻² – brief rotation but not persistent

Tornadic Supercells

- Unstable warm sector air mass, with well-defined warm and cold fronts (i.e., strong extratropical cyclone)
- Strong mid and upper-level jet observed to dive southward into upper-level shortwave trough, then rapidly exit the trough and cross into the warm sector air mass.
- Pronounced upper-level divergence occurs on the nose and exit region of the jet.
- A low-level jet forms in response to upper-level jet, which increases northward flux of moisture.
- Intense northwest-southwest upper-level flow/strong southerly low-level flow creates a wind profile which is very conducive for supercell development. Storms often exhibit rapid development along cold front, dryline, or pre-frontal convergence axis, and then move east into warm sector.
- Most intense tornadic supercells often occur in close proximity to where upper-level jet intersects low-level jet, although tornadic supercells can occur north and south of upper jet as well.
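The 0-6 km shear and 0-3 km SRH rules of thumb above can be expressed as a simple lookup. The Python sketch below is an illustration only, not an operational tool: the function name is hypothetical, ranges such as "< 10-20 kts" and "> 300-400 m² s⁻²" are collapsed to single cut-offs (20 kts and 300 m² s⁻²) as an assumption, and shear values between 35 and 40 kts, which the tip sheet does not cover, return no shear hint.

```python
def storm_mode_hints(shear_0_6_kts, srh_0_3=None):
    """Return the tip-sheet phrases that match the given environment.

    shear_0_6_kts: 0-6 km bulk shear in knots.
    srh_0_3: optional 0-3 km storm-relative helicity in m^2 s^-2.
    Cut-offs of 20 kts and 300 m^2 s^-2 are assumed simplifications of the
    ranges printed on the tip sheet.
    """
    hints = []
    if shear_0_6_kts > 40:
        hints.append("supercells")
    elif 20 <= shear_0_6_kts <= 35:
        hints.append("organized multicells")
    elif shear_0_6_kts < 20:
        hints.append("disorganized multicells")
    # 35-40 kts is not covered by the tip sheet, so no shear hint is added.

    if srh_0_3 is not None:
        if srh_0_3 > 300:
            hints.append("rotating updrafts and supercell development likely")
        elif srh_0_3 > 150:
            hints.append("updraft rotation becomes more likely")
        if shear_0_6_kts < 35 and srh_0_3 > 150:
            hints.append("brief rotation but not persistent")
    return hints


if __name__ == "__main__":
    print(storm_mode_hints(25, 200))
```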

Severe Thunderstorm Safety

Severe Thunderstorm Safety

Thunderstorms are dangerous because they include lightning, high winds, and heavy rain that can cause flash floods. Remember, it is a severe thunderstorm that produces a tornado. By definition, a thunderstorm is a rain shower that contains lightning. A typical storm is usually 15 miles in diameter, lasting an average of 30 to 60 minutes. Every thunderstorm produces lightning, which usually kills more people each year than tornadoes. A severe thunderstorm is a thunderstorm that contains large hail, 1 inch in diameter or larger, and/or damaging straight-line winds of 58 mph or greater (50 knots). Rain-cooled air descending from a severe thunderstorm can move at speeds in excess of 100 mph. These are called “straight-line” wind gusts. There were 12 injuries from thunderstorm wind gusts in Missouri in 2014, with only 5 injuries from tornadoes. A downburst is a sudden out-rush of this wind. Strong downbursts can produce extensive damage, which is often similar to damage produced by a small tornado. A downburst can easily overturn a mobile home, tear roofs off houses and topple trees. Severe thunderstorms can produce hail the size of a quarter (1 inch) or larger. Quarter-size hail can cause significant damage to cars and roofs, and can break windows. Softball-size hail can fall at speeds faster than 100 mph.

Thunderstorm Safety

Avoid traveling in a severe thunderstorm – either pull over or delay your travel plans. When a severe thunderstorm threatens, follow the same safety rules you do if a tornado threatens. Go to a basement if available.
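The severe-thunderstorm definition quoted above (hail of 1 inch or larger and/or straight-line winds of 58 mph or greater) amounts to a two-threshold check. A minimal Python sketch follows; the function and constant names are hypothetical.

```python
HAIL_SEVERE_IN = 1.0    # hail diameter threshold in inches, from the text above
WIND_SEVERE_MPH = 58.0  # straight-line wind threshold in mph, from the text above


def is_severe(hail_in=0.0, wind_mph=0.0):
    """True if either the hail or the wind criterion in the text is met."""
    return hail_in >= HAIL_SEVERE_IN or wind_mph >= WIND_SEVERE_MPH


if __name__ == "__main__":
    print(is_severe(hail_in=0.75, wind_mph=60.0))
```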

The 21st Century’s Airport Weather Reporting System

3.1 THE 21st CENTURY’S AIRPORT WEATHER REPORTING SYSTEM. Patrick L. Kelly, Gary L. Stringer, Eric Tseo, DeLyle M. Ellefson, Michael Lydon and Diane Buckshnis, Coastal Environmental Systems, Seattle, Washington

1. INTRODUCTION

Coastal Environmental Systems has built and is delivering a complete meteorological and RVR (combined) aviation weather monitoring system. The system is suitable for CAT I, II or III airports (or smaller) and measures and reports RVR in addition to all the meteorological parameters. The overall system is very flexible and expandable for a wide variety of aviation applications. The following paper describes this system and some of its abilities.

2. SYSTEM DESCRIPTION

The system has multiple purposes:

- Display the required weather sensor data in a GUI
- Allow multiple displays to connect through a variety of means
- Allow users to have different levels of access
- Provide a Ground To Air voice output
- Provide a dial in voice output
- Feed data to other systems (where applicable)
- Provide remote maintenance monitoring via the Internet

… sensor readings as well as regular hardware self-tests, such as checking the ability to communicate with a serial sensor. It calculates a variety of other parameters including cloud height, obscuration, dew point, altimeter setting, RVR and other relevant data.

The following is a table of the sensors used and the manufacturers:

| Sensor | Mfg. |
| --- | --- |
| Wind speed and Direction | RM Young & Taylor |
| Air Temperature | YSI |
| Relative Humidity | Rotronics |
| Pressure/Altimeter | Druck |
| Rainfall | Met-One |
| Precipitation ID | OSI |
| Ceilometer | Eliasson |
| Visibility | Belfort |
| Ambient Light | Belfort |
| Freezing Rain | Goodrich |
| Lightning Detection | GAI/Vaisala |
| Aspirated Temperature Shield | RM Young |

Table 1: Sensors and Manufacturers

The field station is polled by the TDAU and can be done through direct cable, short haul modem, fiber optic, UHF radio or a combination of these.

Thunderstorm Predictors and Their Forecast Skill for the Netherlands

Atmospheric Research 67–68 (2003) 273–299 www.elsevier.com/locate/atmos Thunderstorm predictors and their forecast skill for the Netherlands Alwin J. Haklander, Aarnout Van Delden* Institute for Marine and Atmospheric Sciences, Utrecht University, Princetonplein 5, 3584 CC Utrecht, The Netherlands Accepted 28 March 2003 Abstract Thirty-two different thunderstorm predictors, derived from rawinsonde observations, have been evaluated specifically for the Netherlands. For each of the 32 thunderstorm predictors, forecast skill as a function of the chosen threshold was determined, based on at least 10280 six-hourly rawinsonde observations at De Bilt. Thunderstorm activity was monitored by the Arrival Time Difference (ATD) lightning detection and location system from the UK Met Office. Confidence was gained in the ATD data by comparing them with hourly surface observations (thunder heard) for 4015 six-hour time intervals and six different detection radii around De Bilt. As an aside, we found that a detection radius of 20 km (the distance up to which thunder can usually be heard) yielded an optimum in the correlation between the observation and the detection of lightning activity. The dichotomous predictand was chosen to be any detected lightning activity within 100 km from De Bilt during the 6 h following a rawinsonde observation. According to the comparison of ATD data with present weather data, 95.5% of the observed thunderstorms at De Bilt were also detected within 100 km. By using verification parameters such as the True Skill Statistic (TSS) and the Heidke Skill Score (Heidke), optimal thresholds and relative forecast skill for all thunderstorm predictors have been evaluated.
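The abstract names the True Skill Statistic and the Heidke Skill Score as verification parameters for a dichotomous (yes/no) predictand. The Python sketch below uses the standard 2×2 contingency-table definitions of both scores; it is not the authors' code, the function names are hypothetical, and the counts in the usage example are invented for illustration rather than taken from the paper.

```python
def true_skill_statistic(hits, false_alarms, misses, correct_negatives):
    """TSS = probability of detection minus probability of false detection."""
    pod = hits / (hits + misses)
    pofd = false_alarms / (false_alarms + correct_negatives)
    return pod - pofd


def heidke_skill_score(hits, false_alarms, misses, correct_negatives):
    """HSS = 2(ad - bc) / ((a + c)(c + d) + (a + b)(b + d)).

    a = hits, b = false alarms, c = misses, d = correct negatives.
    """
    a, b, c, d = hits, false_alarms, misses, correct_negatives
    numerator = 2.0 * (a * d - b * c)
    denominator = (a + c) * (c + d) + (a + b) * (b + d)
    return numerator / denominator


if __name__ == "__main__":
    # Invented counts for one predictor threshold, for illustration only.
    table = dict(hits=30, false_alarms=10, misses=20, correct_negatives=940)
    print(f"TSS = {true_skill_statistic(**table):.3f}")
    print(f"HSS = {heidke_skill_score(**table):.3f}")
```

Repeating this calculation over a range of candidate thresholds for a predictor and keeping the threshold with the highest score is one way to carry out the threshold optimization the abstract describes.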