Best Practice When Using Non-Alphabetic Characters in Orthographies: Helping Languages Succeed in the Modern World

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Jupyter Reference Guide

Nicholas Bowman, Sonja Johnson-Yu, Kylie Jue. Handout #15, CS 106AP, August 5, 2019. Jupyter Reference Guide.

This handout goes over the basics of Jupyter notebooks, including how to install Jupyter, launch a notebook, and run its cells. Jupyter notebooks are a common tool used across different disciplines for exploring and displaying data, and they also make great interactive explanatory tools.

Installing Jupyter

To install Jupyter, run the following command inside your Terminal (replace python3 with py if you’re using a Windows device):

$ python3 -m pip install jupyter

Launching a notebook

A Jupyter notebook is a file that ends in the extension .ipynb, which stands for “interactive Python notebook.” To launch a Python notebook after you’ve installed Jupyter, you should first navigate to the directory where your notebook is located (you’ll be there by default if you’re using the PyCharm terminal inside a project). Then run the following command inside your Terminal (if this doesn’t work, see the “Troubleshooting” section below):

$ jupyter notebook

This should open a window in your browser (Chrome, Firefox, Safari, Edge, etc.) that looks like Figure 1. Jupyter shows the files inside the current directory and allows you to click on any Python notebook files within that folder.

Figure 1: After running the jupyter notebook command, you should see a window that lists the Python notebooks inside the current directory from which you ran the command. The picture above shows the Lecture 25 directory.

To launch a particular notebook, click on its file name. This should open a new tab with the notebook.

Unicode Nearly Plain-Text Encoding of Mathematics Murray Sargent III Office Authoring Services, Microsoft Corporation 4-Apr-06

Unicode Nearly Plain-Text Encoding of Mathematics. Murray Sargent III, Office Authoring Services, Microsoft Corporation, 4-Apr-06. Contents: 1. Introduction; 2. Encoding Simple Math Expressions; 2.1 Fractions; 2.2 Subscripts and Superscripts; 2.3 Use of the Blank (Space) Character; 3. Encoding Other Math Expressions; 3.1 Delimiters; 3.2 Literal Operators; 3.3 Prescripts and Above/Below Scripts; 3.4 n-ary Operators; 3.5 Mathematical Functions; 3.6 Square Roots and Radicals; 3.7 Enclosures

6 X 10.5 Long Title.P65

Cambridge University Press 978-0-521-51840-6 - An Introduction to Classical Nahuatl, Michel Launey. Excerpt. PART ONE. PRELIMINARY LESSON: Phonetics and Writing. Since the time of the conquest, Nahuatl has been written by means of the Latin alphabet. There is, therefore, a long tradition to which it is preferable to conform for the most part. Nonetheless, for the following reasons, some words in this book are written in an orthography that differs from the traditional one. The orthography is, of course, “hispanicized.” To represent the phonetic elements of Nahuatl, the letters or combinations of letters that represent identical or similar sounds in Spanish are used. Hence, there is no problem with the sounds that exist in both languages, not to mention those that are lacking in Nahuatl (b, d, g, r etc.). On the other hand, those that exist in Nahuatl but not in Spanish are found in alternate spellings, or are even altogether ignored. In particular, this is the case with vowel length and (even worse) with the glottal stop (see Table 1.1), which are systematically marked only by two grammarians, Horacio Carochi and Aldama y Guevara, and in a text named Bancroft Dialogues. This defective character is heightened by a certain fluctuation because the orthography of Nahuatl has never really been fixed. Hence, certain texts represent the vowel /i/ indifferently with i or j, others always represent it with i but extend this spelling to consonantal /y/, that is, to a different phoneme.

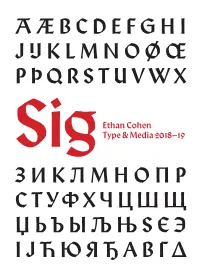

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau. Ethan Cohen, Type & Media 2018–19. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague). INTRODUCTION. “I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” (Rudolf Koch) Project Overview. Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

Package Mathfont V. 1.6 User Guide Conrad Kosowsky December 2019

Package mathfont v. 1.6 User Guide. Conrad Kosowsky, December 2019. For easy, off-the-shelf use, type the following in your document preamble and compile using XeLaTeX or LuaLaTeX: \usepackage[⟨font name⟩]{mathfont} Abstract: The mathfont package provides a flexible interface for changing the font of math-mode characters. The package allows the user to specify a default unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both XeLaTeX and LuaLaTeX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode. Handling fonts in TeX and LaTeX is a notoriously difficult task. Donald Knuth originally designed TeX to support fonts created with Metafont, and while subsequent versions of TeX extended this functionality to postscript fonts, Plain TeX's font-loading capabilities remain limited. Many, if not most, LaTeX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TeX's \font primitive can be esoteric and confusing. LaTeX2ε's New Font Selection System (nfss) implemented a straightforward syntax for loading and managing fonts, but LaTeX macros overlaying a TeX core face the same versatility issues as Plain TeX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TeX or through the nfss, defining math symbols can be unintuitive for users who are unfamiliar with TeX's \mathcode primitive.

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive, University of Zurich, Main Library, Strickhofstrasse 39, CH-8057 Zurich, www.zora.uzh.ch. Year: 2017. The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. Moran, Steven; Cysouw, Michael. DOI: https://doi.org/10.5281/zenodo.290662. Posted at the Zurich Open Repository and Archive, University of Zurich. ZORA URL: https://doi.org/10.5167/uzh-135400. Monograph. The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. DOI: https://doi.org/10.5281/zenodo.290662. Preface: This text is meant as a practical guide for linguists, and programmers, who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies.

Saltillo Street Names Index: Autumn Gleen Dr

SALTILLO STREET NAMES INDEX (street name followed by map grid reference): AUTUMN GLEEN DR. E3; BAUHAUS DR. E3; BENT GRASS CIR. H2; BERCH TREE DR. F4; BIG HARPE TRAIL G2; BIRMINGHAM RIDGE RD. F1, F2, G3; BOLIVAR CIR. G3; BOSTIC AVE. E4; BRIDLE CV. E3; BRISCOE ST. E4; BROCK DR. F3; CARTWRIGHT AVE. E4; CHAMPIONS CV. H2; CHERRY HILL DR. C4; CHESTNUT LN. C4; CHICKASAW CIR. G3; CHICKASAW TRAIL G3; CHRISTY WAY C4; CO 653-B H2, H3; CO 653-D G3; CO 2012 E2, E3; CO 2012 F3; CO 2720 A1; CO 2720 B1; COBBS CV.

OLD HWY. 51 E3; OLD NATCHEZ TRACE TRAIL G3; OLD SALTILLO RD. E4, F4, F5, G5, H4, H5; OLD SOUTH PLANTATION DR. E2; OLIVIA CV. D4; PARKRIDGE DR, F4; PATTERSON CIR. E5; PECAN DR. D5; PETE AVE. F3; PEYTON LN. D4; PINECREST ST. E4; PINEHURST AVE. E4; PINEWOOD ST. E4; POPULARWOOD LN. F4; PRINSTON DR. E3; PULLTIGHT RD. B4, C4; PYLE DR. E4; QUAIL CREEK RD. E3; RAILROAD AVE. E5; RD 189 F3; RD 251 D2; RD 521 C1, E2; RD 599 C1, C2, D2; RD 647 H3

Invisible-Punctuation.Pdf

In/visible Punctuation. John Lennard, University of the West Indies. Lennard, 121-138, Visible Language 45.1/2. © Visible Language, 2011, Rhode Island School of Design, Providence, Rhode Island 02903. ABSTRACT: The article offers two approaches to the question of 'invisible punctuation,' theoretical and critical. The first is a taxonomy of modes of punctuational invisibility, identifying denial, repression, habituation, error and absence. Each is briefly discussed and some relations with technologies of reading are considered. The second considers the paragraphing, or lack of it, in Sir Philip Sidney's Apology for Poetry: one of the two early printed editions and at least one of the two MSS are monoparagraphic, a feature always silently eliminated by editors as a supposed carelessness. It is argued that this is improbable

How the Past Affects the Future: the Story of the Apostrophe

HOW THE PAST AFFECTS THE FUTURE: THE STORY OF THE APOSTROPHE. Christina Cavella and Robin A. Kernodle. I. Introduction. The apostrophe, a punctuation mark which “floats above the line, symbolizing something missing in the text” (Battistella, 1999, p. 109), has been called “an unstable feature of written English” (Gasque, 1997, p. 203), “the step-child of English orthography” (Barfoot, 1991, p. 121), and “an entirely insecure orthographic squiggle” (Barfoot, 1991, p. 133). Surely the apostrophe intends no harm; why then the controversy and apparent emotionalism surrounding it? One major motivation for investigating the apostrophe is simply because it is so often misused. A portion of the usage problem can perhaps be attributed to the chasm dividing spoken and written language, as the apostrophe was originally intended to indicate missing letters, which may or may not have actually been enunciated. To understand what has been called “the aberrant apostrophe,” (Crystal, 1995, p. 203) and the uncertainty surrounding its usage, an examination of its history is essential, for it is this “long and confused” (Crystal, 1995, p. 203) history that is partially responsible for the modern-day misuses of the apostrophe. This paper will trace the history of the apostrophe, examining the purpose(s) for which the apostrophe has been utilized in the past as well as presenting its current use. An overview of contemporary rules of usage is then included, along with specific examples of apostrophe misuse and a recommendation on how to teach apostrophe usage to non-native speakers of English. Finally, an attempt is made to predict the apostrophe’s future.

THE MEANING of “EQUALS” Charles Darr Take a Look at the Following Mathematics Problem

PROFESSIONAL DEVELOPMENT. THE MEANING OF “EQUALS”. Charles Darr. Take a look at the following mathematics problem. Write the number in the square below that you think best completes the equation. 4 + 5 = □ + 3. How difficult do you consider this equation to be? At what age do you think a student should be able to complete it correctly? I recently presented this problem to over 300 Year 7 and 8 students at a large intermediate school. More than half answered it incorrectly. Moreover, the vast majority of the students who wrote something other than 6 were inclined to write the missing number as “9”. This result is not just an intermediate school phenomenon, however. Smaller numbers of students in other Years were also tested. In Years 4, 5 and 6, even larger percentages wrote incorrect responses, while at Years 9 and 10, more than 20 percent of the students were still not writing a

[...]

has been explored by mathematics education researchers internationally (Falkner, Levi and Carpenter, 1999; Kieran, 1981, 1992; MacGregor and Stacey, 1997; Saenz-Ludlow and Walgamuth, 1998; Stacey and MacGregor, 1999; Wright, 1999). However, the fact that so many students appear to have this misconception highlights some important considerations that we, as mathematics educators, can learn from and begin to address. In what follows I look at two of these considerations. I then go on to discuss some strategies we can employ in the classroom to help our students gain a richer sense of what the equals sign represents. The meaning of “equals”. Firstly, it is worth considering what the equals sign means in a mathematical sense, and why so many children seem to struggle to develop this understanding. From a mathematical point of view, the equals sign is not a command to do something.

It's the Symbol You Put Before the Answer

It’s the symbol you put before the answer. Laura Swithinbank discovers that pupils often ‘see’ things differently. “It’s the symbol you put before the answer.” Have you ever heard a pupil say this in response to being asked what the equals sign is? Having heard this on more than one occasion in my school, I carried out a brief investigation with 161 pupils, Year 3 to Year 6, in order to find out more about the way pupils interpret the equals sign. The response above was common; however, what really interested me was the variety of other answers I received. Essentially, it was clear that pupils did not have a consistent understanding of the equals sign across the school. This finding spurred me on to consider how I presented the equals sign to my pupils, and to deliberate on the following questions: • How can we define the equals sign? • What are the key issues and misconceptions with regard to the equals sign in primary mathematics?

[...]

3. A focus on both representing and solving a problem rather than on merely solving it; 4. A focus on both numbers and letters, rather than on numbers alone (…) 5. A refocusing of the meaning of the equals sign. (Kieran, 2004:140-141) Whilst reflecting on the Kieran summary, some elements immediately struck a chord with me with regard to my own school context, particularly the concept of ‘refocusing the meaning of the equals sign’. The investigation I carried out clearly echoed points raised by Kieran. For example, when asked to explain the meaning of the ‘=’ sign, pupils at my school offered a wide range of answers including: ‘the symbol you put before the answer’; ‘the total of the number’; ‘the amount’; ‘altogether’; ‘makes’; and ‘you reveal the answer after you’ve done the maths question’.

Trademarks 10 Things You Should Know to Protect Your Product and Business Names

Trademarks: 10 things you should know to protect your product and business names. 1. What is a trademark? A trademark is a brand name for a product. It can be a word, phrase, logo, design, or virtually anything that is used to identify the source of the product and distinguish it from competitors’ products. More than one trademark may be used in connection with a product; for example, COCA-COLA® and DIET COKE® are both trademarks for beverages. A trademark represents the goodwill and reputation of a product and its source. Its owner has the right to prevent others from trading on that goodwill by using the same or a similar trademark on the same or similar products in a way that is likely to cause confusion as to the source, origin, or sponsorship of the products. A service mark is like a trademark, except it is used to identify and distinguish services rather than products. For example, the “golden arches” mark shown below is a service mark for restaurant services. The terms “trademark” and “mark” are often used interchangeably to refer to either a trademark or service mark. 2. How should a mark be used? Trademarks must be used properly to maintain their value. Marks should be used as adjectives, but not as nouns or verbs. For example, when referring to utilizing the FACEBOOK® website, do not say that you “Facebooked” or that you were “Facebooking.” To prevent loss of trademark or service mark rights, the generic name for the product should appear after the mark, and the mark should appear visually different from the surrounding text.