A Case for Computer Architecture Performance Metrics That Reflect

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

2018 Annual Report on Form 10-K

2018 ANNUAL REPORT ON FORM 10-K, MARCH 2019. DEAR SHAREHOLDERS: From the industry’s first 1GHz CPU to the world’s first GPU delivering a teraflop of computing power, AMD has always stood for pushing the boundaries of what is possible. A few years ago, we made several big bets to accelerate our pace of innovation, strengthen our execution, and enable AMD to deliver a leadership portfolio of computing and graphics processors capable of increasing our share of the $75 billion high-performance computing market. In 2018, we saw those bets begin to pay off as we delivered our second straight year of greater than 20% annual revenue growth and significantly improved our gross margin and profitability from the previous year. [Figure: four bar charts for 2016, 2017 and 2018. Revenue ($ billions): $4.3B, $5.3B, $6.5B (added $2.2B in revenue over the last 2 years). Gross margin %: 23%, 34%, 38% (significantly improved gross margin over last 2 years based on new product portfolio). R&D investment ($ billions): $1.01B, $1.20B, $1.43B (increased R&D by more than 40% over the last 2 years). Expense/revenue %: 34%, 33%, 31% (significant improvement in OPEX leverage).] Our newest Ryzen™, EPYC™ and datacenter GPU products contributed more than $1.2 billion of revenue in 2018 and helped us gain share across our priority markets. In 2018, we added 3.9% points of desktop processor unit share, 5.3% points of notebook processor unit share and met our goal of exiting the year with mid-single digit server processor market share.

J7F3 Mini-ITX Motherboard Series

Mainboard Diagram: J7F3 Mini-ITX Motherboard Series

- SiS 741CX Northbridge + SiS 964 Southbridge Chipsets
- Support Socket-462 AMD Geode NX processor
- Support Front Side Bus 133MHz
- Single Channel DDR2 400 Memory DIMM
- Support 2 Serial ATA Devices with RAID 0, 1
- Support 2xAD Connector With Expansion Daughter-boards
- Ethernet LAN Supported
- AC’97 6 Channel Audio CODEC
- VIA VT6307S IEEE1394a Controller for J7F3E
- 17 x 17CM Mini-ITX Form Factor

Features and Benefits

Support Socket 462 AMD Geode™ NX Processor: The AMD Geode™ NX processor family gives product designers a wide range of options in low-power, high-performance processors. Based on Mobile AMD Athlon™ processor technology, AMD NX processors deliver superior computing performance for applications including thin-client, point-of-sale terminals, kiosks, high-end printers, and home media systems. AMD Geode Solutions have received new model numbers to better reflect total performance beyond just megahertz. This presentation of attributes gives designers greater understanding of the capabilities of AMD Geode Solutions.

SiS 741CX Northbridge Chipset and SiS964 Southbridge Chipset: The SiS741CX chipset can be combined with three different AMD Geode NX processors, including the AMD Geode™ NX 1250@6W processor*, AMD Geode™ NX 1500@6W processor** and AMD Geode™ NX 1750@14W processor***, enabling development of a wider variety of products for different market segments. The SiS741CX chipset supports the AMD Geode NX processor family, DDR266 front side bus, as well as high-speed DDR333 DRAM. Furthermore, the SiS741CX chipset incorporates SiS's revolutionary HyperStreaming™ Technology, which provides multiple divided pipelines for data, allows data to be sent concurrently, and separates data for easier memory retrieval, resulting in a remarkable reduction in latency versus traditional chipsets.

IEI's AMD Embedded Solution

IEI’S AMD EMBEDDED SOLUTION: LX/GX Embedded Series

* Issue Date: 1st June, 2006
* Design and specifications are subject to change without prior notice.

Headquarters: IEI Technology Corp. TEL: +886-2-86916798, FAX: +886-2-66160028, [email protected], www.ieiworld.com. No. 29, Jhongsing Rd., Sijhih City, Taipei County, 221, Taiwan.
America-United States: IEI Technology USA Corp. TEL: +1-562-690-6677, FAX: +1-562-690-0898, [email protected], www.usa.ieiworld.com. 515 N. Puente St., Brea, CA 92821.
China: Armorlink SH Corp. TEL: +86-21-54429000, FAX: +86-21-54429100, [email protected], www.ieiworld.com.cn. No.515, Shenfu Road, Minhang District, Shanghai, China.

Vision of AMD - IEI Alliance: When IEI meets AMD... EXPERT OF AMD’S FULL-LINE EMBEDDED SOLUTIONS. [Diagram labels: Single Board Computer, Connectivity, Design Services, Manufacturing Services, Customer-Centric, Communication, Partnership; Geode™ LX Family (LX800), Geode™ GX Family (GX466).]

ABOUT IEI: A Leading Industrial Computing Solution Provider. IEI Technology Corp. is a leading industrial computing platform provider. IEI follows the requirements of the RoHS Directive on a worldwide basis so that customers can start to work for their long-term projects. IEI supplies hundreds of industrial computer boards, systems and peripherals in thousands of customer applications and supports OEM/ODM service to worldwide.

ABOUT AMD: A Leading Global Provider of Innovative Microprocessor Solutions. Advanced Micro Devices (AMD) is a leading global provider of innovative microprocessor solutions for computing, communications and consumer electronics markets. Founded in 1969, AMD is dedicated to delivering superior computing solutions based on customer needs that empower users

AMD Geode™ NX Processors BIOS Considerations Application Note

AMD Geode™ NX Processors BIOS Considerations Application Note. PID: 32483, Rev: A, Issue Date: October 2004. © 2004 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice. Contacts: www.amd.com. Trademarks: AMD, the AMD Arrow logo, AMD Athlon, AMD PowerNow!, and combinations thereof, and Geode are trademarks of Advanced Micro Devices, Inc. Microsoft and Windows are registered trademarks of Microsoft Corporation.

AMD in Embedded: Proven Leadership and Solutions

AMD in Embedded: Proven Leadership and Solutions. A long history of high-performance low-power solutions for embedded applications. For over two decades AMD has been a leader in the embedded market: in the early 1990’s with the introduction of the Am386 and Am486 and their adoption in embedded designs, and followed in 1995 with the introduction of the Am5x86 processor. The Am5x86 processor was one of the fastest and most universally-compatible upgrade paths for users of 486 systems when it was introduced. AMD continued to expand their E86 (Embedded x86) product family in the late 90’s with the release of the Élan™SC520 microcontroller for data communications, telecommunications, and information appliance markets. The ÉlanSC520 microcontroller extended the options available to embedded systems designers by providing fifth-generation x86 performance and was designed to run both 16-bit and 32-bit software. The AMD embedded group grew significantly in early 2000 with the acquisition of Alchemy Semiconductor for its Alchemy line of MIPS processors for the hand-held and portable media player markets. In order to augment its existing line of embedded x86 processor products, AMD also purchased the AMD Geode™ business in August 2003 which was originally part of National Semiconductor. During the second quarter of 2004, AMD launched the new low-power AMD Geode™ NX processors which were based on the AMD-K7™ Thoroughbred architecture with speeds up to 1.4 GHz. These AMD Geode NX processors offered a high performance option in the AMD Geode product line that was still sufficiently low power to be designed into fan-less applications.

AMD Geode™ GX Processor/CS5535 Companion Device GeodeROM Porting Guide

AMD Geode™ GX Processor/CS5535 Companion Device GeodeROM Porting Guide. April 2006. Publication ID: 32430C. © 2006 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice. Trademarks: AMD, the AMD Arrow logo, and combinations thereof, and Geode, GeodeLink, Virtual System Architecture, and XpressGRAPHICS are trademarks of Advanced Micro Devices, Inc.

The Next Major Advance in Chip-Level Design Productivity

The Next Major Advance in Chip-Level Design Productivity. A. Richard Newton, University of California, Berkeley. Synopsys EDA Interoperability Developers’ Forum, Santa Clara, CA, October 21st, 2004. [email protected]

Fundamental Drivers of Future Chip Designs:
(1) Silicon Scaling Drives Chip Capacity
(2) Rising Design Cost Drives Programmability
(3) Growing Complexity Requires Software-Based Solutions
(4) Increased System Concurrency Drives Multiple Processor Architectures

[Figure: SoC Becomes A “Sea Of Processors”. Stage labels as extracted: SoC, Programmable, Software-Centric, Sea-of-Processors; sub-labels: Platforms, Design, Design. Source: Chris Rowen, Tensilica]

Key Points:
- The future mainstream building-block of electronic system-level design will present a (configurable) clocked synchronous Von Neumann programmer’s model to the system-level application developer (ASIP or TSP)
- The majority of large silicon systems will consist of many such processors, connected in an asynchronous network
- These processors may be integrated on a single chip (CMP) and/or as a (possibly very large) collection of chips
- These conclusions lead to a number of critical design-technology research challenges and new business opportunities

ADVANCED MICRO DEVICES, INC. (Exact Name of Registrant As Specified in Its Charter)

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, Washington, D.C. 20549. FORM 8-K CURRENT REPORT Pursuant to Section 13 or 15(d) of the Securities Exchange Act of 1934. Date of Report (Date of earliest event reported): July 20, 2006. ADVANCED MICRO DEVICES, INC. (Exact name of registrant as specified in its charter). State of Incorporation: Delaware. Commission File Number: 001-07882. IRS Employer Identification Number: 94-1692300. Address of principal executive offices: One AMD Place, P.O. Box 3453, Sunnyvale, California 94088-3453. Registrant’s telephone number, including area code: (408) 749-4000. Former Name or Former Address, if Changed Since Last Report: N/A. Check the appropriate box below if the Form 8-K filing is intended to simultaneously satisfy the filing obligation of the registrant under any of the following provisions: ☐ Written communications pursuant to Rule 425 under the Securities Act (17 CFR 230.425) ☐ Soliciting material pursuant to Rule 14a-12 under the Exchange Act (17 CFR 240.14a-12) ☐ Pre-commencement communications pursuant to Rule 14d-2(b) under the Exchange Act (17 CFR 240.14d-2(b)) ☐ Pre-commencement communications pursuant to Rule 13e-4(c) under the Exchange Act (17 CFR 240.13e-4(c)). Item 2.02. Results of Operations and Financial Condition. Item 7.01. Regulation FD Disclosure. The information in this Report, including the Exhibit 99.1 attached hereto, is furnished pursuant to Item 2.02 and Item 7.01 of this Form 8-K. Consequently, it is not deemed “filed” for the purposes of Section 18 of the Securities and Exchange Act of 1934, or otherwise subject to the liabilities of that section.

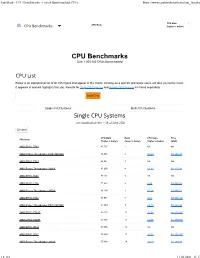

PassMark - CPU Benchmarks - List of Benchmarked CPUs

PassMark - CPU Benchmarks - List of Benchmarked CPUs. https://www.cpubenchmark.net/cpu_list.php

CPU Benchmarks: Over 1,000,000 CPUs Benchmarked. CPU List: Below is an alphabetical list of all CPU types that appear in the charts. Clicking on a specific processor name will take you to the chart it appears in and will highlight it for you. Results for Single CPU Systems and Multiple CPU Systems are listed separately.

Single CPU Systems. Last updated on the 11th of June 2021.

| CPU Name | CPU Mark (higher is better) | Rank (lower is better) | CPU Value (higher is better) | Price (USD) |
| --- | --- | --- | --- | --- |
| AMD EPYC 7763 | 87,767 | 1 | NA | NA |
| AMD Ryzen Threadripper PRO 3995WX | 86,096 | 2 | 15.69 | $5,486.99 |
| AMD EPYC 7713 | 85,887 | 3 | NA | NA |
| AMD Ryzen Threadripper 3990X | 81,290 | 4 | 13.30 | $6,110.99 |
| AMD EPYC 7643 | 77,101 | 5 | NA | NA |
| AMD EPYC 7702 | 71,686 | 6 | 8.43 | $8,499.00 |
| AMD Ryzen Threadripper 3970X | 64,139 | 7 | 26.28 | $2,440.51 |
| AMD EPYC 7742 | 64,071 | 8 | 8.01 | $7,995.94* |
| AMD Ryzen Threadripper PRO 3975WX | 61,259 | 9 | 22.70 | $2,698.99 |
| AMD EPYC 7702P | 60,273 | 10 | 12.96 | $4,650.00* |
| AMD EPYC 7443P | 58,896 | 11 | 44.05 | $1,337.00* |
| AMD EPYC 7R32 | 58,556 | 12 | NA | NA |
| AMD EPYC 7542 | 56,809 | 13 | 13.53 | $4,198.95* |
| AMD Ryzen Threadripper 3960X | 55,004 | 14 | 39.29 | $1,399.99 |

Powerful Software Development Capability for the AMD Geode™ NX Processors

AMD Geode™ NX DB1500 Development Board: Powerful Software Development Capability for the AMD Geode™ NX Processors. Bring High-Performance Embedded Applications to Market Faster.

Product Overview: The AMD Geode™ NX DB1500 development board is an integrated hardware and software system solution that leverages the power of the AMD Geode™ NX processors. The NX DB1500 development board is a complete solution that optimizes the design of high-performance, low power-consumption solutions for embedded applications. It is a comprehensive evaluation unit for customers designing with the NX processor family.

Turnkey Solution: The AMD Geode NX DB1500 development board is a turnkey solution that integrates a processor, memory, and peripherals together on a single board along with debugging assist features and software. The NX DB1500 development board underscores AMD’s

AMD Geode GX1 Processor Data Book

AMD Geode™ GX1 Processor Data Book. December 2003. Publication ID: Revision 5.0. © 2003 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice. Contacts: www.amd.com, [email protected]. Trademarks: AMD, the AMD Arrow logo, and combinations thereof, and Geode, Virtual System Architecture, XpressGRAPHICS, XpressAUDIO, and XpressRAM are trademarks of Advanced Micro Devices, Inc.

AMD Sempron 2800+ - SDA2800DUT3D (SDA2800BOX)

AMD Sempron 2800+ - SDA2800DUT3D (SDA2800BOX). http://www.cpu-world.com/CPUs/K7/AMD-Sempron%202800+%20...

General information

| Field | Value |
| --- | --- |
| Type | CPU / Microprocessor |
| Market segment | Desktop |
| Family | AMD Sempron |
| Model number | 2800+ |
| CPU part number | SDA2800DUT3D |
| Box part number | SDA2800BOX |
| Stepping codes | BCXJB, BIXJB |
| Frequency (MHz) | 2800 (rated), 2000 (actual) |
| Bus speed (MHz) | 333 |
| Clock multiplier | 12 |
| Package | 453-pin organic PGA, 1.95" x 1.95" (4.95 cm x 4.95 cm) |
| AMD Package numbers | 27648, 28104 |
| Socket | Socket A (Socket 462) |
| Introduction date | July 28, 2004 |
| Price at introduction | $109 |

Architecture / Microarchitecture

| Field | Value |
| --- | --- |
| Processor core | Thoroughbred (Model 8) |
| Manufacturing process | 0.13 micron |