Ropes Are Better Than Strings Hans-)

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

On the Mechanics of the Bow and Arrow 1

On the Mechanics of the Bow and Arrow 1 B.W. Kooi Groningen, The Netherlands 1983 1B.W. Kooi, On the Mechanics of the Bow and Arrow PhD-thesis, Mathematisch Instituut, Rijksuniversiteit Groningen, The Netherlands (1983), Supported by ”Netherlands organization for the advancement of pure research” (Z.W.O.), project (63-57) 2 Contents 1 Introduction 5 1.1 Prefaceandsummary.............................. 5 1.2 Definitionsandclassifications . .. 7 1.3 Constructionofbowsandarrows . .. 11 1.4 Mathematicalmodelling . 14 1.5 Formermathematicalmodels . 17 1.6 Ourmathematicalmodel. 20 1.7 Unitsofmeasurement.............................. 22 1.8 Varietyinarchery................................ 23 1.9 Qualitycoefficients ............................... 25 1.10 Comparison of different mathematical models . ...... 26 1.11 Comparison of the mechanical performance . ....... 28 2 Static deformation of the bow 33 2.1 Summary .................................... 33 2.2 Introduction................................... 33 2.3 Formulationoftheproblem . 34 2.4 Numerical solution of the equation of equilibrium . ......... 37 2.5 Somenumericalresults . 40 2.6 A model of a bow with 100% shooting efficiency . .. 50 2.7 Acknowledgement................................ 52 3 Mechanics of the bow and arrow 55 3.1 Summary .................................... 55 3.2 Introduction................................... 55 3.3 Equationsofmotion .............................. 57 3.4 Finitedifferenceequations . .. 62 3.5 Somenumericalresults . 68 3.6 On the behaviour of the normal force -

Simply String Art Carol Beard Central Michigan University, [email protected]

International Textile and Apparel Association 2015: Celebrating the Unique (ITAA) Annual Conference Proceedings Nov 11th, 12:00 AM Simply String Art Carol Beard Central Michigan University, [email protected] Follow this and additional works at: https://lib.dr.iastate.edu/itaa_proceedings Part of the Fashion Design Commons Beard, Carol, "Simply String Art" (2015). International Textile and Apparel Association (ITAA) Annual Conference Proceedings. 74. https://lib.dr.iastate.edu/itaa_proceedings/2015/design/74 This Event is brought to you for free and open access by the Conferences and Symposia at Iowa State University Digital Repository. It has been accepted for inclusion in International Textile and Apparel Association (ITAA) Annual Conference Proceedings by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Santa Fe, New Mexico 2015 Proceedings Simply String Art Carol Beard, Central Michigan University, USA Key Words: String art, surface design Purpose: Simply String Art was inspired by an art piece at the Saint Louis Art Museum. I was intrigued by a painting where the artist had created a three dimensional effect with a string art application over highlighted areas of his painting. I wanted to apply this visual element to the surface of fabric used in apparel construction. The purpose of this piece was to explore string art as unique artistic interpretation for a surface design element. I have long been interested in intricate details that draw the eye and take something seemingly simple to the realm of elegance. Process: The design process began with a research of string art and its many interpretations. -

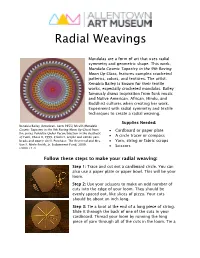

Radial Weavings

Radial Weavings Mandalas are a form of art that uses radial symmetry and geometric shape. This work, Mandala Cosmic Tapestry in the 9th Roving Moon Up-Close, features complex crocheted patterns, colors, and textures. The artist, Xenobia Bailey is known for their textile works, especially crocheted mandalas. Bailey famously draws inspiration from funk music and Native American, African, Hindu, and Buddhist cultures when creating her work. Experiment with radial symmetry and textile techniques to create a radial weaving. Supplies Needed: Xenobia Bailey (American, born 1955) Mv:#9 (Mandala Cosmic Tapestry in the 9th Roving Moon Up-Close) from • Cardboard or paper plate the series Paradise Under Reconstruction in the Aesthetic of Funk, Phase II, 1999, Crochet, acrylic and cotton yarn, • A circle tracer or compass beads and cowrie shell. Purchase: The Reverend and Mrs. • Yarn, string or fabric scraps Van S. Merle-Smith, Jr. Endowment Fund, 2000. • Scissors (2000.17.2) Follow these steps to make your radial weaving: Step 1: Trace and cut out a cardboard circle. You can also use a paper plate or paper bowl. This will be your loom. Step 2: Use your scissors to make an odd number of cuts into the edge of your loom. They should be evenly spaced out, like slices of pizza. Your cuts should be about an inch long. Step 3: Tie a knot at the end of a long piece of string. Slide it through the back of one of the cuts in your cardboard. Thread your loom by running the long piece of yarn through all of the cuts in the loom. -

Data Structure

EDUSAT LEARNING RESOURCE MATERIAL ON DATA STRUCTURE (For 3rd Semester CSE & IT) Contributors : 1. Er. Subhanga Kishore Das, Sr. Lect CSE 2. Mrs. Pranati Pattanaik, Lect CSE 3. Mrs. Swetalina Das, Lect CA 4. Mrs Manisha Rath, Lect CA 5. Er. Dillip Kumar Mishra, Lect 6. Ms. Supriti Mohapatra, Lect 7. Ms Soma Paikaray, Lect Copy Right DTE&T,Odisha Page 1 Data Structure (Syllabus) Semester & Branch: 3rd sem CSE/IT Teachers Assessment : 10 Marks Theory: 4 Periods per Week Class Test : 20 Marks Total Periods: 60 Periods per Semester End Semester Exam : 70 Marks Examination: 3 Hours TOTAL MARKS : 100 Marks Objective : The effectiveness of implementation of any application in computer mainly depends on the that how effectively its information can be stored in the computer. For this purpose various -structures are used. This paper will expose the students to various fundamentals structures arrays, stacks, queues, trees etc. It will also expose the students to some fundamental, I/0 manipulation techniques like sorting, searching etc 1.0 INTRODUCTION: 04 1.1 Explain Data, Information, data types 1.2 Define data structure & Explain different operations 1.3 Explain Abstract data types 1.4 Discuss Algorithm & its complexity 1.5 Explain Time, space tradeoff 2.0 STRING PROCESSING 03 2.1 Explain Basic Terminology, Storing Strings 2.2 State Character Data Type, 2.3 Discuss String Operations 3.0 ARRAYS 07 3.1 Give Introduction about array, 3.2 Discuss Linear arrays, representation of linear array In memory 3.3 Explain traversing linear arrays, inserting & deleting elements 3.4 Discuss multidimensional arrays, representation of two dimensional arrays in memory (row major order & column major order), and pointers 3.5 Explain sparse matrices. -

Bath Time Travellers Weaving

Bath Time Travellers Weaving Did you know? The Romans used wool, linen, cotton and sometimes silk for their clothing. Before the use of spinning wheels, spinning was carried out using a spindle and a whorl. The spindle or rod usually had a bump on which the whorl was fitted. The majority of the whorls were made of stone, lead or recycled pots. A wisp of prepared wool was twisted around the spindle, which was then spun and allowed to drop. The whorl acts to keep the spindle twisting and the weight stretches the fibres. By doing this, the fibres were extended and twisted into yarn. Weaving was probably invented much later than spinning around 6000 BC in West Asia. By Roman times weaving was usually done on upright looms. None of these have survived but fortunately we have pictures drawn at the time to show us what they looked like. A weaver who stood at a vertical loom could weave cloth of a greater width than was possible sitting down. This was important as a full sized toga could measure as much as 4-5 metres in length and 2.5 metres wide! Once the cloth had been produced it was soaked in decayed urine to remove the grease and make it ready for dying. Dyes came from natural materials. Most dyes came from sources near to where the Romans settled. The colours you wore in Roman times told people about you. If you were rich you could get rarer dyes with brighter colours from overseas. Activity 1 – Weave an Owl Hanging Have a close look at the Temple pediment. -

Precise Analysis of String Expressions

Precise Analysis of String Expressions Aske Simon Christensen, Anders Møller?, and Michael I. Schwartzbach BRICS??, Department of Computer Science University of Aarhus, Denmark aske,amoeller,mis @brics.dk f g Abstract. We perform static analysis of Java programs to answer a simple question: which values may occur as results of string expressions? The answers are summarized for each expression by a regular language that is guaranteed to contain all possible values. We present several ap- plications of this analysis, including statically checking the syntax of dynamically generated expressions, such as SQL queries. Our analysis constructs flow graphs from class files and generates a context-free gram- mar with a nonterminal for each string expression. The language of this grammar is then widened into a regular language through a variant of an algorithm previously used for speech recognition. The collection of resulting regular languages is compactly represented as a special kind of multi-level automaton from which individual answers may be extracted. If a program error is detected, examples of invalid strings are automat- ically produced. We present extensive benchmarks demonstrating that the analysis is efficient and produces results of useful precision. 1 Introduction To detect errors and perform optimizations in Java programs, it is useful to know which values that may occur as results of string expressions. The exact answer is of course undecidable, so we must settle for a conservative approxima- tion. The answers we provide are summarized for each expression by a regular language that is guaranteed to contain all its possible values. Thus we use an upper approximation, which is what most client analyses will find useful. -

The Musical Kinetic Shape: a Variable Tension String Instrument

The Musical Kinetic Shape: AVariableTensionStringInstrument Ismet Handˇzi´c, Kyle B. Reed University of South Florida, Department of Mechanical Engineering, Tampa, Florida Abstract In this article we present a novel variable tension string instrument which relies on a kinetic shape to actively alter the tension of a fixed length taut string. We derived a mathematical model that relates the two-dimensional kinetic shape equation to the string’s physical and dynamic parameters. With this model we designed and constructed an automated instrument that is able to play frequencies within predicted and recognizable frequencies. This prototype instrument is also able to play programmed melodies. Keywords: musical instrument, variable tension, kinetic shape, string vibration 1. Introduction It is possible to vary the fundamental natural oscillation frequency of a taut and uniform string by either changing the string’s length, linear density, or tension. Most string musical instruments produce di↵erent tones by either altering string length (fretting) or playing preset and di↵erent string gages and string tensions. Although tension can be used to adjust the frequency of a string, it is typically only used in this way for fine tuning the preset tension needed to generate a specific note frequency. In this article, we present a novel string instrument concept that is able to continuously change the fundamental oscillation frequency of a plucked (or bowed) string by altering string tension in a controlled and predicted Email addresses: [email protected] (Ismet Handˇzi´c), [email protected] (Kyle B. Reed) URL: http://reedlab.eng.usf.edu/ () Preprint submitted to Applied Acoustics April 19, 2014 Figure 1: The musical kinetic shape variable tension string instrument prototype. -

Regular Languages

id+id*id / (id+id)*id - ( id + +id ) +*** * (+)id + Formal languages Strings Languages Grammars 2 Marco Maggini Language Processing Technologies Symbols, Alphabet and strings 3 Marco Maggini Language Processing Technologies String operations 4 • Marco Maggini Language Processing Technologies Languages 5 • Marco Maggini Language Processing Technologies Operations on languages 6 Marco Maggini Language Processing Technologies Concatenation and closure 7 Marco Maggini Language Processing Technologies Closure and linguistic universe 8 Marco Maggini Language Processing Technologies Examples 9 • Marco Maggini Language Processing Technologies Grammars 10 Marco Maggini Language Processing Technologies Production rules L(G) = {anbncn | n ≥ 1} T = {a,b,c} N = {A,B,C} production rules 11 Marco Maggini Language Processing Technologies Derivation 12 Marco Maggini Language Processing Technologies The language of G • Given a grammar G the generated language is the set of strings, made up of terminal symbols, that can be derived in 0 or more steps from the start symbol LG = { x ∈ T* | S ⇒* x} Example: grammar for the arithmetic expressions T = {num,+,*,(,)} start symbol N = {E} S = E E ⇒ E * E ⇒ ( E ) * E ⇒ ( E + E ) * E ⇒ ( num + E ) * E ⇒ (num+num)* E ⇒ (num + num) * num language phrase 13 Marco Maggini Language Processing Technologies Classes of generative grammars 14 Marco Maggini Language Processing Technologies Regular languages • Regular languages can be described by different formal models, that are equivalent ▫ Finite State Automata (FSA) ▫ Regular -

Formal Languages

Formal Languages Discrete Mathematical Structures Formal Languages 1 Strings Alphabet: a finite set of symbols – Normally characters of some character set – E.g., ASCII, Unicode – Σ is used to represent an alphabet String: a finite sequence of symbols from some alphabet – If s is a string, then s is its length ¡ ¡ – The empty string is symbolized by ¢ Discrete Mathematical Structures Formal Languages 2 String Operations Concatenation x = hi, y = bye ¡ xy = hibye s ¢ = s = ¢ s ¤ £ ¨© ¢ ¥ § i 0 i s £ ¥ i 1 ¨© s s § i 0 ¦ Discrete Mathematical Structures Formal Languages 3 Parts of a String Prefix Suffix Substring Proper prefix, suffix, or substring Subsequence Discrete Mathematical Structures Formal Languages 4 Language A language is a set of strings over some alphabet ¡ Σ L Examples: – ¢ is a language – ¢ is a language £ ¤ – The set of all legal Java programs – The set of all correct English sentences Discrete Mathematical Structures Formal Languages 5 Operations on Languages Of most concern for lexical analysis Union Concatenation Closure Discrete Mathematical Structures Formal Languages 6 Union The union of languages L and M £ L M s s £ L or s £ M ¢ ¤ ¡ Discrete Mathematical Structures Formal Languages 7 Concatenation The concatenation of languages L and M £ LM st s £ L and t £ M ¢ ¤ ¡ Discrete Mathematical Structures Formal Languages 8 Kleene Closure The Kleene closure of language L ∞ ¡ i L £ L i 0 Zero or more concatenations Discrete Mathematical Structures Formal Languages 9 Positive Closure The positive closure of language L ∞ i L £ L -

Formal Languages

Formal Languages Wednesday, September 29, 2010 Reading: Sipser pp 13-14, 44-45 Stoughton 2.1, 2.2, end of 2.3, beginning of 3.1 CS235 Languages and Automata Department of Computer Science Wellesley College Alphabets, Strings, and Languages An alphabet is a set of symbols. E.g.: 1 = {0,1}; 2 = {-,0,+} 3 = {a,b, …, y, z}; 4 = {, , a , aa } A string over is a seqqfyfuence of symbols from . The empty string is ofte written (Sipser) or ; Stoughton uses %. * denotes all strings over . E.g.: * • 1 contains , 0, 1, 00, 01, 10, 11, 000, … * • 2 contains , -, 0, +, --, -0, -+, 0-, 00, 0+, +-, +0, ++, ---, … * • 3 contains , a, b, …, aa, ab, …, bar, baz, foo, wellesley, … * • 4 contains , , , a , aa, …, a , …, a aa , aa a ,… A lnlanguage over (Stoug hto nns’s -lnlanguage ) is any sbstsubset of *. I.e., it’s a (possibly countably infinite) set of strings over . E.g.: •L1 over 1 is all sequences of 1s and all sequences of 10s. •L2 over 2 is all strings with equal numbers of -, 0, and +. •L3 over 3 is all lowercase words in the OED. •L4 over 4 is {, , a aa }. Formal Languages 11-2 1 Languages over Finite Alphabets are Countable A language = a set of strings over an alphabet = a subset of *. Suppose is finite. Then * (and any subset thereof) is countable. Why? Can enumerate all strings in lexicographic (dictionary) order! • 1 = {0,1} 1 * = {, 0, 1 00, 01, 10, 11 000, 001, 010, 011, 100, 101, 110, 111, …} • for 3 = {a,b, …, y, z}, can enumerate all elements of 3* in lexicographic order -- we’ll eventually get to any given element. -

String Solving with Word Equations and Transducers: Towards a Logic for Analysing Mutation XSS (Full Version)

String Solving with Word Equations and Transducers: Towards a Logic for Analysing Mutation XSS (Full Version) Anthony W. Lin Pablo Barceló Yale-NUS College, Singapore Center for Semantic Web Research [email protected] & Dept. of Comp. Science, Univ. of Chile, Chile [email protected] Abstract to name a few (e.g. see [3] for others). Certain applications, how- We study the fundamental issue of decidability of satisfiability ever, require more expressive constraint languages. The framework over string logics with concatenations and finite-state transducers of satisfiability modulo theories (SMT) builds on top of the basic as atomic operations. Although restricting to one type of opera- constraint satisfaction problem by interpreting atomic propositions tions yields decidability, little is known about the decidability of as a quantifier-free formula in a certain “background” logical the- their combined theory, which is especially relevant when analysing ory, e.g., linear arithmetic. Today fast SMT-solvers supporting a security vulnerabilities of dynamic web pages in a more realis- multitude of background theories are available including Boolector, tic browser model. On the one hand, word equations (string logic CVC4, Yices, and Z3, to name a few (e.g. see [4] for others). The with concatenations) cannot precisely capture sanitisation func- backbones of fast SMT-solvers are usually a highly efficient SAT- tions (e.g. htmlescape) and implicit browser transductions (e.g. in- solver (to handle disjunctions) and an effective method of dealing nerHTML mutations). On the other hand, transducers suffer from with satisfiability of formulas in the background theory. the reverse problem of being able to model sanitisation functions In the past seven years or so there have been a lot of works and browser transductions, but not string concatenations. -

A Gis Tool to Demonstrate Ancient Harappan

A GIS TOOL TO DEMONSTRATE ANCIENT HARAPPAN CIVILIZATION _______________ A Thesis Presented to the Faculty of San Diego State University _______________ In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science _______________ by Kesav Srinath Surapaneni Summer 2011 iii Copyright © 2011 by Kesav Srinath Surapaneni All Rights Reserved iv DEDICATION To my father Vijaya Nageswara rao Surapaneni, my mother Padmaja Surapaneni, and my family and friends who have always given me endless support and love. v ABSTRACT OF THE THESIS A GIS Tool to Demonstrate Ancient Harappan Civilization by Kesav Srinath Surapaneni Master of Science in Computer Science San Diego State University, 2011 The thesis focuses on the Harappan civilization and provides a better way to visualize the corresponding data on the map using the hotlink tool. This tool is made with the help of MOJO (Map Objects Java Objects) provided by ESRI. The MOJO coding to read in the data from CSV file, make a layer out of it, and create a new shape file is done. A suitable special marker symbol is used to show the locations that were found on a base map of India. A dot represents Harappan civilization links from where a user can navigate to corresponding web pages in response to a standard mouse click event. This thesis also discusses topics related to Indus valley civilization like its importance, occupations, society, religion and decline. This approach presents an effective learning tool for students by providing an interactive environment through features such as menus, help, map and tools like zoom in, zoom out, etc.