"Vertigo Effect" on Your Smartphone: Dolly Zoom Via Single Shot View

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Techniques of Cinematography: 2 (SUPROMIT MAITI)

Dept. of English, RNLKWC, SEM-IV, SEC 2, Techniques of Cinematography: 2 (Supromit Maiti). The Department of English, RAJA N.L. KHAN WOMEN’S COLLEGE (AUTONOMOUS), Midnapore, West Bengal. Course material 2 on Techniques of Cinematography (Some other techniques). A close-up from Mrinal Sen’s Bhuvan Shome (1969). For SEC (English Hons.), Semester IV, Paper SEC 2 (Film Studies). Prepared by Supromit Maiti, Faculty, Department of English, Raja N.L. Khan Women’s College (Autonomous). April, 2020. Techniques of Cinematography (Film Studies, Unit II: Part 2). Dolly shot: A dolly shot uses a camera dolly, which is a small cart with wheels attached to it. The camera and the operator mount the dolly to get smooth horizontal or vertical movement while filming a scene, minimizing any possibility of visual shaking. During a dolly shot, the camera is moved either towards the subject or away from it while the film is rolling. This process is usually referred to as ‘dollying in’ or ‘dollying out’. Establishing shot (pictured: an establishing shot from Death in Venice (1971) by Luchino Visconti): Establishing shots are generally used to relate the characters or individuals in the narrative to the situation, while contextualizing their presence in the scene. An establishing shot generally begins a scene and shoulders the responsibility of conveying to the audience crucial impressions about the scene. Generally a very long, wide-angle shot, an establishing shot clearly displays the surroundings where the actions in the -

295 Platinum Spring 2021 Syllabus Online (No Contact)

CTPR 295 Cinematic Arts Laboratory, 4 Units, Spring 2021. Concurrent enrollment: CTPR 294 Directing in Television, Fiction, and Documentary. Platinum/Section#18488D. Meeting times: Sound/Cinematography: Friday 9-11:50AM; Producing/Editing: Friday 1-3:50PM. Producing Laboratory (online): Instructor: Stephen Gibler, Office Hours: by appointment, SA: Kayla Sun. Cinematography Laboratory (online): Instructor: Gary Wagner, Office Hours: by appointment, SA: Gerardo Garcia. Editing Laboratory (online): Instructor: Avi Glick, Office Hours: by appointment, SA: Alessia Crucitelli. Sound Laboratory (online): Instructor: Sahand Nikoukar, Office Hours: by appointment, SA: Georgia Conrad. Important Phone Numbers (* NO CALLS AFTER 9:00pm *): Joe Wallenstein (213) 740-7126; Student Prod. Office - SPO (213) 740-2895; Prod. Faculty Office (213) 740-3317; Campus Cruiser (213) 740-4911. Course Structure and Schedule: CTPR 295 consists of four laboratories which, in combination, introduce Cinematic Arts Film and Television Production students to major disciplines of contemporary cinematic practice. Students will learn the basic technology, computer programs, and organizational principles of the four course disciplines that are necessary for the making of a short film: 1) Producing 2) Cinematography 3) Editing 4) Sound. Each laboratory has six or seven sessions. Students will participate in exercises, individual projects, lectures and discussions designed to give them a strong foundation, both technical and theoretical, in each of the disciplines. Producing and Cinematography laboratories meet alternate weeks on the same day and time, for three-hour sessions, but in different rooms. Editing and Sound laboratories likewise meet alternate weeks on the same day and time, for three-hour sessions, but in different rooms. Students, therefore, have six hours of CTPR 295 each week. -

10 Tips on How to Master the Cinematic Tools And

10 TIPS ON HOW TO MASTER THE CINEMATIC TOOLS AND ENHANCE YOUR DANCE FILM - the cinematographer's point of view. Your skills at the service of the movement and the choreographer: understand the language of the Dance and be able to transmute it into filmic images. 1. The Subject - The Dance is the Star. When you film, frame and light the Dance, the primary subject is the Dance and the related movement: not the dancers, not the scenography, not the music, just the Dance and nothing else. The Dance is about movement, not about positions. When you film the dance you are filming the movement, not a sequence of positions, and to fully comprehend this concept you must understand what movement is. As the French philosopher Gilles Deleuze said, “we always tend to confuse movement with traversed space…” Movement is the act of traversing; when you film the Dance you film an act, not an aestheticizing image of a subject. At the beginning it is difficult to understand how to film something as abstract as movement, but with practice you will start to focus on what really matters and you will start to forget about the dancers. Movement is life, and the more of it you can capture, the more alive the characters are, and therefore more real, in a way that you can almost touch them, almost dance with them. The Dance is a movement with a rhythm, and when you film it you have to become part of the whole rhythm. As when you add an instrument to a music composition, the vocabulary of cinema is just another layer on the whole art work. -


Camera Movement

MPPP 1383 – Video Technology Production. Basic Techniques for Shooting Moving Footage [CAMERA MOVEMENT]. Prepared by: NUR JANNAH BINTI JAMIL, FATIMAH SARAH BINTI YAACOB. 8 BASIC CAMERA MOVEMENTS: PAN, DOLLY ZOOM, TILT, TRACK/TRUCK, ZOOM, ARC, DOLLY, FOLLOW. TOOLS: Canon EOS 60D (as secondary video recorder); Sony HXR-NX30U Palm Size NXCAM HD Camcorder with Projector & 96GB HDD (as main video recorder); Microdolly Hollywood TH-650DV Tripod system; WF-553T Tripod. PAN. How to do? Rotate the camera to the left or right in a horizontal movement (like turning your head left and right before crossing a road). Purpose? To follow a subject or show the distance between two objects. Pan shots also work great for panoramic views such as a shot from a mountaintop to the valley below. TILT. How to do? Rotate the camera up or down in a vertical movement (like nodding your head up and down). Purpose? Like panning, to follow a subject or to show the top and bottom of a stationary object. With a tilt, you can also show how high something is. For example, a slow tilt up a Giant Sequoia tree shows its grandness and enormity. Here's a good tip: in general, when you tilt up and shoot an object or a person, they look larger and thicker. The subject looks smaller and thinner when you tilt down. DOLLY. How to do? Move the camera closer to or further from the subject over time. Purpose? To follow an object smoothly to get a unique perspective. -

Film Terminology

Film Terminology. Forms of Fiction, English 12. Camera SHOTS: A camera shot is the amount of space that is seen in one shot or frame. Camera shots are used to demonstrate different aspects of a film's setting, characters and themes. As a result, camera shots are very important in shaping meaning in a film. Extreme long shot: A framing in which the scale of the object shown is very small; a building, landscape, or crowd of people would fill the screen. Extreme long shot/Establishing shot: A shot, usually involving a distant framing, that shows the spatial relations among the important figures, objects, and setting in a scene. Long shot: A framing in which the scale of the object shown is very small. A standing human figure would appear nearly half the height of the screen. It is often used to show scenes of action or to establish setting, and is sometimes called an establishing shot. Medium long shot: A framing at a distance that makes an object about four or five feet high appear to fill most of the screen vertically. Medium shot: A framing in which the scale of the object shown is of moderate size. A human figure seen from the waist up would fill most of the screen. Over the shoulder: This shot is framed from behind a person who is looking at the subject. It helps to establish the position of each person and gives the feel of looking at one person from the other’s point of view. It is common to cut between these shots during conversation. Medium close-up: A framing in which the scale of the object is fairly large. A human figure seen from the chest up would fill most of the screen. Close-up shot: A framing in which the scale of the object shown is relatively large; most commonly a person’s head seen from the neck up, or an object of a comparable size that fills most of the screen. -

Introduction

CINEMATOGRAPHY Mailing List: the first 5 years. Introduction: This book consists of edited conversations between DPs, gaffers, their crew and equipment suppliers. As such it doesn’t have the same structure as a “normal” film reference book. Our aim is to promote the free exchange of ideas among fellow professionals: the cinematographer, their camera crew, manufacturers, rental houses and related businesses. Kodak, Arri, Aaton, Panavision, Otto Nemenz, Clairmont, Optex, VFG, Schneider, Tiffen, Fuji, Panasonic, Thomson, K5600, BandPro, Lighttools, Cooke, Plus8, SLF, Atlab and Fujinon are among the companies represented. As we have grown, we have added lists for HD, ACs, Lighting, Post etc., expanding on the original professional cinematography list started in 1996. We started with one list and 70 members in 1996. We now have, in addition to the original list aimed solely at professional cameramen, lists for assistant cameramen, doccos, indies, video and basic cinematography. These have memberships varying from around 1,200 to over 2,500 each. These pages cover the period November 1996 to November 2001. Join us and help expand the shared knowledge: www.cinematography.net. Contents: Introduction; Shooting at 25FPS in a 60Hz Environment; Shooting at 30 FPS; 3D Moving Stills -

The “Vertigo Effect” on Your Smartphone: Dolly Zoom Via Single Shot View Synthesis Supplement

The “Vertigo Effect” on Your Smartphone: Dolly Zoom via Single Shot View Synthesis Supplement. Yangwen Liang, Rohit Ranade, Shuangquan Wang, Dongwoon Bai, Jungwon Lee. Samsung Semiconductor Inc. {liang.yw, rohit.r7, shuangquan.w, dongwoon.bai, jungwon2.lee}@samsung.com

1. View Synthesis based on Camera Geometry

Recall from our paper, consider two pin-hole cameras A and B with camera centers at locations $C_A$ and $C_B$, respectively. From [2], based on the coordinate system of camera A, the projections of the same point $P \in \mathbb{R}^3$ onto these two camera image planes have the following closed-form relationship

$$\begin{pmatrix} u_B \\ 1 \end{pmatrix} = \frac{D_A}{D_B} K_B R (K_A)^{-1} \begin{pmatrix} u_A \\ 1 \end{pmatrix} + \frac{K_B T}{D_B} \tag{1}$$

where $T$ can also be written as

$$T = R\,(C_A - C_B). \tag{2}$$

Here, the $2 \times 1$ vector $u_X$, the $3 \times 3$ matrix $K_X$, and the scalar $D_X$ are the pixel coordinates on the image plane, the intrinsic parameters, and the depths of $P$ for camera $X$, ...

... where $k$ is the same as in the paper such that subject size on focus plane remains the same, i.e.

$$k = \frac{f_1^B}{f_1^A} = \frac{D_0 - t_1}{D_0}. \tag{5}$$

From Eq. 1, we can then obtain the closed-form solution for $u_1^B$ in terms of $u_1^A$ as:

$$u_1^B = \frac{D_1^A (D_0 - t_1)}{D_0 (D_1^A - t_1)}\, u_1^A + \frac{t_1 (D_1^A - D_0)}{D_0 (D_1^A - t_1)}\, u_0 - \frac{(D_0 - t_1)\, f_1^A}{D_0 (D_1^A - t_1)} \begin{pmatrix} m_1 \\ n_1 \end{pmatrix}. \tag{6}$$

By setting $m_1 = n_1 = 0$ and $t_1 = t$, Eq. 6 reduces to Eq. (3) in our paper. 1.2. -
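As a rough illustration of the closed-form warp in the excerpt above, the following Python sketch evaluates the special case with no lateral shift (m1 = n1 = 0). The function names `focal_ratio` and `dolly_zoom_warp`, the argument names, and the use of NumPy are assumptions made for this example and do not come from the supplement; it handles a single pixel with known depth and is not the authors' implementation.

```python
import numpy as np


def focal_ratio(d0, t):
    """Ratio k = f_B / f_A that keeps the subject at depth d0 the same size
    after the camera moves a distance t toward it (hypothetical helper)."""
    return (d0 - t) / d0


def dolly_zoom_warp(u_a, depth_a, d0, t, u0=(0.0, 0.0)):
    """Map a pixel u_a seen by camera A to its position in camera B.

    Assumes the no-lateral-shift case (m1 = n1 = 0):
        u_b = D1*(D0 - t) / (D0*(D1 - t)) * u_a
            + t*(D1 - D0) / (D0*(D1 - t)) * u0
    where D1 = depth_a is the pixel's depth in camera A, D0 = d0 is the
    depth of the focus plane, and u0 is the principal point.
    """
    u_a = np.asarray(u_a, dtype=float)
    u0 = np.asarray(u0, dtype=float)
    denom = d0 * (depth_a - t)
    scale = depth_a * (d0 - t) / denom   # coefficient of u_a
    shift = t * (depth_a - d0) / denom   # coefficient of u0
    return scale * u_a + shift * u0


if __name__ == "__main__":
    # A point on the focus plane (depth == d0) should not move.
    print(dolly_zoom_warp((300.0, 50.0), depth_a=2.0, d0=2.0, t=1.0))
    # A background point (depth > d0) is pulled toward the principal point.
    print(dolly_zoom_warp((300.0, 0.0), depth_a=4.0, d0=2.0, t=1.0))
```

Because the two coefficients sum to one, the warp is a depth-dependent scaling about the principal point: pixels on the focus plane stay fixed while nearer and farther pixels move, which is the visual signature of a dolly zoom.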

Glossary of Filmmaker Terms

Above the Line: Generally the portion of a film's budget that covers the costs associated with major creative talent: the stars, the director, the producer(s) and the writer(s). (See also Below the Line.) Art Director: The crew member responsible for the design, look and feel of a film's set. Includes props, furniture, sets, etc. Reports to the production designer. Assistant Director (A.D.): Carries out the director’s instructions and runs the set. The first A.D. is responsible for preparing the production schedule and script breakdown, making sure shooting stays on schedule and on budget. The second A.D. is responsible for distributing information and cast notifications, keeping track of hours worked by cast and crew, management of extras, signing actors in and out and preparing call sheets. The second A.D. is also in charge of the production ... Clapboard: A small black or white board with a hinged stick on top that displays identifying information for each shot in the movie. Assists with organizing shots during editing process; the clap of the stick allows easier synchronization of sound and video within each shot. Construction Coordinator: Also known as the construction manager, this person supervises and manages the physical construction of sets and reports to the art director and production designer. Dailies: The rough shots viewed immediately after shooting each day by the director, along with the cinematographer or editor. Used to help ensure proper coverage and the quality of the shots gathered. Director: The person in charge of the overall cinematic vision of the film and the performance of the actors. -

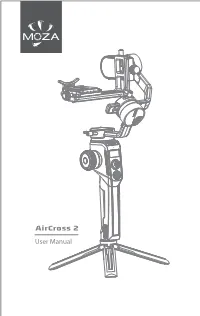

MOZA Aircross2 User Manual

User Manual. Contents: MOZA AirCross 2 Overview; Installation and Balance Adjustment; Installing the Battery; Attaching the Tripod; Unlocking Motors; Mounting the Camera; Balancing; Buttons and OLED Display; Button Functions; LED Indicators; Main Interface; Menu Description; Features Description; Camera Control; Motor Output; PFV, Sport Gear Mode; Manual Positioning; Button Customization; Inception Mode; Balance Check; Sensor Calibration; Language Switch; User Configuration Management; Extension; Manfrotto Quick Release System; Two Camera Mounting Directions; Smartphone and PC Connection; Phone Holder; Firmware Upgrade; SPECS. AirCross 2 Overview (parts diagram): 1 Tilt Knob; 2 Tilt Motor; 3 Tilt Arm; 4 Camera Control Port; 5 Baseplate Knob; 6 Pan Arm; 7 Crash Pad; 8 Pan Motor; 9 3/8” Screw; 10 Trigger; 11 Roll Motor Lock; 12 Pan Knob; 13 Smart Wheel; 14 Indicator Light Ring; 15 Power Button; 16 Power Supply Electrode; 17 USB Type-C Charging Port; 18 Battery Level Indicator; 19 Safety Lock; 20 Roll Motor Lock; 21 Multi-CAN Port; 22 Roll Arm; 23 Roll Knob; 24 Roll Motor; 25 Pan Motor Lock; 26 OLED Screen; 27 Joystick; 28 Dial Wheel; 29 USB Port; 30 Multi-CAN Port; 31 Battery; 32 Battery Lock. Installation and Balance Adjustment. Installing the Battery: a. Press the battery lock downwards; b. Take out the battery; c. Remove the insulating film at the electrode; d. -

A Guide to Working Effectively on the Set for Each Classification in the Cinematographers Guild

The International Cinematographers Guild, IATSE Local 600. Setiquette: A Guide to Working Effectively on the Set for Each Classification in The Cinematographers Guild, including definitions of the job requirements and appropriate protocols for each member of the camera crew and for publicists. 2011. CONTENTS: Rules of Professional Conduct by Bill Hines (practices to be encouraged, practices to be avoided); Directors of Photography compiled by Charles L. Barbee; Responsibilities of the Cinematographer (adapted from the American Society of Cinematographers); Camera Operators compiled by Bill Hines; Pedestal Camera Operators by Paul Basta; Still/Portrait Photographers compiled by Kim Gottlieb-Walker, with the assistance of Doug Hyun, Ralph Nelson, David James, Melinda Sue Gordon and Byron Cohen; 1st and 2nd Camera Assistants compiled by Mitch Block; Loaders compiled by Rudy Pahoyo; Digital Classifications; Preview Technicians by Tony Rivetti; News Photojournalists compiled by Gary Brainard; EPK Crews by Charles L. Barbee; Publicists by Leonard Morpurgo (Unit, Studio, Agency and Photo Editor). Edited by Kim Gottlieb-Walker. Third Edition, 2011 (rev. 5/11). RULES OF PROFESSIONAL CONDUCT by Bill Hines, S.O.C. The following are well-established production practices, presented as guidelines to help members of the International Cinematographers Guild, Local 600, IATSE, function more efficiently, effectively, productively and safely in performing their crafts during the collaborative process of film and video cinematic production. -

Factfile: GCE AS Level Moving Image Arts: Alfred Hitchcock’s Cinematic Style

FACTFILE: GCE AS LEVEL MOVING IMAGE ARTS. ALFRED HITCHCOCK’S CINEMATIC STYLE. Learning outcomes: Students should be able to: • discuss and explain the purpose of the following elements of Hitchcock’s cinematic style: – point-of-view (POV) camera and editing technique; – dynamic camera movements; – high angle shots; – expressive use of the close-up; – cross-cutting; – montage editing; – expressionist lighting techniques; and – using music to create emotion. Course Content: The French director Francois Truffaut praised Alfred Hitchcock’s ability to film the thoughts of his characters without having to use dialogue. Over the course of a long career in cinema that stretched from the silent era into the 1970s, Hitchcock developed and refined a number of filmic techniques that allowed him to experiment with, and imaginatively explore, the unique potential of visual storytelling. The Point-of-view shot (POV): Hitchcock’s signature technique is the use of a subjective camera. The POV shot places the audience in the perspective of the protagonist so that we experience the different emotions of the character, whether it be desire, confusion, shock or fear. Films such as Vertigo (1958) and Rear Window (1954) are seen almost entirely from the point of view of the main character (both played by James Stewart) and this allows the director to create and sustain suspense as we look through the character’s eyes as he attempts to solve a mystery. In Strangers on a Train (1951), before the murderous Bruno strangles Miriam, we see a POV shot from his perspective, her face suddenly illuminated by his cigarette lighter. -

F9 Production Information 1

F9 PRODUCTION INFORMATION. UNIVERSAL PICTURES PRESENTS AN ORIGINAL FILM/ONE RACE FILMS/PERFECT STORM PRODUCTION IN ASSOCIATION WITH ROTH/KIRSCHENBAUM FILMS. A JUSTIN LIN FILM. VIN DIESEL, MICHELLE RODRIGUEZ, TYRESE GIBSON, CHRIS ‘LUDACRIS’ BRIDGES, JOHN CENA, NATHALIE EMMANUEL, JORDANA BREWSTER, SUNG KANG, WITH HELEN MIRREN, WITH KURT RUSSELL AND CHARLIZE THERON. BASED ON CHARACTERS CREATED BY GARY SCOTT THOMPSON. PRODUCED BY NEAL H. MORITZ, p.g.a., VIN DIESEL, p.g.a., JUSTIN LIN, p.g.a., JEFFREY KIRSCHENBAUM, p.g.a., JOE ROTH, CLAYTON TOWNSEND, p.g.a., SAMANTHA VINCENT. STORY BY JUSTIN LIN & ALFREDO BOTELLO AND DANIEL CASEY. SCREENPLAY BY DANIEL CASEY & JUSTIN LIN. DIRECTED BY JUSTIN LIN. TABLE OF CONTENTS: THE SYNOPSIS; THE BACKSTORY; THE CHARACTERS; Dom Toretto – Vin Diesel; Letty – Michelle Rodriguez; Roman – Tyrese Gibson; Tej – Chris “Ludacris” Bridges