Themes and Trends in Space Science Data Processing and Visualization

Recommended publications

University of Iowa Instruments in Space

University of Iowa Instruments in Space A-D13-089-5 Wind Van Allen Probes Cluster Mercury Earth Venus Mars Express HaloSat MMS Geotail Mars Voyager 2 Neptune Uranus Juno Pluto Jupiter Saturn Voyager 1 Spaceflight instruments designed and built at the University of Iowa in the Department of Physics & Astronomy (1958-2019). Spacecraft and launch date (UT): Explorer 1, 1958 Feb. 1; Explorer 2 (launch failure), 1958 Mar. 5; Explorer 3, 1958 Mar. 26; Explorer 4, 1958 July 26; Explorer 5 (launch failure), 1958 Aug. 24; Pioneer 1, 1958 Oct. 11; Pioneer 2, 1958 Nov. 8; Pioneer 3, 1958 Dec. 6; Pioneer 4, 1959 Mar. 3; OGO 4, 1967 July 28; OGO 5, 1968 Mar. 4; Injun 5, 1968 Aug. 8; Explorer 41 (IMP-5), 1969 June 21; Explorer 43 (IMP-6), 1971 Mar. 13; Explorer 45 (SSS), 1971 Nov. 16; UK 4 (Ariel-4), 1971 Dec. 11; Pioneer 10, 1972 Mar. 3; Explorer 47 (IMP-7), 1972 Sept. 23; Juno *, 2011 Aug. 5; Van Allen Probe A *, 2012 Aug. 30; Van Allen Probe B *, 2012 Aug. 30; Magnetospheric Multiscale Mission / 1 *, 2015 Mar. 12; Magnetospheric Multiscale Mission / 2 *, 2015 Mar. 12; Magnetospheric Multiscale Mission / 3 *, 2015 Mar. 12; HERCI CubeSat (Fox-1D satellite), 2018 Jan. 12; HaloSat CubeSat satellite *, 2018 May 21; Magnetospheric Multiscale Mission / 4 *, 2015 Mar. -

Notice Should the Sun Become Unusually Exciting Plasma Instabilities by the Motion of the Active

NOTICE: THIS DOCUMENT HAS BEEN REPRODUCED FROM MICROFICHE. ALTHOUGH IT IS RECOGNIZED THAT CERTAIN PORTIONS ARE ILLEGIBLE, IT IS BEING RELEASED IN THE INTEREST OF MAKING AVAILABLE AS MUCH INFORMATION AS POSSIBLE. SOLAR TERRESTRIAL PROGRAMS: A Five-Year Plan (NASA-TM-82351) SOLAR TERRESTRIAL PROGRAMS: A FIVE YEAR PLAN (NASA) 99 p HC A05/MF A01 CSCL 03B N81-23992 Unclas G3/92 24081. August 1978. Solar Terrestrial Programs Office, Office of Space Sciences, National Aeronautics and Space Administration. SOLAR TERRESTRIAL PROGRAMS: A Five-Year Plan. Prepared by David P. Stern, Laboratory for Extraterrestrial Physics, Goddard Space Flight Center, Greenbelt, Md. Solar Terrestrial Division, Office of Space Sciences, National Aeronautics and Space Administration. "Perhaps the strongest area that we face now is the area of solar terrestrial interaction research, where we are still investigating it in a basic science sense: How does the Sun work? What are all these cycles about? What do they have to do with the Sun's magnetic field? Is the Sun a dynamo? What is happening to drive it in an energetic way? Why is it a variable star to the extent to which it is variable, which is not very much, but enough to be troublesome to us? And what does all that mean in terms of structure? How does the Sun's radiation, either in a photon sense or a particle sense, the solar wind, affect the environment of the Earth, and does it have anything to do with the dynamics of the atmosphere? We have come at that over centuries, in a sense, as a scientific problem, and we are working it as part of space science, and thinking of a sequence of missions in terms of space science. -

Initial Survey of the Wave Distribution Functions for Plasmaspheric Hiss

JOURNAL OF GEOPHYSICAL RESEARCH, VOL. 96, NO. A11, PAGES 19,469-19,489, NOVEMBER 1, 1991. Initial Survey of the Wave Distribution Functions for Plasmaspheric Hiss Observed by ISEE 1. L. R. O. Storey, F. Lefeuvre, M. Parrot, L. Cairó, and R. R. Anderson. Multicomponent ELF/VLF wave data from the ISEE 1 satellite have been analyzed with the aim of identifying the generation mechanism of plasmaspheric hiss, and especially of determining whether it involves wave propagation on cyclic trajectories. The data were taken from four passes of the satellite, of which two were close to the geomagnetic equatorial plane and two were farther from it; all four occurred during magnetically quiet periods. The principal method of analysis was calculation of the wave distribution functions. The waves appear to have been generated over a wide range of altitudes within the plasmasphere, and most, though not all, of them were propagating obliquely with respect to the Earth's magnetic field. On one of the passes near the equator, some wave energy was observed at small wave normal angles, and these waves may have been propagating on cyclic trajectories. Even here, however, obliquely propagating waves were predominant, a finding that is difficult to reconcile with the classical quasi-linear generation mechanism or its variants. The conclusion is that another mechanism, probably nonlinear, must have been generating most of the hiss observed on these four passes. 1. INTRODUCTION mals parallel to the field on the average [Smith et al., 1960; Plasmaspheric hiss is a broad-band and -

An Overview of the Space Physics Data Facility (SPDF) in the Context of “Big Data”

An Overview of the Space Physics Data Facility (SPDF) in the Context of “Big Data” Bob McGuire, SPDF Project Scientist Heliophysics Science Division (Code 670) NASA Goddard Space Flight Center Presented to the Big Data Task Force, June 29, 2016 Topics • As an active Final Archive, what is SPDF? – Scope, Responsibilities and Major Elements • Current Data • Future Plans and BDTF Questions REFERENCE URL: http://spdf.gsfc.nasa.gov 8/3/16 2:33 PM 2 SPDF in the Heliophysics Science Data Management Policy • One of two (active) Final Archives in Heliophysics – Ensure the long-term preservation and ongoing (online) access to NASA heliophysics science data • Serve and preserve data with metadata / software • Understand past / present / future mission data status • NSSDC is continuing limited recovery of older but useful legacy data from media – Data served via FTP/HTTP, via user web i/f, via webservices – SPDF focus is non-solar missions and data • Heliophysics Data Environment (HpDE) critical infrastructure – Heliophysics-wide dataset inventory (VSPO->HDP) – APIs (e.g. webservices) into SPDF system capabilities and data • Center of Excellence for science-enabling data standards and for science-enabling data services SPDF Services • Emphasis on multi-instrument, multi-mission science (1) Specific mission/instrument data in context of other missions/data (2) Specific mission/instrument data as enriching context for other data (3) Ancillary services & software (orbits, data standards, special products) • Specific services include -

Artificial Earth Satellites Designed and Fabricated by the Johns Hopkins University Applied Physics Laboratory

SDO-1600 (Revised). ARTIFICIAL EARTH SATELLITES DESIGNED AND FABRICATED by THE JOHNS HOPKINS UNIVERSITY APPLIED PHYSICS LABORATORY. PREPARED by THE SPACE DEPARTMENT, THE JOHNS HOPKINS UNIVERSITY APPLIED PHYSICS LABORATORY, Johns Hopkins Road, Laurel, Maryland 20810. Operating under Contract N00024-78-C-5384 with the Department of the Navy. Approved for public release; distribution unlimited. 79 03 22 074. Unclassified. PLEASE FOLD BACK IF NOT NEEDED FOR BIBLIOGRAPHIC PURPOSES. SECURITY CLASSIFICATION OF THIS PAGE. REPORT DOCUMENTATION PAGE. 1. REPORT NUMBER 2. GOVT ACCESSION NO 3. RECIPIENT'S CATALOG NUMBER 4. TITLE (and Subtitle): Artificial Earth Satellites Designed and Fabricated by The Johns Hopkins University Applied Physics Laboratory. 5. TYPE OF REPORT & PERIOD COVERED: Status Report, 1959 to date. APL/JHU SDO-1600 7. AUTHOR(s): Space Department 8. CONTRACT OR GRANT NUMBER(s): N00024-78-C-5384 9. PERFORMING ORGANIZATION NAME & ADDRESS: The Johns Hopkins University Applied Physics Laboratory, Johns Hopkins Road, Laurel, Maryland 20810 10. PROGRAM ELEMENT, PROJECT, TASK AREA & WORK UNIT NUMBERS: Task Y22 11. CONTROLLING OFFICE NAME & ADDRESS: Naval Plant Representative Office, Johns Hopkins Road, Laurel, Maryland 20810 12. REPORT DATE: July 1978 13. NUMBER OF PAGES: 235 14. MONITORING AGENCY NAME & ADDRESS: Naval Plant Representative Office, Johns Hopkins Road, Laurel, Maryland 20810 15. SECURITY CLASS. (of this report): Unclassified 15a. DECLASSIFICATION/DOWNGRADING SCHEDULE: N/A 16. DISTRIBUTION STATEMENT (of this Report): Approved for public release; distribution unlimited 17. DISTRIBUTION STATEMENT (of the abstract entered in Block 20, if different from Report): N/A 18. -

The International Space Science Institute

E. Glossaries and Acronyms. E.1 Glossary of Metrology. This section is intended to provide the readers with the definitions of certain terms often used in the calibration of instruments. The definitions were taken from the Swedish National Testing and Research Institute (http://www.sp.se/metrology/eng/terminology.htm), the Guide to the Measurement of Pressure and Vacuum, National Physics Laboratory and Institute of Measurement & Control, London, 1998 and VIM, International Vocabulary of Basic and General Terms in Metrology, 2nd Ed., ISO, Geneva, 1993. Accuracy: The closeness of the agreement between a test result and the accepted reference value [ISO 5725]. See also precision and trueness. Adjustment: Operation of bringing a measuring instrument into a state of performance suitable for its use. Bias: The difference between the expectation of the test results and an accepted reference value [ISO 5725]. Calibration: A set of operations that establish, under specified conditions, the relationship between values of quantities indicated by a measuring instrument (or values represented by a material measure) and the corresponding values realized by standards. The result of a calibration may be recorded in a document, e.g. a calibration certificate. The result can be expressed as corrections with respect to the indications of the instrument. Calibration in itself does not necessarily mean that an instrument is performing in accordance with its specification. Certification: A process performed by a third party that confirms that a defined product, process or service conforms with, for example, a standard. Confirmation: Metrological confirmation is a set of operations required to ensure that an item of measuring equipment is in a state of compliance with requirements for its intended use. -

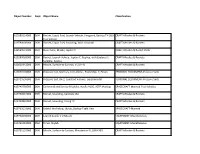

Object Number Dept. Object Name Classification A19580114000 DSH Rocket, Liquid Fuel, Launch Vehicle, Vanguard, Backup TV-2BU

| Object Number | Dept. | Object Name | Classification |
| --- | --- | --- | --- |
| A19580114000 | DSH | Rocket, Liquid Fuel, Launch Vehicle, Vanguard, Backup TV-2BU Test Vehicle | CRAFT-Missiles & Rockets |
| A19590009000 | DSH | Rocket, Liquid Fuel, Sounding, WAC Corporal | CRAFT-Missiles & Rockets |
| A19590031000 | DSH | Nose Cone, Missile, Jupiter C | CRAFT-Missiles & Rocket Parts |
| A19590068000 | DSH | Rocket, Launch Vehicle, Jupiter-C, Replica, with Explorer 1 Satellite, Replica | CRAFT-Missiles & Rockets |
| A19600342000 | DSH | Missile, Surface-to-Surface, V-2 (A-4) | CRAFT-Missiles & Rockets |
| A19670178000 | DSH | Pressure Suit, Mercury, John Glenn, Friendship 7, Flown | PERSONAL EQUIPMENT-Pressure Suits |
| A19720536000 | DSH | Pressure Suit, RX-2, Constant Volume, Experimental | PERSONAL EQUIPMENT-Pressure Suits |
| A19740798000 | DSH | Command and Service Modules, Apollo #105, ASTP Mockup | SPACECRAFT-Manned-Test Vehicles |
| A19760034000 | DSH | Rocket, Sounding, Aerobee 150 | CRAFT-Missiles & Rockets |
| A19760843000 | DSH | Rocket, Sounding, Viking 12 | CRAFT-Missiles & Rockets |
| A19761033000 | DSH | Orbital Workshop, Skylab, Backup Flight Unit | SPACECRAFT-Manned |
| A19761038000 | DSH | Launch Stand, V-2 Missile | EQUIPMENT-Miscellaneous |
| A19761052000 | DSH | Timer, Skylab | EQUIPMENT-Miscellaneous |
| A19761115000 | DSH | Missile, Surface-to-Surface, Minuteman III, LGM-30G | CRAFT-Missiles & Rockets |
| A19761669000 | DSH | Bicycle Ergometer, Skylab | EQUIPMENT-Medical |
| A19761672000 | DSH | Rotating Litter Chair, Skylab | EQUIPMENT-Medical |
| A19761805000 | DSH | Shoes, Restraint, Skylab, Kerwin | PERSONAL EQUIPMENT-Footwear |
| A19761828000 | DSH | Meteorological Satellite, ITOS | SPACECRAFT-Unmanned |

A19770335000 -

Dynamics of the Earth's Radiation Belts and Inner Magnetosphere Newfoundland and Labrador, Canada 17 – 22 July 2011

AGU Chapman Conference on Dynamics of the Earth's Radiation Belts and Inner Magnetosphere Newfoundland and Labrador, Canada 17 – 22 July 2011 Conveners Danny Summers, Memorial University of Newfoundland, St. John's (Canada) Ian Mann, University of Alberta, Edmonton (Canada) Daniel Baker, University of Colorado, Boulder (USA) Program Committee David Boteler, Natural Resources Canada, Ottawa, Ontario (Canada) Sebastien Bourdarie, CERT/ONERA, Toulouse (France) Joseph Fennell, Aerospace Corporation, Los Angeles, California (USA) Brian Fraser, University of Newcastle, Callaghan, New South Wales (Australia) Masaki Fujimoto, ISAS/JAXA, Kanagawa (Japan) Richard Horne, British Antarctic Survey, Cambridge (UK) Mona Kessel, NASA Headquarters, Washington, D.C. (USA) Craig Kletzing, University of Iowa, Iowa City (USA) Janet Kozyra, University of Michigan, Ann Arbor (USA) Lou Lanzerotti, New Jersey Institute of Technology, Newark (USA) Robyn Millan, Dartmouth College, Hanover, New Hampshire (USA) Yoshiharu Omura, RISH, Kyoto University (Japan) Terry Onsager, NOAA, Boulder, Colorado (USA) Geoffrey Reeves, LANL, Los Alamos, New Mexico (USA) Kazuo Shiokawa, STEL, Nagoya University (Japan) Harlan Spence, Boston University, Massachusetts (USA) David Thomson, Queen's University, Kingston, Ontario (Canada) Richard Thorne, University of California, Los Angeles (USA) Andrew Yau, University of Calgary, Alberta (Canada) Financial Support The conference organizers acknowledge the generous support of the following organizations: Cover photo: Andy Kale -

Seasonal-Latitudinal Variations in the Upper Atmosphere Luigi G

DIURNAL AND SEASONAL-LATITUDINAL VARIATIONS IN THE UPPER ATMOSPHERE. Luigi G. Jacchia and Jack W. Slowey. Smithsonian Astrophysical Observatory Special Report 242. Research in Space Science, SAO Special Report No. 242. June 6, 1967. Smithsonian Institution Astrophysical Observatory, Cambridge, Massachusetts 02138. TABLE OF CONTENTS: Abstract; 1. The Effect of the Diurnal Variation on Satellite Drag; 2. Results from Low-Inclination Satellites; 3. Amplitude and Phase of the Diurnal Variation; 4. Results from High-Inclination Satellites, Seasonal Variations; 5. Acknowledgments; 6. References; Biographical Notes. LIST OF ILLUSTRATIONS: 1. The diurnal temperature variation as derived from the drag of Explorer 1. 2. The diurnal temperature variation as derived from the drag of Explorer 8. 3. The diurnal temperature variation as derived from the drag of Vanguard 2. 4. Relative amplitude of the diurnal temperature variation as derived from the drag of three satellites with moderate orbital inclinations, plotted as a function of time and compared with the smoothed 10.7-cm solar flux. 5. Relative amplitude of the diurnal temperature variation as derived from the drag of three satellites with moderate orbital inclinations, plotted as a function of the smoothed 10.7-cm solar flux. 6. The diurnal temperature variation as derived from the drag of Injun 3. -

APL's Space Department After 40 Years: an Overview

APL’s Space Department After 40 Years: An Overview. Stamatios M. Krimigis. The APL Space Department came into being in 1959 to implement the APL-invented global satellite navigation concept for the Navy. The Transit System compiled unparalleled availability and reliability records. Space engineering “firsts” spawned by Transit have constituted standard designs for spacecraft systems ever since. By the mid-1960s, missions were designed to perform geodesy investigations for NASA and, later, astronomy and radiation experiments. In the 1970s, space science missions began to transform the Department from an engineering to a science-oriented organization. The program for ballistic missile defense in the mid-1980s became the principal focus of the Department’s work with overlapping engineering and applied science activity, and lasted through the early 1990s. After the Cold War, science missions in both planetary and Sun–Earth connections plus experiments on NASA spacecraft shifted the projects from some 70% DoD to about 70% NASA. The unique blend of world-class science and instrumentation combined with innovative engineering represents a powerful combination that augurs well for the future of the Space Department. (Keywords: History, Space Department, Space science, Space technology.) EARLY HISTORY The Space Department was officially established by memorandum on 24 December 1959 as the Space Development Division, approximately 3 months after the failed launch of Transit 1A on 17 September 1959.¹ Dr. [...] Geiger-Mueller counters, with the objective of measuring various spectral lines and the intensity of primary cosmic radiation as it was attenuated through the Earth’s atmosphere.² Van Allen’s group also obtained a -

Aurora and the Lower Ionosphere in Relation to Satellite Observations of Particle Precipitation

https://ntrs.nasa.gov/search.jsp?R=19650024104 AURORA AND THE LOWER IONOSPHERE IN RELATION TO SATELLITE OBSERVATIONS OF PARTICLE PRECIPITATION. Bengt Hultqvist*, Goddard Space Flight Center, Greenbelt, Maryland. ABSTRACT: The direct observations of electron precipitation by means of rockets and satellites is reviewed. Upon comparison of the observed energy fluxes with those expected on the basis of optical measurements of aurora, the agreement is found to be good. It is demonstrated that the satellite observations make understandable the observed variable degree of correlation between visual aurora and auroral absorption of radio waves. The precipitated electrons contribute significantly to the night time ionosphere not only in the auroral zone but also over the polar caps and in subauroral latitudes. It does not seem impossible that the observed precipitation of electrons is the main source of the nighttime ionization in the lower ionosphere. The airglow is briefly discussed in relation to observed particle precipitation. Finally, the recent demonstrations of the insufficiency of the Van Allen belt as a source for the precipitated electrons is briefly reviewed. *NASA-National Academy of Sciences-National Research Council Senior Post-Doctoral Resident Research Associate on leave of absence from Kiruna Geophysical Observatory, Kiruna, Sweden. AURORA AND THE LOWER IONOSPHERE IN RELATION TO SATELLITE OBSERVATIONS OF PARTICLE PRECIPITATION. Introduction: The first rocket investigations of particles precipitated into the atmosphere were made by the Iowa group in the early 1950's. -

REINTERPRETING PIONEER DEEP SPACE STATION Alexander Paul

REINTERPRETING PIONEER DEEP SPACE STATION. Alexander Paul Ray. Submitted in partial fulfillment of the requirements for the degree Master of Science in Historic Preservation, Graduate School of Architecture, Planning, and Preservation, Columbia University, May 2017. Acknowledgements. List of figures. Introduction. Part I: Relevance to the preservation field (Definition of a space probe; Why should preservationists be involved?; What should audiences take away?). Part II: Methodology (Literature review; Case study selection; Terminology). Chapter 1: Historical context (Why are space probes historically significant?; History of robotic space exploration in the United States). Chapter 2: Interpretive content (Pioneer as case study; Statement of interpretive themes; Development of interpretive themes). Chapter 3: Current interpretations of historic space probes (Public perception; Audience; Current interpretation; Effective methods). Chapter 4: Proposal for reinterpreting Pioneer Deep Space Station. Conclusion. Bibliography. Acknowledgements: First and foremost, I want to thank my thesis advisor, Jessica Williams. Simply put, I could not have imagined a better person to work with on this thing. Thanks also to all of the Historic Preservation faculty for their support, and in particular Paul Bentel and Chris Neville for their helpful feedback last semester. I am also especially grateful to my thoughtful readers, Will Raynolds and Mark Robinson. My gratitude also goes out to the entire Lunar Reconnaissance Orbiter Camera team at Arizona State University. Having the opportunity to meet with these talented and welcoming people got me excited to write about space probes. Last but not least, thank you to all of my classmates.