AES 143rd Convention Program, October 18–21, 2017

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Special Appearances by Dr. Angela Davis, Haim, Saweetie, Elaine Welteroth, and More Added to the Recording Academy®'s Women in the Mix® Grammy® Week Event

WHO: Joining the previously announced lineup for the Recording Academy®'s virtual Women In The Mix® event are Terri Lyne Carrington, current GRAMMY®-nominated artist; Lanre Gaba, General Manager/Senior Vice President of Urban A&R, Atlantic Records; IV Jay, singer/songwriter; Emily Lazar, current three-time GRAMMY-nominated mastering engineer; Joanie Leeds, current GRAMMY-nominated artist; Saweetie, rapper and songwriter; and Elaine Welteroth, journalist and New York Times best-selling author. Viewers can also expect special appearances by Dr. Angela Davis and current two-time GRAMMY nominees HAIM. Previously announced participants include Christine Albert, Chair Emeritus, Recording Academy Board of Trustees; Ingrid Andress, current three-time GRAMMY-nominated singer/songwriter; Denisia "Blu June" Andrews (Nova Wav), current three-time GRAMMY-nominated songwriter; Valeisha Butterfield Jones, Chief Diversity, Equity & Inclusion Officer, Recording Academy; Brittany "Chi" Coney (Nova Wav), current three-time GRAMMY-nominated songwriter; Rocsi Diaz, television personality; Maureen Droney, Senior Managing Director, Recording Academy Producers & Engineers Wing®; Chloe Flower, classical pianist & composer; Tera Healy, Senior Director, East Region, Recording Academy; Tammy Hurt, Vice Chair, Recording Academy Board of Trustees; Leslie Ann Jones, five-time GRAMMY-winning engineer and Recording…

Brief History of Electronic and Computer Musical Instruments

Roman Kogan, April 15th, 2008. 1 Theremin: the birth of electronic music. It is impossible to speak of electronic music and not speak of the Theremin (remember that high-pitched melody in Good Vibrations?). The Theremin was the instrument that started it all. Invented remarkably early, around 1917, in Russia by Leon Termen (or Theremin; spelling varies), it was the first practical (and portable) electronic musical instrument, and also the one that brought electronic sound to the masses (see [27]). It was preceded by the Telharmonium, a multi-ton monstrosity that never really got a lot of attention (although technically very innovative, see [25]), and some other instruments that fell into obscurity. Leon Theremin, on the other hand, became popular well beyond the Soviet Union (where even Lenin got to play his instrument once!). He became a star in the US and taught a generation of Theremin players, Clara Rockmore being the most famous one. In fact, RCA even manufactured Theremins to Leon's design in 1929 ([27])! So what was this instrument? It was a box with two antennas that produced continuous, high-pitched sounds. The performer would approach the instrument and wave hands around the antennas to play it. The distance to the right (vertical) antenna would change the pitch, while the distance to the left (horizontal) antenna would change the volume of the sound (see [2], [3] for more technical details). The Theremin is difficult to play since, as on a violin, the notes and the volume are not quantized (the change in pitch is continuous).

The Michael J. Fox Foundation 2017 Annual Report

Roadmaps for Progress: 2017 Annual Report. The Michael J. Fox Foundation is dedicated to finding a cure for Parkinson’s disease through an aggressively funded research agenda and to ensuring the development of improved therapies for those living with Parkinson’s today. Table of Contents: A Note from Michael; Annual Letter from the CEO and the Co-Founder; Roadmaps for Progress; 2017 in Photos; 2017 Donor Listing; Legacy Circle; Industry Partners; Corporate Gifts; Tributees; Recurring Gifts; Team Fox; Team Fox Lifetime MVPs; The MJFF Signature Series; Team Fox in Photos; Financial Highlights; Credits; Boards and Councils. Milestone Markers: Throughout the book, look for stories of some of the dedicated Michael J. Fox Foundation community members whose generosity and collaboration are moving us forward. “What matters most isn’t getting diagnosed with Parkinson’s, it’s what you do next. The choices we make after we’re diagnosed can open doors to possibilities you’d never imagine.” A Note from Michael J. Fox. Dear Friend, One of the great gifts of my life is that I've been in a position to take my experience with Parkinson's and combine it with the perspectives and expertise of others to accelerate improved treatments and a cure. In 2017, thanks to your generosity and fierce belief in our shared mission, we moved closer to this goal than ever before. For helping us put breakthroughs within reach, thank you.

Architecture and Acoustics of Recording Studios Analysis to Prediction to Realization

Distinguished Guest Lecture, Thursday, April 30, School of Architecture, Greene Gallery. Department of Architectural Acoustics. Architecture and Acoustics of Recording Studios: Analysis to Prediction to Realization, presented by John Storyk, R.A., AES, Principal, Walters-Storyk Design Group. The recording studio has changed dramatically in the past 10 years in ways that were almost unpredictable. Both the business models and the studio design/equipment configurations are markedly different, with changes continuing virtually every week. The shift from large ‘all at once’ sessions to a file-based mixing process has ushered in a new era of small, yet extremely powerful and highly flexible audio content creation environments. These ‘next generation’ rooms continue to depend on pristine acoustics, and the aesthetic and comfort factors remain high priorities. Studios will always want to provide the ultimate critical listening conditions and standards. Digital recording and mixing technology have changed, but the skills of recording, mixing and mastering engineers will continue to drive the creative process. These rooms will continue to depend on the architect/acoustician’s ability to create or ‘tune’ the environment to maximize its acoustic potential. Berklee College of Music opened the doors to its 160 Massachusetts Avenue residence tower in January 2014. The ten-studio, Walters-Storyk Design Group-designed audio education component represents a pinnacle of contemporary studio planning. Photo captions: Jungle City Studios, New York, NY; Diante de trono, Brazil; Paul Epworth, The Church, London. John Storyk, Co-Founder and Principal of Walters-Storyk Design Group, has been responsible for designing over 3000 media and content creation facilities worldwide. Credits include Jimi Hendrix's Electric Lady Studios (1969); NYC’s Jazz At Lincoln Center and Le Poisson Rouge; broadcast facilities for The Food Network, ESPN, and WNET; major education complexes for NYU and Berklee College of Music, Boston (2015 TEC winner) and Valencia, Spain; and media rooms for such corporate clients as Hoffman La Roche.

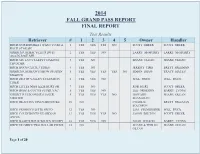

Fall Grand Pass Report Final Report

2014 Fall Grand Pass Report, Final Report. Test Results:

| Retriever | # | Test 1 | Test 2 | Test 3 | Test 4 | Test 5 | Owner | Handler |
|---|---|---|---|---|---|---|---|---|
| HRCH NORTHFORKS I WANT TO BE A ROCK STAR SH | 1 | YES | YES | YES | NO | | SCOTT GREER | SCOTT GREER |
| GRHRCH UH MAC'S LET'S DO IT AGAIN JOSIE MH | 2 | YES | YES | NO | | | LARRY McMURRY | LARRY McMURRY |
| HRCH UH LUCY'S LEFTY DAKOTA THUNDER | 3 | YES | NO | | | | SHANE OLEAN | SHANE OLEAN |
| HRCH BOO'S CLICK 3 TIMES | 4 | YES | NO | | | | JEREMY TIMS | BRETT FREEMAN |
| GRHRCH UH SHAW'S SHOW STOPPIN' SHADOW | 5 | YES | YES | YES | YES | NO | SIMON SHAW | TRACY HAYES |
| HRCH DEATH VALLEY'S CLEMSON TIGER | 6 | YES | YES | NO | | | WILL PRICE | WILL PRICE |
| HRCH LITTLE MISS IZZIE HURT SH | 7 | YES | NO | | | | ROB HURT | SCOTT GREER |
| HRCH BOSS & COCO'S SUPER VAC | 8 | YES | YES | NO | | | LEE ORGERON | BARRY LYONS |
| GRHRCH UH BOOMER'S JAGER MEISTER | 9 | YES | YES | YES | NO | | HOWARD SHANAHAN | SHANE OLEAN |
| HRCH DEACON'S VINEYARD DUKE | 10 | NO | | | | | CHARLIE SOLOMON | BRETT FREEMAN |
| HRCH FROGGY'S LITTE FRITO | 12 | YES | NO | | | | LISA STEMBRIDGE | WILL PRICE |
| HRCH COLDFRONTS GUARDIAN ANGEL | 13 | YES | YES | YES | NO | | JASON BELTON | SCOTT GREER |
| HRCH BASIC'S ROUX WILD'N WOODY | 14 | YES | YES | NO | | | NOAH WILSON | BARRY LYONS |
| HRCH UH SDK'S TWO DOLLAR PISTOL | 15 | NO | | | | | SHANE & TERI JO OLEAN | SHANE OLEAN |
| HRCH ACADEMY'S HIGHWAY JUNKIE | 16 | YES | YES | YES | NO | | DEREK & MELINDA RANDLE | BRETT FREEMAN |
| HRCH MEGAN'S BLACK ROSE | 17 | NO | | | | | MARSHA HINCHER | TRACY HAYES |
| HRCH BULLET'S BIG TRIGGER | 18 | YES | YES | YES | YES | YES | BRIAN SULLIVAN | WILL PRICE |
| HRCH SPOOKS TIMBER TIN LIZZY | 19 | YES | YES | YES | NO | | WADE OLIVER | SCOTT GREER |
| HRCH BLUE'S & ROUX'S RAJIN PUNKIN | 20 | YES | YES | NO | | | GLENN POST | BARRY LYONS |
| HRCH SDK'S MORE COWBELL | 21 | YES | YES | YES | NO | | SHANE & TERI JO OLEAN | SHANE OLEAN |

HRCH ACADEMY'S DO WHAT I DO…

Pure Magazine

PURE MAGAZINE, October 2017, Issue 22. Jack O’Rourke Talks Music, Electric Picnic, Father John Misty and more… The Evolution Of A Modern Gentleman: Jimmy Star chats with Hollywood actor Sean Kanan. Sony’s VR capabilities establish them as serious contenders in the Virtual Reality headset war! Eli Lieb Talks Music, Creating & Happiness… Featured Artist: Dionne Warwick. Marilyn Manson, Cheap Trick, Otherkin + much more. EMMA LANGFORD: Eileen Shapiro chats with award-winning artist Emma Langford about her debut album, tour news plus more (turn to page 16). @PureMzine, Pure M Magazine, WWW.PUREMZINE.COM. Editor in Chief: Trevor Padraig. Editorial: Paddy Dunne, Sarah Swinburne, Shane O’ Rielly. Contributors: Dave Simpson, Eileen Shapiro, Jimmy Star, Garrett Browne, Danielle Holian, Simone Smith, Irvin Gamede. Front Cover: Ken Coleman, www.artofkencoleman.com. Marilyn Manson, Heaven Upside Down: Mesmerising forty seven and a half minutes... Alt-rock icon Marilyn Manson is back brandishing a brand new album entitled Heaven Upside Down. Tackling themes such as sex, romance, violence and politics, the highly-anticipated sequel to 2015’s The Pale Emperor features ten typically tumultuous tracks for fans to feast upon. It’s introduced through the sinister static of “Revelation #12” before a brilliantly bracing riff begins to blare out beneath a… “Say10” showcases a captivatingly creepy coalescence of hauntingly hushed verses and fantastically fierce choruses next as it saunters unsettlingly towards the comparatively cool and catchy “Kill4Me”. This is succeeded by the seductively psychedelic “Saturnalia”, the dreamy yet energetic delivery of which ensures it stays entrancing until “Je$u$ Cri$i$” arrives to rivet with its raucous refrain and rebellious instrumentation.

Download This List As PDF Here

QuadraphonicQuad Multichannel Engineers of 5.1 SACD, DVD-Audio and Blu-Ray Surround Discs, July 2021 (updated 2021-7-16)

| Engineer | Year | Artist | Title | Format | Notes |
|---|---|---|---|---|---|
| 5.1 Production Services | | Dishwalla | Live… Greetings From The Flow State | | |
| Abraham, Josh | 2003 | Staind | 14 Shades of Grey | DVD-A | with Ryan Williams |
| Acquah, Ebby | | Depeche Mode | 101 Live | SACD | |
| Ahern, Brian | 2003 | Emmylou Harris | Producer’s Cut | DVD-A | |
| Ainlay, Chuck | | David Alan | David Alan | DVD-A | |
| Ainlay, Chuck | 2005 | Dire Straits | Brothers In Arms | DVD-A DualDisc/SACD | |
| Ainlay, Chuck | | Dire Straits | Alchemy Live | DVD/BD-V | |
| Ainlay, Chuck | | Everclear | So Much for the Afterglow | DVD-A | |
| Ainlay, Chuck | | George Strait | One Step at a Time | DTS CD | |
| Ainlay, Chuck | | George Strait | Honkytonkville | DVD-A/SACD | |
| Ainlay, Chuck | 2005 | Mark Knopfler | Sailing To Philadelphia | DVD-A DualDisc | |
| Ainlay, Chuck | 2005 | Mark Knopfler | Shangri La | DVD-A DualDisc/SACD | |
| Ainlay, Chuck | | Mavericks, The | Trampoline | DTS CD | |
| Ainlay, Chuck | | Olivia Newton John | Back With a Heart | DTS CD | |
| Ainlay, Chuck | | Pacific Coast Highway | Pacific Coast Highway | DTS CD | |
| Ainlay, Chuck | | Peter Frampton | Frampton Comes Alive! | DVD-A/SACD | |
| Ainlay, Chuck | | Trisha Yearwood | Where Your Road Leads | DTS CD | |
| Ainlay, Chuck | | Vince Gill | High Lonesome Sound | DTS CD/DVD-A/SACD | |
| Anderson, Jim | | Donna Byrne | Licensed to Thrill | SACD | |
| Anderson, Jim | | Jane Ira Bloom | Sixteen Sunsets | BD-A | |
| Anderson, Jim | 2018 | Jane Ira Bloom | Early Americans | BD-A | 2018 Grammy Winner: Best Surround Album |
| Anderson, Jim | 2020 | Jane Ira Bloom | Wild Lines: Improvising on Emily Dickinson | DSD/DXD Download | |
| Anderson, Jim | | Jazz Ambassadors/Sammy Nestico | The Sammy Sessions | BD-A | |
| Anderson, Jim | | Masur/Stavanger Symphony Orchestra | Kverndokk: Symphonic Dances | BD-A | |
| Anderson, Jim | | Patricia Barber | Modern Cool | BD-A | |
| Anderson, Jim | 2020 | Patricia Barber | Higher | SACD/DSD & DXD Download | with Ulrike Schwarz |
| Anderson, Jim | 2021 | Patricia Barber | Clique | SACD/DSD & DXD Download | |
| Anderson, Jim | | Svilvay/Stavanger Symphony… | Mortensen: Symphony Op.… | | |

From the Sound Board to the GRAMMY Board: John Poppo, ’84, Chairman of the Recording Academy’s Board of Trustees

The Magazine for Fredonia Alumni and Friends, Spring 2016. Cover story: From the sound board to the GRAMMY board: John Poppo, ’84, Chairman of The Recording Academy’s Board of Trustees. Coming of age: Fredonia senior Steve Moses wins ‘Big Brother 17’ on CBS. A tandem in tune: Singer Nia Drummond and manager Michelle Cope form unique partnership. College of Education launches new Language and Learning master’s program. ADMISSIONS EVENTS. Open House Dates: Monday, Feb. 15 (Presidents Day); Saturday, April 9 (Accepted Student Reception). Saturday Visit Dates: Saturday, March 5; Saturday, March 12; Saturday, April 23; Saturday, June 11. Students and families can also visit any day during the academic year. Just contact Admissions to arrange an appointment. To learn more, visit fredonia.edu/admissions/visit or call 1-800-252-1212. ALUMNI AND CAMPUS EVENTS CALENDAR. Please check alumni.fredonia.edu as details are confirmed. For event registration and payment, go to http://alumni.fredonia.edu/Events.aspx, or contact the Alumni Affairs office at (716) 673-3553. Los Angeles, Calif., Brunch: Sunday, March 20, Getty Museum, 11 a.m.–1 p.m., 200 Getty Center Drive; $25; self-paid parking $15 (give 30 minutes to ride tram to museum). Chicago, Ill., Reception: Saturday, May 21, 2–4 p.m., Midwest Youth Organization (Music School), 878 Lyster Road, Highwood, IL; hosted by alumnus Allan and… SEPTEMBER: New York State Tour, Monday–Thursday, Sept. 12–15, locations TBA.

Community Services Report 2009-2010

Music is a gift that has touched all our lives. When musicians and those who support them share the gift of music, they bring people together in a way nothing else has the power to do. In 1989, The Recording Academy® established MusiCares® to sustain music and its makers. MISSION: MusiCares provides a safety net of critical assistance for music people in times of need. MusiCares’ services and resources cover a wide range of financial, medical and personal emergencies, and each case is treated with integrity and confidentiality. MusiCares also focuses the resources and attention of the music industry on human service issues that directly impact the health and welfare of the music community. In 2010, MusiCares experienced a landmark year by serving the largest number of clients to date, more than 2,200, with $2.5 million in aid. OUR PROGRAMS AND SERVICES: Since its inception, MusiCares has developed into a premier support system for music people by providing innovative programs and services designed to meet the specific needs of its constituents. “Thank you so much for supporting our family and remembering the players.” (MusiCares Client) EMERGENCY FINANCIAL ASSISTANCE PROGRAM: The “heart and soul” of MusiCares is the Emergency Financial Assistance Program. With a commitment to providing help to those in need as quickly as possible, this program provides assistance for basic living expenses including rent, utilities and car payments; medical expenses including doctor, dentist and hospital bills; psychotherapy; and treatment for HIV/AIDS, Parkinson’s disease, Alzheimer’s disease, hepatitis C and other critical illnesses.

741 F.2d 896, United States Court of Appeals, Seventh Circuit

741 F.2d 896 United States Court of Appeals, Seventh Circuit. Ronald H. SELLE, Plaintiff-Appellant, v. Barry GIBB, et al., Defendants-Appellants, and Ronald H. SELLE, Plaintiff-Appellee, v. Barry GIBB, et al., Defendants-Appellants. Nos. 83–2484, 83–2545. | Argued April 13, 1984. | Decided July 23, 1984. Opinion CUDAHY, Circuit Judge. The plaintiff, Ronald H. Selle, brought a suit against three brothers, Maurice, Robin and Barry Gibb, known collectively as the popular singing group, the Bee Gees, alleging that the Bee Gees, in their hit tune, “How Deep Is Your Love,” had infringed the copyright of his song, “Let It End.” The jury returned a verdict in plaintiff’s favor on the issue of liability in a bifurcated trial. The district court, Judge George N. Leighton, granted the defendants’ motion for judgment notwithstanding the verdict and, in the alternative, for a new trial. Selle v. Gibb, 567 F.Supp. 1173 (N.D.Ill.1983). We affirm the grant of the motion for judgment notwithstanding the verdict. I Selle composed his song, “Let It End,” in one day in the fall of 1975 and obtained a copyright for it on November 17, 1975. He played his song with his small band two or three times in the Chicago area and sent a tape and lead sheet of the music to eleven music recording and publishing companies. Eight of the companies returned the materials to Selle; three did not respond. This was the extent of the public dissemination of Selle’s song.1 Selle first became aware of the Bee Gees’ song, “How Deep Is Your Love,” in May 1978 and thought that he recognized the music as his own, although the lyrics were different.

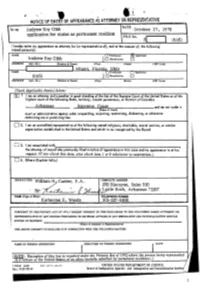

LIT2013000004 - Andy Gibb.pdf

NOTICE OF ENTRY OF APPEARANCE AS ATTORNEY OR REPRESENTATIVE. Date: October 27, 1978. In re: Andrew Roy Gibb, application for status as permanent resident. File No. A (b)(6). I hereby enter my appearance as attorney for (or representative of), and at the request of, the following named person(s): Name: Andrew Roy Gibb (Petitioner / Applicant / Beneficiary). Address (Apt. No.) (Number & Street) (City) (State) (ZIP Code): [illegible]. Name: (b)(6) (Applicant). Address (Apt. No.) (Number & Street) (City) (ZIP Code): [blank]. Check applicable item(s) below: [X] 1. I am an attorney and a member in good standing of the bar of the Supreme Court of the United States or of the highest court of the following State, territory, insular possession, or District of Columbia: Arkansas Supreme Court (Name of Court), and am not under a court or administrative agency order suspending, enjoining, restraining, disbarring, or otherwise restricting me in practicing law. [ ] 2. I am an accredited representative of the following named religious, charitable, social service, or similar organization established in the United States and which is so recognized by the Board: [blank]. [ ] 3. I am associated with [blank], the attorney of record who previously filed a notice of appearance in this case, and my appearance is at his request. (If you check this item, also check item 1 or 2, whichever is appropriate.) [ ] 4. Others (Explain fully.) Signature: [illegible]. Complete address: Willi[illegible] P.A., 2311 Biscayne, Suite 320, Little Rock, Arkansas 72207.

Media Ecologies: Materialist Energies in Art and Technoculture, Matthew Fuller, 2005

Media Ecologies: Materialist Energies in Art and Technoculture. Matthew Fuller. New media/technology. In Media Ecologies, Matthew Fuller asks what happens when media systems interact. Complex objects such as media systems (understood here as processes, or elements in a composition as much as “things”) have become informational as much as physical, but without losing any of their fundamental materiality. Fuller looks at this multiplicitous materiality: how it can be sensed, made use of, and how it makes other possibilities tangible. He investigates the ways the different qualities in media systems can be said to mix and interrelate, and, as he writes, “to produce patterns, dangers, and potentials.” Fuller draws on texts by Félix Guattari and Gilles Deleuze, as well as writings by Friedrich Nietzsche, Marshall McLuhan, Donna Haraway, Friedrich Kittler, and others, to define and extend the idea of “media ecology.” Arguing that the only way to find out about what happens when media systems interact is to carry out such interactions, Fuller traces a series of media ecologies, “taking every path in a labyrinth simultaneously,” as he describes one chapter. He looks at contemporary London-based pirate radio and its interweaving of high- and low-tech media systems; the “medial will to power” illustrated by “the camera that ate itself”; how, as seen in a range of compelling interpretations of new media works, the capacities and behaviors of media objects are affected when they are in “abnormal” relationships with other objects; and each step in a sequence of Web pages, Cctv—world wide watch, that encourages viewers to report crimes seen via webcams. “Media Ecologies offers an exciting first map of the mutational body of analog and digital media technologies. Fuller rethinks the generation and interaction of media by connecting the ethical and aesthetic dimensions of perception.” (Luciana Parisi, Leader, MA Program in Cybernetic Culture, University of East London)