HCI Theory

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

IGU GSF Graphic Design Section Special Talent Exam Applications Started!

FACULTY OF FINE ARTS News and Events Bulletin • August 2021 • Issue: 14 IGU GSF GRAPHIC DESIGN SECTION SPECIAL TALENT EXAM APPLICATIONS STARTED! www.gelisim.edu.tr 0 212 422 70 00 GSF Events ISTANBUL GELISIM UNIVERSITY FACULTY OF FINE ARTS Faculty of Fine Arts Student Handbook Was Published! Istanbul Gelisim University (IGU) aims to move away from the understanding of standard education, to be the leading name of quality and professional education and to maintain this success globally, to reject pure teaching, to remove the obstacles in front of original and creative thinking and to become one of the respected and leading educational institutions in the national and international arena. In this context, the Student Handbook prepared for the Faculty of Fine Arts was published. Research Assistant Büşra Kamacıoğlu Gave Information About Applied Courses of the Communication and Design Department Research Assistant Büşra Kamacıoğlu of the Communication and Design Department at the Istanbul Gelisim University (IGU) Faculty of Fine Arts (GSF) said the following about the practical lessons: "With the aim of educating students who can keep up with the needs of the information age, the Department of Communication and Design updated its curriculum in 2018 with the approval of the international accreditation board AQAS, focusing on the field of "Multimedia", and teaches courses in Mac/PC computer laboratories[...]" GSF Events Communication and Design Department Broadcast Live as Part of Online Promotion Days Assistant Professor Zerrin Funda Ürük of the Istanbul Gelisim University (IGU) Faculty of Fine Arts (GSF) Communication and Design Department moderated the live broadcast on the Instagram platform with Assistant Professor Çağlayan Hergül, Head of the Department of Communication and Design. -

What Galaţi Is Borrowing 185 Million Lei For

FRIDAY, 19 JULY 2019 WWW.VIATA-LIBERA.RO INDEPENDENT DAILY / GALAŢI, ROMANIA / YEAR XXX, NO. 9065 / 20 PAGES / 20 PAGES FREE TV SUPPLEMENT / 2 LEI. AVIATION DAY CELEBRATED IN TECUCI. FLIGHT. On Saturday, 20 July, Romanian Aviation Day will be celebrated at the Muzeul Aeronauticii Moldovei in Tecuci with demonstration flights, lectures and museum visits. > p. 09. PROBLEMS AT UNITED BECAUSE OF THE FRF. FUTSAL. League runner-up United Galaţi is facing squad problems following rule changes decided by the Executive Committee of the Romanian Football Federation. > p. 07. CITY HALL PAYS OFF TWO LOANS AND TAKES OUT TWO MORE > EVENT/05. What Galaţi is borrowing 185 million lei for. To buy 40 new buses, City Hall took out a loan of 100 million lei; a second loan, of 85 million lei, is intended to refinance two older loans. A. Melinte. Photo: Bogdan Codrescu. UP TO DATE: Patricia Ţig has qualified for the quarterfinals of the BRD Bucharest Open > 07. Weather: 30˚, variable. BNR exchange rate: €4.73, $4.21. FREE with "Viaţa liberă". Health insurance system blocked for three weeks. REMUS BASALIC [email protected] Since the end of June, the health card system has [...] with the Casa Naţională de Asigurări de Sănătate (CNAS). Yesterday CNAS attempted to restart the program, but the system remained blocked. Thus, if I give a prescription or a referral to an uninsured person, that service will then be charged to me. -

Fare Arte.Pdf

The texts and images published here derive from the two talks held at the Associazione Romana Acquerellisti on 2 and 16 December 2017. ISSN 2420-8442. 2018. Table of contents: 1. The problem, by PierLuigi Albini 2. Art and science, by PierLuigi Albini 3. Prompts for the conversation, by Claudio Falasca 4. Art and technoscience, by PierLuigi Albini 5. Artistic movements in the visual arts: the twentieth century, by Claudio Falasca. Bibliographies. PierLuigi Albini's texts are adaptations and revisions of writings published on Ticonzero in 2012/2014; Claudio Falasca's texts were written for the two seminars of the Associazione Romana Acquerellisti. The texts can be read in the order of the table of contents or in the logical sequence 1-2-4 and 3-5. 1. The problem. At the center of these reflections on art are science and the technologies, the latter understood broadly (digital, biological, materials and so on): that is, their impetuous advance (materials and means of expression) and the profound change in the traditional view of the world brought about by science. Here and in the next talk I essentially follow the lesson of Renato Barilli, who bases his interpretation of the concept of what is artistic on three main factors: i. developments in technology or material culture; ii. the comparison between the field of art and those of the other arts and the sciences; iii. the internal (intrinsic) oscillations of the field of art.1 Barilli's is also an interpretation of historical, as well as artistic, development, according to which history certainly never repeats itself but follows a spiral course: returns that resemble earlier solutions and styles and yet are never identical to them. -

"Superstroke and Superblur Art", Pamukkale Üniversitesi Sosyal Bilimler Enstitüsü Dergisi, Issue 44, Denizli, pp.

Pamukkale Üniversitesi Sosyal Bilimler Enstitüsü Dergisi / Pamukkale University Journal of Social Sciences Institute ISSN 1308-2922 E-ISSN 2147-6985 Article Info: Received: 09.08.2020 Accepted: 23.10.2020 DOI: 10.30794/pausbed.778411 Research Article Cançat, A. (2021). "Superstroke ve Superblur Sanat", Pamukkale Üniversitesi Sosyal Bilimler Enstitüsü Dergisi, Sayı 44, Denizli, ss. 41-48. SUPERSTROKE AND SUPERBLUR ART Aysun CANÇAT* Abstract This study addresses the Superstroke and Superblur art movements, which remain actively engaged in artistic activity but are little known and rarely mentioned. Influenced by past movements and approaches, yet asserting themselves with a distinct stance, these art movements have published manifestos stating their aims. Reactive art movements that draw inspiration in particular from movements such as Cubism, Expressionism and Pointillism are of interest within art history for the works they produce using different techniques and symbols. These reactions hold that distinctive new styles can be created without adhering to Western approaches and by also building on existing painting traditions. Indeed, through these approaches some countries have been able to change direction in their prevailing art styles. With this understanding, the study evaluates the Superstroke and Superblur art movements both in relation to each other and alongside other movements, with examples from artists. Keywords: Superstroke, Superblur, Art. SUPERSTROKE -

An Art Miscellany for the Weary & Perplex'd. Corsham

An art miscellany for the weary & perplex’d Conceived and compiled for the benefit of [inter alios] novice teachers by Richard Hickman Founder of ZArt ii For Anastasia, Alexi.... and Max Cover: Poker Game Oil on canvas 61cm X 86cm Cassius Marcellus Coolidge (1894) Retrieved from Wikimedia Commons [http://creativecommons.org/licenses/by-sa/3.0/] Frontispiece: (My Shirt is Alive With) Several Clambering Doggies of Inappropriate Hue. Acrylic on board 60cm X 90cm Richard Hickman (1994) [From the collection of Susan Hickman Pinder] iii An art miscellany for the weary & perplex’d First published by NSEAD [www.nsead.org] ISBN: 978-0-904684-34-6 This edition published by Barking Publications, Midsummer Common, Cambridge. © Richard Hickman 2018 iv Contents Acknowledgements vi Preface vii Part I: Hickman's Art Miscellany Introductory notes on the nature of art 1 DAMP HEMs 3 Notes on some styles of western art 8 Glossary of art-related terms 22 Money issues 45 Miscellaneous art facts 48 Looking at art objects 53 Studio techniques, materials and media 55 Hints for the traveller 65 Colours, countries, cultures and contexts 67 Colours used by artists 75 Art movements and periods 91 Recent art movements 94 World cultures having distinctive art forms 96 List of metaphorical and descriptive words 106 Part II: Art, creativity, & education: a canine perspective Introductory remarks 114 The nature of art 112 Creativity (1) 117 Art and the arts 134 Education Issues pertaining to classification 140 Creativity (2) 144 Culture 149 Selected aphorisms from Max 'the visionary' dog 156 Part III: Concluding observations The tail end 157 Bibliography 159 Appendix I 164 v Illustrations Cover: Poker Game, Cassius Coolidge (1894) Frontispiece: (My Shirt is Alive With) Several Clambering Doggies of Inappropriate Hue, Richard Hickman (1994) ii The Haywain, John Constable (1821) 3 Vagveg, Tabitha Millett (2013) 5 Series 1, No. -

Art 5307 Contemporary Art History Professor Carol H. Fairlie

Art 5307 Contemporary Art History Professor Carol H. Fairlie OFFICE: 09--Fine Arts Building OFFICE HOURS: By appointment CELL PHONE: 432-294-1313 OFFICE PHONE: 837-8258 Email: [email protected] Office hours are available by text at 432-294-1313 after 10 am until midnight. I am usually in the classroom 1-2 pm. POST COVID COURSE INFO If you are not feeling well, please do the work, which will be online. Due to the impact of the virus, if you have not received a vaccine, your mask must be worn at all times, and social separation of 6 feet must be maintained. Failure to do this will result in you being asked to leave the classroom. REQUIRED TEXT: Try interlibrary loans, digital or used copies. The Western Humanities, sixth edition, by Roy T. Matthews and F. Dewitt Platt (2008-08-01) Art Today: Edward Lucie-Smith ISBN-10: 0714818062 Themes of Contemporary Art after 1980: Jean Robertson ISBN-10: 0190276622 After Modern Art: 1945-2017 (Oxford History of Art), 2nd Edition: David Hopkins ISBN-10: 0199218455 OBJECTIVE: To fully cover the concepts and movements of Art in the last twenty years, one must have a firm understanding of History for the past 100 years. The class will be required to do a lot of reading, note-taking, research and analysis. The textbooks and DVDs will be relied upon to give us a basis for the class. Aesthetics, art history, exhibitions, artists, and art criticism will be relied upon to conduct the last few days of the class. The emphasis of the class will be to look at Art of the 1980s through the Present. -

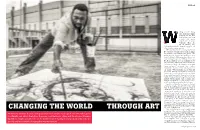

Changing the World Through Art Superblur Art Movement

Big Read Walking into the Living Arts Emporium (LAE) workshop at the Ellis Park Stadium, you know instantly that you’ve stepped into an artists’ workshop. There are life-size paintings lying haphazardly around the hall, works in progress, and some complete masterpieces too. LAE was founded over a year ago by Conrad Bo to promote contemporary art made in Africa by promising and talented young artists. “The venture was not planned. I was working at a run-down building in Maboneng when some artists came looking for space and LAE was born,” says Bo. Bo also happens to be the godfather of two art movements: Superstroke and Superblur. In the former, paintings and sculptures are executed using expressive, sometimes even violent, brushstrokes on parts of the painting and on the surface. “When mediums such as pens and pencils are utilised, they should be used in a manner that’s overly expressive to be considered Superstroke,” explains Bo. There are barcode lines visible throughout the paintings that employ this method. Subject matters such as Africa, light, dark, life and death are encouraged, and collages, stencils and calligraphy can be used for impact. Superblur, on the other hand, refers to a method of creating art using the definition of the word ‘blur’. It focuses largely on making the object or classification of the art unclear or less distinct, and pays great attention to elements that cannot be heard or seen clearly. “In these techniques the abstract barcode is the symbol of the Superblur Art Movement, just like the cube can be seen as the symbol for the Cubism Art Movement,” explains Bo. -

Autumn / Winter 15-16 Forecast

Lifestyle AUTUMN / WINTER 15-16 FORECAST Taking pride in the idea of creation and innovation, the winter of 2015 will be a season of global celebration. Graduating from a hard-hitting recession to a summer which believed in exploration and restoring what felt lost, we will evolve into a season of total rediscovery. The community will find new methods of making a product, a notch higher than cheap industrialisation, based on the mind and hand, bringing into the picture skill, people and quality. Craft and technology together will be well-tried and well-accepted notions that will form a new foundation of our day-to-day life. The ‘cloud’ age will connect the world in ways which are exhilarating to imagine and the planet will become smaller than ever. Long-gone ideas will reemerge to form new ones, and conversing with the green of nature will be of utmost importance. In all, from a recent time where we were discovering different media of art and using them only as an expression to be noticed, we will evolve into a time where society would be investing in such ideas, and the price will be paid for by the ethereal value of a product. Colours: Colour palettes are derived from lifestyles, bringing out the feeling of preferences and choices made. Yarns: Fibres intertwine together, creating yarns inspired by nature, science, culture and technology. Fabrics: Cultural influences, technical finishes, natural rough surfaces and traditional knitting techniques together bring out the fabric themes. -

November 1999

Volume 23, Number 11. Cover photo by Paul La Raia. Features: BRANFORD MARSALIS'S JEFF "TAIN" WATTS: A new solo disc proves this amazing skinsman has a world of ideas up his sleeve. By Ken Micallef. MD's GUIDE TO DRUMSET TUNING: It's a lot more important than you think, and with our pro tips, probably a lot easier, too! By Rich Watson. SANTANA'S RODNEY HOLMES: Even Santana's rhythmic wonderland can't contain this monster. By T. Bruce Wittet. SUICIDAL TENDENCIES' BROOKS WACKERMAN: What's a nice guy like you doing in a band like this? By Matt Peiken. UPDATE: Tom Petty's Steve Ferrone, Ricky Martin's Jonathan Joseph, Ed Toth of Vertical Horizon, Steven Drozd of The Flaming Lips, Brett Michaels' Brett Chassen. REFLECTIONS: VINNIE COLAIUTA: The distinguished Mr. C shares some deep thoughts on drumming's giants. By Robyn Flans. ARTIST ON TRACK: ART BLAKEY, PART 2: Wherein the jazz giant draws the maps so many would follow. By Mark Griffith. UP & COMING: BYRON McMACKIN OF PENNYWISE: Pushin' punk's parameters. By Matt Schild. Education: STRICTLY TECHNIQUE: Musical Accents, Part 3, by Ted Bonar and Ed Breckenfeld. ROCK 'N' JAZZ CLINIC: Implied Metric Modulation, by Steve Smith. ROCK PERSPECTIVES: The Double Bass Challenge, by Ken Vogel. PERCUSSION TODAY: Street Beats, by Robin Tolleson. THE MUSICAL DRUMMER: The Benefits Of Learning A Second Instrument, by Ted Bonar. Equipment: NEW AND NOTABLE. PRODUCT CLOSE-UP. Departments: AN EDITOR'S OVERVIEW: Learning To Schmooze, by William F. Miller. READERS' PLATFORM. ASK A PRO: Graham Lear, Vinnie Paul, and Ian Paice. IT'S QUESTIONABLE. ON THE MOVE. CRITIQUE. INDUSTRY HAPPENINGS: New Orleans Jazz & Heritage Festival and more, including Taking The Stage. DRUM MARKET: Including Vintage Showcase. INSIDE TRACK: Dave Mattacks, by T. -

The Concept of Technologized Art: Object and Subject Communication in Interactive Installations. Theoretical Part of a Master's Thesis

VILNIUS ACADEMY OF ARTS (VILNIAUS DAILĖS AKADEMIJA), FACULTY OF POSTGRADUATE STUDIES, DEPARTMENT OF CONTEMPORARY SCULPTURE. The Concept of Technologized Art: Object and Subject Communication in Interactive Installations. Theoretical part of a master's thesis. Student: Jokūbas Lukenskas, contemporary sculpture, second-year master's student. Supervisor of the theoretical work: Dr. Vytautas Michelkevičius. Supervisor of the practical work: Assoc. Prof. Antanas Šnaras. Vilnius, 2013. Contents: Introduction. Definitions of terms. I. Interaction of object and subject: I.1. The concept of interactivity; I.2. Interpassivity; I.3. Inversion of object and subject. II. Explorations of reality in art, perception of space: II.1. The Cubists' "simulations"; II.2. Ambient art; II.3. Video art. III. Technology, media and art: III.1. The technological and communicational concept of media; III.2. The concept of technological art. IV. Examples of artworks: IV.1. Interactive technologized installations in the global context; IV.2. Interactive technologized installations in the Lithuanian context. Conclusions. List of illustrations. References. -

Visual Art, Children's Illustration

CONTEMPORARY VISUAL ART, ILLUSTRATION AND CHILDREN'S LITERATURE: MAKING CONNECTIONS BETWEEN CREATIVE WORLDS Leonardo Charréu1 Abstract The following text starts from two basic premises normally accepted by the scientific community that studies, within the vast field of the educational sciences, the area concerned with early childhood education. The first premise is that quality children's literature, experienced and provided at the very beginning of any educational process or cycle, is of crucial importance in forming, and attracting to reading, an imagined future reader further down the line, in adulthood. Reading is indeed of fundamental importance to the child's subjective constitution. The second premise assumes that a quality visual image, conveyed through children's illustration, is equally important in amplifying the effects and impacts of the written content and of the "story told" on its young readers. Finally, another no less important idea, which is, so to speak, the thesis defended in this text, is that contemporary visual art, a cultural phenomenon that undoubtedly involves much hybridity, diversity and complexity, offers many aesthetic solutions that influence an appreciable number of children's book illustrators. In some way, children's visual contact with visual forms that have a performative and experimental dimension, partly carried over from current visual art proposals, is also a parallel form of visual aesthetic education. Thus children do not learn only from the textual; they also learn from the visual, beginning to prepare themselves for the complex and vibrant visualities they are confronted with in their daily lives. -

Redalyc. Contemporary Visual Art, Illustration

Revista Digital do LAV E-ISSN: 1983-7348 [email protected] Universidade Federal de Santa Maria, Brasil. Charréu, Leonardo. ARTE VISUAL CONTEMPORÂNEA, ILUSTRAÇÃO E LITERATURA PARA A INFÂNCIA: FAZENDO CONEXÕES ENTRE MUNDOS CRIATIVOS. Revista Digital do LAV, vol. 5, núm. 9, septiembre-, 2012, pp. 1-20. Universidade Federal de Santa Maria, Santa Maria, Brasil. Available at: http://www.redalyc.org/articulo.oa?id=337027360003 Redalyc: Network of Scientific Journals of Latin America, the Caribbean, Spain and Portugal. Non-profit academic project developed under the Open Access initiative. CONTEMPORARY VISUAL ART, ILLUSTRATION AND CHILDREN'S LITERATURE: MAKING CONNECTIONS BETWEEN CREATIVE WORLDS Leonardo Charréu1 Abstract The following text starts from two basic premises normally accepted by the scientific community that studies, within the vast field of the educational sciences, the area concerned with early childhood education. The first premise is that quality children's literature, experienced and provided at the very beginning of any educational process or cycle, is of crucial importance in forming, and attracting to reading, an imagined future reader further down the line, in adulthood. Reading is indeed of fundamental importance to the child's subjective constitution. The second premise assumes that a quality visual image, conveyed through children's illustration, is equally important in amplifying the effects and impacts of the written content and of the "story told" on its young readers.