Gradual Program Verification

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


(1) Western Culture Has Roots in Ancient and ___

Chapter 3: Roman Liturgy and Chant
1. (47) Define church calendar. Cycle of events, saints for the entire year
2. TQ: What is the beginning of the church year? Advent (four Sundays before Christmas) [Lent begins on Ash Wednesday, 46 days before Easter]
3. Most important in the Roman church is the ______. Mass
4. TQ: What does Roman church mean? Catholic Church
5. How often is it performed? Daily
6. (48) Music in Context. When would a Gloria be omitted? Advent, Lent, [Requiem]
7. Latin is the language of the Church. The Kyrie is _____. Greek
8. When would a Tract be performed? Lent
16. (50) If a 14th-century composer wrote a mass, what would be the names of the movements? TQ: Why? Kyrie, Gloria, Credo, Sanctus, Agnus Dei. The text remains the same for each day throughout the year.
17. (51) What is the collective title of the eight church services different than the Mass? Offices [Hours or Canonical Hours or Divine Offices]
18. Name them in order and their approximate time. (See Figure 3.3) Matins, before sunrise; Lauds, sunrise; Prime, 6 am; Terce, 9 am; Sext, noon; Nones, 3 pm; Vespers, sunset; Compline, after Vespers
19. TQ: What do you suppose the function of an antiphon is? To frame the psalm
20. What is the proper term for a biblical reading? What is a responsory? Lesson; musical response to a Biblical reading
21. What is a canticle? Poetic passage from Bible other than the Psalms
22. How long does it take to cycle through the 150 Psalms in the Offices? Less than a week
23.

Introitus: the Entrance Chant of the Mass in the Roman Rite

The Introit (introitus in Latin) is the proper chant which begins the Roman rite Mass. There is a unique introit with its own proper text for each Sunday and feast day of the Roman liturgy. The introit is essentially an antiphon or refrain sung by a choir, with psalm verses sung by one or more cantors or by the entire choir. Like all Gregorian chant, the introit is in Latin, sung in unison, and with texts from the Bible, predominantly from the Psalter. The introits are found in the chant book with all the Mass propers, the Graduale Romanum, which was published in 1974 for the liturgy as reformed by the Second Vatican Council. (Nearly all the introit chants are in the same place as before the reform.) Some other chant genres (e.g. the gradual) are formulaic, but the introits are not. Rather, each introit antiphon is a unique composition with its own character. Tradition has claimed that Pope St. Gregory the Great (d. 604) ordered and arranged all the chant propers, and Gregorian chant takes its very name from the great pope. But it seems likely that the proper antiphons, including the introit, were selected and set a bit later in the seventh century under one of Gregory’s successors. They were sung for papal liturgies by the pope’s choir, which consisted of deacons and choirboys. The melodies were then spread from Rome northward throughout Europe by musical missionaries who knew all the melodies for the entire church year by heart.

A Comparison of the Two Forms of the Roman Rite

Mass Structures, Orientation, Language
The purpose of this presentation is to prepare you for what will very likely be your first Traditional Latin Mass (TLM). This is officially named “The Extraordinary Form of the Roman Rite.” We will try to do that by comparing it to what you already know, the Novus Ordo Missae (NOM). This is officially named “The Ordinary Form of the Roman Rite.” In “Mass Structures” we will look at differences in form. While the TLM really has only one structure, the NOM has many options. As we shall see, it has so many, in fact, that it is virtually impossible for the person in the pew to determine whether the priest actually performs one of the many variations according to the rubrics (rules) for celebrating the NOM. Then, we will briefly examine the two most obvious differences in the performance of the Mass: the orientation of the priest (and people) and the language used. The orientation of the priest in the TLM is towards the altar. In this position, he is facing the same direction as the people, liturgical “east,” and, in a traditional church, they are both looking at the tabernacle and/or crucifix in the center of the altar. The language of the TLM is, of course, Latin. It has been Latin since before the year 400. The NOM was written in Latin but is usually performed in the language of the immediate location, the vernacular.
Mass Structure: Novus Ordo Missae
Greeting: A,B,C
Penitential Rite: A,B,C, or Baptism Renewal
Liturgy of the Word: Year 1,2,3
Eucharistic Prayer I: A,B,C,D
Eucharistic Prayer II: A,B,C,D
Eucharistic Prayer III: A,B,C,D
Eucharistic Prayer IV: A,B,C,D
Concluding Rite: A,B,C
Dismissal: A,B,C
3 x 4 x 3 x 16 x 3 x 3 = 5184 variations (not counting omissions), or ~ 100 years of Sundays.
This is the Mass that most of you attend.

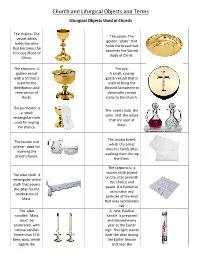

Church and Liturgical Objects and Terms

Liturgical Objects Used in Church
The chalice: The vessel which holds the wine that becomes the Precious Blood of Christ.
The paten: The golden “plate” that holds the bread that becomes the Sacred Body of Christ.
The ciborium: A golden vessel with a lid that is used for the distribution and reservation of Hosts.
The pyx: A small, closing golden vessel that is used to bring the Blessed Sacrament to those who cannot come to the church.
The purificator is a small rectangular cloth used for wiping the chalice.
The cruets hold the wine and the water that are used at Mass.
The lavabo towel, with which the priest dries his hands after washing them during the Mass.
The lavabo and pitcher: used for washing the priest's hands.
The corporal is a square cloth placed on the altar beneath the chalice and paten. It is folded so as to catch any particles of the Host that may accidentally fall.
The altar cloth: A rectangular white cloth that covers the altar for the celebration of Mass.
The altar candles: Mass must be celebrated with natural candles (more than 51% beeswax), which signify the presence of Christ, our light.
A new Paschal candle is prepared and blessed every year at the Easter Vigil. This light stands near the altar during the Easter Season and near the baptismal font during the rest of the year. It may also stand near the casket during the funeral rites.
The sanctuary lamp: A candle, often red, that burns near the tabernacle when the Blessed Sacrament is present there.
Bells, rung during the calling down of the Holy Spirit to consecrate the bread and wine.

Parts of the Tridentine Mass

INTRODUCTORY RITES
PRAYERS AT THE FOOT OF THE ALTAR: Priest and ministers pray that God forgive his and the people's sins.
KYRIE: All ask the Lord to have mercy.
GLORIA: All praise the Glory of God.
COLLECT: Priest's prayer about the theme of today's Mass.
MASS OF CATECHUMENS
EPISTLE: New Testament reading by one of the ministers.
GRADUAL: All praise God's Word.
GOSPEL: Priest reads from one of the 4 Gospels.
SERMON: The Priest now tells the people, in their language, what the Church wants them to understand from today's readings, or explains a particular Church teaching/rule.
CREED: All profess their faith in the Trinity, the Catholic Church, baptism and resurrection of the dead.
LITURGY OF THE EUCHARIST
THE OFFERTORY: The bread and wine are brought to the Altar and prepared for consecration.
THE RITE OF CONSECRATION
The Preface: Today's solemn intro to the Canon, followed by the Sanctus.
The Canon: The fixed prayers/actions for the consecration of the bread/wine.
Before the Consecration: The Church, gathered around the pope and in union with the saints, presents the offerings to God and prays that they are accepted to become the Body/Blood of Christ.
The Consecration: “This is not a prayer: it is the recital of what took place at the Last Supper: the priest does again what the Lord did, speaks the Lord's own words.”
After the Consecration: The Church offers Christ's own sacrifice anew to God, to bring the Church together in peace, save those in Purgatory and “us sinners”, so that Christ may give honor to the Father.

Vestments and Sacred Vessels Used at Mass

Amice (optional): This is a rectangular piece of cloth with two long ribbons attached to the top corners. The priest puts it over his shoulders, tucking it in around the neck to hide his cassock and collar. It is worn whenever the alb does not completely cover the ordinary clothing at the neck (GI 297). It is then tied around the waist. It symbolises a helmet of salvation and a sign of resistance against temptation.
Alb: This long, white vestment reaches to the ankles and is worn when celebrating Mass. Its name comes from the Latin ‘albus’ meaning ‘white.’ This garment symbolises purity of heart. Worn by priest, deacon and in many places by the altar servers.
Cincture (optional): This is a long cord used for fastening some albs at the waist. It is worn over the alb by those who wear an alb. It is a symbol of chastity. It is usually white in colour.
Stole: A stole is a long cloth, often ornately decorated, of the same colour and style as the chasuble. A stole traditionally stands for the power of the priesthood and symbolises obedience. The priest wears it around the neck, letting it hang down the front. A deacon wears it over his right shoulder and fastened at his left side like a sash.
Chasuble: The chasuble is the sleeveless outer vestment, slipped over the head, hanging down from the shoulders and covering the stole and alb. It is the proper Mass vestment of the priest and its colour varies according to the feast.

English-Latin Missal

International Union of Guides and Scouts of Europe
© www.romanliturgy.org, 2018: for the concept of booklets
© apud Administrationem Patrimonii Sedis Apostolicæ in Civitate Vaticana, 2002: pro textibus lingua latina
© International Commission on English in the Liturgy Corporation, 2010: all rights reserved for the English texts
The Introductory Rites
When the people are gathered, the Priest approaches the altar with the ministers while the Entrance Chant is sung.
Entrance Antiphon: Monday, January 29 p. 26. Tuesday, January 30 p. 27. Wednesday, January 31 p. 30.
When he has arrived at the altar, after making a profound bow with the ministers, the Priest venerates the altar with a kiss and, if appropriate, incenses the cross and the altar. Then, with the ministers, he goes to the chair. When the Entrance Chant is concluded, the Priest and the faithful, standing, sign themselves with the Sign of the Cross, while the Priest, facing the people, says:
In nómine Patris, et Fílii, et Spíritus Sancti.
In the name of the Father, and of the Son, and of the Holy Spirit.
The people reply:
Amen.
Amen.
Then the Priest, extending his hands, greets the people, saying:
Grátia Dómini nostri Iesu Christi, et cáritas Dei, et communicátio Sancti Spíritus sit cum ómnibus vobis.
The grace of our Lord Jesus Christ, and the love of God, and the communion of the Holy Spirit, be with you all.
Or:
Grátia vobis et pax a Deo Patre nostro et Dómino Iesu Christo.
Grace to you and peace from God our Father and the Lord Jesus Christ.

Assisting at the Parish Eucharist

The Parish of Handsworth: The Church of St Mary: Assisting at the Parish Eucharist 1st Lesson Sunday 6th June Celebrant: The Rector Gen 3:8-15 N Gogna Entrance Hymn: Let us build a house (all are welcome) Reader: 2nd Lesson 2 Cor 4: 1st After Trinity Liturgical Deacon: A Treasure D Arnold Gradual Hymn: Jesus, where’er thy people meet Reader: 13-5:1 Preacher: The Rector Intercessor: S Taylor Offertory Hymn: There’s a wideness in God’s mercy Colour: Green MC (Server): G Walters Offertory Procession: N/A Communion Hymn: Amazing grace Thurifer: N/A N/A Final Hymn: He who would valiant be Mass Setting: Acolytes: N/A Chalice: N/A St Thomas (Thorne) Sidespersons: 8.00am D Burns 11.00am R & C Paton-Devine Live-Stream: A Lubin & C Perry 1st Lesson Ezek 17: Sunday 13th June Celebrant: The Rector M Bradley Entrance Hymn: The Church’s one foundation Reader: 22-end 2nd Lesson 2nd After Trinity Liturgical Deacon: P Stephen 2 Cor 5:6-17 A Cash Gradual Hymn: Sweet is the work my God and king Reader: Preacher: P Stephen Intercessor: R Cooper Offertory Hymn: God is working his purpose out Colour: Green MC (Server): G Walters Offertory Procession: N/A Communion Hymn: The king of love my shepherd is Thurifer: N/A N/A Final Hymn: The Kingdom of God is justice… Mass Setting: Acolytes: N/A Chalice: N/A St Thomas (Thorne) Sidespersons: 8.00am E Simkin 11.00am L Colwill & R Hollins Live-Stream: W & N Senteza Churchwarden: Keith Hemmings & Doreen Hemmings Rector: Fr Bob Stephen Organist: James Jarvis The Parish of Handsworth: The Church of St Mary: Assisting at -

Preface Dialogue and the Eucharistic Prayer Kristopher W

Kristopher W. Seaman
As Roman Catholics, we tend to assume that the center and climax of the Eucharistic liturgy, the Mass, is the Communion procession. However, according to the General Instruction of the Roman Missal, the Eucharistic Prayer is “center and high point” of the Mass (GIRM, 78). It is from this very prayer that we have one of the terms for which we call Mass: Eucharist. Eucharist means “thanksgiving.” Notice thanksgiving is a verb: something we do. Eucharist is an activity, it is a way of life, a way of doing what Christian disciples do: give thanks. Why thanksgiving? As human persons, we can do nothing, but thank the Triune God for all of his works in and through our lives. Thanksgiving is a response to what God does for us, to us, and …
The first and third lines of the people have changed. Like other areas in the liturgy, “And also with you” has been changed to the literal translation of the official Latin edition of The Roman Missal: “And with your spirit.” The same principle applies to “It is right and just.”
Some other changes in the text of Eucharistic Prayer include the following:
Sanctus: The first line now reads: “Holy, Holy, Holy Lord God of hosts” rather than “of power and might.” God is Lord of hosts, not just the powerful and mighty, but also the last and least.
Institution Narrative: The Institution Narrative is the words of Jesus used at the last Supper over the bread and wine.

Traditional Latin Mass (TLM), Otherwise Known As the Extraordinary Form, Can Seem Confusing, Uncomfortable, and Even Off-Putting to Some

For many who have grown up in the years following the liturgical changes that followed the Second Vatican Council, the Traditional Latin Mass (TLM), otherwise known as the Extraordinary Form, can seem confusing, uncomfortable, and even off-putting. What I hope to do in a series of short columns in the bulletin is to explain the mass, step by step, so that, if nothing else, our knowledge of the other half of the Roman Rite of which we are all a part will increase. Also, it must be stated clearly that I in no way place the Extraordinary Form above the Ordinary or vice versa. Both forms of the Roman Rite are valid, beautiful celebrations of the liturgy and as such deserve the support and understanding of all who practice the Roman Rite of the Catholic Church. Before I begin with the actual parts of the mass, there are a few overarching details to cover. The priest faces the same direction as the people when offering the mass because he is offering the sacrifice on behalf of the congregation. He, as the shepherd, standing in persona Christi (in the person of Christ), leads the congregation towards God and towards heaven. Also, it’s important to note that a vast majority of what is said by the priest is directed towards God, not towards us. When the priest does address us, he turns around to face us. Another thing to point out is that the responses are always done by the server. If there is no server, the priest will say the responses himself.

Training Your Chalice Assistants Liturgy File 506

Authorisation
‘At present the diocesan bishop may give permission to named lay people to assist in the distribution of the sacrament in both kinds in the course of a service of Holy Communion in church. In order for this to happen, request is made to the suffragan bishop by the incumbent supported by a vote of the PCC.’ Ad Clerum 8
This permission does not extend to the administration of the elements by lay persons to those who are sick or housebound, for which further special permission from the Bishop is needed. It follows that selection of such ministers (subsequently termed ‘assistants’) should rest both on the actual need within the church and on the parish priest and PCC being content regarding their moral stature and good standing within the Christian Community. It should be emphasised to all that episcopal permission is for a three-year period only, after which it lapses. This provides a regular opportunity for review by the incumbent and PCC.
Theological training
Unlike some other English dioceses which have a formal course for candidates for this ministry, in the Diocese of St Albans training is done entirely in the local church. It is preferable that some insight is given into the history and development of the Eucharist in the Church of England, and that as a result candidates will have some awareness of the breadth of Eucharistic practice, both of administration and reception of communion, in traditions other than their own. This is especially important when ministering to visitors from other churches.

Gradual (Franciscan Use) in Latin with Some Portuguese, Illuminated Manuscript on Parchment Portugal, C

Gradual (Franciscan Use) In Latin with some Portuguese, illuminated manuscript on parchment Portugal, c. 1575-1600 209 pages, on parchment, preceded by a paper flyleaf, missing a leaf at the end and perhaps another in the core of the manuscript, mostly in quires of 8 (collation: i4, ii8, iii8, iv8, v8, vi8, vii7 (missing 8; likely a cancelled blank as text and liturgical sequence seems uninterrupted [see pp.102-103]), viii8, ix8, x4, xi8, xii8, xiii8, xiv7 (missing 7, with 8 a blank leaf)), written in a rounded gothic liturgical bookhand on up to 16 lines per page in brown to darker brown ink, ruled in plummet (justification 430 x 285 mm), no catchwords, a few quire signatures, rubrics in bright red, pitches marked in red over words, some corrections and contemporary annotations in the margin; later annotations in the margins (in Portuguese), elaborate calligraphic pen flourishing swirling in the margins in purple, dark blue and red ink, larger cadels in black ink with elaborate calligraphic penwork (e.g. ff. 197-198), a number of initials traced in blue or red (2- to 5-lines high) enhanced with calligraphic penwork and sometimes with floral motifs, fruits, faces, putti or satyrs (e.g. pp. 82-83; p. 151), a few initials pasted in on small paper strips (cadels on decorated grounds, see f. 171), numerous decorated initials in colors (and some liquid gold) and acanthus leaves of varying size (1-line high, larger 3- line high) (e.g. ff. 9-10 et passim), 13 historiated initials with elaborate calligraphic penwork, often with a variety of birds, putti, insects, bestiary, fruit etc., large illuminated bracket border of strewn illusionistic flowers on a yellow ground (inspired from the Flemish illusionistic borders) with a purple decorated initial with similar illusionistic floral motifs (f.