Advances in Artificial Intelligence Require Progress Across All of Computer Science

Recommended publications

-

FUNDAMENTALS of COMPUTING (2019-20) COURSE CODE: 5023 502800CH (Grade 7 for ½ High School Credit) 502900CH (Grade 8 for ½ High School Credit)

EXPLORING COMPUTER SCIENCE NEW NAME: FUNDAMENTALS OF COMPUTING (2019-20) COURSE CODE: 5023 502800CH (grade 7 for ½ high school credit) 502900CH (grade 8 for ½ high school credit) COURSE DESCRIPTION: Fundamentals of Computing is designed to introduce students to the field of computer science through an exploration of engaging and accessible topics. Through creativity and innovation, students will use critical thinking and problem solving skills to implement projects that are relevant to students’ lives. They will create a variety of computing artifacts while collaborating in teams. Students will gain a fundamental understanding of the history and operation of computers, programming, and web design. Students will also be introduced to computing careers and will examine societal and ethical issues of computing. OBJECTIVE: Given the necessary equipment, software, supplies, and facilities, the student will be able to successfully complete the following core standards for courses that grant one unit of credit. RECOMMENDED GRADE LEVELS: 9-12 (Preference 9-10) COURSE CREDIT: 1 unit (120 hours) COMPUTER REQUIREMENTS: One computer per student with Internet access RESOURCES: See attached Resource List A. SAFETY Effective professionals know the academic subject matter, including safety as required for proficiency within their area. They will use this knowledge as needed in their role. The following accountability criteria are considered essential for students in any program of study. 1. Review school safety policies and procedures. 2. Review classroom safety rules and procedures. 3. Review safety procedures for using equipment in the classroom. 4. Identify major causes of work-related accidents in office environments. 5. Demonstrate safety skills in an office/work environment. -

Supercomputing in Plain English: Overview

Supercomputing in Plain English: An Introduction to High Performance Computing. Part I: Overview: What the Heck is Supercomputing? Henry Neeman, Director, OU Supercomputing Center for Education & Research. Goals of These Workshops: To introduce undergrads, grads, staff and faculty to supercomputing issues. To provide a common language for discussing supercomputing issues when we meet to work on your research. NOT: to teach everything you need to know about supercomputing; that can’t be done in a handful of hourlong workshops! What is Supercomputing? Supercomputing is the biggest, fastest computing right this minute. Likewise, a supercomputer is the biggest, fastest computer right this minute. So, the definition of supercomputing is constantly changing. Rule of Thumb: a supercomputer is 100 to 10,000 times as powerful as a PC. Jargon: supercomputing is also called High Performance Computing (HPC). What is Supercomputing About? Size and speed. Size: many problems that are interesting to scientists and engineers can’t fit on a PC, usually because they need more than 2 GB of RAM, or more than 60 GB of hard disk. Speed: many problems that are interesting to scientists and engineers would take a very, very long time to run on a PC: months or even years. But a problem that would take a month on a PC might take only a few hours on a supercomputer. -

Computer Hardware Architecture Lecture 4

Computer Hardware Architecture, Lecture 4. Manfred Liebmann, Technische Universität München, Chair of Optimal Control, Center for Mathematical Sciences, M17, [email protected]. November 10, 2015. Reading List: • Pacheco, An Introduction to Parallel Programming (Chapters 1-2): introduction to computer hardware architecture from the parallel programming angle. • Hennessy-Patterson, Computer Architecture: A Quantitative Approach: reference book for computer hardware architecture. All books are available on the Moodle platform! UMA Architecture. Figure 1: A uniform memory access (UMA) multicore system. Access time to main memory is the same for all cores in the system! NUMA Architecture. Figure 2: A nonuniform memory access (NUMA) multicore system. Access times to main memory differ from core to core depending on the proximity of the main memory. This architecture is often used in dual and quad socket servers, due to improved memory bandwidth. Cache Coherence. Figure 3: A shared memory system with two cores and two caches. What happens if the same data element z1 is manipulated in two different caches? The hardware enforces cache coherence, i.e. consistency between the caches. Expensive! False Sharing. The cache coherence protocol works at the granularity of a cache line. If two threads manipulate different elements within a single cache line, the cache coherence protocol is activated to ensure consistency, even if every thread is only manipulating its own data. -
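The false-sharing condition described in the excerpt above comes down to index arithmetic: two elements collide when their byte addresses fall in the same cache line. The sketch below is only an illustration under stated assumptions that are not in the source: a 64-byte cache line, 8-byte elements, and an array that starts on a line boundary. The function names are hypothetical.

```python
def cache_line_of(index, element_size=8, line_size=64, base_address=0):
    """Return the cache-line number holding array element `index`.

    Assumed values (not from the source): 8-byte elements, 64-byte lines,
    and an array whose first element sits at `base_address`.
    """
    return (base_address + index * element_size) // line_size


def share_line(i, j, **layout):
    """True if elements i and j live in the same cache line."""
    return cache_line_of(i, **layout) == cache_line_of(j, **layout)


def padded_stride(element_size=8, line_size=64):
    """Smallest index stride that puts per-thread slots in different lines."""
    return -(-line_size // element_size)  # ceiling division


if __name__ == "__main__":
    # Threads 0 and 1 updating neighbouring elements touch the same line,
    # so the coherence protocol is triggered although the data is disjoint.
    print(share_line(0, 1))
    # Spacing the per-thread slots by a full line avoids the collision.
    print(share_line(0, padded_stride()))
```

Padding per-thread data to a full line trades memory for fewer coherence messages; the right line size depends on the actual hardware.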

Florida Course Code Directory Computer Science Course Information 2019-2020

FLORIDA COURSE CODE DIRECTORY: COMPUTER SCIENCE COURSE INFORMATION 2019-2020

Section 1007.2616, Florida Statutes, was amended by the Florida Legislature to include the definition of computer science and a requirement that computer science courses be identified in the Course Code Directory published on the Department of Education’s website.

DEFINITION: The study of computers and algorithmic processes, including their principles, hardware and software designs, applications, and their impact on society, and includes computer coding and computer programming.

SECONDARY COMPUTER SCIENCE COURSES: Middle and high schools in each district, including combination schools in which any of grades 6-12 are taught, must provide an opportunity for students to enroll in a computer science course. If a school district does not offer an identified computer science course, the district must provide students access to the course through the Florida Virtual School (FLVS) or through other means.

COURSE NUMBER   COURSE TITLE
0200000   M/J Computer Science Discoveries
0200010   M/J Computer Science Discoveries 1
0200020   M/J Computer Science Discoveries 2
0200305   Computer Science Discoveries
0200315   Computer Science Principles
0200320   AP Computer Science A
0200325   AP Computer Science A Innovation
0200335   AP Computer Science Principles
0200435   PRE-AICE Computer Studies IGCSE Level
0200480   AICE Computer Science 1 AS Level
0200485   AICE Computer Science 2 A Level
0200490   AICE Information Technology 1 AS Level
0200495   AICE Information Technology 2 A Level
0200800   IB Computer -

Safety and Security Challenge

SAFETY AND SECURITY CHALLENGE: TOP SUPERCOMPUTERS IN THE WORLD - FEATURING TWO of DOE’S!! Summary: The U.S. Department of Energy (DOE) plays a very special role in keeping you safe. DOE has two supercomputers in the top ten supercomputers in the whole world. Titan is the name of the supercomputer at the Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tennessee. Sequoia is the name of the supercomputer at Lawrence Livermore National Laboratory (LLNL) in Livermore, California. How do supercomputers keep us safe and what makes them in the Top Ten in the world? In fields where scientists deal with issues from disaster relief to the electric grid, simulations provide real-time situational awareness to inform decisions. DOE supercomputers have helped the Federal Bureau of Investigation find criminals, and the Department of Defense assess terrorist threats. Currently, ORNL is building a computing infrastructure to help the Centers for Medicare and Medicaid Services combat fraud. An important focus lab-wide is managing the tsunamis of data generated by supercomputers and facilities like ORNL’s Spallation Neutron Source. In terms of national security, ORNL plays an important role in national and global security due to its expertise in advanced materials, nuclear science, supercomputing and other scientific specialties. Discovery and innovation in these areas are essential for protecting US citizens and advancing national and global security priorities. [Photo caption: Titan Supercomputer at Oak Ridge National Laboratory] Background: ORNL is using computing to tackle national challenges such as safe nuclear energy systems and running simulations for lower costs for vehicle ... Lawrence Livermore's Sequoia ranked No. -

Embedded System Introduction.Pdf

Embedded System Introduction. ANDES Confidential, WWW.ANDESTECH.COM. Embedded System vs Desktop. Similarities: CPU, storage, I/O. Differences: Desktop: • can perform many kinds of functions • the operating system's management of system resources is more complex. Embedded system: • performs specific functions • the operating system's management of system resources is simpler. System Layer: Desktop computer: Application, Operating System, Firmware, Hardware. Complex embedded computer: Application, Operating System, Firmware, Hardware. Simple embedded computer: Firmware, Hardware. Hardware Architecture: Desktop Computer System Hardware Architecture: CPU, AGP Slot, North Bridge, Memory, PCI Interface, USB Interface, South Bridge, IDE Interface, System BIOS, ISA Interface, Super IO Port. Hardware Architecture: Embedded System Computer Hardware Architecture: ADC, CPU, SPI, Digital I/O, ROM, IIC, Host Computer, RAM, Timers, UART, Bus Interface, Memory, Network Interface, I/O. What is the Embedded System? Introduction: Challenges in embedded system design. Design methodologies. Embedded System? • An embedded system is a special-purpose computer system designed to perform one or a few dedicated functions • with real-time computing constraints • includes hardware, software and mechanical parts. Embedding a computer. Components of an embedded system. Characteristics: Low power. Closed operating environment. Cost sensitive. Components of an embedded -

14 Los Alamos National Laboratory

14 Los Alamos National Laboratory. Every year for the past 17 years, the director of Los Alamos National Laboratory has had a legally required task: write a letter, a personal assessment of Los Alamos-designed warheads and bombs in the U.S. nuclear stockpile. This letter is sent to the secretaries of Energy and Defense and to the Nuclear Weapons Council. Through them the letter goes to the president of the United States. The technical basis for the director’s assessment comes from the Laboratory’s ongoing execution of the nation’s Stockpile Stewardship Program; Los Alamos’ mission is to study its portion of the aging stockpile, find any problems, and address them. And for the past 17 years, the director’s letter has said, in effect, that any problems that have arisen in Los Alamos weapons are being addressed and resolved without the need for full-scale underground nuclear testing. When it comes to the Laboratory’s work on the annual assessment, the director’s letter is just the tip of the iceberg. ... alternative for assessing the safety, reliability, and performance of the stockpile: virtual-world simulations. I, Iceberg. Hollywood movies such as the Matrix series or I, Robot typically portray supercomputers as massive, room-filling machines that churn out answers to the most complex questions, all by themselves. In fact, like the director’s Annual Assessment Letter, supercomputers are themselves the tip of an iceberg. [Pull quote: Without people, a supercomputer would be no more than a humble jumble of wires, bits, and boxes.] Although these rows of huge machines are the most visible -

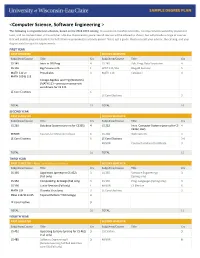

<Computer Science, Software Engineering >

<Computer Science, Software Engineering > The following is a hypothetical schedule, based on the 2018-2019 catalog. It assumes no transferred credits, no requirements waived by placement tests, and no courses taken in the summer. UW-Eau Claire cannot guarantee all courses will be offered as shown, but will provide a range of courses that will enable prepared students to fulfill their requirements in a timely period. This is just a guide. Please consult your advisor, the catalog, and your degree audit for specific requirements. FIRST YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 145 Intro to OO Prog 4 CS 245 Adv. Prog. Data Structures 4 CS 146 Big Picture in CS 1 WRIT 114/116 Blugold Seminar 5 MATH 112 or Precalculus 4 MATH 114 Calculus I 4 MATH 109 & 113 College Algebra and Trig (Winterim) (MATH 113 – prereq or concurrent enrollment for CS 245 LE Core Electives 6 LE Core Electives 3 TOTAL 15 TOTAL 16 SECOND YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 260 Database Systems (prereq for CS355) 4 CS 252 Intro. Computer Systems (prereq for CS 4 CS352, 452) MINOR Courses for Minor/Certificate 6 CS 268 Web Systems 3 LE Core Electives 6 LE Core Electives 3-6 MINOR Courses for Minor/Certificate 3 TOTAL 16 TOTAL 15 THIRD YEAR FIRST SEMESTER – Apply for Admission to Major SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 335 Algorithms (prereq for CS 452) 3 CS 355 Software Engineering I 3 (Fall only) (Spring only) CS 352 ComputeOrg. -

Advanced Engineering Mathematics

5 Linear Systems of ODEs

5.1 Systems of ODEs

In a sense, Chapter 5 equals Chapter 2 “plus” Chapter 3, in the sense that Chapter 5 combines use of matrix theory and ordinary differential equation (ODE) methods. When we have more than one linear ODE, results from matrix theory turn out to be useful.

Example 5.1 For the circuit shown in Figure 5.1, let $v(t)$ be the voltage drop across the capacitor and $I(t)$ be the loop current. The input $V(t)$ is a given function. Assume, as usual, that $L$, $R$, and $C$ are constants. Write down a system of ODEs in $\mathbb{R}^2$ that models this circuit.

Method: The series RLC circuit shown in Figure 5.1 is analogous to the DC series RLC circuit discussed near the end of Section 3.3. The first ODE models the voltage drop across the capacitor being $v(t) = \frac{1}{C}\,q(t)$, where $q(t)$ is the charge on the capacitor and $\dot{q}(t) = I(t)$. The second ODE in the system is Kirchhoff’s voltage law, $L\dot{I}(t) + RI(t) + v(t) = V(t)$, after dividing through by $L$. The system is

\[
\left\{
\begin{aligned}
\dot{v}(t) &= \frac{1}{C}\, I(t) \\
\dot{I}(t) &= \frac{1}{L}\bigl(V(t) - RI(t) - v(t)\bigr)
\end{aligned}
\right\}.
\tag{5.1}
\]

More generally, consider a system of two ODEs in unknowns $x_1(t)$, $x_2(t)$:

\[
\left\{
\begin{aligned}
\dot{x}_1(t) &= F_1\bigl(t, x_1(t), x_2(t)\bigr) \\
\dot{x}_2(t) &= F_2\bigl(t, x_1(t), x_2(t)\bigr)
\end{aligned}
\right\}.
\tag{5.2}
\]

A special case is

\[
\left\{
\begin{aligned}
\dot{x}_1(t) &= a_{11}(t)\,x_1 + a_{12}(t)\,x_2 + f_1(t) \\
\dot{x}_2(t) &= a_{21}(t)\,x_1 + a_{22}(t)\,x_2 + f_2(t)
\end{aligned}
\right\},
\tag{5.3}
\]

which is called a linear system. -
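To make the RLC system (5.1) concrete, it can be integrated numerically. The following is a minimal sketch, not taken from the excerpted textbook: it uses a classical fourth-order Runge-Kutta step, and the function names, default initial conditions, and the component values in the usage example are all assumptions chosen for illustration.

```python
def rlc_rhs(t, v, i, V, L, R, C):
    """Right-hand side of system (5.1): returns (dv/dt, dI/dt)."""
    dv = i / C                    # v' = I / C
    di = (V(t) - R * i - v) / L   # I' = (V - R*I - v) / L
    return dv, di


def rk4_step(t, v, i, h, V, L, R, C):
    """Advance (v, I) by one classical Runge-Kutta step of size h."""
    k1v, k1i = rlc_rhs(t, v, i, V, L, R, C)
    k2v, k2i = rlc_rhs(t + h / 2, v + h / 2 * k1v, i + h / 2 * k1i, V, L, R, C)
    k3v, k3i = rlc_rhs(t + h / 2, v + h / 2 * k2v, i + h / 2 * k2i, V, L, R, C)
    k4v, k4i = rlc_rhs(t + h, v + h * k3v, i + h * k3i, V, L, R, C)
    v_new = v + h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    i_new = i + h / 6 * (k1i + 2 * k2i + 2 * k3i + k4i)
    return v_new, i_new


def simulate(V, L, R, C, v0=0.0, i0=0.0, t_end=10.0, steps=10000):
    """Integrate (5.1) from t = 0 to t_end and return the final (v, I)."""
    h = t_end / steps
    t, v, i = 0.0, v0, i0
    for _ in range(steps):
        v, i = rk4_step(t, v, i, h, V, L, R, C)
        t += h
    return v, i


if __name__ == "__main__":
    # Hypothetical values: constant 1 V input, L = 1, R = 2, C = 1.
    v_final, i_final = simulate(lambda t: 1.0, L=1.0, R=2.0, C=1.0, t_end=20.0)
    print(v_final, i_final)
```

With a constant input, the capacitor voltage should settle toward the input voltage and the loop current toward zero, which gives a quick sanity check on the signs in (5.1).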

Hardware Components and Internal PC Connections

Technological University Dublin, ARROW@TU Dublin. Instructional Guides, School of Multidisciplinary Technologies, 2015. Computer Hardware: Hardware Components and Internal PC Connections. Jerome Casey, Technological University Dublin. Recommended Citation: Casey, J. (2015). Computer Hardware: Hardware Components and Internal PC Connections. Guide for undergraduate students. Technological University Dublin. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License. Higher Cert/Bachelor of Technology, DT036A Computer Systems. Computer Hardware: Hardware Components & Internal PC Connections: You might see a specification for a PC such as "containing an Intel i7 Hexa core processor - 3.46GHz, 3200MHz Bus, 384 KB L1 cache, 1.5MB L2 cache, 12 MB L3 cache, 32nm process technology; 4 gigabytes of RAM, ATX motherboard, Windows 7 Home Premium 64-bit operating system, an Intel® GMA HD graphics card, a 500 gigabytes SATA hard drive (5400rpm), and WiFi 802.11 bgn". This section aims to discuss a selection of hardware parts, outline common metrics and specifications -

Artificial Intelligence Program 1

Artificial Intelligence Program

Reid Simmons, Director of the BSAI program (NSH 3213)
Jean Harpley, Program Coordinator (NSH 1517)
www.cs.cmu.edu/bs-in-artificial-intelligence (http://www.cs.cmu.edu/bs-in-artificial-intelligence/)

Overview: Carnegie Mellon University has led the world in artificial intelligence education and innovation since the field was created. It's only natural, then, that the School of Computer Science would offer the nation's first bachelor's degree in Artificial Intelligence, which started in Fall 2018. The new BSAI program gives students the in-depth knowledge needed to transform large amounts of data into actionable decisions. The program and its curriculum focus on how complex inputs, such as vision, language and huge databases, can be used to make decisions or enhance human capabilities. The curriculum includes coursework in computer science, math, statistics, computational modeling, machine learning and symbolic computation. Because Carnegie Mellon is devoted to AI for social good, students will also take courses in ethics and social responsibility, with the option to participate in independent study projects that change the world for the better, in areas like healthcare, transportation and education.

Course listing (units in parentheses):
15-122 Principles of Imperative Computation (10) (students without credit or a waiver for 15-112, Fundamentals of Programming and Computer Science, must take 15-112 before 15-122)
15-150 Principles of Functional Programming (10)
15-210 Parallel and Sequential Data Structures and Algorithms (12)
15-213 Introduction to Computer Systems (12)
15-251 Great Ideas in Theoretical Computer Science (12)

Artificial Intelligence. All of the following three AI core courses:
07-180 Concepts in Artificial Intelligence (5)
15-281 Artificial Intelligence: Representation and Problem Solving (12)
10-315 Introduction to Machine Learning (SCS Majors) (12)
plus one of the following AI core courses:
16-385 Computer Vision (12) -

Rapid Mapping of Digital Integrated Circuit Logic Gates Via Multi-Spectral Backside Imaging

RAPID MAPPING OF DIGITAL INTEGRATED CIRCUIT LOGIC GATES VIA MULTI-SPECTRAL BACKSIDE IMAGING. RONEN ADATO^{1,4,*}, AYDAN UYAR^{1,4}, MAHMOUD ZANGENEH^{1,4}, BOYOU ZHOU^{1,4}, AJAY JOSHI^{1,4}, BENNETT GOLDBERG^{1,2,3,4} AND M. SELIM ÜNLÜ^{1,3,4}. Abstract. Modern semiconductor integrated circuits are increasingly fabricated at untrusted third party foundries. There now exist myriad security threats of malicious tampering at the hardware level and hence a clear and pressing need for new tools that enable rapid, robust and low-cost validation of circuit layouts. Optical backside imaging offers an attractive platform, but its limited resolution and throughput cannot cope with the nanoscale sizes of modern circuitry and the need to image over a large area. We propose and demonstrate a multi-spectral imaging approach to overcome these obstacles by identifying key circuit elements on the basis of their spectral response. This obviates the need to directly image the nanoscale components that define them, thereby relaxing resolution and spatial sampling requirements by 1 and 2-4 orders of magnitude, respectively. Our results directly address critical security needs in the integrated circuit supply chain and highlight the potential of spectroscopic techniques to address fundamental resolution obstacles caused by the need to image ever shrinking feature sizes in semiconductor integrated circuits. 1. Introduction. Semiconductor integrated circuits (ICs) are pervasive and essential components in virtually all modern devices, from personal computers, to medical equipment, to varied military systems and technologies. Their functionality is defined by a massive number (∼10^6 to 10^9 currently) of interconnected logic gates that correspond physically to various nanoscale doped regions, polysilicon and metal (usually copper and tungsten) structures.