Camouflaged Object Segmentation with Distraction Mining

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Download The

MAS Context Issue 22 / Summer ’14 Surveillance MAS Context Issue 22 / Summer ’14 Surveillance 3 MAS CONTEXT / 22 / SURVEILLANCE / 22 / CONTEXT MAS Welcome to our Surveillance issue. This issue examines the presence of surveillance around us— from the way we are being monitored in the physical and virtual world, to the potential of using the data we generate to redefine our relationship to the built environment. Organized as a sequence of our relationship with data, the contributions address monitoring, collecting, archiving, and using the traces that we leave, followed by camouflaging and deleting the traces that we leave. By exploring different meanings of surveillance, this issue seeks to generate a constructive conversation about the history, policies, tools, and applications of the information that we generate and how those aspects are manifested in our daily lives. MAS Context is a quarterly journal that addresses issues that affect the urban context. Each issue delivers a comprehensive view of a single topic through the active participation of people from different fields and different perspectives who, together, instigate the debate. MAS Context is a 501(c)(3) not for profit organization based in Chicago, Illinois. It is partially supported by a grant from the Graham Foundation for Advanced Studies in the Fine Arts. MAS Context is also supported by Wright. With printing support from Graphic Arts Studio. ISSN 2332-5046 5 “It felt more like a maximum security prison than a gated community SURVEILLANCE / 22 / CONTEXT MAS when the Chicago Housing Authority tried to beef up the safety of the neighborhood. Our privacy was invaded with police cameras Urban watching our every move. -

VOL 1, No 69 (69) (2021) the Scientific Heritage (Budapest, Hungary

VOL 1, No 69 (69) (2021) The scientific heritage (Budapest, Hungary) The journal is registered and published in Hungary. The journal publishes scientific studies, reports and reports about achievements in different scientific fields. Journal is published in English, Hungarian, Polish, Russian, Ukrainian, German and French. Articles are accepted each month. Frequency: 24 issues per year. Format - A4 ISSN 9215 — 0365 All articles are reviewed Free access to the electronic version of journal Edition of journal does not carry responsibility for the materials published in a journal. Sending the article to the editorial the author confirms it’s uniqueness and takes full responsibility for possible consequences for breaking copyright laws Chief editor: Biro Krisztian Managing editor: Khavash Bernat • Gridchina Olga - Ph.D., Head of the Department of Industrial Management and Logistics (Moscow, Russian Federation) • Singula Aleksandra - Professor, Department of Organization and Management at the University of Zagreb (Zagreb, Croatia) • Bogdanov Dmitrij - Ph.D., candidate of pedagogical sciences, managing the laboratory (Kiev, Ukraine) • Chukurov Valeriy - Doctor of Biological Sciences, Head of the Department of Biochemistry of the Faculty of Physics, Mathematics and Natural Sciences (Minsk, Republic of Belarus) • Torok Dezso - Doctor of Chemistry, professor, Head of the Department of Organic Chemistry (Budapest, Hungary) • Filipiak Pawel - doctor of political sciences, pro-rector on a management by a property complex and to the public relations -

A Catalogue of the Collection of American Paintings in the Corcoran Gallery of Art

A Catalogue of the Collection of American Paintings in The Corcoran Gallery of Art VOLUME I THE CORCORAN GALLERY OF ART WASHINGTON, D.C. A Catalogue of the Collection of American Paintings in The Corcoran Gallery of Art Volume 1 PAINTERS BORN BEFORE 1850 THE CORCORAN GALLERY OF ART WASHINGTON, D.C Copyright © 1966 By The Corcoran Gallery of Art, Washington, D.C. 20006 The Board of Trustees of The Corcoran Gallery of Art George E. Hamilton, Jr., President Robert V. Fleming Charles C. Glover, Jr. Corcoran Thorn, Jr. Katherine Morris Hall Frederick M. Bradley David E. Finley Gordon Gray David Lloyd Kreeger William Wilson Corcoran 69.1 A cknowledgments While the need for a catalogue of the collection has been apparent for some time, the preparation of this publication did not actually begin until June, 1965. Since that time a great many individuals and institutions have assisted in com- pleting the information contained herein. It is impossible to mention each indi- vidual and institution who has contributed to this project. But we take particular pleasure in recording our indebtedness to the staffs of the following institutions for their invaluable assistance: The Frick Art Reference Library, The District of Columbia Public Library, The Library of the National Gallery of Art, The Prints and Photographs Division, The Library of Congress. For assistance with particular research problems, and in compiling biographi- cal information on many of the artists included in this volume, special thanks are due to Mrs. Philip W. Amram, Miss Nancy Berman, Mrs. Christopher Bever, Mrs. Carter Burns, Professor Francis W. -

Mitl63 Pages.V3 Web.Indd

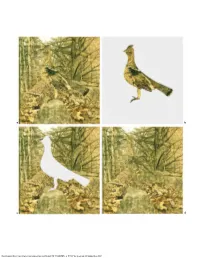

a b c d Downloaded from http://www.mitpressjournals.org/doi/pdf/10.1162/LEON_a_01337 by guest on 26 September 2021 G e N eral article seeing through camouflage Abbott Thayer, Background-Picturing and the Use of Cutout Silhouettes r o y r . b e h r e n s In the first decade of the twentieth century, while researching protective ments, reversible figure-ground was used in lowbrow puzzles coloration in nature, artist-naturalist Abbott H. Thayer (1849–1921), and games long before its use in art. In one example from working with his son, Gerald H. Thayer (1883–1939), hypothesized the nineteenth century, we view what seems at first to be an a kind of camouflage that he called “background-picturing.” It was his ABSTRACT contention that, in many animals, the patterns on their bodies make it image of trees on the island of Elba, and then, by a switch seem as if one could “see through” them, as if they were transparent. of attention, the space between the trees becomes the pro- This essay revisits that concept, Thayer’s descriptions and demonstrations file of Napoleon in exile. In another, Abraham Lincoln, on of it, and compares it to current computer-based practices of replacing horseback, rides through a benign grove of trees—and then, gaps in images with “content-aware” digital patches. suddenly, the background space between the trees becomes his horrific assassin, John Wilkes Booth. As I write this, a news article has recently appeared in which This same device resurfaced at the beginning of the twen- the paintings of Belgian surrealist René Magritte are de- tieth century, when it was put to more serious use by an scribed as having been “structured like jokes” [1]. -

Copperhead Snake on Dead Leaves, Study for Book Concealing Coloration in the Animal Kingdom Ca

June 2012 Copperhead Snake on Dead Leaves, study for book Concealing Coloration in the Animal Kingdom ca. 1910-1915 Abbott Handerson Thayer Born: Boston, Massachusetts 1849 Died: Monadnock, New Hampshire 1921 watercolor on cardboard mounted on wood panel sight 9 1/2 x 15/1/2 in. (24.1 x 39.3 cm) Smithsonian American Art Museum Gift of the heirs of Abbott Handerson Thayer 1950.2.15 Not currently on view Collections Webpage and High Resolution Image The Smithsonian American Art Museum owns eighty-seven works of art and studies by the artist Abbott Handerson Thayer made as illustrations for his book Concealing Coloration in the Animal Kingdom. Researcher Liz wanted to learn more about Thayer’s ideas on animal coloration and whether these were accepted by modern biologists. What did Thayer believe about animal coloration and its function? Abbott Handerson Thayer was an artist but had a lifelong fascination with animals and nature. He was a member of the American Ornithologists’ Union in whose publication, The Auk, his coloration studies were first published in 1896. Thayer and his son, Gerald Handerson Thayer, collaborated on the writing and illustrations for their 1909 book, Concealing-Coloration in the Animal Kingdom; an Exposition of the Laws of Disguise through Color and Pattern: Being a Summary of Abbott H. Thayer's Discoveries. Several of these original watercolors and oil paintings are on view in the Luce Foundation Center, including Blue Jays in Winter, Male Wood Duck in a Forest Pool, Red Flamingoes, Sunrise or Sunset, Roseate Spoonbill, and Roseate Spoonbills. Copperhead Snake on Dead Leaves illustrates Thayer’s contention that some animals have “ground-picturing” patterns that mimic the appearance of their surroundings. -

Selected Works Selected Works Works Selected

Celebrating Twenty-five Years in the Snite Museum of Art: 1980–2005 SELECTED WORKS SELECTED WORKS S Snite Museum of Art nite University of Notre Dame M useum of Art SELECTED WORKS SELECTED WORKS Celebrating Twenty-five Years in the Snite Museum of Art: 1980–2005 S nite M useum of Art Snite Museum of Art University of Notre Dame SELECTED WORKS Snite Museum of Art University of Notre Dame Published in commemoration of the 25th anniversary of the opening of the Snite Museum of Art building. Dedicated to Rev. Anthony J. Lauck, C.S.C., and Dean A. Porter Second Edition Copyright © 2005 University of Notre Dame ISBN 978-0-9753984-1-8 CONTENTS 5 Foreword 8 Benefactors 11 Authors 12 Pre-Columbian and Spanish Colonial Art 68 Native North American Art 86 African Art 100 Western Arts 264 Photography FOREWORD From its earliest years, the University of Notre Dame has understood the importance of the visual arts to the academy. In 1874 Notre Dame’s founder, Rev. Edward Sorin, C.S.C., brought Vatican artist Luigi Gregori to campus. For the next seventeen years, Gregori beautified the school’s interiors––painting scenes on the interior of the Golden Dome and the Columbus murals within the Main Building, as well as creating murals and the Stations of the Cross for the Basilica of the Sacred Heart. In 1875 the Bishops Gallery and the Museum of Indian Antiquities opened in the Main Building. The Bishops Gallery featured sixty portraits of bishops painted by Gregori. In 1899 Rev. Edward W. J. -

Introduction and Classification of Mimicry Systems

Chapter 1 INTRODUCTION AND CLASSIFICATION OF MIMICRY SYSTEMS It is hardly an exaggeration to say, that whilst reading and reflecting on the various facts given in this Memoir, we feel to be as near witnesses, as we can ever hope to be, of the creation of a new species on this earth. Charles Darwin (1863) referring to Henry Bates’ 1862 account of mimicry in Brazil COPYRIGHTED MATERIAL Mimicry, Crypsis, Masquerade and other Adaptive Resemblances, First Edition. Donald L. J. Quicke. © 2017 John Wiley & Sons Ltd. Published 2017 by John Wiley & Sons Ltd. 1 0003114056.INDD 1 7/14/2017 12:48:07 PM 2 Donald L. J. Quicke A BRIEF HISTORY at Oxford University. He describes the results of extensive experiments in which insects were presented to a captive The first clear definition of biological mimicry was that of monkey and its responses observed. The article is over 100 Henry Walter Bates (1825–92), a British naturalist who pages long and in the foreword he notes that a lot of the spent some 11 years collecting and researching in the observations are tabulated rather than given seriatim Amazonas region of Brazil (Bates 1862, 1864, 1981, G. because of the “great increase in the cost of printing”. Woodcock 1969). However, as pointed out by Stearn Nevertheless, such observations are essential first steps in (1981), Bates’ concept of the evolution of mimicry would understanding whether species are models or mimics or quite possibly have gone unnoticed were it not for Darwin’s have unsuspected defences. review of his book in The Natural History Review of 1863. -

2014-2015 Newsletter

WILLIAMS GRADUATE PROGRAM IN THE HISTORY OF ART OFFERED IN COLLABORATION WITH THE CLARK ART INSTITUTE WILLIAMS GRAD ART THE CLARK 2014–2015 NEWSLETTER LETTER FROM THE DIRECTOR Marc Gotlieb Dear Alumni, You will find more information inside this news- letter. I very much hope you enjoy this year’s Greetings from Williamstown, and welcome edition. And once again, many thanks to Kristen to the 2014 – 15 edition of the Graduate Art Oehlrich for shepherding this complicated publi- Newsletter. Our first year class has just returned cation from initial conception into your hands! from an exciting trip to Vienna and Paris, while here on the ground we are in the final weeks With all best wishes, before the phased re-opening of the Manton Research Center. The Marc Graduate Program suite will be completely refurbished as part of the renovation, including a larger classroom and other important ameni- ties—please come take a look. This has also been a significant year for transitions—Michael Holly has returned to teach for a year, while a search gets underway for a new director of the Clark, a new curatorial staff too. For its part the Williams Art Department will also be making new, continuing appointments this year and in the years ahead. All these changes will of course profoundly color the teaching complement of the Graduate Program in the years ahead, as a new generation of instructors arrives in Williamstown and at once embraces and remakes the Williams experi- TEACH IT FORWARD 3 ence in graduate education. FACULTY AND STAFF NEWS 5 This past fall Williams launched its campaign Teach it Forward. -

American Paintings, 1900–1945

National Gallery of Art NATIONAL GALLERY OF ART ONLINE EDITIONS American Paintings, 1900–1945 American Paintings, 1900–1945 Published September 29, 2016 Generated September 29, 2016 To cite: Nancy Anderson, Charles Brock, Sarah Cash, Harry Cooper, Ruth Fine, Adam Greenhalgh, Sarah Greenough, Franklin Kelly, Dorothy Moss, Robert Torchia, Jennifer Wingate, American Paintings, 1900–1945, NGA Online Editions, http://purl.org/nga/collection/catalogue/american-paintings-1900-1945/2016-09-29 (accessed September 29, 2016). American Paintings, 1900–1945 © National Gallery of Art, Washington National Gallery of Art NATIONAL GALLERY OF ART ONLINE EDITIONS American Paintings, 1900–1945 CONTENTS 01 American Modernism and the National Gallery of Art 40 Notes to the Reader 46 Credits and Acknowledgments 50 Bellows, George 53 Blue Morning 62 Both Members of This Club 76 Club Night 95 Forty-two Kids 114 Little Girl in White (Queenie Burnett) 121 The Lone Tenement 130 New York 141 Bluemner, Oscar F. 144 Imagination 152 Bruce, Patrick Henry 154 Peinture/Nature Morte 164 Davis, Stuart 167 Multiple Views 176 Study for "Swing Landscape" 186 Douglas, Aaron 190 Into Bondage 203 The Judgment Day 221 Dove, Arthur 224 Moon 235 Space Divided by Line Motive Contents © National Gallery of Art, Washington National Gallery of Art NATIONAL GALLERY OF ART ONLINE EDITIONS American Paintings, 1900–1945 244 Hartley, Marsden 248 The Aero 259 Berlin Abstraction 270 Maine Woods 278 Mount Katahdin, Maine 287 Henri, Robert 290 Snow in New York 299 Hopper, Edward 303 Cape Cod Evening 319 Ground Swell 336 Kent, Rockwell 340 Citadel 349 Kuniyoshi, Yasuo 352 Cows in Pasture 363 Marin, John 367 Grey Sea 374 The Written Sea 383 O'Keeffe, Georgia 386 Jack-in-Pulpit - No. -

![Arxiv:2104.10475V1 [Cs.CV] 21 Apr 2021 Camouflage Is the Concealment of Animals Or Objects by Ground](https://docslib.b-cdn.net/cover/4716/arxiv-2104-10475v1-cs-cv-21-apr-2021-camou-age-is-the-concealment-of-animals-or-objects-by-ground-5444716.webp)

Arxiv:2104.10475V1 [Cs.CV] 21 Apr 2021 Camouflage Is the Concealment of Animals Or Objects by Ground

Camouflaged Object Segmentation with Distraction Mining Haiyang Mei1 Ge-Peng Ji2,4 Ziqi Wei3,? Xin Yang1,? Xiaopeng Wei1 Deng-Ping Fan4 1 Dalian University of Technology 2 Wuhan University 3 Tsinghua University 4 IIAI https://mhaiyang.github.io/CVPR2021_PFNet/index Abstract Camouflaged object segmentation (COS) aims to iden- tify objects that are “perfectly” assimilate into their sur- roundings, which has a wide range of valuable applications. The key challenge of COS is that there exist high intrinsic similarities between the candidate objects and noise back- ground. In this paper, we strive to embrace challenges to- Image SINet Ours GT wards effective and efficient COS. To this end, we develop a bio-inspired framework, termed Positioning and Focus Figure 1. Visual examples of camouflaged object segmentation. Network (PFNet), which mimics the process of predation While the state-of-the-art method SINet [12] confused by the in nature. Specifically, our PFNet contains two key mod- background region which shares similar appearance with the cam- ules, i.e., the positioning module (PM) and the focus module ouflaged objects (pointed to by a arrow in the top row) or the cam- (FM). The PM is designed to mimic the detection process in ouflaged region that cluttered in the background (pointed to by a predation for positioning the potential target objects from arrow in the bottom row), our method can eliminate these distrac- a global perspective and the FM is then used to perform tions and generate accurate segmentation results. the identification process in predation for progressively re- fining the coarse prediction via focusing on the ambiguous regions. -

Sculpture As Artifice: Mimetic Form in the Environment

Sculpture as Artifice: Mimetic Form in the Environment Mark Booth A thesis in fulfilment of requirements for the degree of Master of Fine Arts (Research) The University of New South Wales Faculty of Arts, Design and Architecture May 2021 SCULPTURE AS ARTIFICE: MIMETIC FORM IN THE ENVIRONMENT ii Table of Contents Page Acknowledgements vi List of Figures vii Chapter One. Introduction 1.1 Sculpture and Site 1 1.2 Conundrum 2 1.3 List of components 2 1.4 Camouflage 3 1.5 Theory 4 1.6 Artists 5 1.7 Site/Non-site 5 Chapter Two. Camouflage: A brief history 2.1 Natural camouflage 8 2.2 Charles Darwin 8 2.3 Abbott Thayer 9 2.4 Biomimetics 11 2.5 Artificial camouflage 12 2.6 Camouflage in the military 12 2.7 Dazzle 13 2.8 Jellybean 15 2.9 United States Army field manuals 15 2.10 Ames Room 17 2.11 Ganzfeld effect 18 SCULPTURE AS ARTIFICE: MIMETIC FORM IN THE ENVIRONMENT iii 2.12 Voronoi diagrams 19 2.13 Military biotechnology 20 Chapter Three. Unorthodox art practices 3.1 Monochromatic colour 22 3.2 Optics 24 3.3 Algorithms 25 3.4 Displacement 26 3.5 Photosynthesis 30 Chapter Four. Retrospective art practice 4.1 Pixelation 33 4.2 UCP 34 4.3 Tessellation 36 4.4 Background blending 36 4.5 Illusion and Scale 38 4.6 Assimilation 40 4.7 Conundrum 41 4.8 Parasitism 42 Chapter Five. Site/Non-site 5.1 Site 43 5.2 Site centricity 43 5.3 Situationism 45 5.4 Nature’s materials 47 5.5 Gwion Gwion 48 5.6 Non-site 49 SCULPTURE AS ARTIFICE: MIMETIC FORM IN THE ENVIRONMENT iv 5.7 Wasteland 51 Chapter Six. -

Front Matter

Cambridge University Press 978-1-108-47759-8 — Mimicry and Display in Victorian Literary Culture Will Abberley Frontmatter More Information MIMICRY AND DISPLAY IN VICTORIAN LITERARY CULTURE Revealing the web of mutual influences between nineteenth-century scientific and cultural discourses of appearance, Mimicry and Display in Victorian Literary Culture argues that Victorian science and culture biologized appearance, reimagining imitation, concealment and self- presentation as evolutionary adaptations. Exploring how studies of animal crypsis and visibility drew on artistic theory and techniques to reconceptualise nature as a realm of signs and interpretation, Abber- ley shows that, in turn, this science complicated religious views of nature as a text of divine meanings, inspiring literary authors to rethink human appearances and perceptions through a Darwinian lens. Providing fresh insights into writers from Alfred Russel Wallace and Thomas Hardy to Oscar Wilde and Charlotte Perkins Gilman, Abberley reveals how the biology of appearance generated new understandings of deception, identity and creativity; reacted upon narrative forms such as crime fiction and the pastoral; and infused the rhetoric of cultural criticism and political activism. is Senior Lecturer in Victorian Literature at the University of Sussex. His other books are English Fiction and the Evolution of Language – (Cambridge University Press, ) and Underwater Worlds: Submerged Visions in Science and Culture (). He is a BBC New Generation Thinker and Philip Lever- hulme Prize recipient. © in this web service Cambridge University Press www.cambridge.org Cambridge University Press 978-1-108-47759-8 — Mimicry and Display in Victorian Literary Culture Will Abberley Frontmatter More Information - General editor Gillian Beer, University of Cambridge Editorial board Isobel Armstrong, Birkbeck, University of London Kate Flint, University of Southern California Catherine Gallagher, University of California, Berkeley D.