Jorge Marx Gómez.Pdf

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Influence of Agglomerations on the Development of Tourism in the Lviv Region

Studia Periegetica nr 3(27)/2019 DOI: 10.26349/st.per.0027.03

HALYNA LABINSKA*

Influence of Agglomerations on the Development of Tourism in the Lviv Region

Abstract. The author proposes a method of studying the influence of agglomerations on the development of tourism. The influence of agglomerations on the development of tourism is illustrated by the case of the Lviv region and the use of correlation analysis. In addition, official statistics about the main indicator of the tourism industry by region and city are subjected to centrographic analysis. The coincidence of weight centers confirms the exceptional influence of the Lviv agglomeration on the development of tourism in the region, which is illustrated with a cartographic visualization.

Keywords: agglomeration, tourism, research methodology

1. Introduction

Urbanization, as a complex social process, affects all aspects of society. The role of cities, especially large cities, in the life of Ukraine and its regions will only grow. The growing influence of cities on people’s lives and their activities was noted in the early 20th century by Professor V. Kubiyovych [1927]. Mochnachuk and Shypovych identified three stages of urbanization in Ukraine during the second half of the 20th century: 1) urbanization as a process of urban growth; 2) suburbanization – erosion of urban nuclei, formation of agglomerations; 3) rurbanization – urbanization of rural settlements within urbanized areas [Mochnachuk, Shypovych 1972: 41-48]. The stage of rurbanization is consistent with the classic definition of “agglomeration” in the context of European urbanism: a system that includes the city and its environs (Pierre Merlen and Francoise Shoe).

* Ivan Franko National University of Lviv (Ukraine), Department of Geography of Ukraine, e-mail: [email protected], orcid.org/0000-0002-9713-6291.

Quarterly Report

QUARTERLY REPORT for the Development Initiative for Advocating Local Governance in Ukraine (DIALOGUE) Project, October – December 2014

TABLE OF CONTENTS

RESUME
Chapter 1. KEY ACHIEVEMENTS IN THE REPORTING PERIOD
Chapter 2. PROJECT IMPLEMENTATION
2.1. Component 1: Legal Framework
Activity 2.1.1. Legislation drafting based on local governments legislative needs
Assessment of local government institutions needs in legislation and activities on the Technical Area Profiles
Studying the public opinion on decentralisation and its aspects
Legislation monitoring
Activity 2.1.2. Expert evaluation of conformity of draft legislation to the European Charter of Local Self-Governance
Activity 2.1.3. Introduction of institutional tools for local governments to participate in legislation drafting
Round table discussions in AUC Regional Offices and meetings of AUC Professional
Setting up a network of lawyers to participate in legislation drafting
2.2. Component 2: Policy dialogue
Activity 2.2.1. Increasing the participation of the AUC member cities in the policy dialogue established by the Association at the national level
Day of Reforms at the Ukrainian Municipal Forum
Cooperation with central government authorities
Budget decentralisation: amendments to the Budget and Tax Codes
Parliamentary local government support inter-faction group (local government caucus)
Participation in the work of parliamentary committees
Activity 2.2.2. Setting up advisory boards at the regional level with participation
Working sessions of Local Government Regional Advisory Boards
Selection of issues to be discussed at working meetings of Local Government Regional Advisory Boards in 2015
Activity 2.2.3.

City Size and Functional Specialization As Factors of Smart Management: a Case of Lviv Oblast, Ukraine

“City size and functional specialization as factors of smart management: A case of Lviv Oblast, Ukraine”

AUTHORS: Roman Lozynskyy, Oleh Hrymak, Lesya Kushnir, Oksana Terletska, Myroslava Vovk
ARTICLE INFO: Roman Lozynskyy, Oleh Hrymak, Lesya Kushnir, Oksana Terletska and Myroslava Vovk (2021). City size and functional specialization as factors of smart management: A case of Lviv Oblast, Ukraine. Problems and Perspectives in Management, 19(2), 384-397. doi:10.21511/ppm.19(2).2021.31
DOI: http://dx.doi.org/10.21511/ppm.19(2).2021.31
RELEASED ON: Monday, 28 June 2021
RECEIVED ON: Monday, 22 February 2021
ACCEPTED ON: Thursday, 10 June 2021
LICENSE: This work is licensed under a Creative Commons Attribution 4.0 International License
JOURNAL: "Problems and Perspectives in Management"
ISSN PRINT: 1727-7051
ISSN ONLINE: 1810-5467
PUBLISHER: LLC “Consulting Publishing Company “Business Perspectives”
FOUNDER: LLC “Consulting Publishing Company “Business Perspectives”
NUMBER OF REFERENCES: 48
NUMBER OF FIGURES: 3
NUMBER OF TABLES: 5

© The author(s) 2021. This publication is an open access article. businessperspectives.org

Problems and Perspectives in Management, Volume 19, Issue 2, 2021

Roman Lozynskyy (Ukraine), Oleh Hrymak (Ukraine), Lesya Kushnir (Ukraine), Oksana Terletska (Ukraine), Myroslava Vovk (Ukraine)

BUSINESS PERSPECTIVES: LLC “CPC “Business Perspectives”, Hryhorii Skovoroda lane, 10, Sumy, 40022, Ukraine, www.businessperspectives.org

Abstract

The process of understanding the factors that affect the implementation of smart management in cities is pivotal for using this concept to improve the well-being of the population.

© Mamchur Z., Drach Yu., Prytula S., 2020 UDC 582.323:581.9](477.83

ISSN 0206-5657. Visnyk of the Lviv University. Series Biology. 2020. Issue 82. P. 110–120
UDC 582.323:581.9](477.83) https://doi.org/10.30970/vlubs.2020.82.09

SPHAGNUM MOSSES OF THE MALE POLISSYA (LVIV REGION)

Z. Mamchur, Yu. Drach, S. Prytula
Ivan Franko National University of Lviv, 4, Hrushevskyi St., Lviv 79005, Ukraine, e-mail: [email protected]

The article summarizes current data about the condition and spread of Sphagnum mosses on the territory of Male Polissya in Lviv Region based on the material from our own field research, materials of National Herbarium of Ukraine (KW), the Herbarium National Museum of Natural History of the National Academy of Sciences of Ukraine (LWS) and literature data. An annotated list of the species of the genus Sphagnum was compiled and it includes 15 species together with an indication of place and date of collection, the names of collectors, the spread in Ukraine and biotopes in which the species may occur according to The National Habitat Catalogue of Ukraine and EUNIS. Sphagnum fallax (Klinggr.) Klinggr., S. fimbriatum Wils. and S. palustre L. were determined as the most widespread species of the genus Sphagnum in the research area. Two species, S. angustifolium and S. inundatum, are indicated for the first time for the territory of Lviv region. Six regionally rare species were found: S. capillifolium, S. cuspidatum, S. fallax, S. fimbriatum, S. obtusum and S. papillosum. The possible disappearance of a species Sphagnum centrale, S. contortum, S.

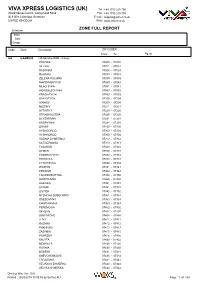

Viva Xpress Logistics (UK)

VIVA XPRESS LOGISTICS (UK)
World Xpress Centre, Galleymead Road, SL3 0EN Colnbrook, Berkshire, UNITED KINGDOM
Tel: +44 1753 210 700
Fax: +44 1753 210 709
E-mail: [email protected]
Web: www.vxlnet.co.uk

Selection ZONE FULL REPORT
Filter : Sort : Group :

Code: UA
Zone: UAAOD00
Description: UA-Ukraine AOD - 4 days

| Place | ZIP from | ZIP to |
|---|---|---|
| POLISKE | 07000 | 07004 |
| VILCHA | 07011 | 07012 |
| RADYNKA | 07024 | 07024 |
| RAHIVKA | 07033 | 07033 |
| ZELENA POLIANA | 07035 | 07035 |
| MAKSYMOVYCHI | 07040 | 07040 |
| MLACHIVKA | 07041 | 07041 |
| HORODESCHYNA | 07053 | 07053 |
| KRASIATYCHI | 07053 | 07053 |
| SLAVUTYCH | 07100 | 07199 |
| IVANKIV | 07200 | 07204 |
| MUSIIKY | 07211 | 07211 |
| DYTIATKY | 07220 | 07220 |
| STRAKHOLISSIA | 07225 | 07225 |
| OLYZARIVKA | 07231 | 07231 |
| KROPYVNIA | 07234 | 07234 |
| ORANE | 07250 | 07250 |
| VYSHGOROD | 07300 | 07304 |
| VYSHHOROD | 07300 | 07304 |
| RUDNIA DYMERSKA | 07312 | 07312 |
| KATIUZHANKA | 07313 | 07313 |
| TOLOKUN | 07323 | 07323 |
| DYMER | 07330 | 07331 |
| KOZAROVYCHI | 07332 | 07332 |
| HLIBOVKA | 07333 | 07333 |
| LYTVYNIVKA | 07334 | 07334 |
| ZHUKYN | 07341 | 07341 |
| PIRNOVE | 07342 | 07342 |
| TARASIVSCHYNA | 07350 | 07350 |
| HAVRYLIVKA | 07350 | 07350 |
| RAKIVKA | 07351 | 07351 |
| SYNIAK | 07351 | 07351 |
| LIUTIZH | 07352 | 07352 |
| NYZHCHA DUBECHNIA | 07361 | 07361 |
| OSESCHYNA | 07363 | 07363 |
| KHOTIANIVKA | 07363 | 07363 |
| PEREMOGA | 07402 | 07402 |
| SKYBYN | 07407 | 07407 |
| DIMYTROVE | 07408 | 07408 |
| LITKY | 07411 | 07411 |
| ROZHNY | 07412 | 07412 |
| PUKHIVKA | 07413 | 07413 |
| ZAZYMIA | 07415 | 07415 |
| POHREBY | 07416 | 07416 |
| KALYTA | 07420 | 07422 |
| MOKRETS | 07425 | 07425 |
| RUDNIA | 07430 | 07430 |
| BOBRYK | 07431 | 07431 |
| SHEVCHENKOVE | 07434 | 07434 |
| TARASIVKA | 07441 | 07441 |
| VELIKAYA DYMERKA | 07442 | 07442 |
| VELYKA | | |

The Vinnytsia Mass Graves and Some Other Soviet Sites of Execution

TABLE OF CONTENTS

Preface by L.R. Wynar
Acknowledgements
Editorial Policy

I. INTRODUCTION by Ihor Kamenetsky
Humanitarian and Anti-Humanitarian Trends in Modern History
The Vinnytsia Case and the Yezhovshchyna Era
The Role of the Local Ukrainian Population and of the German Occupation Forces in the Vinnytsia Case
The Vinnytsia Mass Graves and Some Other Soviet Sites of Execution
Conclusion

II. TESTIMONIES AND HEARINGS
M. Seleshko, Vinnytsia - The Katyn of Ukraine (A Report by an Eyewitness)
Petro Pavlovych, I Saw Hell: Fragment of Reminiscences
Bishop Sylvester, The Vynnytsya Tragedy
Archbishop Hyhoriy, P. Pavlovych, K. Sybirsky, Testimony on the Crime in Vynnytsya
Ihor Kamenetsky, Interview with an Eyewitness in August 1987
Archbishop Hyhoriy, Funeral Eulogy Delivered at Vinnytsia, October 3, 1943
Congressional Hearings, The Crimes of Khrushchev, Part

The Ukrainian Weekly 2004, No.27

www.ukrweekly.com

INSIDE:
• Ukraine’s economy sizzles during first five months of 2004 (page 3).
• Art by Olga Maryschuk, Aka Pereyma, Christina Pereyma (pages 12-13).
• Ivan Franko as depicted on philatelic issues (centerfold).

THE UKRAINIAN WEEKLY
Published by the Ukrainian National Association Inc., a fraternal non-profit association
Vol. LXXII, No. 27, SUNDAY, JULY 4, 2004, $1/$2 in Ukraine

Ukrainian community marks 40th anniversary of Taras Shevchenko monument in Washington
by Serhiy Zhykharev, Ukrainian National Information Service

WASHINGTON – The Ukrainian community in the United States commemorated the 40th anniversary of the unveiling of the Taras Shevchenko monument in Washington with a special program here on Saturday, June 26. [...] Church in the U.S.A. Ukraine’s Ambassador to the United States Mykhailo Reznik was joined at the ceremony by the general secretary of the Ukrainian World Congress (UWC), Victor Pedenko, as well as representatives of various Ukrainian American organizations.

PACE expresses concern over Rada’s new move on constitutional reform
by Roman Woronowycz, Kyiv Press Bureau

KYIV – The Parliamentary Assembly of the Council of Europe (PACE) reacted negatively on June 23 to word that the pro-presidential majority coalition in Ukraine’s Verkhovna Rada had successfully moved an initial reading of a new [...] before presidential elections were to be held. Mr. Oliinyk said during a press conference in Kyiv that PACE members had stated that they would take a very serious look at the situation in Ukraine during the organization’s autumn session. “It looks like we are going to have very serious problems in October,”

Russian Oppression in Ukraine

THE UKRAINIAN REVIEW
A Quarterly Magazine devoted to the study of Ukraine

EDITORIAL BOARD
Mrs. Slava Stetzko, M.A., Editor
Volodymyr Bohdaniuk, B.A., B. Litt., Associate Editor
Marta Savchuk, M.A., Associate Editor
Dr. Anatol Bedriy, Associate Editor
Professor Lew Shankowsky, Associate Editor
Oleh S. Romanyshyn, M.A., Associate Editor
Irene Osyczko, B.A., Associate Editor

Cover designed by R. Lisovskyy

Price: 75p or $2.00 a single copy
Annual Subscription: £3.00 or $8.00

Editorial correspondence should be sent to: The Editors, “The Ukrainian Review”, 200 Liverpool Road, London, N1 1LF.
Subscriptions should be sent to: “The Ukrainian Review” (Administration), c/o Association of Ukrainians in Great Britain, Ltd., 49 Linden Gardens, London, W2 4HG.

Overseas representatives:
USA: Organization for Defense of Four Freedoms for Ukraine, Inc., P.O. Box 304, Cooper Station, New York, N.Y. 10003.
Canada: Canadian League for Ukraine’s Liberation, 140 Bathurst Street, Toronto, Ont., M5V 2R3

Printed in Great Britain by the Ukrainian Publishers Limited, 200 Liverpool Road, N1 1LF. Tel.: 01

THE UKRAINIAN REVIEW
Vol. XXII No. 1 Spring 1976
A Quarterly Magazine

WE APPEAL TO THE CONSCIENCE OF ALL MEN OF GOOD WILL IN THE WORLD TO HELP SECURE THE RELEASE FROM SOVIET RUSSIAN PRISONS AND CONCENTRATION CAMPS OF ALL UKRAINIANS: FORMER RED CROSS PERSONNEL, POLITICAL PRISONERS AND ALL THOSE PUNISHED FOR DEMANDING HUMAN RIGHTS AND NATIONAL INDEPENDENCE.

For information please write to us or to any Ukrainian Organization in the Free World.

Published by The Association of Ukrainians in Great Britain, Ltd.

Funding Sources of "Studia Periegetica" 3(27)/2019

Funding sources of "Studia Periegetica" 3(27)/2019

The project is co-financed from the funds of the Ministry of Science and Higher Education constituting de minimis aid, granted under the “Support for scientific journals” program for the years 2019-2020 under agreement no. 475/WCN/2019/1 dated 31.07.2019 and from the funds of the WSB University in Poznań.

Studia Periegetica No. 3(27)/2019
Tourism and Recreation in the Socio-economic and Spatial Development of Cities
volume editor Agata Basińska-Zych
The WSB University in Poznan Press, Poznań 2019

Editor-in-chief: Marek Nowacki (WSB University in Poznań, Poland)

Associate Editors: Arnold Bernaciak (WSB University in Poznań, Poland), Grzegorz Gołembski (WSB University in Poznań, Poland), Agata Basińska-Zych (WSB University in Poznań, Poland), Editorial Secretary

International Editorial Advisory Board: Alexander Adamovsky (Ukrainian National Forestry University, Lviv, Ukraine), Ryszard Asienkiewicz (University

Urgently for Publication (Procurement Procedures)

Bulletin No. 6 (185), February 11, 2014

Urgently for publication (procurement procedures)

001902
Road Facilities Administration of Odesa City Council
22 Henerala Petrova St., 65078 Odesa
Dieliierhiev Hennadii Heorhiiovych
tel.: (048) 784–08–42; tel./fax: (048) 784–08–42; e–mail: [email protected]
Website of the Authorized agency which contains information on procurement: www.tender.me.gov.ua
Procurement subject: current repair of street and road network of Odesa. The area of asphalt concrete surface under current repair is 19 574,5 m2 (details are specified in the competitive bidding documents – addition No.1)
Supply/execution: Odesa; during 2014
Procurement procedure: open tender
Obtaining of competitive bidding documents: at the customer’s address, office 213, personally or by mail
Submission: at the customer’s address, office 213

001820
United Motor Transport Fleet of Health Care Establishments and Institutions of Poltava Oblast
7 Kotsiubynskoho St., 36039 Poltava
Denysko Victor Anatolyovych
tel.: (05322) 7–31–34; tel./fax: (05322) 2–96–26; e–mail: [email protected]
Website of the Authorized agency which contains information on procurement: www.tender.me.gov.ua
Procurement subject: code 19.20.2 – Liquid fuel and gas, lubricating oil 5.2.
Number of products or volume of works or services – 1 lot – petrol A–92 – 670 000 l; petrol A–95 – 45 000 l; diesel fuel – 141 000 l; diesel fuel “Euro” – 174 000 l; lot 2 – lubrication Litol 24 – 170 kg, car oil – 5896 l: lot 1 – Gasoline, diesel fuel, diesel fuel “Euro” – petrol A–92 – 670 000 l;

Development Areas of Lviv Region Small Border Cities Centres (E.g. Mostyska and Rava-Ruska)

DEVELOPMENT AREAS OF LVIV REGION SMALL BORDER CITIES CENTRES (E.G. MOSTYSKA AND RAVA-RUSKA)

Bogdan Posatskyy, Dr arch., professor, Lviv Polytechnic National University, Institute of Architecture, Department of Urban Planning
Yuliya Idak, Dr arch., docent, Lviv Polytechnic National University, Institute of Architecture, Department of Design

ABSTRACT

The subject of the article is an analysis of the socio-economic background and directions of spatial development of small towns in the 30 km border area of Lviv region. The spatial development of the centres of Mostyska and Rava-Ruska in the second half of the 20th century is described chronologically, taking these towns as typical examples. The article emphasizes the lack of effective solutions for the formation of public space, the protection of historical heritage and the servicing of international tourism. We describe design concepts for the formation of public spaces in the centres of these cities.

Keywords: center reconstruction, public space, small town, the city center.

space & FORM | przestrzeń i FORMa 24/1_2015

1. THE PROBLEM FORMULATION

Lviv region is one of three regions in Ukraine (alongside Volyn and Transcarpathia) whose territories are located near the border with Poland.

List of Cities of Ukraine

| City Name | City Name (in Ukrainian) | Subdivision | City status | Population (2001 Census) |
|---|---|---|---|---|
| Kyiv | Київ | Kyiv | S | 2,847,200 |
| Kharkiv | Харків | Kharkiv Oblast | O | 1,470,902 |
| Dnipropetrovsk | Дніпропетровськ | Dnipropetrovsk Oblast | O | 1,065,008 |
| Odesa | Одеса | Odessa Oblast | O | 1,029,049 |
| Donetsk | Донецьк | Donetsk Oblast | O | 1,016,194 |
| Zaporizhia | Запоріжжя | Zaporizhia Oblast | O | 815,256 |
| Lviv | Львів | Lviv Oblast | O | 732,818 |
| Kryvyi Rih | Кривий ріг | Dnipropetrovsk Oblast | R | 668,980 |
| Mykolaiv | Миколаїв | Mykolaiv Oblast | O | 514,136 |
| Mariupol | Маріуполь | Donetsk Oblast | R | 492,176 |
| Luhansk | Луганськ | Luhansk Oblast | O | 463,097 |
| Makiivka | Макіївка | Donetsk Oblast | R | 389,589 |
| Vinnytsia | Вінниця | Vinnytsia Oblast | O | 356,665 |
| Simferopol | Сімферополь | Crimea | O | 343,644 |
| Sevastopol | Севастополь | Sevastopol | S | 342,451 |
| Kherson | Херсон | Kherson Oblast | O | 328,360 |
| Poltava | Полтава | Poltava Oblast | O | 317,998 |
| Chernihiv | Чернігів | Chernihiv Oblast | O | 304,994 |
| Cherkasy | Черкаси | Cherkasy Oblast | O | 295,414 |
| Sumy | Суми | Sumy Oblast | O | 293,141 |
| Horlivka | Горлівка | Donetsk Oblast | R | 292,250 |
| Zhytomyr | Житомир | Zhytomyr Oblast | O | 284,236 |
| Dniprodzerzhynsk | Дніпродзержинськ | Dnipropetrovsk Oblast | R | 255,841 |
| Kirovohrad | Кіровоград | Kirovohrad Oblast | O | 254,103 |
| Khmelnytskyi | Хмельницький | Khmelnytskyi Oblast | O | 253,994 |
| Rivne | Рівне | Rivne Oblast | O | 248,813 |
| Chernivtsi | Чернівці | Chernivtsi Oblast | O | 240,621 |
| Kremenchuk | Кременчук | Poltava Oblast | R | 234,073 |
| Ternopil | Тернопіль | Ternopil Oblast | O | 227,755 |
| Ivano-Frankivsk | Івано-Франківськ | Ivano-Frankivsk Oblast | O | 218,359 |
| Lutsk | Луцьк | Volyn Oblast | O | 208,816 |
| Bila Tserkva | Біла Церква | Kiev Oblast | R | 200,131 |
| Kramatorsk | Краматорськ | Donetsk Oblast | R | 181,025 |