A Thesis by Valkyrie Arline Savage

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


The Big Alan Bangs Nachtsession Archive of the

The Big Alan Bangs Nachtsession Archive of the FoA. From February 2000, Alan Bangs had a special slot at Bayerischer Rundfunk in which he could host a two-hour programme: "On the initiative of Walter Meier, Alan became a regular guest DJ on the Nachtsessions, which are broadcast on Bayern2 in the night from Friday to Saturday. His first Nachtsession was on 18.02.2000, and since then he can be heard 3 to 4 times a year; the last programme was broadcast on 1.12.2012. In 2001 there was no Nachtsession, and in 2002 and 2003 there was only one programme each." (Quoted from the edited Alan Bangs wiki at http://www.friendsofalan.de/alans-wiki/) From 2004 there were up to four programmes a year; the last programme was on 1.12.2010. Alan Bangs' Nachtsession programmes were at times given titles or themes, which appeared on the Bayern 2 website. In some cases there were also introductory texts in addition to the playlists of these programmes. Where these could (still) be researched, they have been incorporated into this archive. Credits: Compiled by FriendsofAlan (FoA) from an evaluation of internet sources, in particular the (temporarily) publicly accessible web pages of Bayern 2 (see the current developments there in the following box). IN MEMORIAM OF THIS BROADCAST FORMAT AS WELL. Nachtsession: The Last One Turns Out the Light. Night leading into Thursday, 07.01.2016, 00:05 to 02:00, Bayern 2. The Last One Turns Out the Light: the final Nachtsession, with the pop dead of 2015. With Karl Bruckmaier. Every year the Grim Reaper comes around again, and this year he takes the Nachtsession series down with him. -

Shared Worlds 2018

SHARED WORLDS 2018 Shared Worlds 2018 By the teens at the Shared Worlds Camp 2018 Wofford College Spartanburg, South Carolina Cheeky Frawg Books Tallahassee, Florida Shared Worlds Summer Writing Camp Wofford College, Spartanburg, South Carolina With thanks to Cheeky Frawg Books and VanderMeer Creative, Inc., for making the printing of this anthology possible. The Shared Worlds Student Writing Book Copyright ©2018, Shared Worlds and Wofford College All stories and bestiary text copyright © 2018 to the individual students Introduction copyright © 2018 Jeremy LC Jones Cover & Art Design © 2018 by Jeremy Zerfoss Compiled by Jackie Gitlin Edited by Kari J. Wolfe Direct all queries to: Wofford College Shared Worlds c/o Tim Schmitz 429 North Church Street, Spartanburg, SC 29303-3663 The Shared Worlds concept is a creation of Jeremy L.C. Jones with Jeff VanderMeer and sponsored by Wofford College. The stories included in this anthology are works of collected fiction. All events portrayed in this book are fictitious, and any resemblance to real people or events is purely coincidental. All rights reserved, including the right to reproduce this book or portions thereof in any form without the express permission of the author and publisher. For registration and donation information, visit our website: http://www.sharedworldscamp.com ISBN: 978-0-9863177-7-4 CONTENTS 6 Introduction 9 Ollidamra 10 Isabel Astwood 12 Mextli Garcia 14 Christina Grier 16 Britney Jean 19 Avner (Lauren) Lyons 21 Sarah Crosby McKay 24 King Snider 27 Bryton Tanner 31 Michelle Taylor 34 Pen Zuleger 37 Aigyptos System 38 Georgia Bailey 41 Samuel Conteras 43 Sophia Harlow 46 Murphy Kalil 49 Felix M. -

(Bass & Vocals), Bruce Gilbert

WIRE 1977–1980 Wire were formed by Colin Newman (guitar & vocals), Graham Lewis (bass & vocals), Bruce Gilbert (guitar) and Robert Grey (drums). The band subjected themselves to a lengthy period of intense, 12-hour rehearsals, honing their (admittedly basic) musical skills until razor-sharp. What emerged was a version of rock shorn of all ornamentation and showbusiness. Newman and Gilbert’s chord sequences often took unexpected turns, Graham Lewis’s unique lyrics were vivid but oblique, and Grey’s drumming was minimal and metronomic. They may have arrived at the same time as punk and drawn on its initial burst of energy, but Wire’s forward-looking attitude meant they always stood apart from it. In fact, Wire could easily lay claim to having been the very first post-punk band. And yet, it’s equally true to say they could be viewed as part of the British tradition of left-field art-school bands that includes Roxy Music and The Pink Floyd. Wire played their debut proper at London’s Roxy club on 1 April, 1977. The band were immediately picked up by prodigious EMI offshoot label Harvest, and their debut album Pink Flag was released in December of the same year. The album was a collection of 21 songs, many of them sharp, abrasive and extremely short – six didn’t even make the one-minute mark. Yet many tracks, such as ‘12XU’ and ‘Ex-Lion Tamer’, are now rightly regarded as classics. Pink Flag received near universal acclaim and would be cited as a major influence on a panoramic range of artists, including Big Black, My Bloody Valentine, Blur, Henry Rollins, Joy Division and R.E.M. -

The Animate Object of Kinetic Art, 1955-1968

University of Pennsylvania ScholarlyCommons Publicly Accessible Penn Dissertations 2017 The Animate Object Of Kinetic Art, 1955-1968 Marina C. Isgro University of Pennsylvania, [email protected] Follow this and additional works at: https://repository.upenn.edu/edissertations Part of the History of Art, Architecture, and Archaeology Commons Recommended Citation Isgro, Marina C., "The Animate Object Of Kinetic Art, 1955-1968" (2017). Publicly Accessible Penn Dissertations. 2353. https://repository.upenn.edu/edissertations/2353 This paper is posted at ScholarlyCommons. https://repository.upenn.edu/edissertations/2353 For more information, please contact [email protected]. The Animate Object Of Kinetic Art, 1955-1968 Abstract This dissertation examines the development of kinetic art—a genre comprising motorized, manipulable, and otherwise transformable objects—in Europe and the United States from 1955 to 1968. Despite kinetic art’s popularity in its moment, existing scholarly narratives often treat the movement as a positivist affirmation of postwar technology or an art of mere entertainment. This dissertation is the first comprehensive scholarly project to resituate the movement within the history of performance and “live” art forms, by looking closely at how artists created objects that behaved in complex, often unpredictable ways in real time. It argues that the critical debates concerning agency and intention that surrounded moving artworks should be understood within broader aesthetic and social concerns in the postwar period—from artists’ attempts to grapple with the legacy of modernist abstraction, to popular attitudes toward the rise of automated labor and cybernetics. It further draws from contemporaneous phenomenological discourses to consider the ways kinetic artworks modulated viewers’ experiences of artistic duration. -

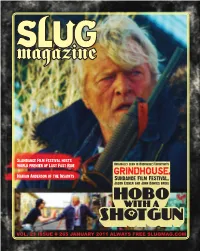

Vol. 21 Issue # 265 January 2011 Always Free Slugmag.Com

VOL. 21 ISSUE # 265 JANUARY 2011 ALWAYS FREE SLUGMAG.COM SaltLakeUnderGround 1 2 SaltLakeUnderGround SaltLakeUnderGround 3 SaltLakeUnderGround • Vol. 21• Issue # 265 • January 2011 • slugmag.com Publisher: Eighteen Percent Gray Shauna Brennan Editor: Angela H. Brown [email protected] Managing Editor: Marketing Coordinator: Bethany Jeanette D. Moses Fischer Editorial Assistant: Ricky Vigil Marketing: Ischa Buchanan, Jeanette D. Moses, Jessica Davis, Billy Action Sports Editor: Adam Dorobiala Ditzig, Hailee Jacobson, Stephanie Copy Editing Team: Jeanette D. Buschardt, Giselle Vickery, Veg Vollum, Moses, Rebecca Vernon, Ricky Josh Dussere, Chrissy Hawkins, Emily Vigil, Esther Meroño, Liz Phillips, Katie Burkhart, Rachel Roller, Jeremy Riley. Panzer, Rio Connelly, Joe Maddock, SLUG GAMES Coordinators: Mike Alexander Ortega, Mary Enge, Kolbie Brown, Jeanette D. Moses, Mike Stonehocker, Cody Kirkland, Hannah Reff, Sean Zimmerman-Wall, Adam Christian. Dorobiala, Jeremy Riley, Katie Panzer, Issue Design: Joshua Joye Jake Vivori, Brett Allen, Dave Brewer, Daily Calendar Coordinator: Billy Ditzig. Jessica Davis Distribution Manager: Eric Granato [email protected] Distro: Eric Granato, Tommy Dolph, Social Networking Coordinator: Tony Bassett, Joe Jewkes, Jesse Katie Rubio Hawlish, Nancy Burkhart, Adam Heath, Design Interns: Adam Dorobiala, Adam Okeefe, Manuel Aguilar, Ryan Eric Sapp, Bob Plumb. Worwood, Chris Proctor, David Ad Designers: Todd Powelson, Frohlich. Kent Farrington, Sumerset Bivens, Office Interns: Jessica Davis, Jeremy Jaleh -

XXXIV Edition Time Zones Programme

XXXIV edition, 21 September to 16 November 2019, “AD MUSEUM”. Saturday 21 September, 9:00 pm, Chiostro di Santa Chiara, Bari. Midori Hirano (JAP): Japanese musician, composer, sound artist and producer, born in Kyoto and based in Berlin since 2008. She has released a total of seven solo albums and has performed at events such as Boiler Room Berlin, Club Transmediale, Heroines of Sound Festival, Rotterdam International Film Festival and L.E.V. Festival. Delphine Dora (FR): Pianist, singer, improviser, composer and founder of the label Wild Silence. On her album “Eudaimon”, released by three: four records, she sets to music the verses of the poet Kaithleen Raine. Her music is considered intimate, wild, strange and unclassifiable. *** Sunday 22 September, 9:00 pm, Chiostro di Santa Chiara, Bari. Surma (POR): Surma's music is an unusual mix of electronic sounds, combined with loops and effects. The result is soft, captivating music that sits between jazz and ambient. The artist's Portuguese origins surface with a breath of nostalgia, and at times the music seems to recall Björk and Imogen Heap. Carmine Padula (ITA): A very young Italian pianist and composer, born in Foggia in 2000, he began studying piano at the age of 11. The winner of numerous national and international competitions, in July 2015 he won the 1st Festival of the City of Foggia with two of his compositions for piano and ensemble, taking first place overall among more than 80 participating groups. *** Saturday 28 September, 9:00 pm, Palazzo De Mari, Acquaviva delle Fonti (Ba). Arcoiris Ensemble (ITA-IND): The two acoustic guitarists Fausto Ciotti and Andrea Moriconi, together with the Indian tabla player Sanjay Kansa Banik, present their original blend of world music: Mediterranean atmospheres and bossa nova with a pinch of funk. -

(12) United States Patent (10) Patent No.: US 7,805,276 B1 Byers Et Al

(12) United States Patent (10) Patent No.: US 7,805,276 B1 Byers et al. (45) Date of Patent: Sep. 28, 2010 (54) IMPACT DETECTION SYSTEM 4,770,527 A 9/1988 Park 4,939,407 A 7/1990 Goo et al. (75) Inventors: Terry Byers, League City, TX (US); 4,975,616 A 12/1990 Park Frank L. Gibbons, Houston, TX (US); 5,089,741 A 2/1992 Park et al. Eric L. Christiansen, Houston, TX (US) 5,195,046 A 3/1993 Gerardi et al. 5,447,315 A 9/1995 Perkins (73) Assignee: The United States of America as 5,911,158 A 6/1999 Henderson et al. represented by the Administrator of 6,551,205 B1 * 4/2003 Koelzer et al. 473/454 the National Aeronautics and Space 6,664,716 B2 12/2003 Cuhat et al. Administration, Washington, DC (US) 6,700,314 B2 3/2004 Cuhat et al. 6,998,759 B2 2/2006 Lee et al. (*) Notice: Subject to any disclaimer, the term of this 2003/0001462 A1* 1/2003 Lee et al. 310/365 patent is extended or adjusted under 35 2006/0053534 A1* 3/2006 Mullen 2/456 U.S.C. 154(b) by 422 days. FOREIGN PATENT DOCUMENTS (21) Appl. No.: 11/958,937 JP 62106334 A 5/1987 JP 1223935 A 9/1989 (22) Filed: Dec. 18, 2007 (51) Int. Cl. * cited by examiner G01C 9/00 (2006.01) Primary Examiner Sujoy K Kundu G01C 17/00 (2006.01) (74) Attorney, Agent, or Firm Theodore U. Ro (52) U.S. Cl. 702/150; 702/35; 702/141; 702/149; 73/1.39; 73/583; 310/311; 310/334; (57) ABSTRACT 310/364; 473/454 (58) Field of Classification Search .................. -

THE Screamboard

THE SCREAMboard A Hands-free Digital Processing Solution for Acoustic Improvisers by HUNTER PORTERFIELD EWEN B.A., Case Western Reserve University / Cleveland Institute of Music Joint Music Program, 2007 B.S., Case Western Reserve University, 2007 M.M., University of Colorado at Boulder, 2009 Research Document Project TMUS 8329 A thesis submitted to the Faculty of the Graduate School of the University of Colorado in partial fulfillment of the requirements for the degree of Doctor of Musical Arts College of Music 2013 This thesis entitled: The SCREAMboard: A Hands-free Digital Processing Solution for Acoustic Improvisers written by Hunter Porterfield Ewen has been approved for the College of Music ___________________________________ (John Drumheller) ___________________________________ (Michael Theodore) ___________________________________ (Dan Kellogg) Date______________ The final contents of this thesis have been examined by the signatories, and we find that both the content and the form meet acceptable presentation standards of scholarly work in the above mentioned discipline. iii Ewen, Hunter (D.M.A., Music Composition and Theory) The SCREAMboard: A Hands-free Digital Processing Solution for Acoustic Improvisers Thesis directed by Instructor and Director of Music Technology John Drumheller While many devices offer electronic musicians opportunities to digitally record, augment, and modulate their sounds, there is a gap for acoustic, instrumental performers. Two primary problems that acoustic instrumentalists experience when using electronics -

16-01-20 FULL STOCK LIST A-Z Drone

DRONE RECORDS - FULL STOCK LIST A-Z - 20. Jan. 2016 Artist - Title - Format - Release Year - Label & Cat.-Nr - Price in Euro 1000SCHOEN Yoshiwara (do-CD, 2011, Nitkie label patch seven, €15.5) Amish Glamour (do-CD, 2012, Nitkie Records Patch ten, €17) 1000SCHOEN / AB INTRA Untitled (do-CD, 2014, Zoharum ZOHAR 070-2, €15.5) 15 DEGREES BELOW ZERO Under a Morphine Sky (CD, 2007, Force of Nature FON07, €8) Between Checks and Artillery. Between Work and Image (10, 2007, Angle Records A.R.10.03, €10) New Travel (CD, 2007, Edgetone Records EDT4062, €13) Morphine Dawn (maxi-CD, 2004, Crunch Pod CRUNCH 32, €7) Resting on A (CD, 2009, Edgetone Records EDT4088, €13) 21 GRAMMS Water-Membrane (CD, 2012, Greytone grey009, €12) 23 SKIDOO The Culling is Coming (CD, 2003, LTM Publishing / Boutique BOUCD 6604, €15) Seven Songs (CD, 2008, LTM Publishing LTMCD 2528, €14.5) 2:13 PM Anus Dei (CD, 2012, 213Records 213cd07, €10) 2KILOS & MORE 9,21 (mCD-R, 2006, Taalem alm 37, €5) 8floors lower (CD, 2007, Jeans Records 04, €13) 3/4HADBEENELIMINATED Theology (CD, 2007, Soleilmoon Recordings SOL 148, €19.5) Oblivion (CD, 2010, Die Schachtel DSZeit11, €14) 300 BASSES Sei Ritornelli (CD, 2012, Potlatch P212, €15) 400 LONELY THINGS same (LP, 2003, Bronsonunlimited BRO 000 LP, €12) 5IVE Hesperus (CD, 2008, Tortuga TR-037, €16) 5UU'S Crisis in Clay (CD, 1997, ReR Megacorp ReR 5uu2, €14) Hunger's Teeth (CD, 1994, ReR Megacorp ReR 5uu1, €14) 7JK (SIEBEN & JOB KARMA) Anthems Flesh (CD, 2012, Redroom Records REDROOM 010 CD , €13) 87 CENTRAL Formation (CD, 2003, Staalplaat -

Love Objects

Avoiding the Subject Avoiding the Subject MEDIA, CULTURE AND THE OBJECT Justin Clemens | Dominic Pettman A U P Avoiding the Subject Avoiding the Subject Media, Culture and the Object Justin Clemens Dominic Pettman AMSTERDAM UNIVERSITY PRESS Cover illustration: Merritt Symes Cover design: Sabine Mannel, N.A.P., Amsterdam Lay-out: PROgrafici, Goes ISBN 90 5356 716 X NUR 736 © Amsterdam University Press, Amsterdam 2004 All rights reserved. Without limiting the rights under copyright reserved above, no part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without the written permission of both the copyright owner and the author of the book. Table of Contents 5 Acknowledgments / 7 Introduction / 9 The Influence of Anxiety / 11 Chapter 1: The Aesthetic Object / 23 A Break in Transmission: Art, Appropriation and Accumulation / 25 Chapter 2: The Love Object / 37 Relations with Concrete Others / 39 (or, How We Learned to Stop Worrying and Love the Berlin Wall) Chapter 3: The Elusive Object / 57 “Look at the Bunny”: The Rabbit as Virtual Totem / 59 (or, What Roger Rabbit Can Teach Us About the Second Gulf War) Chapter 4: The Media(ted) Object / 81 From September 11 to the 7-11: Popular Propaganda and the Internet’s War on Terrorism / 83 Chapter 5: The Shared Object / 93 Abandoned Commonplaces: Some Belated Thoughts on Big Brother / 95 Chapter 6: The Moveable Object / 109 Public Transport: Jaunting from -

Charts 2008

===== ELEPHANT TALK ===== <http://www.burioni.it/forum/ridi/et/ethome.htm> electronic music magazine edited by Riccardo Ridi <[email protected]> deputy editor Filippo Tagliaferri -------------------------------------------------- Year XIV Number 74 (16 March 2009) -------------------------------------------------- CONTENTS - THE 2008 ET META-CHARTS / by FT - THE 2008 META-CHART OF ET'S WRITERS AND READERS / compiled by FT - THE 2008 META-CHART OF OTHER MAGAZINES AND SITES / compiled by FT - THE 2008 CHARTS OF ET'S WRITERS AND READERS / compiled by RR - THE 2008 CHARTS OF OTHER MAGAZINES AND SITES (ITALIAN) / compiled by RR - THE 2008 CHARTS OF OTHER MAGAZINES AND SITES (FOREIGN) / compiled by RR - ANECDOTES OF A MUSIC LOVER (ABOUT LOST!) / by Marco Misuri -------------------------------------------------- - THE 2008 ET META-CHARTS / by Filippo Tagliaferri Another year ended a few months ago, and more or less on schedule we find ourselves taking stock of it. Our first impression is that 2008 yielded a few 'substantial' titles, on which the attention of most listeners (magazines, sites or ordinary ET voters) was concentrated. Scrolling through the various charts, what immediately catches the eye is the constant presence of records such as Portishead's third album or Dear Science by TV On The Radio, voted for by practically everyone, and ranked near the top. In both cases these are pleasant confirmations. As for Portishead, we had really stopped hoping, ten years after their previous record, and we can only be pleased with their excellent artistic health. As for TV On The Radio, it is out of place to keep speaking of them as a new or emerging band, since we are now looking at their third high-quality record (out of three).