Ironclad Apps: End-To-End Security Via Automated Full-System Verification Chris Hawblitzel, Jon Howell, and Jacob R

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Radiohead's Pre-Release Strategy for in Rainbows

Making Money by Giving It for Free: Radiohead’s Pre-Release Strategy for In Rainbows. Faculty Research Working Paper Series. Marc Bourreau (Telecom ParisTech and CREST), Pinar Dogan (Harvard Kennedy School), Sounman Hong (Yonsei University). July 2014, RWP14-032. Visit the HKS Faculty Research Working Paper Series at: http://web.hks.harvard.edu/publications The views expressed in the HKS Faculty Research Working Paper Series are those of the author(s) and do not necessarily reflect those of the John F. Kennedy School of Government or of Harvard University. Faculty Research Working Papers have not undergone formal review and approval. Such papers are included in this series to elicit feedback and to encourage debate on important public policy challenges. Copyright belongs to the author(s). Papers may be downloaded for personal use only. www.hks.harvard.edu Making money by giving it for free: Radiohead’s pre-release strategy for In Rainbows. Marc Bourreau, Pınar Doğan, and Sounman Hong. June 2014. Abstract: In 2007 a prominent British alternative-rock band, Radiohead, pre-released its album In Rainbows online, and asked its fans to "pick-their-own-price" (PYOP) for the digital download. The offer was available for three months, after which the band released and commercialized the album, both digitally and on CD. In this paper, we use weekly music sales data in the US between 2004 and 2012 to examine the effect of Radiohead’s unorthodox strategy on the band’s album sales. We find that Radiohead’s PYOP offer had no effect on the subsequent CD sales. Interestingly, it yielded higher digital album sales compared to a traditional release. -

A Critical Review of Acquisitions Within the Australian Vocational Education and Training Sector 2012 to 2017 Kristina M. Nicho

A Critical Review of Acquisitions within the Australian Vocational Education and Training Sector 2012 to 2017 Kristina M. Nicholls Victoria University Business School Submitted in fulfilment of requirements for the degree of Doctor of Business Administration 2020 Abstract A Critical Review of Acquisitions within the Vocational Education and Training Sector 2012 to 2017 Organisations often look to acquisitions as a means of achieving their growth strategy. However, notwithstanding the theoretical motivations for engaging in acquisitions, research has shown that the acquiring organisation, following the acquisition, frequently experiences a fall in share price and degraded operating performance. Given the failure rates that are conservatively estimated at over 50%, the issue of acquisitions is worthy of inquiry in order to determine what factors make for a successful or alternately an unsuccessful outcome. The focus of this study is the vocational education sector in Australia, where private registered training organisations [RTOs] adopted acquisitions as a strategy to increase their market share and/or support growth strategies prompted by deregulation and a multi-billion dollar training investment by both Australian State and Federal governments in the past ten years. Fuelled by these changes in Government policy, there was a dramatic growth in RTO acquisitions between the period 2012 and 2017. Many of these acquisitions ended in failure, including several RTOs that listed on the Australian Stock Exchange [ASX]. This study investigates acquisitions of Australian RTOs, focusing on the period from 2012 to 2017 [study period]. The aim is to understand what factors contributed to the success and/or failure of acquisitions of registered training organisations in the Australian Private Education Sector. -

Total Cost of Ownership and Open Source Software

What Place Does Open Source Software Have In Australian And New Zealand Schools’ and Jurisdictions’ ICT Portfolios? TOTAL COST OF OWNERSHIP AND OPEN SOURCE SOFTWARE Research paper by Kathryn Moyle Department of Education and Children’s Services South Australia July 2004 1 Contents Contents 2 List of tables and diagrams 3 Abbreviations 4 Acknowledgements 5 Executive summary 6 Options for future actions 7 Introduction 9 Key questions 9 Open source software and standards 9 Comparison of open source and proprietary software licences 11 Building on recent work 12 Contexts 14 Use of ICT in schools 14 Current use of open source software in Australia and New Zealand 14 Procurement and deployment of ICT 15 Department of Education and Children’s Services, South Australia 16 What is total cost of ownership? 17 Purpose of undertaking a total cost of ownership analysis 17 Why undertake total cost of ownership work? 17 How can total cost of ownership analyses help schools, regions and central agencies plan? 17 Total cost of ownership analyses should not be undertaken in isolation 18 Total cost of ownership and open source software 18 Review of literature 19 Open source software in government schools 19 Total cost of ownership 20 Total cost of ownership in schools 21 Total cost of ownership, open source software and schools 23 Summary 25 Undertaking a financial analysis 26 Principles underpinning a total cost of ownership 26 Processes 27 Testing a financial model: Total Cost of Ownership in a school 33 Scenario 33 Future plans 40 ICT deployment options -

Page 14 Street, Hudson, 715-386-8409 (3/16W)

JOURNAL OF THE AMERICAN THEATRE ORGAN SOCIETY NOVEMBER | DECEMBER 2010. ANNOUNCING A NEW DVD TEACHING TOOL. Do you sit at a theatre organ confused by the stoprail? Do you know it’s better to leave the 8' Tibia OUT of the left hand? Stumped by how to add more to your intros and endings? John Ferguson and Friends: The Art of Playing Theatre Organ. Learn about arranging, registration, intros and endings. From the simple basics all the way to the Circle of 5ths. Artist instructors: Allen Organ artists Jonas Nordwall, Lyn Larsen, Jelani Eddington and special guest Simon Gledhill. Order now and receive a special bonus DVD! Allen artist Walt Strony will produce a special DVD lesson based on YOUR questions and topics! (Strony DVD ships separately in 2011.) Recorded at Octave Hall at the Allen Organ headquarters in Macungie, Pennsylvania on the 4-manual STR-4 theatre organ and the 3-manual LL324Q theatre organ. More than 5-1/2 hours of valuable information, a value of over $300. These are lessons you can play over and over again to enhance your ability to play the theatre organ. It’s just like having these five great artists teaching right in your living room! Four-DVD package plus a bonus DVD from five of the world’s greatest players! Yours for just $149 plus $7 shipping. Order now using the insert or Marketplace order form in this issue. Order by December 7th to receive in time for Christmas!
THEATRE ORGAN NOVEMBER | DECEMBER 2010 Volume 52 | Number 6. Macy’s Grand Court organ. FEATURES: My First Convention: Trevor Dodd 12; Amateur Theatre Organist Winner 13; ATOS Summer Youth Camp 14; London’s Musical Museum 20. DEPARTMENTS: 4 Vox Humana; 4 Ciphers; 5 President’s Message; 6 Directors’ Corner; 7 Vox Pops; 8 News & Notes; 10 Professional Perspectives. On the Cover: The former Lowell Ayars Wurlitzer, now in Greek Hall, Macy’s Center City, Philadelphia. -

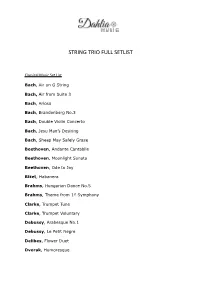

String Trio Full Setlist

STRING TRIO FULL SETLIST Classical Music Set List Bach, Air on G String Bach, Air from Suite 3 Bach, Arioso Bach, Brandenburg No.3 Bach, Double Violin Concerto Bach, Jesu, Joy of Man’s Desiring Bach, Sheep May Safely Graze Beethoven, Andante Cantabile Beethoven, Moonlight Sonata Beethoven, Ode to Joy Bizet, Habanera Brahms, Hungarian Dance No.5 Brahms, Theme from 1st Symphony Clarke, Trumpet Tune Clarke, Trumpet Voluntary Debussy, Arabesque No.1 Debussy, Le Petit Negre Delibes, Flower Duet Dvorak, Humoresque Dvorak, Largo from New World Symphony Einaudi, Svanire Elgar, Chanson de Matin Elgar, Salut d’Amour Faure, Pavane Franck, Panis Angelicus Gardel, Tango Por Una Cabeza Gilbert and Sullivan, Three Little Maids Gounod, Ave Maria Handel, Air in D Handel, Air in F Handel, Arrival of the Queen of Sheba Handel, Bourree in F Handel, Coro Handel, Hornpipe in D Handel, Hornpipe in F Handel, La Rejouissance Handel, La Paix Handel, Largo from Xerxes Handel, Minuet in D Handel, Minuet in D Minor Handel, Minuet in G Minor Handel, Queen of Sheba Handel, Water Music Jenkins, Palladio Joplin, The Sycamore Kreisler, Schon Rosmarin Mascagni, Intermezzo from Cavalleria Rusticana Mendelssohn, Wedding March Mouret, Rondeau Mozart, Eine Kleine Nachtmusik Mozart, Rondo Alla Turca Pachelbel, Canon in D Puccini, O Mio Babbino Caro Purcell, Two Rondeaux: Abdelazer and The Fairy Queen Schubert, Ave Maria Schubert, Moment Musical Schubert, Standchen Schumann, Romance No.2 Severn, Polish Dance Strauss, Pizzicato Polka Tchaikovsky, Romeo and Juliet Love Theme Tchaikovsky, -

View Song List

Song Artist What's Up 4 Non Blondes You Shook Me All Night Long AC/DC Song of the South Alabama Mountain Music Alabama Piano Man Billy Joel Austin Blake Shelton Like a Rolling Stone Bob Dylan Boots of Spanish Leather Bob Dylan Summer of '69 Bryan Adams Tennessee Whiskey Chris Stapleton Mr. Jones Counting Crows Ants Marching Dave Matthews Dust on the Bottle David Lee Murphy The General Dispatch Drift Away Dobie Gray American Pie Don McLean Castle on the Hill Ed Sheeran Thinking Out Loud Ed Sheeran Rocket Man Elton John Tiny Dancer Elton John Your Song Elton John Drink In My Hand Eric Church Give Me Back My Hometown Eric Church Springsteen Eric Church Like a Wrecking Ball Eric Church Record Year Eric Church Wonderful Tonight Eric Clapton Rock & Roll Eric Hutchinson OK It's Alright With Me Eric Hutchinson Cruise Florida Georgia Line Friends in Low Places Garth Brooks Thunder Rolls Garth Brooks The Dance Garth Brooks Every Storm Runs Out Gary Allan Hey Jealousy Gin Blossoms Slide Goo Goo Dolls Friend of the Devil Grateful Dead Let Her Cry Hootie and the Blowfish Bubble Toes Jack Johnson Flake Jack Johnson Barefoot Bluejean Night Jake Owen Carolina On My Mind James Taylor Fire and Rain James Taylor I Won't Give Up Jason Mraz Margaritaville Jimmy Buffett Leaving on a Jet Plane John Denver All of Me John Legend Jack & Diane John Mellencamp Folsom Prison Blues Johnny Cash Don't Stop Believin' Journey Faithfully Journey Walking in Memphis Marc Cohn Sex and Candy Marcy Playground 3am Matchbox 20 Unwell Matchbox 20 Bright Lights Matchbox 20 You Took The Words Right Out Of Meat Loaf All About That Bass Meghan Trainor Man In the Mirror Michael Jackson To Be With You Mr. -

The Dark Side of the Attack on Colonial Pipeline

“Public GitHub is often a blind spot in the security team’s perimeter” Jérémy Thomas, Co-founder and CEO, GitGuardian. Volume 5 | Issue 06 | June 2021. Traceable enables security to manage their application and API risks given the continuous pace of change and modern threats to applications. Know your application DNA. Download the practical guide to API Security. Learn how to secure your APIs. This practical guide shares best practices and insights into API security. Scan or visit Traceable.ai/CISOMag EDITOR’S NOTE: DIGITAL FORENSICS EDUCATION MUST KEEP UP WITH EMERGING TECHNOLOGIES. “There is nothing like first-hand evidence.” - Sherlock Holmes. Brian Pereira, Editor-in-Chief. If the brilliant detective Sherlock Holmes and his dependable and trustworthy assistant Dr. Watson were alive and practicing today, they would have to contend with crime in the digital world. They would be up against cybercriminals working across borders who use sophisticated obfuscation and stealth techniques. That would make their endeavor to collect artefacts and first-hand evidence so much more difficult! As personal computers became popular in the 1980s, criminals started using PCs for crime. Records of their nefarious activities were stored on hard disks and floppy disks. Tech-savvy criminals used computers to perform forgery, money laundering, or data theft. Computer Forensics Science emerged as a practice to investigate and extract evidence from personal computers and associated media like floppy disk, hard disk, and CD-ROM. This digital evidence could be used in court to support cases. President & CEO: Jay Bavisi. Editorial Management: Editor-in-Chief Brian Pereira* [email protected]; Senior Vice President Karan Henrik [email protected] -

Operating System Security – a Short Note

Operating System Security – A Short Note 1,2Mr. Kunal Abhishek, 2Dr. E. George Dharma Prakash Raj 1Society for Electronic Transactions and Security (SETS), Chennai 2Bharathidasan University, Trichy [email protected], [email protected] 1. Introduction An Operating System (OS) is viewed as a Reference Monitor (RM) or a Reference Validation Mechanism (RVM) that provides basic level security. In [1], Anderson reported three design requirements for a Reference Monitor or Operating System: the OS or RM should be tamper-proof, meaning that OS programs cannot be altered; it should always be invoked; and it must be small enough to be analyzed and tested so that its completeness can be assured. These design requirements became the driving principle of OS development, and a wide range of modern operating systems follow Anderson’s design principles. It was also observed in [2] that most attacks target either the OS itself or the programs running on it. Attacks on the OS can be mitigated to a great extent through formal verification, which proves properties of the OS code against criteria such as safety, reliability, validity, and completeness. Formal verification of an OS is, however, an intricate task that is feasible only when the RVM or RM is small enough to be analyzed and tested within a reasonable time frame. The other way of attacking an OS is to attack programs running on top of it, such as device drivers, and then inject malware through the interfaces these programs have with the OS. In this way, malware can be injected into sensitive kernel code to make the OS malfunction. -

November 1981

AMERICAN VIOLA SOCIETY American Chapter of the INTERNATIONALE VIOLA FORSCHUNGSGESELLSCHAFT November NEWSLETTER 21 1981 A MESSAGE FROM OUR NEW PRESIDENT A Tribute to Myron Rosenblum I want to thank the members of the American Viola Society for the honor you have bestowed on me in the recent elections. I am indeed grateful, but also awed by the responsibility of being the president of the American Viola Society. It will be very difficult to fill the shoes of Myron Rosenblum, the founder of our Society. During his tenure as president, the society membership has grown to over 38' members. For many years, Myron was the president, secretary, treasurer, and editor of the Newsletter of our organization. In addition, his home was stored with books, music, and recordings which were made available to members of the Society at reduced rates. Mrs. Rosenblum should also receive due credit for assistance and interest in this project, which did not include any monetary profit. The Newsletter, which Myron has edited, has been a source of information in all areas pertaining to the viola. We all regret that this will be the last Newsletter written by Myron. He will continue, however, to contribute articles and act as an adviser for future issues. The recently ratified By-Laws of the American Viola Society provide that the immediate Past-President will continue to serve as an officer. This is indeed fortunate for the new president. I shall rely on Myron for advice and assistance during the next two years, whenever new problems confront the Society. -

Advanced Development of Certified OS Kernels Prof

Advanced Development of Certified OS Kernels Zhong Shao (PI) and Bryan Ford (Co-PI) Department of Computer Science Yale University P.O.Box 208285 New Haven, CT 06520-8285, USA {zhong.shao,bryan.ford}yale.edu July 15, 2010 1 Innovative Claims Operating System (OS) kernels form the bedrock of all system software; they can have the greatest impact on the resilience, extensibility, and security of today’s computing hosts. A single kernel bug can easily wreck the entire system’s integrity and protection. We propose to apply new advances in certified software [86] to the development of a novel OS kernel. Our certified kernel will offer safe and application-specific extensibility [8], provable security properties with information flow control, and accountability and recovery from hardware or application failures. Our certified kernel builds on proof-carrying code concepts [74], where a binary executable includes a rigorous machine-checkable proof that the software is free of bugs with respect to specific requirements. Unlike traditional verification systems, our certified software approach uses an expressive general-purpose meta-logic and machine-checkable proofs to support modular reasoning about sophisticated invariants. The rich meta-logic enables us to verify all kinds of low-level assembly and C code [10,28,31,44,68,77,98] and to establish dependability claims ranging from simple safety properties to advanced security, correctness, and liveness properties. We advocate a modular certification framework for kernel components, which mirrors and enhances the modularity of the kernel itself. Using this framework, we aim to create not just a “one-off” lump of verified kernel code, but a statically and dynamically extensible kernel that can be incrementally built and extended with individual certified modules, each of which will provably preserve the kernel’s overall safety and security properties. -

And Big Companies

The Pmarca Blog Archives (select posts from 2007-2009) Marc Andreessen copyright: Andreessen Horowitz cover design: Jessica Hagy produced using: Pressbooks Contents THE PMARCA GUIDE TO STARTUPS Part 1: Why not to do a startup 2 Part 2: When the VCs say "no" 10 Part 3: "But I don't know any VCs!" 18 Part 4: The only thing that matters 25 Part 5: The Moby Dick theory of big companies 33 Part 6: How much funding is too little? Too much? 41 Part 7: Why a startup's initial business plan doesn't matter that much 49 THE PMARCA GUIDE TO HIRING Part 8: Hiring, managing, promoting, and firing executives 54 Part 9: How to hire a professional CEO 68 How to hire the best people you've ever worked with 69 THE PMARCA GUIDE TO BIG COMPANIES Part 1: Turnaround! 82 Part 2: Retaining great people 86 THE PMARCA GUIDE TO CAREER, PRODUCTIVITY, AND SOME OTHER THINGS Introduction 97 Part 1: Opportunity 99 Part 2: Skills and education 107 Part 3: Where to go and why 120 The Pmarca Guide to Personal Productivity 127 PSYCHOLOGY AND ENTREPRENEURSHIP The Psychology of Entrepreneurial Misjudgment: Biases 1-6 142 Age and the Entrepreneur: Some data 154 Luck and the entrepreneur: The four kinds of luck 162 Serial Entrepreneurs 168 THE BACK PAGES Top 10 science fiction novelists of the '00s ... so far (June 2007) 173 Bubbles on the brain (October 2009) 180 OK, you're right, it IS a bubble (October 2009) 186 The Pmarca Guide to Startups Part 1: Why not to do a startup In this series of posts I will walk through some of my accumulated knowledge and experience in building high-tech startups. -

Taking Place This Week BRIT POP QUIZ…

WEEKLY BLOG wk02. Available each week on the Quiz On Demand website and as a pdf download that you can print and take with you. This week’s birthdays, a featured Brit Pop quiz, mid-week sport, this week in history, and some of the fab quiz questions that didn’t make it onto this week’s quizzes. MID-WEEK SPORT… taking place this week. Monday 11 January 2021: Celtic v Hibernian. Tuesday 12 January 2021: Sheffield United v Newcastle United; Burnley v Manchester United; Wolverhampton Wanderers v Everton. Wednesday 13 January 2021: Manchester City v Brighton & Hove Albion; Aston Villa v Tottenham Hotspur. Thursday 14 January 2021: Arsenal v Crystal Palace. BRIT POP QUIZ… From the hazy crazy days of the nineties, here’s a Brit Pop feature quiz you can add as an extra round to your weekly pub quiz. Give it a go!!! 1 What is the surname of the Oasis brothers Liam and Noel? GALLAGHER 2 'Female of the Species' was the fourth single off which Liverpool band’s debut album ‘Spiders’? SPACE 3 Richard Ashcroft was the singer with which nineties band? THE VERVE 4 In the lyrics of the Pulp song 'Common People', 'She came from Greece, she had a thirst for knowledge.' She studied what at Saint Martin's College? SCULPTURE 5 Which Blur song shares its name with a Manchester music festival? PARKLIFE 6 'Good Enough' and 'Staying Out For The Summer' were which band’s best-known songs? DODGY 7 Which Brit Pop band formed in York in the early nineties? SHED 7 8 Which band won a gong for best British Single at the 1996 Brit Awards for ‘Roll With It’? OASIS 9 Briefly known as Seymour,