LizardFS Documentation Release Latest

Recommended publications

FOSDEM 2017 Schedule

FOSDEM 2017 - Saturday 2017-02-04 (1/9)

Rooms: Janson, K.1.105 (La Fontaine), H.2215 (Ferrer), H.1301 (Cornil), H.1302 (Depage), H.1308 (Rolin), H.1309 (Van Rijn), H.2111, H.2213, H.2214, H.3227, H.3228, …

[Schedule grid, 09:30 to 12:30: talk titles by room, opening with "Welcome to FOSDEM 2017" at 09:30 and "Kubernetes on the road to GIFEE" at 10:00]

ZFS 2018 and Onward

2018 and Onward
BY ALLAN JUDE

2018 is going to be a big year for not only FreeBSD, but for OpenZFS as well. OpenZFS is the collaboration of developers from IllumOS, FreeBSD, Linux, Mac OS X, many industry vendors, and a number of other projects to maintain and advance the open-source version of Sun's ZFS filesystem.

Improved Pool Import
• 2018Q1

A new patch set changes the way pools are imported, such that they depend less on configuration data from the system. Instead, the possibly outdated configuration from the system is used to find the pool, and partially open it read-only. Then the correct on-disk configuration is loaded. This reduces the number of …

RAID-Z Expansion
• 2018Q4

The ability to add an additional disk to an existing RAID-Z VDEV to grow its size without changing its redundancy level. For example, a RAID-Z2 consisting of 6 disks could be expanded to contain 7 disks, increasing the available space. This is accomplished by reflowing the data across the disks, so as to not change an individual block's offset from the start of the VDEV. The new disk will appear as a new column on the right, and the data will be relocated to maintain the offset within the VDEV. This is similar to reflowing a paragraph by making it wider, without changing the order of the words. In the end this means all of the new space shows up at the end of the disk, without any fragmentation.
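The paragraph-reflow analogy for RAID-Z expansion can be illustrated with a toy model. This is only a sketch under strong assumptions: blocks are plain labels, parity and real on-disk layout are ignored, and the helper names `layout` and `reflow` are hypothetical, not part of any ZFS tool or API.

```python
def layout(blocks, width):
    """Arrange a linear sequence of blocks into rows, one column per disk."""
    return [blocks[i:i + width] for i in range(0, len(blocks), width)]


def reflow(rows, new_width):
    """Re-wrap the same sequence over more columns without reordering it."""
    flat = [block for row in rows for block in row]
    return layout(flat, new_width)


# Twelve blocks spread over 6 disks, then reflowed over 7 disks.
blocks = [f"b{i}" for i in range(12)]
before = layout(blocks, 6)
after = reflow(before, 7)

# Each block keeps its position in the linear sequence, so the slots
# gained by the new column all end up after the last block.
free_slots = len(before) * 7 - len(blocks)

for row in after:
    print(" ".join(row))
print("free slots at the end:", free_slots)
```

In this model the wider layout holds the same blocks in the same order, which is why the extra capacity appears as one contiguous region at the end rather than as scattered gaps.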

Best Practices for OpenZFS L2ARC in the Era of NVMe

Best Practices for OpenZFS L2ARC in the Era of NVMe
Ryan McKenzie, iXsystems
2019 Storage Developer Conference. © iXsystems. All Rights Reserved.

Agenda
❏ Brief Overview of OpenZFS ARC / L2ARC
❏ Key Performance Factors
❏ Existing "Best Practices" for L2ARC
  ❏ Rules of Thumb, Tribal Knowledge, etc.
❏ NVMe as L2ARC
❏ Testing and Results
❏ Revised "Best Practices"

[Diagram repeated on the following slides: FreeBSD + OpenZFS server with NIC/HBA, ARC, and a zpool containing SLOG vdevs, data vdevs, and L2ARC vdevs]

ARC Overview
● Adaptive Replacement Cache
● Resides in system memory
● Shared by all pools
● Used to store/cache:
  ○ All incoming data
  ○ "Hottest" data and metadata (a tunable ratio)
● Balances between
  ○ Most Frequently Used (MFU)
  ○ Most Recently Used (MRU)

L2ARC Overview
● Level 2 Adaptive Replacement Cache
● Resides on one or more storage devices
  ○ Usually Flash
● Device(s) added to pool
  ○ Only services data held by that pool
● Used to store/cache:
  ○ "Warm" data and metadata (about to be evicted from ARC)
  ○ Indexes to L2ARC blocks stored in ARC headers

ZFS Writes
● All writes go through ARC, written blocks are "dirty" until on stable storage
  ○ async write ACKs immediately
  ○ sync write copied to ZIL/SLOG then ACKs
  ○ copied to data vdev in TXG
● When no longer dirty, written blocks stay in ARC and move through MRU/MFU lists normally

Debian Reference (Referência Debian)

Debian Reference
Osamu Aoki

Copyright © 2013-2021 Osamu Aoki

This Debian Reference (version 2.85) (2021-09-17 09:11:56 UTC) is intended to provide a broad overview of the Debian system as a post-installation user's guide. It covers many aspects of system administration through shell-command examples for non-developers.

Collaborators
Title: Debian Reference
Written by: Osamu Aoki, 17 September 2021

Contents
1 GNU/Linux tutorials
1.1 Console basics
1.1.1 The shell prompt
1.1.2 The shell prompt under GUI
1.1.3 The root account
1.1.4 The root shell prompt
1.1.5 GUI system administration tools
1.1.6 Virtual consoles
1.1.7 How to leave the command prompt
1.1.8 How to shut down the system
1.1.9 Recovering a sane console
1.1.10 Additional package suggestions for the newbie
1.1.11 An extra user account
1.1.12 Configuration …

OpenZFS on Linux, HEPiX 2014, October 16, 2014, Brian Behlendorf

OpenZFS on Linux
HEPiX 2014, October 16, 2014
Brian Behlendorf
LLNL-PRES-XXXXXX
This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC

High Performance Computing
● Advanced Simulation
● Massive Scale
● Data Intensive
● Top 500 (June 2014)
  – #3 Sequoia: 20.1 Peak PFLOP/s, 1,572,864 cores, 55 PB of storage at 850 GB/s
  – #9 Vulcan: 5.0 Peak PFLOP/s, 393,216 cores, 6.7 PB of storage at 106 GB/s
World class computing resources

Linux Clusters
● 2001: First Linux cluster
● Today: ~10 large Linux clusters (100–3,000 nodes) and 10-20 smaller ones
● Near-commodity hardware
● Open source software stack (TOSS)
  – Tri-Laboratory Operating System Stack
  – Modified RHEL targeted for HPC
  – RHEL kernel optimized for clusters
  – Moab and SLURM for scheduling
  – Lustre parallel filesystem
  – Additional packages for monitoring, power/console, compilers, etc.
LLNL Loves Linux

Lustre
● Lustre is our parallel filesystem of choice
● Livermore Computing Filesystems:
  – Open: 20 PB in 5 filesystems
  – Secure: 22 PB in 3 filesystems
  – Plus the 55 PB Sequoia filesystem
● 1000+ storage servers
● Billions of files
● Users want:
  – Access to their data from every cluster
  – Good performance
  – High availability
How do we design a system to handle this?

Lustre is used extensively …

ZFS Basics & Various ZFS RAID Levels: A Little About Zettabyte File System (ZFS / OpenZFS)

BDNOG11 | Cox's Bazar | Day # 3 | ZFS Basics & Various ZFS RAID Levels

ZFS Basics & Various ZFS RAID Levels: A Little About Zettabyte File System (ZFS / OpenZFS)

Disclaimer: The scope of this topic is not to discuss the architecture of ZFS (OpenZFS) in detail, but rather its features, use cases and operational methods.

What is ZFS?
● ZFS is a combined file system and logical volume manager designed by Sun Microsystems and now owned by Oracle Corporation. It was designed and implemented by a team at Sun Microsystems led by Jeff Bonwick and Matthew Ahrens. Matt is a founding member of OpenZFS.
● Its development started in 2001 and it was officially announced in 2004. In 2005 it was integrated into the main trunk of Solaris and released as part of OpenSolaris.
● It was described by one analyst as "the only proven Open Source data-validating enterprise file system". This file system has also been termed the "world's safest file system" by some analysts.
● ZFS is scalable, and includes extensive protection against data corruption, support for high storage capacities, efficient data compression and deduplication.
● ZFS is available for Solaris (and its variants), BSD (and its variants) and Linux (integrated into Canonical's Ubuntu as a native kernel module from version 16.04.x).
● OpenZFS was announced in September 2013 as the truly open source successor to the ZFS project.

Matt Ahrens, Brian Behlendorf

LinuxCon 2013, September 17, 2013
Brian Behlendorf, Open ZFS on Linux
LLNL-PRES-643675
This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC

High Performance Computing
● Advanced Simulation
  – Massive Scale
  – Data Intensive
● Top 500
  – #3 Sequoia: 20.1 Peak PFLOP/s, 1,572,864 cores, 55 PB of storage at 850 GB/s
  – #8 Vulcan: 5.0 Peak PFLOP/s, 393,216 cores, 6.7 PB of storage at 106 GB/s
● World class computing resources

Linux Clusters
● First Linux cluster deployed in 2001
● Near-commodity hardware
● Open Source
● Clustered High Availability Operating System (CHAOS)
  – Modified RHEL Kernel
  – New packages: monitoring, power/console, compilers, etc.
  – Lustre Parallel Filesystem
  – ZFS Filesystem
LLNL Loves Linux

Lustre Filesystem
● Massively parallel distributed filesystem
● Lustre servers use a modified ext4
  – Stable and fast, but...
  – No scalability
  – No data integrity
  – No online manageability
● Something better was needed
  – Use XFS, BTRFS, etc?
  – Write a filesystem from scratch?
  – Port ZFS to Linux?
Existing Linux filesystems do not meet our requirements

ZFS on Linux History
● 2008 – Prototype to determine viability
● 2009 – Initial ZVOL and Lustre support
● 2010 – Development moved to GitHub
● 2011 – POSIX layer …

FreeBSD vs. Linux: ZFS, by Allan Jude

FreeBSD vs. Linux: ZFS
BY ALLAN JUDE

OpenZFS is available on many platforms, including FreeBSD and Solaris derivatives like IllumOS, as well as Mac OS X and Linux. However, not all of the functionality is available on the latter platforms. The FreeBSD Project has fully adopted ZFS, putting significant effort into integrating it with the system and management tools to make ZFS a seamless part of the OS, rather than a bolted-on extra. OpenZFS is better integrated, instrumented, and documented on FreeBSD than on any of the various …

Why Use ZFS?

It is not that other filesystems are bad; they just make the mistake of trusting your storage hardware to return your data when you ask for it. As it turns out, hard drives are pretty good at that, but pretty good is often not good enough. ZFS is the only open-source, production-quality filesystem that can not only detect but correct the errors when a disk returns incorrect data. By combining the roles of filesystem and volume manager, ZFS is also able to ensure your data is safe, even in the absence of one or more disks, depending on your configuration. ZFS doesn't trust your hardware; it verifies that the correct data was returned from each read from the disk.

The primary design consideration for ZFS is the safety of the data. Every block that is written to the filesystem is accompanied by a checksum of the data, stored with the other metadata. That metadata block also has a checksum, as does its parent, all the way up to the top-level block, called the uber block.
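The chain of checksums described above, where each parent records the checksum of its children up to a single top-level block, can be sketched as a small tree. This is an illustrative model only: the `Block` class and the `link` and `verify` helpers are hypothetical names, and SHA-256 from Python's standard library is used as a convenient stand-in rather than as a statement about the ZFS on-disk format.

```python
import hashlib
from dataclasses import dataclass, field


def checksum(data: bytes) -> str:
    """Checksum of a block's bytes (SHA-256 as a stand-in)."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class Block:
    payload: bytes
    # Each entry pairs the checksum recorded by the parent with the child block.
    children: list = field(default_factory=list)

    def serialize(self) -> bytes:
        # A block's bytes cover its payload and the checksums it stores for
        # its children, so a parent's checksum depends on everything below it.
        recorded = "".join(expected for expected, _ in self.children)
        return self.payload + recorded.encode()


def link(parent: Block, child: Block) -> None:
    """Record the child's current checksum in the parent (build bottom-up)."""
    parent.children.append((checksum(child.serialize()), child))


def verify(block: Block, expected: str) -> bool:
    """Check a block against the checksum its parent holds, then recurse."""
    if checksum(block.serialize()) != expected:
        return False
    return all(verify(child, recorded) for recorded, child in block.children)


# Build a three-level tree: data block, metadata block, top-level block.
data = Block(b"file contents")
meta = Block(b"metadata")
link(meta, data)
top = Block(b"top-level block")
link(top, meta)
top_checksum = checksum(top.serialize())

print(verify(top, top_checksum))   # the intact tree verifies
data.payload = b"file c0ntents"    # simulate a disk returning wrong data
print(verify(top, top_checksum))   # the mismatch is detected on read
```

Storing each checksum in the parent rather than next to the data means a corrupted block cannot vouch for itself; detection only requires walking down from a trusted top-level checksum.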

Executive Summary

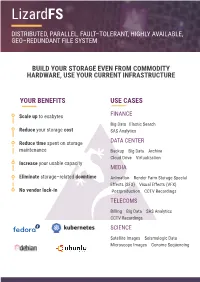

LizardFS

DISTRIBUTED, PARALLEL, FAULT-TOLERANT, HIGHLY AVAILABLE, GEO-REDUNDANT FILE SYSTEM

BUILD YOUR STORAGE EVEN FROM COMMODITY HARDWARE, USE YOUR CURRENT INFRASTRUCTURE

YOUR BENEFITS
● Scale up to exabytes
● Reduce your storage cost
● Reduce time spent on storage maintenance
● Increase your usable capacity
● Eliminate storage-related downtime
● No vendor lock-in

USE CASES
● FINANCE: Big Data, Elastic Search, SAS Analytics
● DATA CENTER: Backup, Big Data, Archive, Cloud Drive, Virtualization
● MEDIA: Animation, Render Farm Storage, Special Effects (SFX), Visual Effects (VFX), Postproduction, CCTV Recordings
● TELECOMS: Billing, Big Data, SAS Analytics, CCTV Recordings
● SCIENCE: Satellite Images, Seismologic Data, Microscope Images, Genome Sequencing

DATA SAFETY
Thanks to High Availability and Erasure Coding mechanisms, server failures will not affect your business continuity. Your data will always stay safe.

REDUCING RTO
Thanks to LizardFS, the time needed to recover from a disaster is reduced massively. The stability of your business is what really matters.

REDUCED MAINTENANCE TIME
Disk failures are handled automatically with no need for human intervention. Thanks to that you don't have to hire an army of sysadmins.

FULL TRANSPARENCY
LizardFS is an open-source solution. You can trust hundreds of our Community members, not just us.

USE YOUR CURRENT INFRASTRUCTURE
LizardFS is 100% hardware agnostic. You can run it on any hardware you want.

GEO REPLICATION
It allows you to create geo-stretched clusters stretching virtually any distance.

PLUG AND SCALE
Scale vertically as well as horizontally by simply adding or removing a single drive or node.

INCREASED PERFORMANCE
LizardFS allows for parallel writes and reads, thus enhancing performance.

COST EFFICIENCY
With us your existing storage keeps growing instead of being replaced every few years.

EASY TO INSTALL AND MAINTAIN
You don't have to graduate from the School of …

FreeBSD Enterprise Storage, Polish BSD User Group, 2020/02/11

FreeBSD Enterprise Storage
Polish BSD User Group, 2020/02/11

Welcome
FreeBSD Enterprise Storage
Sławomir Wojciech Wojtczak
vermaden.wordpress.com
twitter.com/vermaden
bsd.network/@vermaden
https://is.gd/bsdstg

What is Enterprise Storage?
The wikipedia.org/wiki/enterprise_storage page tells nothing about enterprise. It actually just redirects to the wikipedia.org/wiki/data_storage page. The other wikipedia.org/wiki/computer_data_storage page does the same. The wikipedia.org/wiki/enterprise page is just a meta page with links.

Common Characteristics of Enterprise Storage
● Category that includes services/products designed for large organizations.
● Can handle large volumes of data and large numbers of simultaneous users.
● Involves centralized storage repositories such as SAN or NAS devices.
● Requires more time and experience/expertise to set up and operate.
● Generally costs more than consumer or small business storage devices.
● Generally offers higher reliability/availability/scalability.

EnterpriCe or EnterpriSe?
DuckDuckGo does not provide a search results count. A Google search for the word enterprice gives ~ 1 )00 000 results. A Google search for the word enterprise gives ~ 1 000 000 000 results (1000 times more).
● Most dictionaries send you from the word enterprice to the term enterprise.
● Given the PRICE of many enterprise solutions it could be enterPRICE …
● … or enterpri$e as well.

855400Cd46b6c9e7683b5ace58a55234.Pdf

Ars Technica UK

Encryption options are great, but Apple's attitude on checksums is still funky.

by Adam H. Leventhal - Jun 26, 2016 1:00pm UTC

[Image caption: Two hours or so of WWDC keynoting and Tim Cook didn't mention a new file system once? (Andrew Cunningham)]

This article was originally published on Adam Leventhal's blog in multiple parts.

Apple announced a new file system that will make its way into all of its OS variants (macOS, tvOS, iOS, watchOS) in the coming years. Media coverage to this point has been mostly breathless elongations of Apple's developer documentation. With a dearth of detail I decided to attend the presentation and Q&A with the APFS team at WWDC. Dominic Giampaolo and Eric Tamura, two members of the APFS team, gave an overview to a packed room; along with other members of the team, they patiently answered questions later in the day. With those data points and some first-hand usage I wanted to provide an overview and analysis both as a user of Apple-ecosystem products and as a long-time operating system and file system developer.

The overview is divided into several sections. I'd encourage you to jump around to topics of interest or skip right to the conclusion (or to the tweet summary). Highest praise goes to encryption; ire to data integrity.

SibylFS: Formal Specification and Oracle-Based Testing for POSIX and Real-World File Systems

SibylFS: formal specification and oracle-based testing for POSIX and real-world file systems

Tom Ridge¹, David Sheets², Thomas Tuerk³, Andrea Giugliano¹, Anil Madhavapeddy², Peter Sewell²
¹University of Leicester, ²University of Cambridge, ³FireEye
http://sibylfs.io/

Abstract

Systems depend critically on the behaviour of file systems, but that behaviour differs in many details, both between implementations and between each implementation and the POSIX (and other) prose specifications. Building robust and portable software requires understanding these details and differences, but there is currently no good way to systematically describe, investigate, or test file system behaviour across this complex multi-platform interface.

In this paper we show how to characterise the envelope of allowed behaviour of file systems in a form that enables practical and highly discriminating testing. We give a mathematically rigorous model of file system behaviour, SibylFS, that specifies the range of allowed behaviours of a file system for any sequence of the system calls within our scope, and that can be used as a test oracle to decide whether an observed trace is allowed by the model, both for validating the model and for testing file systems against it.

1. Introduction

Problem. File systems, in common with several other key systems components, have some well-known but challenging properties:

• they provide behaviourally complex abstractions;
• there are many important file system implementations, each with its own internal complexities;
• different file systems, while broadly similar, nevertheless behave quite differently in some cases; and
• other system software and applications often must be written to be portable between file systems, and file systems themselves are sometimes ported from one OS to another, or written to support application portability.

File system behaviour, and especially these variations in be…