ZFS Basics and Various ZFS RAID Levels: A Little About the Zettabyte File System (ZFS / OpenZFS)

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Elastic Storage for Linux on System z, IBM Research & Development Germany

Peter Münch, T/L Test and Development – Elastic Storage for Linux on System z, IBM Research & Development Germany. Elastic Storage for Linux on IBM System z. © Copyright IBM Corporation 2014. Session objectives: This presentation introduces Elastic Storage, based on General Parallel File System technology, which will be available for Linux on IBM System z. Understand the concepts of Elastic Storage and which functions will be available for Linux on System z. Learn how you can integrate and benefit from Elastic Storage in a Linux on System z environment. Finally, get your first impression in a live demo of Elastic Storage. Trademarks: The following are trademarks of the International Business Machines Corporation in the United States and/or other countries: AIX*, DB2*, DS8000*, ECKD, FlashSystem, IBM*, IBM (logo)*, MQSeries*, Storwize*, System p*, System x*, System z*, Tivoli*, WebSphere*, XIV*, z/VM* (* registered trademarks of IBM Corporation). The following are trademarks or registered trademarks of other companies. Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries. Cell Broadband Engine is a trademark of Sony Computer Entertainment, Inc. in the United States, other countries, or both and is used under license therefrom. Intel, Intel logo, Intel Inside, Intel Inside logo, Intel Centrino, Intel Centrino logo, Celeron, Intel Xeon, Intel SpeedStep, Itanium, and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation. Sakis Kasampalis. Kongens Lyngby 2010, IMM-MSC-2010-63. Technical University of Denmark, Department Of Informatics, Building 321, DK-2800 Kongens Lyngby, Denmark. Phone +45 45253351, Fax +45 45882673, www.imm.dtu.dk. Abstract: In this work I am focusing on Copy On Write based file systems. Copy On Write is used on modern file systems for providing (1) metadata and data consistency using transactional semantics, (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administrate file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks. Most computers are already based on multi-core and multiple processor architectures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

ZFS (On Linux) Use Your Disks in Best Possible Ways Dobrica Pavlinušić CUC Sys.Track 2013-10-21 What Are We Going to Talk About?

ZFS (on Linux): use your disks in best possible ways. Dobrica Pavlinušić, http://blog.rot13.org, CUC sys.track 2013-10-21.
What are we going to talk about?
● ZFS history
● Disks or SSD and for what?
● Installation
● Create pool, filesystem and/or block device
● ARC, L2ARC, ZIL
● snapshots, send/receive
● scrub, disk reliability (smart)
● tuning zfs
● downsides
ZFS history
2001 – Development of ZFS started with two engineers at Sun Microsystems.
2005 – Source code was released as part of OpenSolaris.
2006 – Development of FUSE port for Linux started.
2007 – Apple started porting ZFS to Mac OS X.
2008 – A port to FreeBSD was released as part of FreeBSD 7.0.
2008 – Development of a native Linux port started.
2009 – Apple's ZFS project closed. The MacZFS project continued to develop the code.
2010 – OpenSolaris was discontinued, the last release was forked. Further development of ZFS on Solaris was no longer open source.
2010 – illumos was founded as the truly open source successor to OpenSolaris. Development of ZFS continued in the open. Ports of ZFS to other platforms continued porting upstream changes from illumos.
2012 – Feature flags were introduced to replace legacy on-disk version numbers, enabling easier distributed evolution of the ZFS on-disk format to support new features.
2013 – Alongside the stable version of MacZFS, ZFS-OSX used ZFS on Linux as a basis for the next generation of MacZFS.
2013 – The first stable release of ZFS on Linux.
2013 – Official announcement of the OpenZFS project.
Terminology
● COW - copy on write
○ doesn’t

VIA RAID Configurations

VIA RAID configurations. The motherboard includes a high performance IDE RAID controller integrated in the VIA VT8237R southbridge chipset. It supports RAID 0, RAID 1 and JBOD with two independent Serial ATA channels. RAID 0 (called Data striping) optimizes two identical hard disk drives to read and write data in parallel, interleaved stacks. Two hard disks perform the same work as a single drive but at a sustained data transfer rate double that of a single disk alone, thus improving data access and storage. Use of two new identical hard disk drives is required for this setup. RAID 1 (called Data mirroring) copies and maintains an identical image of data from one drive to a second drive. If one drive fails, the disk array management software directs all applications to the surviving drive as it contains a complete copy of the data in the other drive. This RAID configuration provides data protection and increases fault tolerance to the entire system. Use two new drives or use an existing drive and a new drive for this setup. The new drive must be of the same size or larger than the existing drive. JBOD (Spanning) stands for Just a Bunch of Disks and refers to hard disk drives that are not yet configured as a RAID set. This configuration concatenates multiple disks so that they appear as a single disk to the operating system; it does not store data redundantly. Spanning does not deliver any advantage over using separate disks independently and does not provide fault tolerance or other RAID performance benefits. If you use either the Windows® XP or Windows® 2000 operating system (OS), first copy the RAID driver from the support CD to a floppy disk before creating RAID configurations.

Optimizing the Ceph Distributed File System for High Performance Computing

Optimizing the Ceph Distributed File System for High Performance Computing. Kisik Jeong (Sungkyunkwan University, Suwon, South Korea), Carl Duffy (Seoul National University, Seoul, South Korea), Jin-Soo Kim (Seoul National University, Seoul, South Korea), Joonwon Lee (Sungkyunkwan University, Suwon, South Korea). Abstract: With increasing demand for running big data analytics and machine learning workloads with diverse data types, high performance computing (HPC) systems consequently need to support diverse types of storage services. Ceph is one possible candidate for such HPC environments, as Ceph provides interfaces for object, block, and file storage. Ceph, however, is not designed for HPC environments, thus it needs to be optimized for HPC workloads. In this paper, we find and analyze problems that arise when running HPC workloads on Ceph, and propose a novel optimization technique called F2FS-split, based on the F2FS file system and several other optimizations. We measure the performance of Ceph in HPC environments, and show that
[...] traditional HPC workloads. Traditional HPC workloads perform reads from a large shared file stored in a file system, followed by writes to the file system in a parallel manner. In this case, a storage service should provide good sequential read/write performance. However, Ceph divides large files into a number of chunks and distributes them to several different disks. This feature has two main problems in HPC environments: 1) Ceph translates sequential accesses into random accesses, 2) Ceph needs to manage many files, incurring high journal overheads in the underlying file system.

Proxmox VE with Ceph &

Proxmox VE with Ceph & ZFS: Future-Proof Infrastructure in the Data Center. Alwin Antreich, Proxmox Server Solutions GmbH. FrOSCon 14 | 10 August 2019. Welcome! Alwin Antreich: software developer at Proxmox, 15 years in IT as a system and network administrator. Proxmox Server Solutions GmbH: Proxmox since 2005, based in Vienna (AT), active community, global partner network, Proxmox VE (AGPL v3), Proxmox Mail Gateway (AGPL v3), enterprise support and services. Traditional infrastructure. Hyperconvergence. Hyperconverged infrastructure. Prerequisites for hyperconvergence: CPU / RAM / network / storage. Always use enough of everything. What is 'that'? ● Ceph & ZFS: software-based storage solutions (https://ceph.io/, http://open-zfs.org/) ● ZFS local / Ceph distributed over the network ● Excellent performance, availability, and scalability ● Management and monitoring with Proxmox VE ● Technical support for Ceph & ZFS included in the Proxmox subscription. ZFS architecture. ZFS ARC, L2ARC and ZIL [diagram: with ZIL versus without ZIL; components shown are Application, ARC, RAM, ZIL, SSD, HDD]. Ceph network. Ceph docs: https://docs.ceph.com/docs/master/

Red Hat OpenStack* Platform with Red Hat Ceph* Storage

Intel® Data Center Blocks for Cloud – Red Hat* OpenStack* Platform with Red Hat Ceph* Storage. Reference Architecture Guide for deploying a private cloud based on Red Hat OpenStack* Platform with Red Hat Ceph Storage using Intel® Server Products. Rev 1.0, January 2017. Intel® Server Products and Solutions. Document Revision History: January 2017, revision 1.0, initial release. Disclaimers: Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at Intel.com, or from the OEM or retailer. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting www.intel.com/design/literature.htm.

Building Reliable Massive Capacity SSDs Through a Flash Aware RAID-Like Protection †

Applied Sciences, Article. Building Reliable Massive Capacity SSDs through a Flash Aware RAID-Like Protection †. Jaeho Kim (Department of Aerospace and Software Engineering & Engineering Research Institute, Gyeongsang National University, Jinju 52828, Korea) and Jung Kyu Park (Department of Computer Software Engineering, Changshin University, Changwon 51352, Korea; corresponding author). † This paper is an extended version of a paper published in the IEEE International Conference on Consumer Electronics (ICCE) 2020, Las Vegas, NV, USA, 4–6 January 2020. Received: 14 November 2020; Accepted: 16 December 2020; Published: 21 December 2020. Abstract: The demand for mass storage devices has become an inevitable consequence of the explosive increase in data volume. The three-dimensional (3D) vertical NAND (V-NAND) and quad-level cell (QLC) technologies rapidly accelerate the capacity increase of flash memory based storage systems, such as SSDs (Solid State Drives). Massive capacity SSDs adopt dozens or hundreds of flash memory chips in order to implement large capacity storage. However, employing such a large number of flash chips increases the error rate in SSDs. A RAID-like technique inside an SSD has been used in a variety of commercial products, along with various studies, in order to protect user data. With the advent of new types of massive storage devices, studies on the design of RAID-like protection techniques for such huge capacity SSDs are important and essential. In this paper, we propose a massive SSD-Aware Parity Logging (mSAPL) scheme that protects against n-failures at the same time in a stripe, where n is protection strength that is specified by the user.

IBM Spectrum Scale CSI Driver for Container Persistent Storage

IBM Spectrum Scale CSI Driver for Container Persistent Storage. Abhishek Jain, Andrew Beattie, Daniel de Souza Casali, Deepak Ghuge, Harald Seipp, Kedar Karmarkar, Muthu Muthiah, Pravin P. Kudav, Sandeep R. Patil, Smita Raut, Yadavendra Yadav. Redpaper, IBM Redbooks, April 2020, REDP-5589-00. Note: Before using this information and the product it supports, read the information in “Notices” on page ix. First Edition (April 2020). This edition applies to Version 5, Release 0, Modification 4 of IBM Spectrum Scale. © Copyright International Business Machines Corporation 2020. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.
Contents
Notices
Trademarks
Preface
Authors
Now you can become a published author, too
Comments welcome
Stay connected to IBM Redbooks
Chapter 1. IBM Spectrum Scale and Containers Introduction
1.1 Abstract
1.2 Assumptions
1.3 Key concepts and terminology
1.3.1 IBM Spectrum Scale
1.3.2 Container runtime
1.3.3 Container Orchestration System
1.4 Introduction to persistent storage for containers Flex volumes
1.4.1 Static provisioning
1.4.2 Dynamic provisioning
1.4.3 Container Storage Interface (CSI)
1.4.4 Advantages of using IBM Spectrum Scale storage for containers
Chapter 2. Architecture of IBM Spectrum Scale CSI Driver
2.1 CSI Component Overview
2.2 IBM Spectrum Scale CSI driver architecture

Cygwin User's Guide

Cygwin User’s Guide. Copyright © Cygwin authors. Permission is granted to make and distribute verbatim copies of this documentation provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this documentation under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this documentation into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation.
Contents
1 Cygwin Overview
1.1 What is it?
1.2 Quick Start Guide for those more experienced with Windows
1.3 Quick Start Guide for those more experienced with UNIX
1.4 Are the Cygwin tools free software?
1.5 A brief history of the Cygwin project
1.6 Highlights of Cygwin Functionality
1.6.1 Introduction
1.6.2 Permissions and Security
1.6.3 File Access
1.6.4 Text Mode vs. Binary Mode
1.6.5 ANSI C Library
1.6.6 Process Creation
1.6.6.1 Problems with process creation
1.6.7 Signals
1.6.8 Sockets
1.6.9 Select
1.7 What’s new and what changed in Cygwin
1.7.1 What’s new and what changed in 3.2

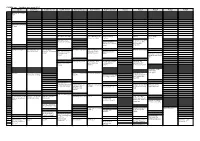

FOSDEM 2017 Schedule

FOSDEM 2017 - Saturday 2017-02-04 (1/9). Rooms: Janson, K.1.105 (La Fontaine)…, H.2215 (Ferrer), H.1301 (Cornil), H.1302 (Depage), H.1308 (Rolin), H.1309 (Van Rijn), H.2111, H.2213, H.2214, H.3227, H.3228. 09:30 Welcome to FOSDEM 2017 09:45 10:00 Kubernetes on the road to GIFEE 10:15 10:30 Welcome to the Legal Python Winding Itself MySQL & Friends Opening Intro to Graph … Around Datacubes Devroom databases Free/open source Portability of containers software and drones Optimizing MySQL across diverse HPC 10:45 without SQL or touching resources with my.cnf Singularity Welcome! 11:00 Software Heritage The Veripeditus AR Let's talk about The State of OpenJDK MSS - Software for The birth of HPC Cuba Game Framework hardware: The POWER Make your Corporate planning research Applying profilers to of open. CLA easy to use, aircraft missions MySQL Using graph databases please! 11:15 in popular open source CMSs 11:30 Jockeying the Jigsaw The power of duck Instrumenting plugins Optimized and Mixed License FOSS typing and linear for Performance reproducible HPC Projects algebra Schema Software deployment 11:45 Incremental Graph Queries with 12:00 CloudABI LoRaWAN for exploring Open J9 - The Next Free It's time for datetime Reproducible HPC openCypher the Internet of Things Java VM sysbench 1.0: teaching Software Installation on an old dog new tricks Cray Systems with EasyBuild 12:15 Making License 12:30 Compliance Easy: Step Diagnosing Issues in Webpush notifications Putting Your Jobs Under Twitter Streaming by Open Source Step. Java Apps using for Kinto Introducing gh-ost the Microscope using Graph with Gephi Thermostat and OGRT Byteman.

Advanced Services for Oracle Hierarchical Storage Manager

ORACLE DATA SHEET. Advanced Services for Oracle Hierarchical Storage Manager. The complex challenge of managing the data lifecycle is simply about putting the right data, on the right storage tier, at the right time. Oracle Hierarchical Storage Manager software enables you to reduce the cost of managing data and storing vast data repositories by providing a powerful, easily managed, cost-effective way to access, retain, and protect data over its entire lifecycle. However, your organization must ensure that your archive software is configured and optimized to meet strategic business needs and regulatory demands. Oracle Advanced Services for Oracle Hierarchical Storage Manager delivers the configuration expertise, focused reviews, and proactive guidance to help optimize the effectiveness of your solution, all delivered by Oracle Advanced Support Engineers. Simplify Storage Management: Putting the right information on the appropriate tier can reduce storage costs and maximize return on investment (ROI) over time. Oracle Hierarchical Storage Manager software actively manages data between storage tiers to let companies exploit the substantial acquisition and operational cost differences between high-end disk drives, SATA drives, and tape devices. Oracle Hierarchical Storage Manager software provides
KEY BENEFITS
• Preproduction Readiness Services including critical patches and updates, using proven methodologies and recommended practices
• Production Optimization Services including configuration reviews and performance reviews to analyze existing