The Nonlinear World

Recommended publications

-

Cumulative Michigan Notable Books List

| Author(s) | Title | Publisher | Genre | Year |
|---|---|---|---|---|
| Abbott, Jim | Imperfect | Ballantine Books | Memoir | 2013 |
| Abood, Maureen | Rose Water and Orange Blossoms: Fresh & Classic Recipes from My Lebanese Kitchen | Running Press | Non-fiction | 2016 |
| Ahmed, Saladin | Abbott | Boom Studios | Fiction | 2019 |
| Airgood, Ellen | South of Superior | Riverhead Books | Fiction | 2012 |
| Albom, Mitch | Have a Little Faith: A True Story | Hyperion | Non-fiction | 2010 |
| Alexander, Jeff | The Muskegon: The Majesty and Tragedy of Michigan's Rarest River | Michigan State University Press | Non-fiction | 2007 |
| Alexander, Jeff | Pandora's Locks: The Opening of the Great Lakes-St. Lawrence Seaway | Michigan State University Press | Non-fiction | 2010 |
| Amick, Steve | The Lake, the River & the Other Lake: A Novel | Pantheon Books | Fiction | 2006 |
| Amick, Steve | Nothing But a Smile: A Novel | Pantheon Books | Fiction | 2010 |
| Anderson, Godfrey J. | A Michigan Polar Bear Confronts the Bolsheviks: A War Memoir: the 337th Field Hospital in Northern Russia | William B. Eerdmans' Publishing Co. | Memoir | 2011 |
| Anderson, William M. | The Detroit Tigers: A Pictorial Celebration of the Greatest Players and Moments in Tigers' History | Dimond Communications | Photo-essay | 1992 |
| Andrews, Nancy | Detroit Free Press Time Frames: Our Lives in 2001, our City at 300, Our Legacy in Pictures | Detroit Free Press | Photography | 2003 |
| Appleford, Annie | M is for Mitten: A Michigan Alphabet Book | Sleeping Bear Press | Children's | 2000 |
| Armour, David | 100 Years at Mackinac: A Centennial History of the Mackinac Island State Park Commission, 1895-1995 | Mackinac Island State Historic Parks | History | 1996 |
| Arnold, Amy & Conway, Brian | Michigan Modern: Design that Shaped America | Gibbs Smith | Non-fiction | 2017 |
| Arnow, Harriette Louisa Simpson | Between the Flowers | Michigan State University Press | Fiction | 2000 |

Bureau of History, Michigan Historical Commission, Michigan Department of Ashlee, Laura R. -

David S. Moore, Ph.D. E-Mail: [email protected] W Cell: 626-644-5091 Webpage

David S. Moore, Ph.D.
E-mail: [email protected]
Cell: 626-644-5091
Webpage: http://pzacad.pitzer.edu/~dmoore/

EMPLOYMENT
2001 – 2019 Professor of Psychology, Pitzer College and Claremont Graduate University
2016 – 2018 Director, National Science Foundation’s Developmental Sciences Program
1989 – 2019 Director, Claremont Infant Study Center, Pitzer College
Spring, 2009 Visiting Researcher at Kumamoto International University, Kumamoto, Japan
2005 – 2006 Guest Professor of Psychology, Sarah Lawrence College
2004 – 2006 Professor of Psychology, Yeshiva University
Spring, 2004 Visiting Professor of Psychology, Florida International University
1995 – 2001 Associate Professor of Psychology, Pitzer College and Claremont Graduate School
1989 – 1995 Assistant Professor of Psychology, Pitzer College and Claremont Graduate School
1988 – 1989 Post-Doctoral Fellowship in Developmental Psychology, City University of NY

EDUCATION
1988 Ph.D. Harvard University, Psychology. Major: Developmental Psychology, Minors: Psychobiology, Social Psychology. Dissertation: "Auditory and Visual Integration in Very Young Infants". Advisor: Professor Jerome Kagan
1985 M.A. Harvard University, Psychology
1983 B.A. Tufts University, Psychology, Magna Cum Laude

HONORS AND GRANTS
2019 – 2023 Psychometric Intelligent Agent Graphical Environment and Testbed (PIAGET). Defense Advanced Research Projects Agency (BAA Machine Common Sense [HR001119S0005]: $1,999,774 total costs). P.I.: Victoria Romero. Consultants: David S. Moore, Lisa M. Oakes, & Koleen McCrink
2016 Recipient of the American Psychological Association’s William James Book Award, for an “outstanding” book that synthesizes “diverse subfields of psychology & related disciplines”
2016 Recipient of the American Psychological Association’s Eleanor Maccoby Book Award, for a book that has had a “profound effect” on Developmental Psychology
2010 – 2013 MRI: Acquisition of a High-density Electrophysiology Laboratory for Intercollegiate Research and Training in Cognitive Neuroscience. -

COMBINED BOOK EXHIBIT® / Pennsylvania & Ohio Library

COMBINED BOOK EXHIBIT® / Pennsylvania & Ohio Library Associations 11.___ DEWEY DECIMAL 080 - General Collections CLASSIFICATION: Principles and 000 - Generalities Application Revised and Expanded for 18.___ EAGLES ENCYCLOPEDIA, THE. 1.___ FAXUSA: A Directory of Facsimile DDC 22, 3rd Ed. Chan, Lois M. and Mitchell, Didinger, Roy and Lyons, Robert S.. Temple Numbers for Businesses and Joan S.. OCLC Dewey Decimal Classification University Press $35 HC (1-59213-449-1) Organizations Nationwide, 2005 12th Ed $40 HC (0-910608-72-5) 2003 £27, Euro 44* 2005 (2005 PALA Only) Omnigraphics Inc. $170 PB (0-7808-0753-7) 12.___ DEWEY DECIMAL CLASSIFICATION 19.___ GREEK SOUL: Memories of a 2004 (13th edition, ISBN 0-7808-0806-1, List AND RELATIVE INDEX ABRIDGED EDITION Detroit Childhood Sandris, Stelyani. Kay price $189, School & library price $170, ready 14. Devised by Dewey, Melvil. Edited by Joan Loveland $9.95 PB (0-595-35675-3) 2005 October 2005) S. Mitchell et al.. OCLC Dewey Decimal 20.___ HANDBOOK OF MASCOTS & Classification $99 HC (0-910608-73-3) 2003 2.___ ONLY) THE BEST/SOLO LO MEJOR: NICKNAMES, THE, 2nd Ed.. Fournier, Peter £70 (incl. Shipping UK) Euro 100 (excl. 100 Great Quotations and Proverbs/100 J.. Raja & Associates $13.95 PB (0-9741- shipping)* Gran Refranes y Frases Celebres Avila, 1364-6) 2004 Reviews: Washington Times Adrienne (ed.). With Pockell, Les. Warner 13.___ DEWEY DECIMAL CLASSIFICATION 5/04; Midwest Book Review 5/04* Trade Paperback/Warner Books/Time Warner AND RELATIVE INDEX. EDITION 22 FOUR $9.95 PB (0-446-69513-0) 2005 (2005 OHLC 21.___ STELLA'S STORY: Fighting for Life VOLUMES. -

Publishers and ISBN Prefixes

Date: 10/24/2013 Publishers and ISBN Prefixes

| Public Imprint | Prefix |
|---|---|
| Absolute Press | 0-948230 0-9506785 1-4729 1-899791 1-904573 1-906650 |
| Adlard Coles | 0-229 0-7136 1-4081 1-4729 |
| Aerie | 0-8125 0-938819 1-4299 1-4668 1-55902 |
| Arden Shakespeare | 1-904271 |
| AVA Publishing | 2-88479 2-940373 2-940411 2-940496 |
| BCF Books | 0-9550026 |
| Bedford/St. Martin's | 0-312 0-314 0-324 0-395 0-495 0-534 0-538 0-547 0-618 0-669 0-7593 0-7895 0-87779 1-4130 1-4180 1-4188 1-4266 1-4282 1-4299 1-4576 1-55431 2-03 |
| Behind the Wheel | 1-4272 |
| Berg Publishers | 0-485 0-8264 0-85496 0-907582 1-8452 1-84520 1-84788 1-85973 |
| Bloomsbury Academic | 0-225 0-264 0-304 0-340 0-485 0-520 0-567 0-7131 0-7136 0-7185 0-7201 0-7220 0-7475 0-8044 0-8192 0-8264 0-85305 0-85496 0-85532 0-85785 0-86012 0-86187 0-87609 0-904286 0-907582 0-907628 0-950688 0-9506882 1-4081 1-4088 1-4411 1-4482 1-4725 1-4729 1-55540 1-55587 1-56338 1-62356 1-62892 1-78093 1-84190 1-84371 1-84520 1-84706 1-84714 1-84725 1-84788 1-84930 1-84966 1-85285 1-85539 1-85567 1-85973 1-86047 1-870626 1-871478 1-898149 1-900512 1-902459 1-905422 |
| Bloomsbury Arden Shakespeare | 0-17 0-416 0-567 1-4080 1-4081 1-4411 1-4725 1-84480 1-903436 1-904271 |
| Bloomsbury Methuen Drama | 0-413 0-416 0-7136 1-4081 1-4725 1-78093 |
| Bloomsbury Press | 1-59691 1-60819 1-62040 |

-

Children's Literature

Children's Literature Handbooks Carpenter, H., & Prichard, M. (1984). The Oxford companion to children's literature. Oxford Oxfordshire ; New York: Oxford University Press. Dictionary of literary biography. Detroit, Mich: Gale Research Co. v. 42: American writers for children before 1900 v. 52: American writers for children since 1960: fiction v. 62: American writers for children since 1960: poets, illustrators, and nonfiction authors v. 141: British children's writers, 1880 - 1914 v. 160: British children's writers, 1914 - 1960 v. 161: British children's writers, since 1960 v. 163: British children's writers, 1800 - 1880 Fisher, M. T. (1975). Who's who in children's books. New York: Holt, Rinehart and Winston. Harris, L. L., Gale Research Company, & Gale Research Inc. Nineteenth-Century Literature Criticism, , Irregular; Vol. 1. Helbig, A., & Perkins, A. (2002). Dictionary of American children's fiction, 1995-1999 : Books of recognized merit. Westport, Conn: Greenwood Press. Helbig, A., & Perkins, A. (1996). Dictionary of American children's fiction, 1990-1994 : Books of recognized merit. Westport, Conn: Greenwood Press. Helbig, A., & Perkins, A. (1993). Dictionary of American children's fiction, 1985-1989 : Books of recognized merit. Westport, Conn: Greenwood Press. Helbig, A., & Perkins, A. (1989). Dictionary of British children's fiction : Books of recognized merit. New York: Greenwood Press. Helbig, A., & Perkins, A. (1986). Dictionary of American children's fiction, 1960-1984 : Recent books of recognized merit. Westport, Conn: Greenwood Press. Helbig, A., & Perkins, A. (1985). Dictionary of American children's fiction, 1859-1959 : Books of recognized merit. Westport, Conn: Greenwood Press. Opie, I. A., & Opie, P. (1997). The Oxford dictionary of nursery rhymes (New ed.). -

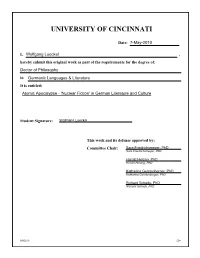

University of Cincinnati

Atomic Apocalypse – ‘Nuclear Fiction’ in German Literature and Culture

A dissertation submitted to the Graduate School of the University of Cincinnati In partial fulfillment of the requirements for the degree of DOCTORATE OF PHILOSOPHY (Ph.D.) in the Department of German Studies of the College of Arts and Sciences 2010 by Wolfgang Lueckel B.A. (equivalent) in German Literature, Universität Mainz, 2003 M.A. in German Studies, University of Cincinnati, 2005 Committee Chair: Sara Friedrichsmeyer, Ph.D. Committee Members: Todd Herzog, Ph.D. (second reader) Katharina Gerstenberger, Ph.D. Richard E. Schade, Ph.D.

Abstract

In my dissertation “Atomic Apocalypse – ‘Nuclear Fiction’ in German Literature and Culture,” I investigate the portrayal of the nuclear age and its most dreaded fantasy, the nuclear apocalypse, in German fictionalizations and cultural writings. My selection contains texts of disparate natures and provenance: about fifty plays, novels, audio plays, treatises, narratives, films from 1946 to 2009. I regard these texts as a genre of their own and attempt a description of the various elements that tie them together. The fascination with the end of the world that high and popular culture have developed after 9/11 partially originated from the tradition of nuclear fiction since 1945. The Cold War has produced strong and lasting apocalyptic images in German culture that reject the traditional biblical apocalypse and that draw up a new worldview. In particular, German nuclear fiction sees the atomic apocalypse as another step towards the technical facilitation of genocide, preceded by the Jewish Holocaust with its gas chambers and ovens. -

Penny C. Sansevieri

An Insider's Guide to Publicizing and Marketing Your Book PENNY C. SANSEVIERI New York

FROM BOOK TO BESTSELLER An Insider's Guide to Publicizing and Marketing Your Book by Penny C. Sansevieri © 2007. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, mechanical or electronic, including photocopying and recording, or by any information storage and retrieval system, without permission in writing from author or publisher (except by a reviewer, who may quote brief passages and/or show brief video clips in a review). ISBN: 1-60037-088-8 (Hardcover) ISBN: 1-60037-085-3 (Paperback) ISBN: 1-60037-086-1 (eBook) ISBN: 1-60037-087-X (Audio) Published by: Morgan James Publishing, LLC 1225 Franklin Ave. Ste 325 Garden City, NY 11530-1693 Toll Free 800-485-4943 www.MorganJamesPublishing.com Interior Design by: Bonnie Bushman [email protected]

MORE BOOKS BY PENNY C. SANSEVIERI Nonfiction Get Published Today (Morgan James Publishing 2006) From Book to Bestseller (PublishingGold.com, Inc. 2005) No More Rejections: Get Published Today! (PublishingGold.com, Inc. 2005) No More Rejections: Get Published Today! Infinity Publishing Get Published! An author's guide to the online publishing revolution (1st Books, 2001) Fiction The Cliffhanger (iUniverse, 2000) Candlewood Lake (iUniverse, 2006)

To subscribe to our free newsletter send an e-mail to subscribe@amarketingexpert.com We'd love your feedback. Here's how to contact us: Author Marketing Experts, Inc. Post Office Box San Diego, CA 92142 www.amarketingexpert.com [email protected]

For Frans The best dad a girl could ever have. -

General Works

THE BRITISH LIBRARY THE AMERICAN CIVIL RIGHTS MOVEMENT A GUIDE TO MATERIALS IN THE BRITISH LIBRARY by Jean Kemble THE ECCLES CENTRE FOR AMERICAN STUDIES ISBN: 0-7123-4417-9 CONTENTS Introduction General Works Phases of the Movement Origins School Desegregation Bus Boycotts Sit-ins Freedom Rides Voter Registration and the Voting Rights Act of 1965 Black Power Civil Rights Organisations SNCC SCLC CORE NAACP National Urban League Participants in the Movement Students/Youths Whites in the Movement Women in the Movement Biographies and Autobiographies The Federal Government Executive Legislative Legal/Judicial States Alabama Arkansas Florida Georgia Louisiana Mississippi North Carolina Tennessee Virginia Washington, DC Other States Other Topics Leadership Martin Luther King, Jr. Malcolm X Public Opinion White Reaction Political Consequences Social and Economic Consequences Music of the Movement INTRODUCTION The Eccles Centre for American Studies in the British Library was established in 1991 both to promote the Library’s North American collections through bibliographical guides and exhibitions and to respond to enquiries from students, academics and the general public concerning all aspects of American history, literature and culture. During the last six years the civil rights movement of the 1950-60s has proved to be one of the most popular areas of research, particularly among undergraduates and sixth-form students. The enquiries have covered many different aspects of the movement: school desegregation, bus boycotts, sit-ins, marches, the involvement of white northern college students, the actions of individuals such as Rosa Parks and Martin Luther King, Jr., and the reactions of white southerners and the federal government. This guide will facilitate research on these topics and many others. -

ISBN Prefix Summary Report Date: 2/24/16 Publisher Public Imprint ISBN Prefix A&C Black A&C Black 0-7136 0-7475 1-4081 1-4088 1-4725 1-4729 1-905615 Bedford/St

ISBN Prefix Summary Report Date: 2/24/16 Publisher Public Imprint ISBN Prefix A&C Black A&C Black 0-7136 0-7475 1-4081 1-4088 1-4725 1-4729 1-905615 Bedford/St. Martin's Bedford/St. Martin's 0-312 0-618 1-319 1-4576 Bloomsbury Academic AVA Publishing 2-88479061 2-88479104 2-88479105 2-88479110 2-94037301 2-94037306 2-94037314 2-94037315 2-94037317 2-94037319 2-94037323 2-94037329 2-94037335 2-94037340 2-94037343 2-94037344 2-94037346 2-94037350 2-94037351 2-94037356 2-94037357 2-94037360 2-94037361 2-94037363 2-94037368 2-94037370 2-94037371 2-94037372 2-94037374 2-94037375 2-94037376 2-94037380 2-94037381 2-94037382 2-94037383 2-94037384 Bloomsbury Academic AVA Publishing 2-94037385 2-94037387 2-94037388 2-94037389 2-94037390 2-94037391 2-94037398 2-94041100 2-94041104 2-94041105 2-94041107 2-94041108 2-94041109 2-94041110 2-94041112 2-94041113 2-94041115 2-94041116 2-94041117 2-94041118 2-94041121 2-94041122 2-94041126 2-94041127 2-94041128 2-94041129 2-94041130 2-94041131 2-94041133 2-94041134 2-94041135 2-94041136 2-94041138 2-94041139 2-94041140 2-94041141 2-94041142 2-94041144 2-94041147 2-94041148 2-94041149 2-94041150 2-94041151 2-94041152 2-94041153 2-94041155 2-94041156 2-94041158 2-94041161 2-94041162 2-94041163 2-94041166 Bloomsbury Academic AVA Publishing 2-94041171 2-94041174 2-94041175 2-94041176 2-94041177 2-94041178 2-94041179 2-94041181 2-94041186 2-94041187 2-94041190 2-94041192 2-94041194 2-94041195 2-94041197 2-94041198 2-94049621 Bloomsbury Academic 0-264 0-304 0-340 0-485 0-520 0-567 0-7136 0-7156 0-7201 0-7475 -

BOOKS by TRUMAN SCHOLARS As of July 2017

BOOKS BY TRUMAN SCHOLARS As of July 2017

Abrams, Stacey (MS 94) [under the pseudonym Selena Montgomery]: Deception (Avon, 2009) Reckless (Avon, 2008) Hidden Sins (HarperTorch, 2006) Secrets and Lies (Avon, 2006) Never Tell (St. Martin’s Paperbacks, 2004) The Art of Desire (Harlequin Kimani, 2002) Power of Persuasion (Harlequin Kimani, 2002) Rules of Engagement (Harlequin Kimani, 2001)

Acker, Russell (TX 89) Merchants and Guardians: Balancing U.S. Interest in Global Space Commerce (International Space Policy Forum, 1999) Exploring the Unknown Volume IV: Accessing Space: Selected Documents in the History of the U.S. Civil Space Program (CreateSpace Independent Publishing Platform, 2008) with Roger D. Launius

Adelson, Wendi (FL 00) This Is Our Story (Carolina Academic Press, 2013)

Alexander, Michelle (OR 87) The New Jim Crow: Mass Incarceration in the Age of Colorblindness (The New Press, 2012)

Ansolabehere, Stephen (MN 82) American Government: Power and Purpose (Fourteenth Core Edition) (W. W. Norton & Company, 2017) with Benjamin Ginsberg Cheap and Clean: How Americans Think about Energy in the Age of Global Warming (The MIT Press, 2016) with David M. Konisky The End of Inequality: One Person, One Vote and the Transformation of American Politics (W.W. Norton & Company, 2008) with James M. Snyder Jr Going Negative: How Political Ads Shrink and Polarize the Electorate (Free Press, 1996) with Shanto Iyengar The Media Game: American Politics in the Television Age (Longman, 1992) with Roy Behr and Shanto Iyengar

Aguiar, Mark (CT 86) The Increase in Leisure Inequality, 1965-2005 (AEI Press, 2009) with Erik Hurst

Araiza, William (TX 81) Animus: A Short Introduction to Bias in the Law (NYU Press, 2017) Enforcing the Equal Protection Clause: Congressional Power, Judicial Doctrine, and Constitutional Law (NYU Press, 2016) Questions and Answers: The First Amendment (LexisNexis, 2015) with Russell Weaver First Amendment Law; Freedom of Expression and Freedom of Religion, 3rd ed. -

Do Real Work, Not Homework Brian P

9 Do Real Work, Not Homework

Brian P. Coppola

9.1 Thinking About Real Work

9.1.1 Defining Real Work: Authentic Learning Experiences

In his 1997 essay, “Situated Cognition and How to Overcome It,” Carl Bereiter [1] uses the fictionalized experiences of two students, Flora and Dora, to make an important and often-repeated point about learning. Both of these students pass the same Algebra I course with flying colors. But in Algebra II, while Flora continues her success, Dora barely passes. Bereiter’s point is that in Flora’s case, she actually learned the mathematics of algebra in her Algebra I class, and so she could transfer (transport) this learning to Algebra II. Dora, it is supposed, did not learn algebra, but learned about doing algebra problems, and succeeded in Algebra I through rote repetition and recognition.

Many versions of the Flora/Dora story have featured in discussions of science learning, where contrast is made between meaningful learning and rote learning [2, 3], or the distinction between learning about science, learning science, and learning to be a scientist is emphasized [4]. Unlike a traditional apprenticeship, where one learns specific artisanship in a materially relevant setting under the direction of a master craftsperson, school learning is distilled, abstracted, and idealized, with the validation of correct answers standing in as the nearly universal indicator of learning. Even at the introductory college level, there is compelling evidence that students can produce or select the proper answer in a way that is disconnected from a deep understanding of the underlying subject matter [5–10]. -

Democratizing E-Book Retailing

3,000 plus Join us and more than 3,000 publishers in Democratizing E-Book Retailing 10 Finger Press Acrobat Books Alan Phillips 121 Publications Action Publishing LLC Alazar Press 1500 Books Active Interest Media, Inc. Alban Books 3 Finger Prints Active Parenting Albion Press 3G Publishing, Inc. Adair Digital Alchimia 498 Productions, LLC Adaptive Studios Alden-Swain Press 4th & Goal Publishing Addicus Books Aleph Book Company Pvt.Ltd 5Points Publishing Adlai E. Stevenson III Algonquin Books 5x5 Publications Adm Books Ali Warren 72nd St Books Adriel C. Gray Alight/Swing Street Publishing A & A Johnston Press Advanced Perceptions Inc. Alinari 24 Ore A & A Publishers Inc Advanced Publishing LLC All Clear Publishing A C Hubbard Advantage Books All In One Books - Gregory Du- A Sense Of Nature Adventure Street Press LLC pree A&C Publishing Adventures Unlimited Press Allen & Unwin A.R.E. Press Aepisaurus Publishing, LLC Allen Press Inc AA Publishing Aesop Press ALM Media, LLC Aadarsh Pvt Ltd Affirm Press Alma Books AAPC Publishing AFG Weavings LLC Alma Rose Publishing AAPPL Aflame Books Almaden Books Aark House Publishing AFN Alpha International Aaron Blake Publishers African American Images Altruist Publishing Abdelhamid African Books Collective AMACOM Abingdon Press Afterwords Press Amanda Ballway Abny Media Group AGA Institute Press AMERICA SERIES Aboriginal Studies Press Aggor Publishers LLC American Academy of Abrams AH Comics, Inc. Oral Medicine Absolute Press Ajour Publishing House American Academy of Pediatrics Abstract Sounds Books, Ltd. AJR Publishing American Automobile Association Academic Foundation AKA Publishing Pty Ltd (AAA) Academy of Nutrition & Dietetics Akmaeon Publishing, LLC American Bar Association Acapella Publishing Aladdin American Cancer Society, Inc.