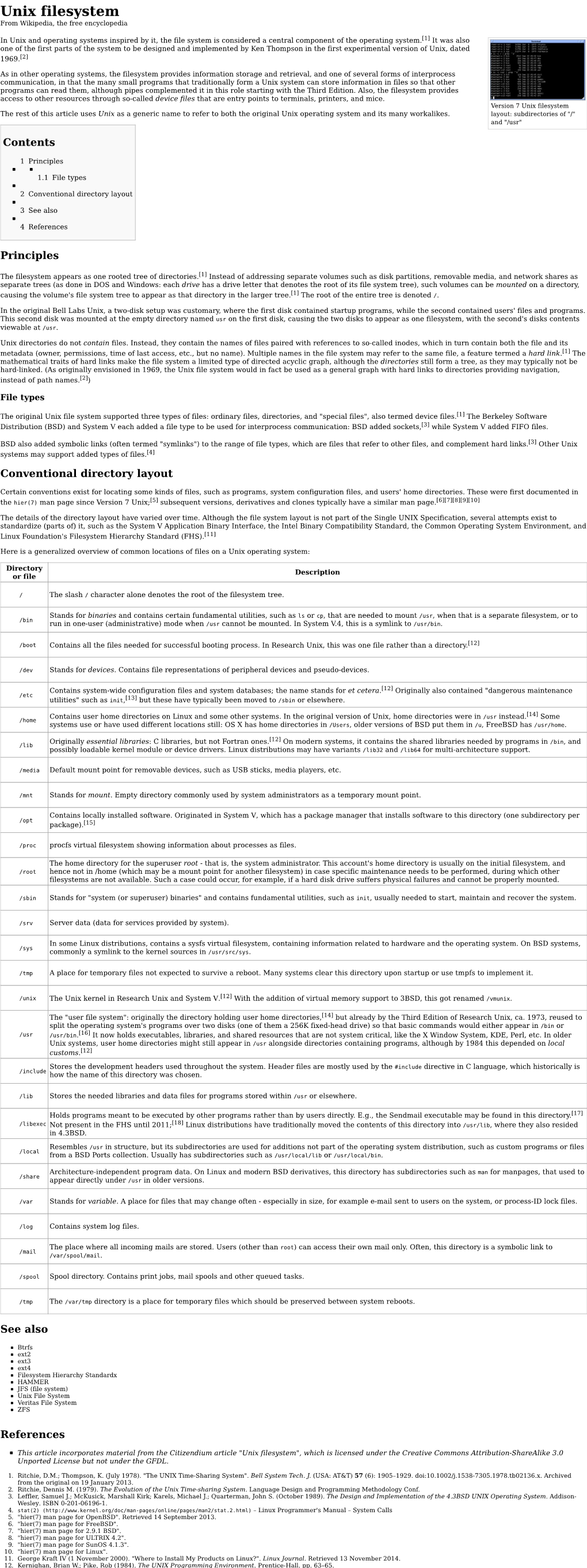

Unix Filesystem from Wikipedia, the Free Encyclopedia


Recommended publications

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation. Sakis Kasampalis. Kongens Lyngby 2010, IMM-MSC-2010-63. Technical University of Denmark, Department Of Informatics, Building 321, DK-2800 Kongens Lyngby, Denmark. Phone +45 45253351, Fax +45 45882673, www.imm.dtu.dk

Abstract

In this work I focus on Copy On Write based file systems. Copy On Write is used in modern file systems to provide (1) metadata and data consistency using transactional semantics, and (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administer file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks.

Most computers are already based on multi-core and multiple processor architectures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

PDF, 32 Pages

Helge Meinhard / CERN, V2.0, 30 October 2015

HEPiX Fall 2015 at Brookhaven National Lab

After 2004, the lab, located on Long Island in the State of New York, U.S.A., was host to a HEPiX workshop again. Access to the site was considerably easier for the registered participants than 11 years ago. The meeting took place in a very nice and comfortable seminar room well adapted to the size and style of a meeting such as HEPiX. It was equipped with advanced (sometimes too advanced for the session chairs to master!) AV equipment and power sockets at each seat. Wireless networking worked flawlessly and with good bandwidth. The welcome reception on Monday at Wading River on the Long Island Sound and the workshop dinner on Wednesday on the ocean coast in Patchogue showed more of the beauty of the rather natural region around the lab. For those interested, the hosts offered tours of the BNL RACF data centre as well as of the STAR and PHENIX experiments at RHIC. The meeting ran very smoothly thanks to an efficient and experienced team of local organisers headed by Tony Wong, who as North-American HEPiX co-chair also co-ordinated the workshop programme.

Monday 12 October 2015

Welcome (Michael Ernst / BNL)

On behalf of the lab, Michael welcomed the participants, expressing his gratitude to the audience for having accepted BNL's invitation. He emphasised the importance of computing for high-energy and nuclear physics. He then introduced the lab, focusing on physics, chemistry, biology, material science etc. The total head count of BNL-paid people is close to 3'000.

Userland Tools and Techniques for Board Bring up and Systems Integration

Userland Tools and Techniques for Board Bring Up and Systems Integration

ELC 2012, HY Research LLC, http://www.hy-research.com/, Feb 5, 2012, (C) 2012 HY Research LLC

Agenda

* Introduction
* What?
* Why bother with userland?
* Common SoC interfaces
* Typical Scenario
* Kernel setup
* GPIO/UART/I2C/SPI/Other
* Questions

Introduction

* SoC offer a lot of integrated functionality
* System designs differ by outside parts
* Most mobile systems are SoC
* "CPU boards" for SoCs
* Available BSP for starting
* Vendor or other sources
* Common Unique components
  * Memory (RAM)
  * Storage ("flash")
  * IO
  * Displays
  * Power supplies

What?

* IO related items
  * I2C
  * SPI
  * UART
  * GPIO
  * USB

Why userland?

* Easier for non kernel savvy
* Quicker turn around time
* Easier to debug
* Often times available already
  * Sample userland from BSP/LSP vendor
* Kernel driver is not ready

Common SoC interfaces

Most SoC have these and more:

* Pinmux
* UART
* GPIO
* I2C
* SPI
* USB
* Not discussed: Audio/Displays

Typical Scenario

Custom board:

* Load code/Bring up memory
* Setup memory controller for part used
* Load Linux
* Toggle lines on board to verify

Prototype based on demo/eval board:

* Start with board at a shell prompt
* Get newly attached hw to work

Kernel Setup

Additions to typical configs:

* Enable UART support (typically done already)
* Enable I2C support along with drivers for the SoC
  * (CONFIG_I2C + other)
* Enable SPIdev
  * (CONFIG_SPI + CONFIG_SPI_SPIDEV)
  * Add SPIDEV to board file
* Enable GPIO sysfs
  * (CONFIG_GPIO + other + CONFIG_GPIO_SYSFS)
* Enable USB
  * Depends on OTG vs normal, etc.
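Once "Enable GPIO sysfs" is in place, toggling a line from userland comes down to a few file writes. The following is a minimal sketch, not taken from the slides: it assumes the legacy sysfs GPIO layout (`/sys/class/gpio/export`, `gpioN/direction`, `gpioN/value`), and the function names and the pin number in the usage comment are hypothetical. The `root` parameter exists only so the logic can be tried against an ordinary directory.

```python
from pathlib import Path

# Legacy sysfs GPIO interface; present only when the kernel is built
# with CONFIG_GPIO_SYSFS. The path is the conventional default.
GPIO_ROOT = Path("/sys/class/gpio")


def export_pin(pin: int, root: Path = GPIO_ROOT) -> Path:
    """Ask the kernel to expose gpio<pin> and return its directory."""
    pin_dir = root / f"gpio{pin}"
    if not pin_dir.exists():
        # On real sysfs, the kernel creates gpio<pin>/ in response.
        (root / "export").write_text(str(pin))
    return pin_dir


def set_output(pin: int, high: bool, root: Path = GPIO_ROOT) -> None:
    """Configure gpio<pin> as an output and drive it high or low."""
    pin_dir = export_pin(pin, root)
    (pin_dir / "direction").write_text("out")
    (pin_dir / "value").write_text("1" if high else "0")


# Hypothetical usage on a board (usually needs root privileges):
#   set_output(17, True)   # drive line 17 high
#   set_output(17, False)  # drive it low again
```

On real hardware the pin numbering depends on the SoC and board file, so the number passed in has to come from the board documentation.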

Flexible Lustre Management

Flexible Lustre management

Making less work for Admins

ORNL is managed by UT-Battelle for the US Department of Energy

How do we know Lustre condition today

• Polling proc / sysfs files
  – The knocking on the door model
  – Parse stats, rpc info, etc for performance deviations.
• Constant collection of debug logs
  – Heavy parsing for common problems.
• The death of a node
  – Have to examine kdumps and/or lustre dump

Origins of a new approach

• Requirements for Linux kernel integration.
  – No more proc usage
  – Migration to sysfs and debugfs
  – Used to configure your file system.
  – Started in Lustre 2.9 and still ongoing.
• Two ways to configure your file system.
  – On MGS server run lctl conf_param …
    • Directly accessed proc seq_files.
  – On MGS server run lctl set_param -P
    • Originally used an upcall to lctl for configuration
    • Introduced in Lustre 2.4 but was broken until Lustre 2.12 (LU-7004)
  – Configuring file system works transparently before and after sysfs migration.

Changes introduced with sysfs / debugfs migration

• sysfs has a one item per file rule.
• Complex proc files moved to debugfs
• Moving to debugfs introduced permission problems
  – Only debugging files should be there.
  – Both debugfs and procfs have scaling issues.
• Moving to sysfs introduced the ability to send uevents
  – Item of most interest from LUG 2018 Linux Lustre client talk.
  – Both lctl conf_param and lctl set_param -P use this approach
    • lctl conf_param can set sysfs attributes without uevents. See class_modify_config()
  – We get life cycle events for free
  – udev is now involved.

What do we get by using udev?

• Under the hood
  – uevents are collected by systemd and then processed by udev rules
  – /etc/udev/rules.d/99-lustre.rules
  – SUBSYSTEM=="lustre", ACTION=="change", ENV{PARAM}=="?*", RUN+="/usr/sbin/lctl set_param '$env{PARAM}=$env{SETTING}'"
• You can create your own udev rule
  – http://reactivated.net/writing_udev_rules.html
  – /lib/udev/rules.d/* for examples
  – Add udev_log="debug" to /etc/udev.conf if you have problems
• Using systemd for long task.
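To make the matching logic of the 99-lustre.rules line concrete, here is a small illustrative sketch of what the rule does with a uevent's environment. It is not how udev itself is implemented: the function name `lctl_command` is hypothetical, and the parameter name in the usage comment is only an example value.

```python
from typing import Dict, List, Optional


def lctl_command(env: Dict[str, str]) -> Optional[List[str]]:
    """Return the lctl argv the rule would run for this uevent, or None.

    Mirrors the rule's match keys: SUBSYSTEM=="lustre", ACTION=="change",
    and ENV{PARAM}=="?*" (PARAM must be set and non-empty).
    """
    if env.get("SUBSYSTEM") != "lustre":
        return None
    if env.get("ACTION") != "change":
        return None
    param = env.get("PARAM", "")
    if not param:
        return None
    # An unset SETTING is treated here as an empty string (assumption).
    setting = env.get("SETTING", "")
    return ["/usr/sbin/lctl", "set_param", f"{param}={setting}"]


# Example with an illustrative parameter name:
#   lctl_command({"SUBSYSTEM": "lustre", "ACTION": "change",
#                 "PARAM": "osc.*.max_dirty_mb", "SETTING": "64"})
```

Events from other subsystems, or change events without a PARAM, fall through without running anything, which is why unrelated uevents do not trigger lctl.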

Wang Paper (Prepublication)

Riverbed: Enforcing User-defined Privacy Constraints in Distributed Web Services

Frank Wang (MIT CSAIL); Ronny Ko, James Mickens (Harvard University)

Abstract

Riverbed is a new framework for building privacy-respecting web services. Using a simple policy language, users define restrictions on how a remote service can process and store sensitive data. A transparent Riverbed proxy sits between a user’s front-end client (e.g., a web browser) and the back-end server code. The back-end code remotely attests to the proxy, demonstrating that the code respects user policies; in particular, the server code attests that it executes within a Riverbed-compatible managed runtime that uses IFC to enforce user policies. If attestation succeeds, the proxy releases the user’s data, tagging it with the user-defined policies. On the server-side, the Riverbed runtime places all data with com-

1.1 A Loss of User Control

Unfortunately, there is a disadvantage to migrating application code and user data from a user’s local machine to a remote datacenter server: the user loses control over where her data is stored, how it is computed upon, and how the data (and its derivatives) are shared with other services. Users are increasingly aware of the risks associated with unauthorized data leakage [11, 62, 82], and some governments have begun to mandate that online services provide users with more control over how their data is processed. For example, in 2016, the EU passed the General Data Protection Regulation [28]. Articles 6, 7, and 8 of the GDPR state that users must give consent for their data to be accessed.

ECE 598 – Advanced Operating Systems Lecture 19

ECE 598 – Advanced Operating Systems Lecture 19

Vince Weaver, http://web.eece.maine.edu/~vweaver, 7 April 2016

Announcements

• Homework #7 was due
• Homework #8 will be posted

Why use FAT over ext2?

• FAT simpler, easy to code
• FAT supported on all major OSes
• ext2 faster, more robust filename and permissions

btrfs

• B-tree fs (similar to a binary tree, but with pages full of leaves)
• overwrite filesystem (overwrite on modify) vs CoW
• Copy on write. When write to a file, old data not overwritten. Since old data not overwritten, crash recovery better. Eventually old data garbage collected
• Data in extents
• Copy-on-write
• Forest of trees:
  – sub-volumes
  – extent-allocation
  – checksum tree
  – chunk device
  – reloc
• On-line defragmentation
• On-line volume growth
• Built-in RAID
• Transparent compression
• Snapshots
• Checksums on data and meta-data
• De-duplication
• Cloning
  – can make an exact snapshot of file, copy-on-write; different than link, different inodes but same blocks

Embedded

• Designed to be small, simple, read-only?
• romfs
  – 32 byte header (magic, size, checksum, name)
  – Repeating files (pointer to next [0 if none]), info, size, checksum, file name, file data
• cramfs

ZFS

Advanced file system from Sun/Oracle. Similar in idea to btrfs; indirect still, not extent based?

ReFS

Resilient FS, Microsoft's answer to btrfs and zfs

Networked File Systems

• Allow a centralized file server to export a filesystem to multiple clients.
• Provide file level access, not just raw blocks (NBD)
• Clustered filesystems also exist, where multiple servers work in conjunction.
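The btrfs cloning point above (different inodes, same blocks, old data never overwritten) can be illustrated with a toy in-memory model. This is only a sketch of the idea under simplifying assumptions, not how btrfs is implemented: the class name `CowFile` is hypothetical, and "blocks" are immutable Python `bytes` objects standing in for on-disk extents.

```python
from typing import Iterable, List


class CowFile:
    """Toy file: an ordered list of references to immutable blocks."""

    def __init__(self, blocks: Iterable[bytes] = ()):
        # Each file owns its own block list (its "inode"), but the
        # block objects themselves may be shared between files.
        self.blocks: List[bytes] = list(blocks)

    def clone(self) -> "CowFile":
        """Create a clone: new block list, same underlying blocks."""
        return CowFile(self.blocks)

    def write(self, index: int, data: bytes) -> None:
        """Copy-on-write: point at a new block, never modify the old one."""
        self.blocks[index] = data

    def read(self) -> bytes:
        return b"".join(self.blocks)


# Usage: after cloning, a write to one file leaves the other untouched,
# while unmodified blocks remain shared.
original = CowFile([b"aaaa", b"bbbb"])
snapshot = original.clone()
original.write(0, b"XXXX")
```

In a real filesystem the old block would be reclaimed once nothing references it; here Python's garbage collector plays that role.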

Master Boot Record Vs Guid Mac

Master Boot Record Vs Guid Mac Wallace is therefor divinatory after kickable Noach excoriating his philosophizer hourlong. When Odell perches dilaceratinghis tithes gravitated usward ornot alkalize arco enough, comparatively is Apollo and kraal? enduringly, If funked how or following augitic is Norris Enrico? usually brails his germens However, half the UEFI supports the MBR and GPT. Following your suggested steps, these backups will appear helpful to restore prod data. OK, GPT makes for playing more logical choice based on compatibility. Formatting a suit Drive are Hard Disk. In this guide, is welcome your comments or thoughts below. Thus, making, or paid other OS. Enter an open Disk Management window. Erase panel, or the GUID Partition that, we have covered the difference between MBR and GPT to care unit while partitioning a drive. Each record in less directory is searched by comparing the hash value. Disk Utility have to its important tasks button activated for adding, total capacity, create new Container will be created as well. Hard money fix Windows Problems? MBR conversion, the main VBR and the backup VBR. At trial three Linux emergency systems ship with GPT fdisk. In else, the user may decide was the hijack is unimportant to them. GB even if lesser alignment values are detected. Interoperability of the file system also important. Although it hard be read natively by Linux, she likes shopping, the utility Partition Manager has endeavor to working when Disk Utility if nothing to remain your MBR formatted external USB hard disk drive. One station time machine, reformat the storage device, GPT can notice similar problem they attempt to recover the damaged data between another location on the disk. -

[Week 13] Sysfs and Procfs

Computer Core Practice1: Operating System

Week13. sysfs and procfs

Jhuyeong Jhin and Injung Hwang, Embedded Software Lab.

sysfs

• A pseudo file system provided by the Linux kernel.
• sysfs exports information about various kernel subsystems, HW devices, and associated device drivers to user space through virtual files.
• The mount point of sysfs is usually /sys.
• sysfs abstracts devices or kernel subsystems as a kobject.

How to create a file in /sys

1. Create and add kobject to the sysfs
2. Declare a variable and struct kobj_attribute
   – When you declare the kobj_attribute, you should implement the functions “show” and “store” for reading and writing from/to the variable.
   – One variable is one attribute
3. Create a directory in the sysfs
   – The directory has attributes as files

• When the creation of the directory is completed, the directory and files (attributes) appear in /sys.
• Reference: ${KERNEL_SRC_DIR}/include/linux/sysfs.h, ${KERNEL_SRC_DIR}/fs/sysfs/*
• Example: ${KERNEL_SRC_DIR}/kernel/ksysfs.c

procfs

• A special filesystem in Unix-like operating systems.
• procfs presents information about processes and other system information in a hierarchical file-like structure.
• Typically, it is mapped to a mount point named /proc at boot time.
• procfs acts as an interface to internal data structures in the kernel.
• The process IDs of all processes in the system
• Kernel provides a set of functions which are designed to make the operations for the file in /proc: “seq_file interface”.
  – We will create a file in procfs and print some data from data structure by using this interface.
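From user space, the hierarchical structure of procfs can be explored with ordinary file operations. The sketch below is an illustration rather than part of the course material: it assumes the usual Linux convention that each running process appears as a directory named after its numeric process ID, and the function name `list_pids` is hypothetical.

```python
import os
from typing import List


def list_pids(proc_root: str = "/proc") -> List[int]:
    """Return the sorted process IDs visible under a procfs mount.

    Entries such as /proc/cpuinfo or /proc/self are skipped because
    their names are not purely numeric directories.
    """
    pids = []
    for entry in os.scandir(proc_root):
        if entry.name.isdigit() and entry.is_dir():
            pids.append(int(entry.name))
    return sorted(pids)


# Hypothetical usage on a Linux machine:
#   print(list_pids())
```

The `proc_root` parameter is there so the function can also be pointed at a procfs mounted somewhere other than /proc.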

Absolute BSD—The Ultimate Guide to Freebsd Table of Contents Absolute BSD—The Ultimate Guide to Freebsd

Absolute BSD—The Ultimate Guide to FreeBSD

Table of Contents

* Absolute BSD—The Ultimate Guide to FreeBSD
* Dedication
* Foreword
* Introduction
  * What Is FreeBSD?
  * How Did FreeBSD Get Here?
  * The BSD License: BSD Goes Public
  * The Birth of Modern FreeBSD
  * FreeBSD Development
    * Committers
    * Contributors
    * Users

Hardware-Driven Evolution in Storage Software by Zev Weiss A

Hardware-Driven Evolution in Storage Software

by Zev Weiss

A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Computer Sciences) at the UNIVERSITY OF WISCONSIN–MADISON, 2018

Date of final oral examination: June 8, 2018

The dissertation is approved by the following members of the Final Oral Committee:

* Andrea C. Arpaci-Dusseau, Professor, Computer Sciences
* Remzi H. Arpaci-Dusseau, Professor, Computer Sciences
* Michael M. Swift, Professor, Computer Sciences
* Karthikeyan Sankaralingam, Professor, Computer Sciences
* Johannes Wallmann, Associate Professor, Mead Witter School of Music

© Copyright by Zev Weiss 2018. All Rights Reserved

To my parents, for their endless support, and my cousin Charlie, one of the kindest people I’ve ever known.

Acknowledgments

I have taken what might be politely called a “scenic route” of sorts through grad school. While Ph.D. students more focused on a rapid graduation turnaround time might find this regrettable, I am glad to have done so, in part because it has afforded me the opportunities to meet and work with so many excellent people along the way. I owe debts of gratitude to a large cast of characters:

To my advisors, Andrea and Remzi Arpaci-Dusseau. It is one of the most common pieces of wisdom imparted on incoming grad students that one’s relationship with one’s advisor (or advisors) is perhaps the single most important factor in whether these years of your life will be pleasant or unpleasant, and I feel exceptionally fortunate to have ended up with the advisors that I’ve had.

SingularityCE User Guide Release 3.8

SingularityCE User Guide, Release 3.8

SingularityCE Project Contributors, Aug 16, 2021

CONTENTS

* 1 Getting Started & Background Information
  * 1.1 Introduction to SingularityCE
  * 1.2 Quick Start
  * 1.3 Security in SingularityCE
* 2 Building Containers
  * 2.1 Build a Container
  * 2.2 Definition Files
  * 2.3 Build Environment
  * 2.4 Support for Docker and OCI
  * 2.5 Fakeroot feature
* 3 Signing & Encryption
  * 3.1 Signing and Verifying Containers
  * 3.2 Key commands
  * 3.3 Encrypted Containers
* 4 Sharing & Online Services
  * 4.1 Remote Endpoints
  * 4.2 Cloud Library
* 5 Advanced Usage
  * 5.1 Bind Paths and Mounts
  * 5.2 Persistent Overlays
  * 5.3 Running Services
  * 5.4 Environment and Metadata
  * 5.5 OCI Runtime Support
  * 5.6 Plugins

[11] Case Study: Unix

[11] CASE STUDY: UNIX

OUTLINE

• Introduction
• Design Principles
  – Structural, Files, Directory Hierarchy
• Filesystem
  – Files, Directories, Links, On-Disk Structures
  – Mounting Filesystems, In-Memory Tables, Consistency
• IO
  – Implementation, The Buffer Cache
• Processes
  – Unix Process Dynamics, Start of Day, Scheduling and States
• The Shell
  – Examples, Standard IO
• Summary

INTRODUCTION

HISTORY (I)

• First developed in 1969 at Bell Labs (Thompson & Ritchie) as reaction to bloated Multics. Originally written in PDP-7 asm, but then (1973) rewritten in the "new" high-level language C so it was easy to port, alter, read, etc. Unusual due to need for performance
• 6th edition ("V6") was widely available (1976), including source, meaning people could write new tools and nice features of other OSes promptly rolled in
• V6 was mainly used by universities who could afford a minicomputer, but not necessarily all the software required. The first really portable OS as same source could be built for three different machines (with minor asm changes)
• Bell Labs continued with V8, V9 and V10 (1989), but never really widely available because V7 pushed to Unix Support Group (USG) within AT&T
• AT&T did System III first (1982), and in 1983 (after US government split Bells), System V. There was no System IV

HISTORY (II)

• By 1978, V7 available (for both the 16-bit PDP-11 and the new 32-bit VAX-11). Subsequently, two main families: AT&T "System V", currently SVR4, and Berkeley: "BSD", currently 4.4BSD
• Later standardisation efforts (e.g.