Music Generation from MIDI Datasets

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Alive Dead Media 2020: Tracker and Chip Music

Alive Dead Media 2020: Tracker and Chip Music
1st day introduction, Markku Reunanen
Pics gracefully provided by Wikimedia Commons
Arrangements
See MyCourses for more details, but for now:
● Whoami, who’s here?
● Schedule of this week: history, MilkyTracker with Yzi, LSDJ with Miranda Kastemaa, holiday, final concert
● 80% attendance, two tunes for the final concert and a little jingle today
● Questions about the practicalities?
History of Home Computer and Game Console Audio
● This is a vast subject: hundreds of different devices and chips starting from the late 1970s
● In the 1990s things start to become increasingly standardized (or boring, if you will :) so we’ll focus on earlier technology
● Not just hardware: how did you compose music with contemporary tools?
● Let’s hear a lot of examples – not using Zoom audio
The Home Computer Boom
● At its peak in the 1980s, but started somewhat earlier with Apple II (1977), TRS-80 (1977) and Commodore PET (1977)
● Affordable microprocessors, such as Zilog Z80, MOS 6502 and the Motorola 6800 series
● In the 1980s the market grew rapidly with Commodore VIC-20 (1980) and C-64 (1982), Sinclair ZX Spectrum (1982), MSX compatibles (1983) … and many more!
● From enthusiast gadgets to game machines
Enter the 16-bits
● Improving processors: Motorola 68000 series, Intel 8088/8086/80286
● More colors, more speed, more memory, from tapes to floppies, mouse(!)
● Atari ST (1984), Commodore Amiga (1985), Apple Macintosh (1984)
● IBM PC and compatibles (1981) popular in the US, improving game capability
Not Just Computers
● The same technology powered game consoles of the time
● Notable early ones: Fairchild Channel F (1976), Atari VCS aka. -

January 2010

SPECIAL FEATURE: 2009 FRONT LINE AWARDS
VOL 17 NO 1 JANUARY 2010
THE LEADING GAME INDUSTRY MAGAZINE
CONTENTS.0110 VOLUME 17 NUMBER 1
POSTMORTEM
NCSOFT'S AION: AION is NCsoft's next big subscription MMORPG, originating from the company's home base in South Korea. In our first-ever Korean postmortem, the team discusses how AION survived worker fatigue, stock drops, and real money traders, providing budget and demographics information along the way. By NCsoft South Korean team
FEATURES
2009 FRONT LINE AWARDS: We're happy to present our 12th annual tools awards, representing the best in game industry software, across engines, middleware, production tools, audio tools, and beyond, as voted by the Game Developer audience. By Eric Arnold, Alex Bethke, Rachel Cordone, Sjoerd De Jong, Richard Jacques, Rodrigue Pralier, and Brian Thomas.
RETHINKING USER INTERFACE: Thinking of making a game for multitouch-based platforms? This article offers a look at the UI considerations when moving to this sort of interface, including specific advice for touch offset, and more. By Brian Robbins
CENTER OF MASS -
DEPARTMENTS
GAME PLAN By Brandon Sheffield [EDITORIAL]: Going Through the Motions
HEADS UP DISPLAY [NEWS]: Open Source Space Games, new NES music engine, and Gamma IV contest announcement.
TOOL BOX By Chris DeLeon [REVIEW]: Unity Technologies' Unity 2.6
THE INNER PRODUCT By Jake Cannell [PROGRAMMING]: Brick by Brick
PIXEL PUSHER By Steve Theodore [ART]: Tilin'? Stylin'!
DESIGN OF THE TIMES By Damion Schubert [DESIGN]: Get Real
AURAL FIXATION By Jesse Harlin [SOUND]: Dethroned
GOOD JOB! [CAREER]: Konami sound team mass exodus, Kim Swift interview, and who went where.

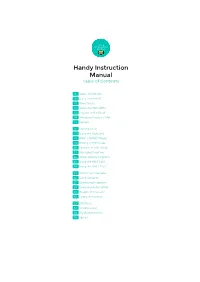

Handy Instruction Manual

Handy Instruction Manual
Table of Contents: About SmileBASIC; Using SmileBASIC; About BASIC; About the TOP MENU; Projects in the Cloud; Managing Projects / Files; Options; Starting BASIC; Using the Keyboard; What is DIRECT Mode?; Writing in EDIT Mode; Features in EDIT Mode; Managing Programs; About Sample Programs; Using the HELP Tool; Using the SMILE Tool; "PRINT" and Variables; Using Variables; Conditional Judgment; Computer Colors (RGB); Graphic Instructions; Sound Instructions; 3D Effects; Screen Layout; BG (Backgrounds); Sprites
About SmileBASIC
SmileBASIC is a tool that allows you to easily write programs on a Nintendo 3DS system. As it is also compatible with the system's 3D mode, you can create programs that utilize the 3D feature.
User Agreement
Programs and resources such as images created using this product can be made open to the public using the Publish feature, allowing large numbers of people to view and run them. Please be sure not to publish any content that other people may find offensive, or content that reveals personally identifiable information or violates the rights of others (including portrait rights, privacy rights, and copyrights). Anyone who performs improper acts that may cause public nuisance, or who publishes obscene or libelous images, risks punishment in accordance with the applicable laws and regulations. SmileBoom Co.Ltd. assumes no responsibility for any issues resulting from information or programs published by its customers. Please note that if we receive a report from a customer that they are offended by a published project, we may delete said project unconditionally, without any prior procedure such as confirming with the relevant creator. -

Discover Moods

DISCOVER MOODS: the first perfect software for musicians.
MOODS main features:
- significant reduction of the time (hence costs, -20%) needed for modifying main scores and parts during rehearsals (the time spent on a simple modification will be reduced from 30 sec to 1 sec, while a heavy modification will go from several hours to less than 1 minute),
- reliable, complete accuracy in inserting changes in all scores and parts,
- possibility of saving details usually never saved up to now,
- registering and reproducing the performing rate at which each measure of the score has been executed,
- automating the "page turning" during rehearsals and performances,
- fast retrieval of scores and parts,
- rapid, coordinated restart of pieces from any given point.
The MOODS technology has been evolved/used in other projects and products:
- FCD version of the MPEG symbolic music representation standard: w8632-MPEG-SMR-part-23-rev-public.pdf
- link to the IEEE Multimedia article on Symbolic Music Representation in MPEG
- Demonstration Tools: MPEG-4 Player with SMR decoder and an example, mozart.mp4
- short manual of the MPEG-4 player and its BIFS application called Mozart.mp4
- link to the MPEG SMR technology description on the MPEG site
- usage of MPEG SMR in the IMAESTRO tools: -

Electronic Manual

Electronic Manual
Version 20200601
This electronic manual is a document for explaining the functions of "SmileBASIC". Please refer to the inline help for specific arguments for each command.
* Product names and trademarks in the manual are generally trademarks or registered trademarks of each company.
Operation Method
◎Flip Pages
Controller: Directional Buttons, Left and Right
USB Keyboard: Directional Keys ←→
Touch/Mouse: Slide to the left or right
Display Flow from TOPMENU
• The menu of SmileBASIC 4 is connected as shown in this figure.
[Figure: TOPMENU flow chart. Labels: Released Works!, Projects, Settings, Official Website, File Manager, Beginner’s Guide, BASIC (Make a Program, "You can make a program here!"), Flip pages with L/R, F12]
How to Download and Play Works
• Open the published work page, download the work, and play it locally.
From TOPMENU, press the R Button twice to open the published work page (Published Works). Press L twice to return to the local page (Local Samples). Downloaded works are stored locally, so you can select your favorite game or tool and press the A Button! It's so easy! As long as you are connected to the Internet, published works will automatically line up even if you don’t know any Public Keys. Of course, you can also enter the Public Key directly (Public Key Direct Entry Display)! You cannot download them if the parental control is set.
How to Make a Program
• Write a program in the editor and execute it in the direct mode to check the operation.
TOPMENU Console Display (Direct Mode) F5 + Button Run/Stop Press the - -

PMML: a Music Description Language Supporting Algorithmic Representation of Musical Expression

PMML: A Music Description Language Supporting Algorithmic Representation of Musical Expression
Satoshi Nishimura, School of Computer Science and Engineering, The University of Aizu, Aizu-Wakamatsu city, Fukushima, 965-8580, Japan. Email: nisi@u-aizu.ac.jp
Abstract
This paper describes a novel music description language called the Practical Music Macro Language (PMML), intended for the computer-controlled performance of expressive music using MIDI instruments. A remarkable feature of the PMML is its ability to specify expressive parameters algorithmically. A toolkit consisting of a compiler which translates a PMML source code to the Standard MIDI file, a discompiler, and an Emacs-based data entry tool is developed.
1 Introduction
So far many music description languages have been proposed; however, little attention has been paid to the representation of musical expression which is usually not designated on scores. Although most languages have means for specifying expressive parameters (e.g., dynamics and tempo fluctuation) on the basis of a single note or a group of notes, it is too tiresome to describe expressive music only with these functions. Some languages such as Formula [AK91] enable us to specify those parameters according to some graphical shapes; however, we still have many things which must be done manually. For example, in order to increase the loudness of the highest-pitch note for each chord, we need to designate the velocity increment chord by chord. The PMML is a novel MIDI-based event description language addressing the aforementioned problem by supporting the procedure-based representation of musical expression. The syntax of the PMML is similar to that of the C/C++ language which would be today’s most common programming language. -
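The chord example in the excerpt above (raising the loudness of the highest-pitch note of each chord) can be illustrated with a minimal Python sketch. This is not PMML syntax and not code from the paper: the `Note` class, the `accent_chord_tops` function, the `boost` parameter, and the rule that notes sharing an onset form a chord are all assumptions made for illustration.

```python
from dataclasses import dataclass, replace
from itertools import groupby


@dataclass(frozen=True)
class Note:
    """Hypothetical note record; not a PMML or MIDI-library type."""
    onset: int      # start time in ticks
    pitch: int      # MIDI note number, 0-127
    velocity: int   # MIDI velocity, 1-127


def accent_chord_tops(notes, boost=10):
    """Return a new list in which the top note of every chord is louder.

    Assumption: notes that share the same onset belong to one chord.
    Single notes are left unchanged.
    """
    ordered = sorted(notes, key=lambda n: (n.onset, n.pitch))
    result = []
    for _, group in groupby(ordered, key=lambda n: n.onset):
        chord = list(group)
        if len(chord) > 1:
            top = chord[-1]  # highest pitch, thanks to the sort order
            # Clamp to 127, the largest valid MIDI velocity.
            chord[-1] = replace(top, velocity=min(127, top.velocity + boost))
        result.extend(chord)
    return result
```

Writing the rule once as a procedure, rather than setting the velocity increment chord by chord, is the kind of algorithmic specification of expression the abstract describes.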

EJ Garba Music Technology an Introductory Guide

E. J. Garba Music Technology An Introductory Guide Copyright © 2007 2015 E. J. Garba. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, printing, recording, or otherwise, without the written prior permission of the author. ETHEREAL MULTIMEDIA TECHNOLOGY Block D 19, No. 45 Hammaruwa Way, Opp. Muri Hotel P. O. Box 1343 Jalingo, Taraba State, RC 1134046 NG Phone: +2348036943881 Website: https://ethereal-multimedia.com/ About The Author E. J. Garba (PhD, MEng, BSc) is a Music and Multimedia Consultant with ETHEREAL MULTIMEDIA TECHNOLOGY LTD. He possesses a PhD Degree in Music/Multimedia Technology. He is a graduate in Computer Science, Databases and Networking from St. Petersburg State University of Information Technology, Mechanics and Optics, St. Petersburg, Russia. With a Master's Degree in Software Engineering, he specializes in: Software design/development/engineering; System support; Web Design, Hosting and Digital Content Management; Multimedia/Music Technology and Digital Audio Production; Music composing and arranging; Technical documentation and writing. His key areas of research and development include: Mathematical and visual modeling of In-The-Box (ITB) 3D Soundstage; Software Framework for Real-time Automated 3D Audio Mixing and Mastering; Computational Problem Solving; Hybridized Interactive Algorithmic Composition Model. Acknowledgements I am most grateful to the Holy Spirit for his divine inspiration -

MSX Programming – Graham Bland

MSX Programming
Graham Bland
Pitman
PITMAN PUBLISHING LIMITED, 128 Long Acre, London WC2E 9AN. A Longman Group Company
© Graham Bland 1986. First published 1986
British Library Cataloguing in Publication Data: Bland, Graham. MSX programming. 1. MSX microcomputers - Programming 2. MSX BASIC (Computer program language) I. Title 001.64'24 QA76.8.M8 ISBN 0-273-02302-0
All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording and/or otherwise, without the prior written permission of the publishers. This book may not be lent, resold, hired out or otherwise disposed of by way of trade in any form of binding or cover other than that in which it is published, without the prior consent of the publishers. Printed in Great Britain at the Bath Press, Avon
Contents
Preface
Acknowledgements
Syntax notes
1 First principles (The central processing unit (CPU), Memory, Input and output devices, Bits and bytes, Memory addressing, The MSX-BASIC interpreter, Programs, PRINT, Stopping programs, Displaying programs, Deleting program lines, Renumbering programs, Automatic line numbering, Altering the screen display, The SCREEN command, Altering colour, The function keys, Saving and loading programs, Summary)
2 Elementary MSX-BASIC (Data and data types, Naming variables, The MSX-BASIC operators, Variable assignment, The GOTO statement, Conditional statements and branching, Loops, Variable housekeeping, Global variable typing, Summary)
3 Functions and subroutines (Functions, User-defined functions, Subroutines, Stepwise refinement, Creating subroutine libraries, Summary)
4 Loops, interrupts and event monitoring (The FOR .. -

MSX Technical Data Book

MSX Technical Data Book
Hardware/Software Specifications
Presented by Robsy's MSX Workshop. Originally scanned by Ivan Latorre. Converted to PDF by Eduardo Robsy [September 2004]
SONY. Sony Corporation, 4-14-1, Asahi-cho, Atsugi-shi, Kanagawa-ken, Japan 243
Copyright © Microsoft Corporation 1984. Produced by ASCII Corporation. Printed in Japan
PREFACE
The Microsoft MSX standard was invented to provide end users and software developers with a standardized computer so that programs could run on any computer even though they were made by different manufacturers. This book presents the MSX specifications in detail. It is intended to be a reference for advanced programmers and software developers. The information is generally divided into four parts.
Part A, MSX HARDWARE SPECIFICATIONS, presents the specifications for the MSX system hardware. Chapter 1, Hardware Specification, covers the MSX standard hardware configuration in terms of the requirements for the LSIs, memory size, interrupts, screen, keyboard, and sound used in the main unit; and the various (cassette, floppy, printer, serial, and slot) interfaces and connectors. It also covers topics such as cartridges, expansion, ports, and memory maps.
Part B, MSX SYSTEM SOFTWARE, contains a reference guide for MSX BASIC and information for advanced programming. Chapter 2, Language Specification, is a guide to MSX-BASIC and is for use with advanced programming requiring machine language routines.
Part C, EXPANDED MSX SYSTEM SOFTWARE, is about the advanced features of MSX, including Expanded Disk BASIC and MSX-DOS. -

MSX Computer Magazine 40 Aktu Publications B.V

In the end, you want a Sony computer after all
Super-fast MSX2+ Personal Computer. Recommended retail price: Hfl. 1495,-
19,268 colors simultaneously
15 sound channels (including 6 sound and 5 rhythm, Yamaha FM)
Fast double-sided drive
Memory: 368k ROM and 20mB RAM
Speed controller and pause key
Connection: RGB (SCART) for monitor and TV
Mains connection: 220 Volt
Importer: MSX Centrum, W. de Withstraat 27, 1057 XG Amsterdam. Fax 020 - 167058, Tel. 020 - 167058 (call for information between 14.00 and 18.00)
Also available at, among others: Fotostudio Foka, Kerkstraat 8, 5751 BH Deurne. Tel.: 04930 - 12687
DEALER INQUIRIES WELCOME
MSX COMPUTER MAGAZINE is published by Aktu Publications b.v., Amsterdam. MSX Computer Magazine appears eight times a year.
Editorial address: MSX Computer Magazine, Postbus 61264, 1005 HG, Amsterdam. Tel.: 020 - 845995, Fax :02 ~862719
Publisher/Editor-in-chief: Wammes Witkop
Editorial staff: Max Barber, Paul te Bokkel, Ronaid Egas, Hans Niepoth, Harry van Horen, Markus The, Marièlle Mink, André Knip, Edgar Hildering, Robbert Wethmar, Lies Muller, Mathijs Perdec, Kees Reedijk, Aat van Uijen, Wim Vredevoogd, Ries Vriend.
Editorial question line: the editorial staff can be reached by telephone only via 02~60743. This number has an answering machine, on which we record any corrections to articles and listings.
Contents of MSX Computer Magazine 40
Editorial; The Bag of Tricks; Short News - Fairs; MSX2+ from MSX2: the hardware; Hard disk news: cheap do-it-yourself kits; MSX fair in Zandvoort; BK - deluxe file copier
Columns
First Aid for Survival; Program Service; MCM's Reader Service; Art Gallery; MCM's Public Domain; ML course on the MSX, part 1; I/O'tjes; Oops -

"Durexforth: Operators Manual"

Contents
1 Introduction
1.1 Forth, the Language
1.1.1 Why Forth?
1.1.2 Comparing to other Forths
1.2 Appetizers
1.2.1 Graphics
1.2.2 Fractals
1.2.3 Music
2 Tutorial
2.1 Interpreter
2.2 Editor
2.3 Assembler
2.4 Console I/O Example
2.5 Avoiding Stack Crashes
2.5.1 Commenting
2.5.2 Stack Checks
2.6 Configuring durexForth
2.6.1 Stripping Modules
2.6.2 Custom Start-Up
2.7 How to Learn More
2.7.1 Internet Resources
2.7.2 Other
3 Editor
3.1 Key Presses
3.1.1 Inserting Text
3.1.2 Navigation
3.1.3 Saving & Quitting
3.1.4 Text Manipulation
4 Forth Words
4.1 Stack Manipulation
4.2 Utility
4.3 Mathematics
4.4 Signed Mathematics
4.5 Logic
4.6 Memory
4.7 Compiling -

Distributed Representations of Melodic Phrases Based on Melody Segmentation

Journal of Information Processing Vol.27 278–286 (Mar. 2019) [DOI: 10.2197/ipsjjip.27.278] Regular Paper
Melody2Vec: Distributed Representations of Melodic Phrases based on Melody Segmentation
Tatsunori Hirai, Shun Sawada
Received: June 6, 2018, Accepted: December 4, 2018
Abstract: In this paper, we present melody2vec, an extension of the word2vec framework to melodies. To apply the word2vec framework to a melody, a definition of a word within a melody is required. We assume phrases within melodies to be words and acquire these words via melody segmentation applying rules for grouping musical notes called Grouping Preference Rules (GPR) in the Generative Theory of Tonal Music (GTTM). We employed a skip-gram representation to train our model using 10,853 melody tracks extracted from MIDI files primarily constructed from pop music. Experimental results show the effectiveness of our model in representing the semantic relatedness between melodic phrases. In addition, we propose a method to edit melodies by replacing melodic phrases within a musical piece based on the similarity of the phrase vectors. The naturalness of the resulting melody was evaluated via a user study and most participants who did not know the musical piece could not point out where the melody had been replaced.
Keywords: melody processing, distributed representations, word2vec, melody retrieval
1. Introduction
Melody is one of the most significant elements in music. Several methods for handling melodies using computers have been [...] might make it possible to quantitatively evaluate the quality of melodies. Because melodies are essential elements of music, vector representations of melodies are worth pursuing for future developments in music information retrieval (MIR) research fields.
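The phrase-replacement idea in the abstract above (swapping a melodic phrase for one with a similar vector) can be sketched in plain Python. This is only an illustration, not the authors' implementation: it assumes phrase vectors have already been trained, and the phrase labels, the toy values in `TOY_VECTORS`, and the function names are all hypothetical. Cosine similarity is used here as one common choice of similarity measure.

```python
import math

# Hypothetical phrase vectors standing in for trained skip-gram embeddings.
TOY_VECTORS = {
    "phrase_a": [1.0, 0.0],
    "phrase_b": [0.9, 0.1],
    "phrase_c": [0.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar(phrase, vectors):
    """Return the other phrase whose vector is closest to `phrase`."""
    target = vectors[phrase]
    candidates = (p for p in vectors if p != phrase)
    return max(candidates, key=lambda p: cosine(target, vectors[p]))


def replace_phrase(melody, index, vectors):
    """Copy `melody` (a list of phrase labels) with one phrase swapped out."""
    edited = list(melody)
    edited[index] = most_similar(melody[index], vectors)
    return edited
```

For example, `replace_phrase(["phrase_a", "phrase_c", "phrase_a"], 1, TOY_VECTORS)` swaps the middle phrase for its nearest neighbour in the toy vector table and leaves the input list unchanged.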