Coalition Shareholder Letter to FB

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Departed Talent: Matt Damon, Leonardo DiCaprio, Jack Nicholson

The Departed. Talent: Matt Damon, Leonardo DiCaprio, Jack Nicholson, Mark Wahlberg, Alec Baldwin, Vera Farmiga, Martin Sheen, Ray Winstone. Date of review: Thursday the 12th of October, 2006. Director: Martin Scorsese. Classification: MA (15+). Duration: 152 minutes. We rate it: Five stars. For any serious cinemagoer or lover of film as an art form, the arrival of a new film by Martin Scorsese is a reason to get very excited indeed. Scorsese has long been regarded as one of the greatest of America’s filmmakers; since the mid-1980s he has been regarded as one of the greatest filmmakers in the world, period. With landmark works like Taxi Driver (1976), Raging Bull (1980), Goodfellas (1990) and Bringing Out the Dead (1999) to his credit, Scorsese is a director for whom any actor will go the distance, and the casts the director manages to assemble for his every project are extraordinarily impressive. As a visualist Scorsese is a force to be reckoned with, and his soundtracks regularly become known as classic creations; his regular collaborators, including editor Thelma Schoonmaker and cinematographer Michael Ballhaus, are the best in their respective fields. Paraphrasing all of this, one could justifiably describe Martin Scorsese as one of the most important and interesting directors on the face of the planet. The Departed, Scorsese’s newest film, is on one level a remake of a very successful Hong Kong action thriller from 2002 called Infernal Affairs. That film, written by Felix Chong and directed by Andrew Lau, set the box office on fire in Hong Kong and became a cult hit around the world. -

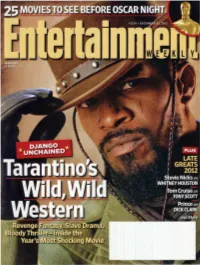

Once Upon a Time … in Santa Clarita: Tarantino Movie Opens Thursday

By: Caleb Lunetta, July 25, 2019. Once Upon a Time … In Santa Clarita: Tarantino movie opens Thursday. Hollywood director Quentin Tarantino is known for his genre-defying blockbuster films that combine elements of art house, violence and comedy in his Academy Award-winning films. And the San Fernando Valley resident calls Santa Clarita “a special place,” according to local film property owners who have played host to the luminary for his latest film that debuts tonight, “Once Upon a Time … In Hollywood.” The latest installment in the Tarantino filmography follows the story of “a faded television actor and his stunt double (who) strive to achieve fame and success in the film industry during the final years of Hollywood’s Golden Age in 1969 Los Angeles,” according to IMDb.com. The film features Leonardo DiCaprio, Brad Pitt and Margot Robbie. Tarantino and movie fans were given a teaser trailer where the opening shot was that of Melody Ranch’s “Main Street” set. Veluzat said that the production team had been working with the studio for a couple months, from planning to setting up to filming, and for him, it was a special experience. Tarantino has filmed two of his last three movies at Melody Ranch in Newhall, according to studio manager Daniel Veluzat, and he’s praised the SCV in interviews and conversations with local residents. “He really does like it here,” said Veluzat, in reference to a question about why Tarantino has filmed his movies at Melody Ranch. “He calls it a special place,” he said. And on Thursday night, “Once Upon a Time” opens in theaters across the country, including the Santa Clarita Valley, featuring scenes shot at both Melody Ranch and the Saugus Speedway. -

NEWS RELEASE for Immediate Release RE: Chamber Annual Banquet

120 W. Ash, P.O. Box 586 • Salina, KS 67402-0586 • 785-827-9301 • fax 785-827-9758 • www.salinakansas.org NEWS RELEASE For Immediate Release RE: Chamber Annual Banquet. Frank Abagnale, one of the world’s most respected authorities on the subject of forgery, embezzlement, and secure documents, will be the featured speaker at the Chamber’s Annual Banquet and Membership Meeting, Thursday, February 9, 2012. (Biography attached.) The banquet will be held in the arena of the Bicentennial Center beginning at 6:30pm. A membership networking reception will be held from 5-6pm in Heritage Hall of the Bicentennial Center. In addition, Mr. Abagnale will conduct an identity theft seminar on February 9th from 2-4pm at Sam’s Chapel on the campus of Kansas Wesleyan University. “We are very pleased that Frank Abagnale has accepted our invitation to come to Salina as the keynote speaker for the Chamber’s annual meeting,” said Todd Davidson, Chairman of the Salina Area Chamber of Commerce. “Identity theft, forgery and embezzlement are major issues faced by businesses and individuals in our society. Mr. Abagnale has a unique set of personal experiences on these issues, from both sides of the fence. His specialized knowledge of these subjects will be enormously valuable to our audience, and his story-telling will captivate them. It should be an entertaining evening for this year’s annual banquet.” For over 30 years Mr. Abagnale has lectured to, and consulted with, hundreds of financial institutions, corporations and government agencies around the world. His life story was the subject of a major motion picture entitled “Catch Me If You Can,” directed by Steven Spielberg and starring Leonardo DiCaprio and Tom Hanks. -

2020 Oscars Ballot

2020 OSCARS get your party on BALLOT. Who will win on Feb 9th? Here is your ballot for all the Nominees. PRINT THIS BALLOT & PLACE YOUR BETS!
BEST PICTURE: o Ford v Ferrari o The Irishman o Jojo Rabbit o Joker o Little Women o Marriage Story o 1917 o Once Upon a Time...in Hollywood o Parasite
INTERNATIONAL FEATURE: o Corpus Christi o Honeyland o Les Misérables o Pain and Glory o Parasite
MAKEUP AND HAIRSTYLING: o Bombshell o Joker o Maleficent: Mistress of Evil o Judy o 1917
ANIMATED FEATURE: o How to Train Your Dragon: The Hidden World o I Lost My Body o Klaus o Missing Link o Toy Story 4
PRODUCTION DESIGN: o Once Upon a Time...in Hollywood o The Irishman o 1917 o Parasite o Jojo Rabbit
DIRECTOR: o Bong Joon Ho, Parasite o Sam Mendes, 1917 o Todd Phillips, Joker o Martin Scorsese, The Irishman o Quentin Tarantino, Once Upon a Time...in Hollywood
DOCUMENTARY FEATURE: o American Factory o The Cave o The Edge of Democracy o For Sama o Honeyland
SOUND EDITING: o Ford v Ferrari o Joker o 1917 o Once Upon a Time...in Hollywood o Star Wars: The Rise of Skywalker
ACTOR IN A LEADING ROLE: o Joaquin Phoenix, Joker o Leonardo DiCaprio, Once Upon a Time...in Hollywood o Antonio Banderas, Pain and Glory o Adam Driver, Marriage Story o Jonathan Pryce, The Two Popes
ORIGINAL SCORE: o Thomas Newman, 1917 o Hildur Guðnadóttir, Joker o Alexandre Desplat, Little Women o Randy Newman, Marriage Story o John Williams, Star Wars: The Rise of Skywalker
ACTRESS IN A LEADING ROLE: o Cynthia Erivo, Harriet
SOUND MIXING: o Ad Astra o Ford v Ferrari o Joker o 1917 o Once Upon -

Collaborations of Martin Scorsese with De Niro And

From Realism to Reinvention: Collaborations of Martin Scorsese with De Niro and DiCaprio. By Travis C. Yates. Martin Scorsese is considered by many to be the greatest film director of the past quarter-century. He has left an indelible mark on Hollywood with a filmography that spans more than four decades and is as influential as it is groundbreaking. The proverb goes, “It takes a village to raise a child.” The same could be said of cinema, though one popular filmmaking theory refutes this. French filmmaker Jean-Luc Godard said of the filmmaking process, “The cinema is not a craft. It is an art. It does not mean teamwork. One is always alone on the set as before the blank page” (Naremore 9). Film theorist Robert Stam discusses this concept of auteur theory in Film Theory: An Introduction, an idea that originated in postwar France in the 1950s. It is the view that the director is solely responsible for the overall look and style of a film. As Colin Tait submits in his article “When Marty Met Bobby: Collaborative Authorship in Mean Streets and Taxi Driver,” the theory is a useful tool at best, incorrect at worst. Tait criticizes the theory in that it denies the influence of other artists involved in the production as well as the notion of collaboration. It is this spirit of collaboration in Scorsese films featuring two specific actors, Robert De Niro and Leonardo DiCaprio, which this paper will examine. Scorsese has collaborated with De Niro on eight films, the first being Mean Streets in 1973 and the most recent being Casino in 1995. -

Whatever Happened To

PEOPLE: WHATEVER HAPPENED TO ... FRANK ABAGNALE? The master forger became famous through the film adaptation of his life story in the Hollywood blockbuster “Catch Me If You Can.” By Dina Spahi. Every now and then a film arrives in cinemas whose main character is so fascinating that we would like to know what became of him after the credits rolled. That is true of Steven Spielberg’s 2002 film Catch Me If You Can, with Leonardo DiCaprio as Frank Abagnale and Tom Hanks as the FBI agent who chased him around the globe. Based on a book written by Mr. Abagnale himself, the story outlines in broad strokes five years of his life as arguably one of the best con men in the world, who successfully posed as a pilot, a doctor, a professor and even as an assistant attorney general for the state of Louisiana. The borrowed identities were only one side of the coin, however, because the then-teenager managed to defraud banks and institutions of millions by forging checks and outwitting security measures that were not foolproof enough for a man like him, who seems to have a solution ready for every problem. But that was then, and “then” refers to a remarkably short period of his life almost 50 years ago, when he set out on his adventures at the tender age of 16 and tried to evade justice, only to be caught at 21 by a particularly persistent FBI agent. The story that most of us ... “I did not make the film about Frank Abagnale because of what he did, but because of what he has made of his life over the last 30 years.” STEVEN SPIELBERG. Photo caption: Leonardo DiCaprio portrays Frank Abagnale in the Hollywood movie “Catch Me If You Can.” PEOPLE, IN PERSON: A word with Frank Abagnale. -

Cinema Against AIDS Thursday, May 22, 2014 Cannes, France

Cinema Against AIDS. Thursday, May 22, 2014, Cannes, France. Event Produced by Andy Boose / AAB Productions. A Golden Opportunity. Cinema Against AIDS is the most eagerly anticipated and well-publicized event held during the Cannes Film Festival, and is one of the most successful and prominent charitable events in the world. The evening is always marked by unforgettable moments, such as Sharon Stone dancing to an impromptu performance by Sir Elton John and Ringo Starr, Dame Shirley Bassey giving a rousing performance of the song “Goldfinger,” George Clooney bestowing a kiss on a lucky auction bidder, and another lucky bidder winning the chance to go on a trip to space with Leonardo DiCaprio. The 2012 and 2013 galas also included a spectacular fashion show curated by Carine Roitfeld and featuring the world’s leading models and one-of-a-kind looks. The event consistently has the most exciting and diverse guest list of any party held during the festival. It includes many of the celebrities and personalities associated with the film festival while also attracting familiar faces from the worlds of fashion, music, business, and international society. (Photo captions: Sharon Stone; Leonardo DiCaprio; Madonna; Natalie Portman; Adrien Brody; Jessica Chastain; Karolína Kurková and Antonio Banderas; Milla Jovovich.) A Star-Studded Cast. amfAR’s international fundraising events are world renowned for their ability to attract a glittering list of top celebrities, entertainment industry elite, and international society, as well as the press that goes along with such star power. In just the past few years, the guest list has included such luminaries as: Ben Affleck • Jessica Alba • Prince Albert of Monaco • Marc Anthony • Giorgio Armani • Lance Armstrong • Lauren Bacall • Elizabeth Banks • Javier Bardem • Dame Shirley Bassey • Kate Beckinsale • Harry Belafonte • Gael Garcia Bernal • Beyoncé • Mary J. -

Django Unchained (Rated R) Under the Tree on Dec

QUENTIN TARANTINO knows the secret of yuletide gift giving: Get them something they wouldn't get themselves. After all, who but Tarantino, the man who machine-gunned Hitler's face off in his WWII remix Inglourious Basterds, would think of laying a hyperviolent all-star slave-revenge-Western fairy tale like Django Unchained (rated R) under the tree on Dec. 25? Like so many other presents, Django was wrapped just in time. After seven months of production, additional reshoots, weather complications, a revolving-door cast list, a sudden death, and a sprint to the finish in the editing room, Tarantino has managed to deliver the film for its festive release date. With that long, dusty trail now behind him, the filmmaker slumps beneath a foreign-language Pulp Fiction poster in a corner of Do Hwa, the Korean restaurant he co-owns in Manhattan's West Village, and admits he's tired. "It's been a long journey, man." (26 | EW.COM | Dec. 21, 2012) (Photo caption, clockwise from far left: Foxx; Foxx, Leonardo DiCaprio, and Waltz; Kerry Washington and Samuel L. Jackson; Washington.) Keep in mind: An exhausted Quentin Tarantino, even at 49 and a long way from his wunderkind years, is still as energetic as a regular person would be after two strong coffees. And he's characteristically enthusiastic about his new film: a spaghetti Western transposed to the antebellum South that follows a slave (Jamie ... silent. To a degree, Tarantino understands the response. "There is no setup for Django, for what we're trying to do. -

Star Power, Pandemics, and Politics: the Role of Cultural Elites in Global Health Security Holly Lynne Swayne University of South Florida, [email protected]

University of South Florida, Scholar Commons. Graduate Theses and Dissertations, Graduate School. September 2018. Star Power, Pandemics, and Politics: The Role of Cultural Elites in Global Health Security. Holly Lynne Swayne, University of South Florida, [email protected]. Follow this and additional works at: https://scholarcommons.usf.edu/etd. Part of the Mass Communication Commons, Political Science Commons, and the Public Health Commons. Scholar Commons Citation: Swayne, Holly Lynne, "Star Power, Pandemics, and Politics: The Role of Cultural Elites in Global Health Security" (2018). Graduate Theses and Dissertations. https://scholarcommons.usf.edu/etd/7581. This Dissertation is brought to you for free and open access by the Graduate School at Scholar Commons. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Scholar Commons. For more information, please contact [email protected]. Star Power, Pandemics, and Politics: The Role of Cultural Elites in Global Health Security, by Holly Lynne Swayne. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, School of Interdisciplinary Global Studies, University of South Florida. Major Professor: M. Scott Solomon, Ph.D.; Linda Whiteford, Ph.D.; Janna Merrick, Ph.D.; Peter N. Funke, Ph.D. Date of Approval: March 30, 2018. Keywords: global health, celebrity, activism, culture studies, mass media, power, influence. Copyright © 2018, Holly Lynne Swayne. Dedication: To my mom, whose strength has inspired and saved me in ways I cannot begin to describe. To my dad, who taught me by example about grace and compassion, and always believed in the beauty of my dreams. -

Quarantine Quotation Quiz Answers

Quarantine Quotation Quiz answers: 1. Robin Williams to his students in Dead Poets Society. 2. Tom Hanks while waiting for a bus in Forrest Gump. 3. Andy Serkis as Gollum in The Lord of the Rings. 4. Jack Nicholson on the witness stand in A Few Good Men. 5. This line appears in several James Bond movies. 6. Renee Zellweger to returning boyfriend Tom Cruise in Jerry Maguire. 7. Judy Garland in The Wizard of Oz. 8. Marlon Brando to Rod Steiger in On the Waterfront. 9. Clark Gable to Vivien Leigh in Gone with the Wind. 10. Star Wars. 11. Robert Duvall in Apocalypse Now. 12. Peter Finch yelling in Network. 13. Ingrid Bergman to the piano player in Casablanca. 14. The Treasure of the Sierra Madre. 15. Joe E. Brown to Jack Lemmon in Some Like It Hot. 16. Michael Douglas giving a speech in Wall Street. 17. Dustin Hoffman to Anne Bancroft in The Graduate. 18. Peter Sellers' ironic pronouncement in Dr. Strangelove. 19. Tom Cruise in Top Gun. 20. Leonardo DiCaprio in Titanic. Check out the movie quotes below and see how many have remained in your memory: 1. "Carpe Diem. Seize the day, boys. Make your life extraordinary." 2. "My mama always said life is like a box of chocolates. You never know what you are going to get." 3. "My precious." 4. "You can't handle the truth!" 5. "A Martini. Shaken not stirred." 6. "You had me at hello." 7. "Toto, I've a feeling we are not in Kansas anymore." 8. -

Martin Scorsese's Screening Room: Theatricality, Psychoanalysis, and Modernity in Shutter Island

Chapter 10. Martin Scorsese’s Screening Room: Theatricality, Psychoanalysis, and Modernity in Shutter Island. Stephen Mulhall. Even a cursory survey of the critical literature on Martin Scorsese suggests that a number of shared assumptions underlie its more local disagreements: that Scorsese’s imperial phase ended around the time of Casino (1995), that this non-accidentally coincided with the end of his long collaboration with Robert De Niro and the beginning of his equally long collaboration with Leonardo DiCaprio, and that most of his subsequent non-documentary work manifests a loss of aesthetic force that reflects his personal transformation from exciting, experimental outsider to respectable member of the Hollywood establishment. The most recent edition of a book which contains one of the strongest critical accounts of Scorsese’s work makes these assumptions explicit: [Since Kundun (1997)] Scorsese has … attempted to redeem himself as a commercially viable film-maker … The period of intense cinematic experimentation seems to have ended with Goodfellas … He has …, in the process, adopted Leonardo DiCaprio as a replacement for Robert De Niro as his container for fictional characters. The difference in the on-screen presence of these two actors is interesting and reveals a lot about Scorsese’s change in perspective on his work [sic]. Under his direction, De Niro is always full of a menace that threatens, and often succeeds, in spinning out of control [sic] … He is the director’s surrogate, not so much as a character but as a force of directorial assault on the very basic conventions of film-making. -

Pool Scorecard

Pool Scorecard. 2020 NOMINEE BALLOT. Score: /24
ACTOR IN A LEADING ROLE: Antonio Banderas, Pain and Glory (AVAILABLE NOW); Leonardo DiCaprio, Once upon a Time...in Hollywood (AVAILABLE NOW); Adam Driver, Marriage Story (TBA); Joaquin Phoenix, Joker (AVAILABLE NOW); Jonathan Pryce, The Two Popes (TBA)
DOCUMENTARY FEATURE: American Factory; The Cave; The Edge of Democracy; For Sama; Honeyland
PRODUCTION DESIGN: The Irishman (TBA); Jojo Rabbit (FEBRUARY 18); 1917 (MARCH 24); Once upon a Time...in Hollywood (AVAILABLE NOW); Parasite (AVAILABLE NOW)
ACTOR IN A SUPPORTING ROLE: Tom Hanks, A Beautiful Day in the Neighborhood (FEBRUARY 18); Anthony Hopkins, The Two Popes (TBA); Al Pacino, The Irishman (TBA); Joe Pesci, The Irishman (TBA); Brad Pitt, Once upon a Time...in Hollywood (AVAILABLE NOW)
DOCUMENTARY SHORT SUBJECT: In the Absence; Learning to Skateboard in a Warzone (If You’re a Girl); Life Overtakes Me; St. Louis Superman; Walk Run Cha-Cha
ANIMATED SHORT FILM: Dcera (Daughter); Hair Love; Kitbull; Memorable; Sister
ACTRESS IN A LEADING ROLE: Cynthia Erivo, Harriet (AVAILABLE NOW); Scarlett Johansson, Marriage Story (TBA); Saoirse Ronan, Little Women (MARCH 24); Charlize Theron, Bombshell (MARCH 10); Renée Zellweger, Judy (AVAILABLE NOW)
FILM EDITING: Ford v Ferrari (FEBRUARY 11); The Irishman (TBA); Jojo Rabbit (FEBRUARY 18); Joker (AVAILABLE NOW); Parasite (AVAILABLE NOW)
LIVE ACTION SHORT FILM: Brotherhood; Nefta Football Club; The Neighbors’ Window; Saria; A Sister
INTERNATIONAL FEATURE FILM: Corpus Christi (TBA)
SOUND EDITING: Ford v Ferrari (FEBRUARY 11)
ACTRESS IN A SUPPORTING ROLE: Kathy Bates, Richard Jewell (TBA); Laura Dern, Marriage