Intel® Technology Journal | Volume 18, Issue 2, 2014

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Beyond BIOS Developing with the Unified Extensible Firmware Interface

Digital Edition Digital Editions of selected Intel Press books are in addition to and complement the printed books. Click the icon to access information on other essential books for Developers and IT Professionals Visit our website at www.intel.com/intelpress Beyond BIOS Developing with the Unified Extensible Firmware Interface Second Edition Vincent Zimmer Michael Rothman Suresh Marisetty Copyright © 2010 Intel Corporation. All rights reserved. ISBN 13 978-1-934053-29-4 This publication is designed to provide accurate and authoritative information in regard to the subject matter covered. It is sold with the understanding that the publisher is not engaged in professional services. If professional advice or other expert assistance is required, the services of a competent professional person should be sought. Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights. Intel may make changes to specifications, product descriptions, and plans at any time, without notice. Fictitious names of companies, products, people, characters, and/or data mentioned herein are not intended to represent any real individual, company, product, or event. Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications. Intel, the Intel logo, Celeron, Intel Centrino, Intel NetBurst, Intel Xeon, Itanium, Pentium, MMX, and VTune are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. -

Using Intel® Math Kernel Library and Intel® Integrated Performance Primitives in the Microsoft* .NET* Framework

Using Intel® MKL and Intel® IPP in Microsoft* .NET* Framework Using Intel® Math Kernel Library and Intel® Integrated Performance Primitives in the Microsoft* .NET* Framework Document Number: 323195-001US.pdf 1 Using Intel® MKL and Intel® IPP in Microsoft* .NET* Framework INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The information here is subject to change without notice. Do not finalize a design with this information. The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. -

Inside Intel

Inside Intel. BusinessWeek cover story, January 9, 2006. http://www.businessweek.com/magazine/content/06_02/b3966001.htm Paul Otellini's plan will send the chipmaker into uncharted territory. And founder Andy Grove applauds the shift. Even the gentle clinking of silverware stopped dead. Andrew S. Grove, the revered former Intel Corp. (INTC) chief executive and now a senior adviser, had stepped up to the microphone in a hotel ballroom down the street from Intel's Santa Clara (Calif.) headquarters, preparing to respond to a startling presentation by new Chief Marketing Officer Eric B. Kim. All too familiar with Grove's legendary wrath, many of the 300 top managers at the Oct. 20 gathering tensed in their seats as they waited for a tongue-lashing of epic proportions. "No one knew what to think," recalls one attendee.

MOTOROLA FLIPSIDE with MOTOBLUR AT&T User's Guide

MOTOROLA FLIPSIDE™ with MOTOBLUR™ User's Guide. Your Phone. [Figure: phone diagram labeling the 3.5mm headset jack, the Power/Sleep key (hold = power, press = sleep), the volume keys, the Micro USB connector (charger or PC), the camera key, the microphone, the touch pad, the Menu, Home, Search, and Back keys, and the keyboard keys Shift, Alternate, Delete, Symbols, Space, New Line, and Scroll/Select.] Most of what you need is in the touchscreen and the keys below it ("Menu, Home, Search, & Back Keys" on page 9). Tip: Your phone can automatically switch to vibrate mode whenever you place it face-down. To change this, touch Menu > Settings > Sound & display > Smart Profile: Face Down to Silence Ringer. Note: Your phone might look different. Contents: Device Setup 2, Calls 4, Home Screen 7, Keys 9, Text Entry 10, Voice Input & Search 12, Ringtones & Settings 12, Synchronize 13, Contacts 14, Social Networking 17, Email & Text Messages 19, Tools 22, Photos & Videos 23, Apps & Updates 26, Location Apps (GPS) 27. Device Setup: Assemble & Charge. 1. Cover off 2. SIM in 3. Battery in 4. Cover on 5. Charge up 6.

Multiprocessing in Mobile Platforms: the Marketing and the Reality

Multiprocessing in Mobile Platforms: the Marketing and the Reality Marco Cornero, Andreas Anyuru ST-Ericsson Introduction During the last few years the mobile platforms industry has aggressively introduced multiprocessing in response to the ever increasing performance demanded by the market. Given the highly competitive nature of the mobile industry, multiprocessing has been adopted as a marketing tool. This highly visible, differentiating feature is used to pass the oversimplified message that more processors mean better platforms and performance. The reality is much more complex, because several interdependent factors are at play in determining the efficiency of multiprocessing platforms (such as the effective impact on software performance, chip frequency, area and power consumption) with the number of processors impacting only partially the overall balance, and with contrasting effects linked to the underlying silicon technologies. In this article we illustrate and compare the main technological options available in multiprocessing for mobile platforms, highlighting the synergies between multiprocessing and the disruptive FD-SOI silicon technology used in the upcoming ST-Ericsson products. We will show that compared to personal computers (PCs), the performance of single-processors in mobile platforms is still growing and how, from a software performance perspective, it is more profitable today to focus on faster dual-core rather than slower quad-core processors. We will also demonstrate how FD-SOI benefits dual-core processors even more by providing higher frequency for the same power consumption, together with a wider range of energy efficient operating modes. All of this results in a simpler, cheaper and more effective solution than competing homogeneous and heterogeneous quad-processors. -

Animation Techniques and Terms

Multimedia, 2D animation. Animation techniques and terms. Many of the same techniques and terms are used in both 2D and 3D animation. Some of them are explained below. Keyframe: A keyframe is a frame on which the author specifies the exact position, size, shape, color, and so on of the object(s) at a given moment in time. The frames between two keyframes are usually generated by the animation program as the result of complex calculations. [Figure: Keyframe 1, Keyframe 2] In traditional animation, the lead artists draw the necessary keyframes and their assistants draw everything else. Tracing: Tracing is a method of creating a new frame by copying (tracing) the previous frame or, sometimes, the next one. Several methods are used to display the image being copied, including showing the underlying image in a different color, often blue. Tweening: Tweening is a technique that generates a user-specified number of frames between the given keyframes, over which a gradual transition from the first keyframe to the second takes place. Taking the differences between the two keyframes into account, the computer calculates for each frame the proportional changes in the objects' position, size, color, shape, and so on. The more frames are generated, the smaller the differences between consecutive frames and the smoother the motion. [Figure: Keyframe 1, generated frames, Keyframe 2] Rendering: Rendering is the process in which the wireframe models of objects (wireframe, sometimes also mesh) are covered with surface textures, and lighting effects and colors are added to them, in order to obtain a realistic
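The tweening description in this excerpt amounts to proportional interpolation between two keyframes. The following is a minimal sketch of that idea, assuming simple linear interpolation; the `Keyframe` class, its fields, and the `tween` function are hypothetical names chosen for illustration, and real animation programs typically offer other interpolation curves as well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyframe:
    """Hypothetical keyframe: object position and size at one moment."""
    x: float
    y: float
    size: float


def tween(start: Keyframe, end: Keyframe, n_frames: int) -> list[Keyframe]:
    """Generate n_frames in-between frames (the keyframes themselves excluded).

    Each generated frame moves every property a proportional step of the
    way from the start keyframe to the end keyframe (linear interpolation).
    """
    frames = []
    for i in range(1, n_frames + 1):
        t = i / (n_frames + 1)  # fraction of the way from start to end
        frames.append(
            Keyframe(
                x=start.x + (end.x - start.x) * t,
                y=start.y + (end.y - start.y) * t,
                size=start.size + (end.size - start.size) * t,
            )
        )
    return frames


if __name__ == "__main__":
    for frame in tween(Keyframe(0, 0, 1), Keyframe(8, 4, 3), 3):
        print(frame)
```

Requesting more in-between frames makes `t` advance in smaller increments, which is why consecutive frames differ less and the motion looks smoother.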

Stochastic Modeling and Performance Analysis of Multimedia Socs

Stochastic Modeling and Performance Analysis of Multimedia SoCs. Balaji Raman, Ayoub Nouri, Deepak Gangadharan, Marius Bozga, Ananda Basu, Mayur Maheshwari, Jérôme Milan, Axel Legay, Saddek Bensalem, Samarjit Chakraborty. To cite this version: Balaji Raman, Ayoub Nouri, Deepak Gangadharan, Marius Bozga, Ananda Basu, et al. Stochastic Modeling and Performance Analysis of Multimedia SoCs. SAMOS XIII - Embedded Computer Systems: Architectures, Modeling, and Simulation, Jul 2013, Agios Konstantinos, Samos Island, Greece. pp.145-154, 10.1109/SAMOS.2013.6621117. hal-00878094. HAL Id: hal-00878094 https://hal.archives-ouvertes.fr/hal-00878094 Submitted on 29 Oct 2013. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Author affiliations: Balaji Raman (1), Ayoub Nouri (1), Deepak Gangadharan (2), Marius Bozga (1), Ananda Basu (1), Mayur Maheshwari (1), Jérôme Milan (3), Axel Legay (4), Saddek Bensalem (1), and Samarjit Chakraborty (5). 1: VERIMAG, France; 2: Technical University of Denmark; 3: Ecole Polytechnique, France; 4: INRIA Rennes, France; 5: Technical University of Munich, Germany. E-mail: [email protected] Abstract: Quality of video and audio output is a design-time constraint for portable multimedia devices. [...] to estimate buffer size for an acceptable output quality.

Intel® Desktop Board DG965PZ Technical Product Specification

Intel® Desktop Board DG965PZ Technical Product Specification. August 2006. Order Number: D56009-001US. The Intel® Desktop Board DG965PZ may contain design defects or errors known as errata that may cause the product to deviate from published specifications. Current characterized errata are documented in the Intel Desktop Board DG965PZ Specification Update. Revision History: Revision -001, first release of the Intel® Desktop Board DG965PZ Technical Product Specification, August 2006. This product specification applies to only the standard Intel® Desktop Board DG965PZ with BIOS identifier MQ96510A.86A. Changes to this specification will be published in the Intel Desktop Board DG965PZ Specification Update before being incorporated into a revision of this document. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. INTEL PRODUCTS ARE NOT INTENDED FOR USE IN MEDICAL, LIFE SAVING, OR LIFE SUSTAINING APPLICATIONS. Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights.

OUTPUT-WSIB Voting Report

2006 Proxy Voting Report

3M Company. Ticker: MMM. Security ID: CUSIP9 88579Y101. Meeting Date: 05/09/2006. Meeting Status: Voted.

| Issue No. | Description | Proponent | Mgmt Rec | Vote Cast | For/Agnst Mgmt |
|---|---|---|---|---|---|
| 1.1 | Elect Linda Alvarado | Mgmt | For | For | For |
| 1.2 | Elect Edward Liddy | Mgmt | For | For | For |
| 1.3 | Elect Robert Morrison | Mgmt | For | For | For |
| 1.4 | Elect Aulana Peters | Mgmt | For | For | For |
| 2 | Ratification of Auditor | Mgmt | For | For | For |
| 3 | Amendment to Certificate of Incorporation to Declassify the Board | Mgmt | For | For | For |
| 4 | STOCKHOLDER PROPOSAL REGARDING EXECUTIVE COMPENSATION | ShrHoldr | Against | Against | For |
| 5 | STOCKHOLDER PROPOSAL REGARDING 3M'S ANIMAL WELFARE POLICY | ShrHoldr | Against | Against | For |
| 6 | STOCKHOLDER PROPOSAL REGARDING 3M'S BUSINESS OPERATIONS IN CHINA | ShrHoldr | Against | Against | For |

Abbott Laboratories Inc. Ticker: ABT. Security ID: CUSIP9 002824100. Meeting Date: 04/28/2006. Meeting Status: Voted.

| Issue No. | Description | Proponent | Mgmt Rec | Vote Cast | For/Agnst Mgmt |
|---|---|---|---|---|---|
| 1.1 | Elect Roxanne Austin | Mgmt | For | For | For |
| 1.2 | Elect William Daley | Mgmt | For | For | For |
| 1.3 | Elect W. Farrell | Mgmt | For | For | For |
| 1.4 | Elect H. Laurance Fuller | Mgmt | For | For | For |
| 1.5 | Elect Richard Gonzalez | Mgmt | For | For | For |
| 1.6 | Elect Jack Greenberg | Mgmt | For | For | For |
| 1.7 | Elect David Owen | Mgmt | For | For | For |
| 1.8 | Elect Boone Powell, Jr. | Mgmt | For | For | For |
| 1.9 | Elect W. Ann Reynolds | Mgmt | For | For | For |
| 1.10 | Elect Roy Roberts | Mgmt | For | For | For |
| 1.11 | Elect William Smithburg | Mgmt | For | For | For |
| 1.12 | Elect John Walter | Mgmt | For | For | For |
| 1.13 | Elect Miles White | Mgmt | For | For | For |
| 2 | RATIFICATION OF DELOITTE & TOUCHE LLP AS AUDITORS. | Mgmt | For | For | For |
| 3 | SHAREHOLDER PROPOSAL - PAY-FOR-SUPERIOR-PERFORMANCE | ShrHoldr | Against | Against | For |
| 4 | SHAREHOLDER PROPOSAL - POLITICAL CONTRIBUTIONS | ShrHoldr | Against | Against | For |
| 5 | SHAREHOLDER PROPOSAL - THE ROLES OF CHAIR AND CEO . | | | | |

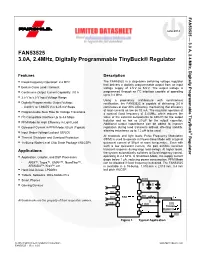

FAN53525 3.0 A, 2.4 MHz, Digitally Programmable TinyBuck® Regulator

FAN53525: 3.0 A, 2.4 MHz, Digitally Programmable TinyBuck® Regulator. June 2014. Features: Fixed-Frequency Operation: 2.4 MHz; Best-in-Class Load Transient; Continuous Output Current Capability: 3.0 A; 2.5 V to 5.5 V Input Voltage Range; Digitally Programmable Output Voltage: 0.600 V to 1.39375 V in 6.25 mV Steps; Programmable Slew Rate for Voltage Transitions; I²C-Compatible Interface Up to 3.4 Mbps; PFM Mode for High Efficiency in Light Load; Quiescent Current in PFM Mode: 50 µA (Typical); Input Under-Voltage Lockout (UVLO); Thermal Shutdown and Overload Protection. Description: The FAN53525 is a step-down switching voltage regulator that delivers a digitally programmable output from an input voltage supply of 2.5 V to 5.5 V. The output voltage is programmed through an I²C interface capable of operating up to 3.4 MHz. Using a proprietary architecture with synchronous rectification, the FAN53525 is capable of delivering 3.0 A continuous at over 80% efficiency, maintaining that efficiency at load currents as low as 10 mA. The regulator operates at a nominal fixed frequency of 2.4 MHz, which reduces the value of the external components to 330 nH for the output inductor and as low as 20 µF for the output capacitor. Additional output capacitance can be added to improve regulation during load transients without affecting stability, allowing inductance up to 1.2 µH to be used. At moderate and light loads, Pulse Frequency Modulation (PFM) is used to operate in Power-Save Mode with a typical
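The stated output range (0.600 V to 1.39375 V in 6.25 mV steps) works out to 127 steps, which would fit a 7-bit setting. The sketch below shows that arithmetic under the assumption of a plain linear code starting at 0; the excerpt does not give the actual register layout, so the function names and the encoding are illustrative only and not the device's documented interface.

```python
# Values taken from the excerpt, held in integer microvolts to avoid
# floating-point rounding.
V_MIN_UV = 600_000     # 0.600 V
V_MAX_UV = 1_393_750   # 1.39375 V
STEP_UV = 6_250        # 6.25 mV

# Assumption: a linear code where 0 maps to V_MIN_UV. Not from the datasheet.
MAX_CODE = (V_MAX_UV - V_MIN_UV) // STEP_UV


def code_to_microvolts(code: int) -> int:
    """Map a hypothetical linear setting code to an output voltage in µV."""
    if not 0 <= code <= MAX_CODE:
        raise ValueError(f"code must be in 0..{MAX_CODE}")
    return V_MIN_UV + code * STEP_UV


def microvolts_to_code(target_uv: int) -> int:
    """Return the largest code whose voltage does not exceed target_uv."""
    if not V_MIN_UV <= target_uv <= V_MAX_UV:
        raise ValueError("target voltage is outside the programmable range")
    return (target_uv - V_MIN_UV) // STEP_UV


if __name__ == "__main__":
    code = microvolts_to_code(1_000_000)
    print(code, code_to_microvolts(code))
```

Rounding down in `microvolts_to_code` is a design choice made here so that a requested voltage is never exceeded; a real driver would follow the register map in the full datasheet.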

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science, Vol. 3, No. 2, 2018, pp. 29-38. http://www.aiscience.org/journal/ijeecs ARM Processor Architecture: An Emerging Architecture in Smart Phones. Naseer Ahmad, Muhammad Waqas Boota*, Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan. Abstract: ARM is a 32-bit RISC processor architecture. It is developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors have a unique combination of features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip has several peripheral controllers, a digital signal processor, and an ARM core. ARM processors consume less power but provide high performance. Nowadays, the ARM Cortex series is very popular in smartphone devices. We will also see the important characteristics of the Cortex series. We discuss the ARM processor and system on a chip (SoC) designs, including the Qualcomm Snapdragon, nVidia Tegra, and Apple systems on chips. In this paper, we discuss the features of the ARM processor and the Intel Atom processor and see which processor is best. Finally, we will discuss the future of the ARM processor in smartphone devices. Keywords: RISC, ISA, ARM Core, System on a Chip (SoC). Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018. © 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license.

SIMD Extensions

SIMD Extensions. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 12 May 2012 17:14:46 UTC. Contents. Articles: SIMD; MMX (instruction set); 3DNow!; Streaming SIMD Extensions; SSE2; SSE3; SSSE3; SSE4; SSE5; Advanced Vector Extensions; CVT16 instruction set; XOP instruction set. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License. SIMD. Flynn's taxonomy: single instruction, single data (SISD); multiple instruction, single data (MISD); single instruction, multiple data (SIMD); multiple instruction, multiple data (MIMD). Single instruction, multiple data (SIMD) is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously. Thus, such machines exploit data-level parallelism. History: The first use of SIMD instructions was in vector supercomputers of the early 1970s such as the CDC Star-100 and the Texas Instruments ASC, which could operate on a vector of data with a single instruction. Vector processing was especially popularized by Cray in the 1970s and 1980s. Vector-processing architectures are now considered separate from SIMD machines, based on the fact that vector machines processed the vectors one word at a time through pipelined processors (though still based on a single instruction), whereas modern SIMD machines process all elements of the vector simultaneously.[1] The first era of modern SIMD machines was characterized by massively parallel processing-style supercomputers such as the Thinking Machines CM-1 and CM-2. These machines had many limited-functionality processors that would work in parallel.