Technology Innovation and the Future of Air Force Intelligence Analysis

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Biography: United States Air Force

BIOGRAPHY: UNITED STATES AIR FORCE. LIEUTENANT GENERAL TIMOTHY D. HAUGH. Lt. Gen. Timothy D. Haugh is the Commander, Sixteenth Air Force; Commander, Air Forces Cyber; and Commander, Joint Force Headquarters-Cyber, Joint Base San Antonio-Lackland, Texas. General Haugh is responsible for more than 44,000 personnel conducting worldwide operations. Sixteenth Air Force Airmen deliver multisource intelligence, surveillance, and reconnaissance products, applications, capabilities and resources. In addition, they build, extend, operate, secure and defend the Air Force portion of the Department of Defense global network. Furthermore, Joint Force Headquarters-Cyber personnel perform operational planning as part of coordinated efforts to support Air Force component and combatant commanders and, upon approval of the President and/or Secretary of Defense, the execution of offensive cyberspace operations. In his position as Sixteenth Air Force Commander, General Haugh also serves as the Commander of the Service Cryptologic Component. In this capacity, he is responsible to the Director, National Security Agency, and Chief, Central Security Service, as the Air Force’s sole authority for matters involving the conduct of cryptologic activities, including the spectrum of missions related to tactical warfighting and national-level operations. The general leads the global information warfare activities spanning cyberspace operations, intelligence, targeting, and weather for nine wings, one technical center, and an operations center. General Haugh received his commission in 1991, as a distinguished graduate of the ROTC program at Lehigh University. He has served in a variety of intelligence and cyber command and staff assignments. -

Lecture Note on Deep Learning and Quantum Many-Body Computation

Lecture Note on Deep Learning and Quantum Many-Body Computation. Jin-Guo Liu, Shuo-Hui Li, and Lei Wang∗, Institute of Physics, Chinese Academy of Sciences, Beijing 100190, China. November 23, 2018. Abstract: This note introduces deep learning from a computational quantum physicist’s perspective. The focus is on deep learning’s impact on quantum many-body computation, and vice versa. The latest version of the note is at http://wangleiphy.github.io/. Please send comments, suggestions and corrections to the email address below. ∗ [email protected] CONTENTS: 1 Introduction; 2 Discriminative Learning (2.1 Data Representation; 2.2 Model: Artificial Neural Networks; 2.3 Cost Function; 2.4 Optimization; 2.4.1 Back Propagation; 2.4.2 Gradient Descent; 2.5 Understanding, Visualization and Applications Beyond Classification); 3 Generative Modeling (3.1 Unsupervised Probabilistic Modeling; 3.2 Generative Model Zoo; 3.2.1 Boltzmann Machines; 3.2.2 Autoregressive Models; 3.2.3 Normalizing Flow; 3.2.4 Variational Autoencoders; 3.2.5 Tensor Networks; 3.2.6 Generative Adversarial Networks; 3.3 Summary); 4 Applications to Quantum Many-Body Physics and More (4.1 Material and Chemistry Discoveries; 4.2 Density Functional Theory; 4.3 “Phase” Recognition; 4.4 Variational Ansatz; 4.5 Renormalization Group; 4.6 Monte Carlo Update Proposals; 4.7 Tensor Networks; 4.8 Quantum Machine Learning; 4.9 Miscellaneous); 5 Hands-on Session (5.1 Computation Graph and Back Propagation; 5.2 Deep Learning Libraries; 5.3 Generative Modeling using Normalizing Flows; 5.4 Restricted Boltzmann Machine for Image Restoration; 5.5 Neural Network as a Quantum Wave Function Ansatz); 6 Challenges Ahead; 7 Resources; Bibliography. 1 INTRODUCTION Deep Learning (DL) ⊂ Machine Learning (ML) ⊂ Artificial Intelligence (AI). -

White Knight Review Chess E-Magazine January/February - 2012 Table of Contents

White Knight Review Chess E-Magazine, Interactive E-Magazine, Volume 3 • Issue 1, January/February 2012. On the cover: Chess Gambits; The Immortal Game (Anderssen vs. Kieseritzky); Canada and Chess; Bill Wall’s Top 10 Chess Software Programs. © Seraphim Press. Table of Contents: Editorial~ “My Move”; Feature~ Chess and Canada; Article~ Bill Wall’s Top 10 Software Programs; Feature~ The Incomparable Kasparov; Article~ Chess Variants (Unorthodox Chess Variations); Feature~ Proof Games; Article~ The Immortal Game (Anderssen vs. Kieseritzky); Article~ News Around the World; Book Review~ Kasparov on Kasparov, Pt. One, 1973-1985; Feature~ Chess Gambits; Annotated Game~ Bareev vs. Kasparov; Commentary~ “Ask Bill”. INTERACTIVE CONTENT: • Click on title in Table of Contents to move directly to page. • Click on “White Knight Review” on the top of each page to return to Table of Contents. • Click on red type to continue to next page. • Click on ads to go to their websites. • Click on email to open up email program. • Click on URLs to go to websites. My Move. Editorial - Jerry Wall [email protected] Well, it has been over a year now since we started this publication. It is not easy putting together a 32 page magazine on chess every couple of months but it certainly has been rewarding (maybe not so much financially but then that really never was the goal). -

AI Computer Wraps up 4-1 Victory Against Human Champion Nature Reports from Alphago's Victory in Seoul

The Go Files: AI computer wraps up 4-1 victory against human champion Nature reports from AlphaGo's victory in Seoul. Tanguy Chouard 15 March 2016 SEOUL, SOUTH KOREA Google DeepMind Lee Sedol, who has lost 4-1 to AlphaGo. Tanguy Chouard, an editor with Nature, saw Google-DeepMind’s AI system AlphaGo defeat a human professional for the first time last year at the ancient board game Go. This week, he is watching top professional Lee Sedol take on AlphaGo, in Seoul, for a $1 million prize. It’s all over at the Four Seasons Hotel in Seoul, where this morning AlphaGo wrapped up a 4-1 victory over Lee Sedol — incidentally, earning itself and its creators an honorary '9-dan professional' degree from the Korean Baduk Association. After winning the first three games, Google-DeepMind's computer looked impregnable. But the last two games may have revealed some weaknesses in its makeup. Game four totally changed the Go world’s view on AlphaGo’s dominance because it made it clear that the computer can 'bug' — or at least play very poor moves when on the losing side. It was obvious that Lee felt under much less pressure than in game three. And he adopted a different style, one based on taking large amounts of territory early on rather than immediately going for ‘street fighting’ such as making threats to capture stones. This style – called ‘amashi’ – seems to have paid off, because on move 78, Lee produced a play that somehow slipped under AlphaGo’s radar. David Silver, a scientist at DeepMind who's been leading the development of AlphaGo, said the program estimated its probability as 1 in 10,000. -

Accelerators and Obstacles to Emerging ML-Enabled Research Fields

Life at the edge: accelerators and obstacles to emerging ML-enabled research fields. Soukayna Mouatadid and Steve Easterbrook, Department of Computer Science, University of Toronto ([email protected], [email protected]). Abstract: Machine learning is transforming several scientific disciplines, and has resulted in the emergence of new interdisciplinary data-driven research fields. Surveys of such emerging fields often highlight how machine learning can accelerate scientific discovery. However, a less discussed question is how connections between the parent fields develop and how the emerging interdisciplinary research evolves. This study attempts to answer this question by exploring the interactions between machine learning research and traditional scientific disciplines. We examine different examples of such emerging fields, to identify the obstacles and accelerators that influence interdisciplinary collaborations between the parent fields. 1 Introduction: In recent decades, several scientific research fields have experienced a deluge of data, which is impacting the way science is conducted. Fields such as neuroscience, psychology or social sciences are being reshaped by the advances in machine learning (ML) and the data processing technologies it provides. In some cases, new research fields have emerged at the intersection of machine learning and more traditional research disciplines. This is the case, for example, for bioinformatics, born from collaborations between biologists and computer scientists in the mid-80s [1], which is now considered a well-established field with an active community, specialized conferences, university departments and research groups. How do these transformations take place? Do they follow similar paths? If yes, how can the advances in such interdisciplinary fields be accelerated? Researchers in the philosophy of science have long been interested in these questions. -

The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design

The Deep Learning Revolution and Its Implications for Computer Architecture and Chip Design Jeffrey Dean Google Research [email protected] Abstract The past decade has seen a remarkable series of advances in machine learning, and in particular deep learning approaches based on artificial neural networks, to improve our abilities to build more accurate systems across a broad range of areas, including computer vision, speech recognition, language translation, and natural language understanding tasks. This paper is a companion paper to a keynote talk at the 2020 International Solid-State Circuits Conference (ISSCC) discussing some of the advances in machine learning, and their implications on the kinds of computational devices we need to build, especially in the post-Moore’s Law-era. It also discusses some of the ways that machine learning may also be able to help with some aspects of the circuit design process. Finally, it provides a sketch of at least one interesting direction towards much larger-scale multi-task models that are sparsely activated and employ much more dynamic, example- and task-based routing than the machine learning models of today. Introduction The past decade has seen a remarkable series of advances in machine learning (ML), and in particular deep learning approaches based on artificial neural networks, to improve our abilities to build more accurate systems across a broad range of areas [LeCun et al. 2015]. Major areas of significant advances include computer vision [Krizhevsky et al. 2012, Szegedy et al. 2015, He et al. 2016, Real et al. 2017, Tan and Le 2019], speech recognition [Hinton et al. -

2.) COMMUNITY COLLEGE: Maricopa Co

GENERAL STUDIES COURSE PROPOSAL COVER FORM (ONE COURSE PER FORM) 1.) DATE: 3/26/19 2.) COMMUNITY COLLEGE: Maricopa Co. Comm. College District 3.) PROPOSED COURSE: Prefix: GST Number: 202 Title: Games, Culture, and Aesthetics Credits: 3 CROSS LISTED WITH: Prefix: Number: ; Prefix: Number: ; Prefix: Number: ; Prefix: Number: ; Prefix: Number: ; Prefix: Number: . 4.) COMMUNITY COLLEGE INITIATOR: KEITH ANDERSON PHONE: 480-654-7300 EMAIL: [email protected] ELIGIBILITY: Courses must have a current Course Equivalency Guide (CEG) evaluation. Courses evaluated as NT (non-transferable) are not eligible for the General Studies Program. MANDATORY REVIEW: The above specified course is undergoing Mandatory Review for the following Core or Awareness Area (only one area is permitted; if a course meets more than one Core or Awareness Area, please submit a separate Mandatory Review Cover Form for each Area). POLICY: The General Studies Council (GSC) Policies and Procedures requires the review of previously approved community college courses every five years, to verify that they continue to meet the requirements of Core or Awareness Areas already assigned to these courses. This review is also necessary as the General Studies program evolves. AREA(S) PROPOSED COURSE WILL SERVE: A course may be proposed for more than one core or awareness area. Although a course may satisfy a core area requirement and an awareness area requirement concurrently, a course may not be used to satisfy requirements in two core or awareness areas simultaneously, even if approved for those areas. With departmental consent, an approved General Studies course may be counted toward both the General Studies requirements and the major program of study. -

Simon Lindh: Online Computer Game English (English C-Level Essay)

Estetisk-filosofiska fakulteten (Faculty of Arts and Education). Simon Lindh. Online computer game English: A study on the language found in World of Warcraft. English C-level essay. Term: Spring term 2009. Supervisor: Moira Linnarud. Karlstads universitet, 651 88 Karlstad, Tel. 054-700 10 00, Fax 054-700 14 60, [email protected] www.kau.se Abstract. Title: Online computer game English - A study on the language found in World of Warcraft. Author: Simon Lindh. English C, 2009. Number of pages: 27. Abstract: The aim of this study is to examine the language from a small sample of texts from the chat channels of World of Warcraft and analyze the differences found between World of Warcraft English and Standard English. In addition, the study will compare the language found in World of Warcraft with language found on other parts of the Internet, especially chatgroups. Based on 1045 recorded chat messages, this study examines the use of abbreviations, emoticons, vocabulary, capitalization, spelling, multiple letter use and the use of rare characters. The results of the investigation show that the language of World of Warcraft differs from Standard English in several respects, primarily in the use of abbreviations. This is supported by secondary sources. The results also show that the use of language is probably not based on the desire to deliver a message quickly, but rather to reach out to people. In addition, the results show that the language found in World of Warcraft is more advanced than a simple effort to try to imitate speech, thereby performing more than written speech. Keywords: computer game, World of Warcraft, Internet language, Netspeak, abbreviation, emoticons. Table of contents 1. -

United States Air Force and Its Antecedents Published and Printed Unit Histories

UNITED STATES AIR FORCE AND ITS ANTECEDENTS: PUBLISHED AND PRINTED UNIT HISTORIES. A BIBLIOGRAPHY. EXPANDED & REVISED EDITION, compiled by James T. Controvich, January 2001. TABLE OF CONTENTS. CHAPTERS: User's Guide; I. Named Commands; II. Numbered Air Forces; III. Numbered Commands; IV. Air Divisions; V. Wings; VI. Groups; VII. Squadrons; VIII. Aviation Engineers; IX. Womens Army Corps -

1999/1 Layout

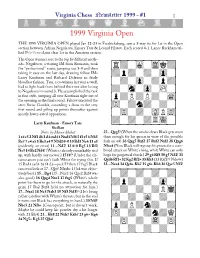

Virginia Chess Newsletter 1999 - #1. 1999 Virginia Open. THE 1999 VIRGINIA OPEN, played Jan 22-24 in Fredericksburg, saw a 3-way tie for 1st in the Open section between Adrian Negulescu, Emory Tate & Leonid Filatov. Each scored 4-1. Lance Rackham tallied 5½-½ to claim clear 1st in the Amateur section. The Open winners rose to the top by different methods. Negulescu, a visiting IM from Rumania, took the “professional” route, jumping out 3-0 and then taking it easy on the last day, drawing fellow IMs Larry Kaufman and Richard Delaune in fairly bloodless fashion. Tate, a co-winner last year as well, had to fight back from behind this time after losing to Negulescu in round 3. He accomplished the task in fine style, jumping all over Kaufman right out of the opening in the final round. Filatov executed the semi Swiss Gambit, conceding a draw in the very first round and piling up points thereafter against mostly lower-rated opposition. Larry Kaufman - Emory Tate, Sicilian. Notes by Macon Shibut. 1 e4 c5 2 Nf3 d6 3 d4 cxd4 4 Nxd4 Nf6 5 f3 e5 6 Nb3 Be7 7 c4 a5 8 Be3 a4 9 N3d2 0-0 10 Bd3 Nc6 11 a3 (evidently an error) 11...Nd7! 12 0-0 Bg5 13 Bf2 Nc5 14 Bc2 Nd4! (White is already remarkably tied up, with hardly any moves.) 15 f4!? (Under the circumstances you can’t fault White for trying this. [...] [Chess diagram] 25...Qxg5! (When the smoke clears Black gets more than enough for his queen in view of the possible fork on e4) 26 Qxg5 Rxf2 27 Rxf2 Nxf2 28 Qxg6 Nfxe4 (Now Black will regroup his pieces for a combined attack on White’s king, while White can only hope for perpetual check.) 29 g4 Rf8 30 g5 Nd2! 31 -

Air Force Sexual Assault Court-Martial Summaries 2010 – March 2015

Air Force Sexual Assault Court-Martial Summaries 2010 – March 2015. The Air Force is committed to preventing, deterring, and prosecuting sexual assault in its ranks. This report contains a synopsis of sexual assault cases taken to trial by court-martial. The information contained herein is a matter of public record. This is the final report of this nature the Air Force will produce. All results of general and special courts-martial for trials occurring after 1 April 2015 will be available on the Air Force’s Court-Martial Docket Website (www.afjag.af.mil/docket/index.asp). SIGNIFICANT AIR FORCE SEXUAL ASSAULT CASE SUMMARIES 2010 – March 2015 Note: This report lists cases involving a conviction for a sexual assault offense committed against an adult and also includes cases where a sexual assault offense against an adult was charged and the member was either acquitted of a sexual assault offense or the sexual assault offense was dismissed, but the member was convicted of another offense involving a victim. The Air Force publishes these cases for deterrence purposes. Sex offender registration requirements are governed by Department of Defense policy in compliance with federal and state sex offender registration requirements. Not all convictions included in this report require sex offender registration. Beginning with July 2014 cases, this report also indicates when a victim was represented by a Special Victims’ Counsel. Under the Uniform Code of Military Justice, sexual assaults against those 16 years of age and older are charged as crimes against adults. The appropriate disposition of sexual assault allegations and investigations may not always include referral to trial by court-martial. -

4407.pdf (6740 KB)

Deliberate Force: A Case Study in Effective Air Campaigning. Final Report of the Air University Balkans Air Campaign Study. Edited by Col Robert C. Owen, USAF. Air University Press, Maxwell Air Force Base, Alabama, January 2000. Library of Congress Cataloging-in-Publication Data: Deliberate force : a case study in effective air campaigning : final report of the Air University Balkans air campaign study / edited by Robert C. Owen. p. cm. Includes bibliographical references and index. ISBN 1-58566-076-0 1. Yugoslav War, 1991–1995—Aerial operations. 2. Yugoslav War, 1991–1995—Campaigns—Bosnia and Hercegovina. 3. Yugoslav War, 1991–1995—Foreign participation. 4. Peacekeeping forces—Bosnia and Hercegovina. 5. North Atlantic Treaty Organization—Armed Forces—Aviation. 6. Bosnia and Hercegovina—History, Military. I. Owen, Robert C., 1951– DR1313.7.A47 D45 2000 949.703—dc21 99-087096 Disclaimer: Opinions, conclusions, and recommendations expressed or implied within are solely those of the authors and do not necessarily represent the views of Air University, the United States Air Force, the Department of Defense, or any other US government agency. Cleared for public release: distribution unlimited. Contents: Disclaimer; Foreword; About the Editor; Preface; 1 The Demise of Yugoslavia and the Destruction of Bosnia: Strategic Causes, Effects, and Responses (Dr. Karl Mueller); 2 The Planning Background (Lt Col Bradley S. Davis); 3 US and NATO Doctrine for Campaign Planning (Col Maris McCrabb); 4 The Deliberate Force Air Campaign Plan (Col Christopher M. Campbell); 5 Executing Deliberate Force, 30 August–14 September 1995 (Lt Col Mark J.