Art Styles in Computer Games and the Default Bias



Recommended publications


Art Styles in Computer Games and the Default Bias

University of Huddersfield Repository

Jarvis, Nathan

Photorealism versus Non-Photorealism: Art styles in computer games and the default bias.

Original Citation: Jarvis, Nathan (2013) Photorealism versus Non-Photorealism: Art styles in computer games and the default bias. Masters thesis, University of Huddersfield.

This version is available at http://eprints.hud.ac.uk/id/eprint/19756/

The University Repository is a digital collection of the research output of the University, available on Open Access. Copyright and Moral Rights for the items on this site are retained by the individual author and/or other copyright owners. Users may access full items free of charge; copies of full text items generally can be reproduced, displayed or performed and given to third parties in any format or medium for personal research or study, educational or not-for-profit purposes without prior permission or charge, provided:

• The authors, title and full bibliographic details is credited in any copy;
• A hyperlink and/or URL is included for the original metadata page; and
• The content is not changed in any way.

For more information, including our policy and submission procedure, please contact the Repository Team at: [email protected]. http://eprints.hud.ac.uk/

THE UNIVERSITY OF HUDDERSFIELD

Photorealism versus Non-Photorealism: Art styles in computer games and the default bias.

Master of Research (MRes) Thesis. Nathan Jarvis - U0859020010. 18/09/2013. Supervisor: Daryl Marples. Co-Supervisor: Duke Gledhill.

1.0.0 – Contents.

1.0.0 – Contents.
2.0.0 – Abstract.
2.1.0 – Literature Review.
2.2.0 – Summary of Changes (September 2013).

Modelo Integral Para Entorno De Realidad Virtual Y Agentes

MINERVA: Modelo Integral para Entornos de Realidad Virtual y Agentes (Integral Model for Virtual Reality Environments and Agents). Doctoral thesis, Universidad de Alicante, Departamento de Ciencia de la Computación e Inteligencia Artificial. Author: Gabriel López García. Supervisor: Rafael Molina Carmona. Alicante, September 2014.

Abstract. One of the most important problems in virtual reality systems is the diversity of the visual and interaction devices that exist today. In addition, the heterogeneity of graphics engines, physics engines and artificial intelligence engines means that no model brings all of these aspects together in an integral and coherent way. To unify this diversity, we present a formal model that addresses the problem of diversity in virtual reality systems as a whole, together with a definition of the main modules that make up such systems. The proposed model is based on the definition of several grammars that integrate the system's activity, its visualization and its interaction with the user. The description of a world is presented as a string of a language L(M) that represents an ordered sequence of agents, which accept events and define the activity of the system. The visualization of the current state of the system, in turn, is translated into another string, of the visualization language L(V), which is composed of primitives and transformations. The concepts of primitive and transformation are much broader than is usual in these systems.

Commodore Floppy Entwicklung 5. C64 Stammtisch in Wien Amiga

SCACOM Aktuell, February 2010, Issue 16, www.scacom.de.vu

In this issue: Commodore floppy development; 5th C64 meetup in Vienna; Amiga 3000 EDS case; Artillery Duel; Chip production; Did you know…?

This is already the 16th issue of SCACOM Aktuell. We start 2010 on a positive note with new wallpapers and ideas. This time there is a completely new series of wallpapers, which we call "Elegance". It presents rendered Commodore hardware on a reflective surface, in 16:9 Full HD resolution at 1080p! Other formats are available as well.

We have also started a series of retrospectives in the news section, intended to show what occupied us two years ago and perhaps to tempt you to look at older issues again.

This time there is a lot of information about floppy drives, from the 1540 and its weaknesses to connecting a Commodore floppy drive to the Amiga. And while we are on the subject of the Amiga: a new Amiga, the X1000, has been announced for 2010 (more on this in the news section). We think that sounds like a good start to 2010, and we hope that you will remain well informed this year and satisfied with this and the coming issues of SCACOM Aktuell.

IMPRINT. I pursue no commercial interest whatsoever. SCACOM Aktuell appears every two months and is offered as a free download. You may redistribute the magazine in unmodified form with the copyright notice © Stefan Egger and a link to www.scacom.de.vu. Copyright in the texts remains with the authors of the articles. No reuse without the explicit permission of the respective authors! The name "SCACOM Aktuell" as well as the logo and the layout are subject to the rights of the publisher.

HELP OUT!

Computer Demos—What Makes Them Tick?

AALTO UNIVERSITY, School of Science and Technology, Faculty of Information and Natural Sciences, Department of Media Technology

Markku Reunanen, Computer Demos—What Makes Them Tick? Licentiate Thesis. Helsinki, April 23, 2010. Supervisor: Professor Tapio Takala.

ABSTRACT OF LICENTIATE THESIS

Author: Markku Reunanen. Date: April 23, 2010. Pages: 134. Professorship: Contents Production. Professorship code: T013Z. Supervisor: Professor Tapio Takala. Instructor: -

This licentiate thesis deals with a worldwide community of hobbyists called the demoscene. The activities of the community in question revolve around real-time multimedia demonstrations known as demos. The historical frame of the study spans from the late 1970s, and the advent of affordable home computers, up to 2009. So far little academic research has been conducted on the topic and the number of other publications is almost equally low. The work done by other researchers is discussed and additional connections are made to other related fields of study such as computer history and media research.

The material of the study consists principally of demos, contemporary disk magazines and online sources such as community websites and archives. A general overview of the demoscene and its practices is provided to the reader as a foundation for understanding the more in-depth topics. One chapter is dedicated to the analysis of the artifacts produced by the community and another to the discussion of the computer hardware in relation to the creative aspirations of the community members.

Xerox Corporation 00-00-02

| OUI (hex) | Organization |
|---|---|
| 00-00-00 | XEROX CORPORATION |
| 00-00-01 | XEROX CORPORATION |
| 00-00-02 | XEROX CORPORATION |
| 00-00-03 | XEROX CORPORATION |
| 00-00-04 | XEROX CORPORATION |
| 00-00-05 | XEROX CORPORATION |
| 00-00-06 | XEROX CORPORATION |
| 00-00-07 | XEROX CORPORATION |
| 00-00-08 | XEROX CORPORATION |
| 00-00-09 | XEROX CORPORATION |
| 00-00-0A | OMRON TATEISI ELECTRONICS CO. |
| 00-00-0B | MATRIX CORPORATION |
| 00-00-0C | CISCO SYSTEMS, INC. |
| 00-00-0D | FIBRONICS LTD. |
| 00-00-0E | FUJITSU LIMITED |
| 00-00-0F | NEXT, INC. |
| 00-00-10 | SYTEK INC. |
| 00-00-11 | NORMEREL SYSTEMES |
| 00-00-12 | INFORMATION TECHNOLOGY LIMITED |
| 00-00-13 | CAMEX |
| 00-00-14 | NETRONIX |
| 00-00-15 | DATAPOINT CORPORATION |
| 00-00-16 | DU PONT PIXEL SYSTEMS |
| 00-00-17 | TEKELEC |
| 00-00-18 | WEBSTER COMPUTER CORPORATION |
| 00-00-19 | APPLIED DYNAMICS INTERNATIONAL |
| 00-00-1A | ADVANCED MICRO DEVICES |
| 00-00-1B | NOVELL INC. |
| 00-00-1C | BELL TECHNOLOGIES |
| 00-00-1D | CABLETRON SYSTEMS, INC. |
| 00-00-1E | TELSIST INDUSTRIA ELECTRONICA |
| 00-00-1F | Telco Systems, Inc. |
| 00-00-20 | DATAINDUSTRIER DIAB AB |
| 00-00-21 | SUREMAN COMP. & COMMUN. CORP. |
| 00-00-22 | VISUAL TECHNOLOGY INC. |
| 00-00-23 | ABB INDUSTRIAL SYSTEMS AB |
| 00-00-24 | CONNECT AS |
| 00-00-25 | RAMTEK CORP. |
| 00-00-26 | SHA-KEN CO., LTD. |
| 00-00-27 | JAPAN RADIO COMPANY |
| 00-00-28 | PRODIGY SYSTEMS CORPORATION |
| 00-00-29 | IMC NETWORKS CORP. |

Liberty County to Pay Ex-Health Dept. Director $50,000

THE CALHOUN-LIBERTY JOURNAL. 50¢ includes tax. CLJNews.com. Volume 31, Number 51. Wednesday, Dec. 21, 2011.

How will Santa find your house?

I think he will find my house with his house finder and when he does he is up for a big surprise. BROCK SYKES, Hosford School

Santa will find my house by using his Santa Mobile Detector. His mobile detector device blinks red if there are children in the house. If the detector blinks white that means there are no children at the house. If Santa flies over a house where a naughty child lives the detector will blink indigo! This allows Santa to know where the good boys and girls live and where he should deliver the coal and marbles to the naughty kids! GAIGE LEWIS, Tolar School

He knows it once he sees it. I'm one of his regulars. HELAMAN SHULER, Hosford School

Why do you deserve any gifts this year?

I gave my little brother $2, I stopped people from fighting and I recycle.

Photo caption: Rita Russell Lewis and her son, Christopher, are shown above in their brightly lit yard at Lake Mystic in Bristol. A dramatic deep blue sky spreads behind the Hwy. 12 South home of Jake and Vanell Summers, below, where a pair of lighted deer are poised in the front yard. JOE SUMMERS PHOTOS

"It is special because it was made by a child, and it is special because in some ways it represents the Christmas time we all wish we had each year." TREASURED ORNAMENTS, PAGES 17 & 24

Games Made in Texas (1981 – 2021) Page 1 of 17

Games Made in Texas (1981 – 2021)

| Release Date | Name | Developer | Platform | Location |
|---|---|---|---|---|
| 2021-01-02 | Yes! Our Kids Can Video Game - Third Grade | Yes! Our Kids Can | | San Antonio |
| 2020-09-30 | Wheelchair Mobility Experience | Magnin & Associates (Magnin Games) | iOS, Android, Apple TV, Mac, PC, Xbox One | Dallas; Richardson |
| 2020-09-17 | Shark Tank Tycoon | Game Circus, LLC | | Addison |
| 2020-07-13 | Orcs Must Die! 3 | Robot Entertainment | Stadia currently; Steam / Xbox / PlayStation in 2021 | Plano |
| 2019-12-31 | Path of the Warrior (VR) | Twisted Pixel Games | | Austin |
| 2019-10-29 | Readyset Heroes | Robot Entertainment, Inc. | Mobile | Plano |
| 2019-09-13 | Borderlands 3 | Gearbox Software | Xbox One, Playstation 4, PC | Frisco |
| 2019-08-13 | Vicious Circle | Rooster Teeth | | Austin |
| 2019-08-01 | Stranger Things 3 | BonusXP | Nintendo Switch | Allen |
| 2019-07-11 | Defector | Twisted Pixel Games | Oculus Rift | Austin |
| 2019-06-28 | Fortnight Phase 2 | Armature Studio | Android, PlayStation 4, Nintendo Switch, Xbox One, iOS, Microsoft Windows, Mac, Mobile | Austin |
| 2019-06-21 | Dreadnought 2 | Six Foot Games | Microsoft Windows & PlayStation 4 | Houston |
| 2019-05-31 | Sports Scramble | Armature Studio | Oculus Quest | Austin |
| 2019-05-02 | Duck Game | Armature Studio | Nintendo Switch | Austin |
| 2019-03-15 | Zombie Boss | Boss Fight Entertainment, Inc | | Allen; McKinney |
| 2018-12-31 | Baby Hands | Chicken Waffle | Windows (Steam); Oculus Rift | Austin |
| 2018-12-31 | Baby Hands Jr. | Chicken Waffle | iOS; Android | Austin |
| 2018-12-19 | Arte Lumiere | Triseum | Microsoft Windows | Bryan |
| 2018-11-15 | Dreadnought 1: Command The Colossal | Six Foot, LLC | | Houston |
| 2018-10-10 | World | Armature Studio LLC | | Austin |
| 2018-10-05 | Donkey Kong Country: Tropical Freeze | Retro Studios | | Austin |
| 2018-08-27 | Torn | Aspyr Media Inc. | Windows (Steam); HTC | |

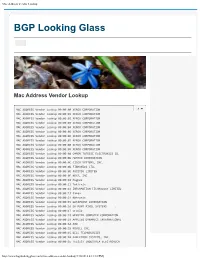

Mac Address Vendor Lookup

| MAC address prefix | Vendor |
|---|---|
| 00:00:00 | XEROX CORPORATION |
| 00:00:01 | XEROX CORPORATION |
| 00:00:02 | XEROX CORPORATION |
| 00:00:03 | XEROX CORPORATION |
| 00:00:04 | XEROX CORPORATION |
| 00:00:05 | XEROX CORPORATION |
| 00:00:06 | XEROX CORPORATION |
| 00:00:07 | XEROX CORPORATION |
| 00:00:08 | XEROX CORPORATION |
| 00:00:09 | XEROX CORPORATION |
| 00:00:0A | OMRON TATEISI ELECTRONICS CO. |
| 00:00:0B | MATRIX CORPORATION |
| 00:00:0C | CISCO SYSTEMS, INC. |
| 00:00:0D | FIBRONICS LTD. |
| 00:00:0E | FUJITSU LIMITED |
| 00:00:0F | NEXT, INC. |
| 00:00:10 | Hughes |
| 00:00:11 | Tektrnix |
| 00:00:12 | INFORMATION TECHNOLOGY LIMITED |
| 00:00:13 | Camex |
| 00:00:14 | Netronix |
| 00:00:15 | DATAPOINT CORPORATION |
| 00:00:16 | DU PONT PIXEL SYSTEMS |
| 00:00:17 | Oracle |
| 00:00:18 | WEBSTER COMPUTER CORPORATION |
| 00:00:19 | APPLIED DYNAMICS INTERNATIONAL |
| 00:00:1A | AMD |
| 00:00:1B | NOVELL INC. |

November 2001

GAME DEVELOPER MAGAZINE, NOVEMBER 2001. 600 Harrison Street, San Francisco, CA 94107. t: 415.947.6000 f: 415.947.6090 www.gdmag.com

Publisher: Jennifer Pahlka

EDITORIAL
Editor-In-Chief: Jennifer Olsen
Managing Editor: Laura Huber
Feature Editor: Curt Feldman
Production Editor: Olga Zundel
Product Review Editor: Tor Berg
Art Director: Audrey Welch
Editor-At-Large: Chris Hecker
Contributing Editors: Daniel Huebner, Jeff Lander, Tito Pagan
Advisory Board: Hal Barwood (LucasArts), Ellen Guon Beeman (Beemania), Andy Gavin (Naughty Dog), Joby Otero (Luxoflux), Dave Pottinger (Ensemble Studios), George Sanger (Big Fat Inc.)

GAME PLAN: LETTER FROM THE EDITOR

Brave Small World

Mobile gaming: the new frontier. Or is it? What do game developers know about these emerging platforms, and what do hardware manufacturers and service carriers for mobile devices know about games, both those who make them and those who play them?

Entering the mobile gaming arena is becoming increasingly attractive to game developers at companies of all sizes and […] games on such a restrictive platform. A fresh approach to game design is the only hope for groundbreaking success on mobile devices and winning support from the most casual of game players, à la the TETRIS revolution of the late 1980s. Someone has to help lead the way.

There have been to date at this early stage in the life of mobile gaming more promises made than promises kept. Everybody seems to have a differing opinion about which revenue models are sustain-

Supplement to the Daily Mountain Eagle | Sunday, Dec. 24, 2017

Letters to Santa. Supplement to the Daily Mountain Eagle | Sunday, Dec. 24, 2017

A2, LETTERS TO SANTA. Jasper, Ala., Sun., Dec. 24, 2017. www.mountaineagle.com

Arc of Walker County Reach Pre-K

Dear Santa, My name is Tucker. I am 4 years old. I attend Reach Pre-K at the Arc of Walker County. For Christmas I would like toys, drum set, spiderman. Love, Tucker

My name is Brantly. I am 4 years old. I attend Reach Pre-K at the Arc of Walker County. For Christmas I would like a hot wheels garage, puppydog Pal legos, a hatchimal, and a potty that sprays water. Love, Brantly

Dear Santa, My name is Claire. I am 4 years old. I attend Reach

for you. Oh, and carrots for your reindeer. Here is my Christmas Wish List: 1. R.C. Trucks 2. nife 3. Santa Costumes 4. Dirt toys 5. Simo Games 6. forwheeler. Thank you for reading my letter. Merry Christmas, Santa! I love you, Skyler

I love you Santa. Love, Maggie Dutton, Jasper, Ala.

To Santa, Nintedo Switch Red and Blue Controllers. Tough Talkers Battle Pack. WWE ZK18 XBox 1.

This year could I please have, 1. Mario Kart 8 Deluxe for the Nintendo Switch 2. Lego Nexo Knights the Stone Colossus 3. Mario + Rabbids Kingdom Battle for the Nintendo Switch. Sincerely, Ben Harrison, Jasper, Ala.

Nerf guns, Nerf ammo. Landon Shane Roberts, 11 years, Jasper, Ala.

Dear Santa, Merry Chrismas, if you will plz get me these items. Car's 3, electrick scooter,