How to Calculate Object Detail Level Based on Field of View and Camera Resolution

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Estimation and Correction of the Distortion in Forensic Image Due to Rotation of the Photo Camera

Master Thesis, Electrical Engineering with emphasis on Signal Processing, February 2018. Estimation and Correction of the Distortion in Forensic Image due to Rotation of the Photo Camera. Sathwika Bavikadi, Venkata Bharath Botta. Department of Applied Signal Processing, Blekinge Institute of Technology, SE–371 79 Karlskrona, Sweden. This thesis is submitted to the Department of Applied Signal Processing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering with Emphasis on Signal Processing. Contact Information: Author(s): Sathwika Bavikadi, Venkata Bharath Botta. Supervisor: Irina Gertsovich. University Examiner: Dr. Sven Johansson. Internet: www.bth.se Phone: +46 455 38 50 00 Fax: +46 455 38 50 57. Abstract: Images, unlike text, represent an effective and natural communication medium for humans, due to their immediacy and the ease of understanding the image content. Shape recognition and pattern recognition are among the most important tasks in image processing. Crime scene photographs should always be in focus and a ruler should always be present; this allows the investigators to resize the image to accurately reconstruct the scene. Therefore, the camera must be on a grounded platform such as a tripod. Due to the rotation of the camera around the camera center, distortion exists in the image, which must be minimized. -

Depth of Field in Photography

DEPTH OF FIELD IN PHOTOGRAPHY. Handout for Photography Students. N. David King, Instructor. WHAT IS DEPTH OF FIELD? Photographers generally have to deal with one of two main optical issues for any given photograph: Motion (relative to the film plane) and Depth of Field. This handout is about Depth of Field. But what is it? Depth of Field is a major compositional tool used by photographers to direct attention to specific areas of a print or, at the other extreme, to allow the viewer’s eye to travel in focus over the entire print’s surface, as it appears to do in reality. Here are two example images. Depth of Field Examples: Shallow Depth of Field (using wide aperture and close focal distance); Deep Depth of Field (using small aperture and greater focal distance). Depth of Field in Photography: Student Handout © N. David King 2004, Rev 2010. SURPRISE! IT’S ALL AN ILLUSION. The first image (the garden flowers on the left) was shot with a wide aperture and is focused on the flower closest to the viewer. The second image (on the right) was shot with a smaller aperture and is focused on a yellow flower near the rear of that group of flowers. Though it looks as if we are really increasing the area that is in focus from the first image to the second, that apparent increase is actually an optical illusion. In the second image there is still only one plane where the lens is critically focused. -

The Trade-Off Between Image Resolution and Field of View: the Influence of Lens Selection

The Trade-off between Image Resolution and Field of View: the Influence of Lens Selection “I want a lens that can cover the whole parking lot and I want to be able to read a license plate.” Sound familiar? As a manufacturer of wide angle lenses, Theia Technologies is frequently asked if we have a product that allows the user to do both of these things simultaneously. And the answer is ‘it depends’. It depends on several variables - the resolution you start with from the camera, how far away the subject is from the lens, and the field of view of the lens. But keeping the first two variables constant, the impact of the lens field of view becomes clear. One of the important factors to consider when designing video surveillance installations is the trade-off between lens field of view and image resolution. Image Resolution versus Field of View One important, but often neglected consideration in video surveillance systems design is the trade-off between image resolution and field of view. With any given combination of camera and lens the native resolution from the camera is spread over the entire field of view of the lens, determining pixel density and image resolution. The wider the resolution is spread, the lower the pixel density, the lower the image resolution or image detail. The images below, taken with the same camera from the same distance away, illustrate this trade-off. The widest field of view allows you to cover the widest area but does not allow you to see high detail, while the narrowest field of view permits capture of high detail at the expense of wide area coverage. -
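The trade-off described in this excerpt can be put into numbers with a small sketch. It assumes a simple rectilinear (pinhole-style) lens model in which the scene width covered at a given distance is 2·d·tan(HFOV/2); the function names and the example camera values are illustrative and do not come from the excerpt.

```python
import math

def scene_width(distance_m, hfov_deg):
    """Width of the scene (in metres) covered at distance_m by a lens with
    horizontal field of view hfov_deg, under a simple rectilinear model."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)

def pixel_density(horizontal_pixels, distance_m, hfov_deg):
    """Pixels per metre on the subject: the camera's horizontal pixel count
    spread evenly across the covered scene width."""
    return horizontal_pixels / scene_width(distance_m, hfov_deg)

# Same hypothetical camera (1920 px wide) and same distance (10 m),
# two different lenses.
wide = pixel_density(1920, 10.0, 90.0)    # wide-angle lens
narrow = pixel_density(1920, 10.0, 30.0)  # narrower lens
print(f"90 deg FOV: {wide:.1f} px/m, 30 deg FOV: {narrow:.1f} px/m")
```

With camera resolution and distance held constant, narrowing the field of view is the only thing that raises the pixel density on the subject, which is the point the excerpt makes about lens selection.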

A Guide to Smartphone Astrophotography National Aeronautics and Space Administration

A Guide to Smartphone Astrophotography. National Aeronautics and Space Administration. Dr. Sten Odenwald, NASA Space Science Education Consortium, Goddard Space Flight Center, Greenbelt, Maryland. Cover designs and editing by Abbey Interrante. Cover illustrations: Front: Aurora (Elizabeth Macdonald), moon (Spencer Collins), star trails (Donald Noor), Orion nebula (Christian Harris), solar eclipse (Christopher Jones), Milky Way (Shun-Chia Yang), satellite streaks (Stanislav Kaniansky), sunspot (Michael Seeboerger-Weichselbaum), sun dogs (Billy Heather). Back: Milky Way (Gabriel Clark). Two front cover designs are provided with this book. To conserve toner, begin document printing with the second cover. This product is supported by NASA under cooperative agreement number NNH15ZDA004C. Table of Contents: Introduction; How to use this book; 1.0 Light Pollution; 2.0 Cameras -

Notes on View Camera Geometry∗

Notes on View Camera Geometry. Robert E. Wheeler. May 8, 2003. © 1997-2001 by Robert E. Wheeler, all rights reserved. Contents: 1 Desargues’s Theorem; 2 The Gaussian Lens Equation; 3 Thick lenses; 4 Pivot Points; 5 Determining the lens tilt (5.1 Using distances and angles; 5.2 Using back focus; 5.3 Wheeler’s rules; 5.4 Lens Movement; 5.5 Back Tilts); 6 Depth of field for parallel planes (6.1 Near DOF limit; 6.2 Far DOF limit; 6.3 DOF; 6.4 Circles of confusion; 6.5 DOF and format; 6.6 The DOF equation; 6.7 Hyperfocal distance; 6.8 Approximations; 6.9 Focus given near and far limits; 6.9.1 Object distances; 6.9.2 Image distances); 7 Depth of field, depth of focus; 8 Fuzzy images; 9 Effects of diffraction on DOF (9.1 Theory; 9.2 Data; 9.3 Resolution; 9.4 Format considerations; 9.5 Minimum aperture; 9.6 Theoretical curves); 10 Depth of field for a tilted lens (10.1 Near and far DOF equations; 10.2 Near and far DOF equations in terms of ρ -

Tutorials: Depth of Field

TUTORIALS: DEPTH OF FIELD Ed. Note: Information presented by Steve Fowler – 10/15/14 Camera Club Meeting Depth of field refers to the range of distance that appears acceptably sharp. It varies depending on camera type, aperture and focusing distance, although print size and viewing distance can also influence our perception of depth of field. This tutorial is designed to give a better intuitive and technical understanding for photography, and provides a depth of field calculator to show how it varies with your camera settings. The depth of field does not abruptly change from sharp to unsharp, but instead occurs as a gradual transition. In fact, everything immediately in front of or in back of the focusing distance begins to lose sharpness — even if this is not perceived by our eyes or by the resolution of the camera. CIRCLE OF CONFUSION Since there is no critical point of transition, a more rigorous term called the "circle of confusion" is used to define how much a point needs to be blurred in order to be perceived as unsharp. When the circle of confusion becomes perceptible to our eyes, this region is said to be outside the depth of field and thus no longer "acceptably sharp." The circle of confusion above has been exaggerated for clarity; in reality this would be only a tiny fraction of the camera sensor's area. When does the circle of confusion become perceptible to our eyes? An acceptably sharp circle of confusion is loosely defined as one which would go unnoticed when enlarged to a standard 8x10 inch print, and observed from a standard viewing distance of about 1 foot. -

Choosing a Lens of the Right Focal Length That You Will Get the Appropriate Angle of View

How to choose a camera lens Understanding the technology SUMMARY When you buy a camera, make sure the lens is suited for the intended purpose. With the right lens, you can greatly enhance the results you achieve in a particular type of photography and, by having a variety of lenses, you can greatly extend the usefulness of your camera. This paper provides a basic introduction to the principles of camera lenses. It explains the terminology: what is meant by focal length and f/number? Choose the right lens and taking good photographs becomes so much easier! The Basics The most important component of your camera is the lens. It’s no good having a camera with an impressively large number of megapixels if the lens is not up to the job. A lens has two basic properties: Focal length This determines the field of view of the lens. To match human vision, you’d need a lens with a focal length of about 35mm (in photography, focal length is always measured in millimetres). The normal, or standard, focal length for a camera lens is 50mm - anything smaller is called wide-angle, anything greater is called telephoto. A camera lens offering a range of focal lengths is called a zoom lens; one of fixed focal length is called a prime lens. f/number The ratio of the focal length of the lens (f) to its effective diameter: the size of its ‘window’ on the outside world , or aperture. The f/number is a measure of the light gathering power of the lens (called its speed). -
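The f/number definition in this excerpt (focal length divided by effective aperture diameter) can be illustrated with a minimal sketch. The function names and lens values are hypothetical; the light comparison relies on the standard assumption that the light gathered scales with aperture area, and therefore with the inverse square of the f/number.

```python
def f_number(focal_length_mm, aperture_diameter_mm):
    """f/number as defined in the excerpt: focal length over effective diameter."""
    return focal_length_mm / aperture_diameter_mm

def relative_light(n_fast, n_slow):
    """How many times more light a lens at f/n_fast gathers than one at f/n_slow,
    assuming light scales with aperture area (inverse square of the f/number)."""
    return (n_slow / n_fast) ** 2

# A hypothetical 50 mm lens with a 25 mm effective aperture is an f/2 lens.
print(f_number(50.0, 25.0))
# An f/1.4 lens compared with an f/2.8 lens of the same focal length.
print(relative_light(1.4, 2.8))
```

A smaller f/number therefore means a "faster" lens in the sense the excerpt uses: more light reaches the sensor in the same exposure time.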

A Quick Guide to Prime Lenses

A Quick Guide to Prime Lenses PUBLISHED - 29 SEP 2016 What is a prime lens? Well, in terms of what it's not, it's a lens that isn't a zoom – rather than have a range of focal lengths, it has only one. But in terms of what it is, it's far more than simply a fixed focal length lens. So what are the benefits of using a prime lens? Get yourself comfortable, because there's a lot to get through… Smaller, lighter, more compact Prime lenses are often smaller and more compact than zooms, and tend to be faster, offering wide apertures of f/2.8, f/1.8 or even f/1.4. These large apertures let in more light, which benefits the photographer in a number of ways. First, you can use fast shutter speeds with large apertures to freeze motion. This becomes important when you're shooting in low light. By using a high ISO and a wide aperture, you'll be able to use high enough shutter speeds so you can hand-hold the camera. Secondly, because fast lenses let in lots of light, the viewfinder will be brighter, and so it will be easier to confirm focus, ensuring your images will be sharp. Also, as primes are often simpler in construction than zooms, they are not only often slightly less expensive to make, but they also tend to have fewer visual aberrations such as colour fringing and image distortion, giving you a more technically correct image than a zoom can at the extremes of its focal range. Another popular benefit of a prime lens is the bokeh it will achieve, especially for portraits. -

PRECISE COLOR COMMUNICATION COLOR CONTROL from PERCEPTION to INSTRUMENTATION Knowing Color

PRECISE COLOR COMMUNICATION: COLOR CONTROL FROM PERCEPTION TO INSTRUMENTATION. Knowing color. Knowing by color. In any environment, color attracts attention. An infinite number of colors surround us in our everyday lives. We all take color pretty much for granted, but it has a wide range of roles in our daily lives: not only does it influence our tastes in food and other purchases, the color of a person’s face can also tell us about that person’s health. Even though colors affect us so much and their importance continues to grow, our knowledge of color and its control is often insufficient, leading to a variety of problems in deciding product color or in business transactions involving color. Since judgement is often performed according to a person’s impression or experience, it is impossible for everyone to visually control color accurately using common, uniform standards. Is there a way in which we can express a given color* accurately, describe that color to another person, and have that person correctly reproduce the color we perceive? How can color communication between all fields of industry and study be performed smoothly? Clearly, we need more information and knowledge about color. *In this booklet, color will be used as referring to the color of an object. Contents, PART I: Why does an apple look red? Human beings can perceive specific wavelengths as colors. What color is this apple? Two red balls. How would you describe the differences between their colors to someone? Hue. Lightness. Saturation. The world of color is a mixture of these three attributes. -

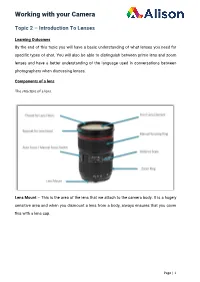

Working with Your Camera

Working with your Camera. Topic 2 – Introduction To Lenses. Learning Outcomes: By the end of this topic you will have a basic understanding of what lenses you need for specific types of shot. You will also be able to distinguish between prime lenses and zoom lenses and have a better understanding of the language used in conversations between photographers when discussing lenses. Components of a lens: The structure of a lens. Lens Mount – This is the area of the lens that we attach to the camera body. It is a hugely sensitive area, and when you dismount a lens from a body, always ensure that you cover it with a lens cap. Zoom Ring – This applies to lenses that have a range of focal lengths. The zoom ring adjusts the focal length of the lens, allowing you to move closer to or further away from your subject. Distance Scale – The distance scale is simply an accurate reading of what your zoom ring is doing. It will tell you what focal length you are at if you are unsure. Autofocus/Manual Focus Switch – This is simply a switch which allows you to shoot your subject using autofocus or gives you the opportunity to control focus manually. Manual Focusing Ring – This ring will help you to adjust the focus of your subject, but only if you are using the manual focus mode. On a zoom lens, this ring will adjust the focus at whatever focal length you are zoomed into. Bayonet For Lens Hood – This is simply the opposite to the lens cap. -

Depth of Field & Circle of Confusion

Advanced Photography. Topic 6 - Optics – Depth of Field and Circle of Confusion. Learning Outcomes: In this lesson, we will learn all about depth of field and a concept known as the Circle of Confusion. By the end of this lesson, you will have a much better understanding of what each of these terms means and how they impact your photography. Depth of Field: Depth of field refers to the range of distance that appears acceptably sharp. It varies depending on camera type, aperture and focusing distance. The depth of field isn’t something that abruptly changes from sharp to unsharp/soft. It happens as a gradual transition. Everything immediately in front of or behind the focusing distance begins to lose sharpness, even if this is not perceived by our eyes or by the resolution of the camera. Circle of Confusion (CoC): Because there is no obvious point at which the transition from sharp to unsharp occurs, the term "circle of confusion" is used to define how much a point needs to be blurred in order to be perceived as unsharp. When the circle of confusion becomes perceptible to our eyes, this region is said to be outside the depth of field and thus no longer "acceptably sharp." The circle of confusion in this example has been exaggerated for clarity; in reality this would only be a tiny portion of the camera sensor's area. When does this circle of confusion become perceptible to our eyes? Well, an acceptably sharp circle of confusion is loosely defined as one which would go unnoticed when enlarged to a standard 8x10 inch print, and observed from a standard viewing distance of about 1 foot. -

The Camera Lens Focal Length

The Camera Lens. Focal Length: Is a measure of the power of a lens to bend light rays coming from the subject. The shorter the focal length, the greater the bending power and the closer the focal plane is to the rear of the lens (a longer lens has the opposite effect). Literally, the focal length of a lens is the distance from the optical center of the lens to the focal plane when the lens is focused on infinity. Perspective: Zoom vs. Dolly: If the camera is moved closer to a subject, the relative sizes of the foreground and background objects increase at different rates. Conversely, when you zoom (increase the focal length), the background and the foreground both increase at a rate directly proportional to the lens focal length. Different Lens Types change the angle of view. Lens types: Normal, Wide & Telephoto: A normal lens is a lens of medium focal length that yields an image with natural perspective. A wide angle lens is about half the focal length of a normal lens. Lenses about 150 percent longer than the normal focal length are considered telephoto. Wide Angle Lens Effects: Can show more image area (make a room look larger, or show more of it). Shooting down with a wide angle lens exaggerates depth. A head and shoulder close-up with a wide angle lens distorts facial features. If someone is standing on the edge of a wide angle lens, it makes them look wider. If you are 5 feet or more from a subject using a wide angle lens, then you do not have to worry about facial distortion. If someone runs from the background to the foreground on the edge of the frame, they will look like they are running faster. Distortion of Focal Lengths. Telephoto Lenses: Shallow depth of field. 2-dimensional looking image. Increases image size. Camera shake. Prime Lens vs.
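The zoom-versus-dolly comparison in this excerpt can be checked with a rough sketch. It assumes the simple pinhole approximation in which the projected size of a distant object is focal length × object height ÷ distance; the function name and all the distances below are illustrative and are not taken from the excerpt.

```python
def image_height_mm(focal_length_mm, object_height_m, distance_m):
    """Projected height on the sensor under a pinhole approximation
    (valid when the subject is far away compared with the focal length)."""
    return focal_length_mm * object_height_m / distance_m

HEIGHT = 1.8  # hypothetical object height in metres, same for both objects

# Dolly: keep a 50 mm lens and move the camera 2 m closer.
# Foreground goes from 4 m to 2 m away, background from 20 m to 18 m.
fg_dolly = image_height_mm(50, HEIGHT, 2) / image_height_mm(50, HEIGHT, 4)
bg_dolly = image_height_mm(50, HEIGHT, 18) / image_height_mm(50, HEIGHT, 20)

# Zoom: stay put and change the focal length from 50 mm to 100 mm.
fg_zoom = image_height_mm(100, HEIGHT, 4) / image_height_mm(50, HEIGHT, 4)
bg_zoom = image_height_mm(100, HEIGHT, 20) / image_height_mm(50, HEIGHT, 20)

print(f"dolly: foreground x{fg_dolly:.2f}, background x{bg_dolly:.2f}")
print(f"zoom:  foreground x{fg_zoom:.2f}, background x{bg_zoom:.2f}")
```

Under these assumptions the dolly move enlarges the foreground far more than the background, while the zoom scales both by the same factor, which matches the distinction the excerpt draws.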