Practical Approach to Developing an Automation Testing Tool

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Openbsd Gaming Resource

OPENBSD GAMING RESOURCE A continually updated resource for playing video games on OpenBSD. Mr. Satterly Updated August 7, 2021 P11U17A3B8 III Title: OpenBSD Gaming Resource Author: Mr. Satterly Publisher: Mr. Satterly Date: Updated August 7, 2021 Copyright: Creative Commons Zero 1.0 Universal Email: [email protected] Website: https://MrSatterly.com/ Contents 1 Introduction1 2 Ways to play the games2 2.1 Base system........................ 2 2.2 Ports/Editors........................ 3 2.3 Ports/Emulators...................... 3 Arcade emulation..................... 4 Computer emulation................... 4 Game console emulation................. 4 Operating system emulation .............. 7 2.4 Ports/Games........................ 8 Game engines....................... 8 Interactive fiction..................... 9 2.5 Ports/Math......................... 10 2.6 Ports/Net.......................... 10 2.7 Ports/Shells ........................ 12 2.8 Ports/WWW ........................ 12 3 Notable games 14 3.1 Free games ........................ 14 A-I.............................. 14 J-R.............................. 22 S-Z.............................. 26 3.2 Non-free games...................... 31 4 Getting the games 33 4.1 Games............................ 33 5 Former ways to play games 37 6 What next? 38 Appendices 39 A Clones, models, and variants 39 Index 51 IV 1 Introduction I use this document to help organize my thoughts, files, and links on how to play games on OpenBSD. It helps me to remember what I have gone through while finding new games. The biggest reason to read or at least skim this document is because how can you search for something you do not know exists? I will show you ways to play games, what free and non-free games are available, and give links to help you get started on downloading them. -

Edmonds Community College 2004-2006 Edmonds Community

capturing the future capturing capturing the future EDMONDS COMMUNITY COLLEGE 2004-2006 Edmonds Community College edmonds community college 20000 68th Ave W • Lynnwood, WA 98036 (425) 640-1459 • www.edcc.edu Getting Started Campus Map ENROLLMENT SERVICES Lynnwood Hall, First Floor http://getstarted.edcc.edu [email protected] • (425) 640-1459 What Program’s Right for You? Decisions, decisions...we provide information to help you choose the degree, certificate or classes that best meet your goals. We offer advising for new students, call (425) 640-1458. Use computers at the Advising Resource Center to research career and educational programs, as well as apply and register for classes online. Apply for Admission Complete an admission form online or pick one up at Enrollment Services. You’ll also need to pay the one-time admissions fee. After submitting the form, you should receive information from the college on assessment and registration within two business days. Math and English Placement Determine if, and when, you need to take the Accuplacer Assessment. If you are seeking a degree or a certificate – or if you are taking math or English classes – you must take the Accuplacer to assess your writing, reading and math skills. Test from 9 a.m.-6 p.m., Monday-Thursday, and 9 a.m.-3 p.m. Fridays in Enrollment Services. No appointment necessary. Advising and Campus Orientation Before you meet with an adviser, get a program requirement sheet online or from Enrollment Services. Advisers will help you plan your education and select the classes you need for a degree or certificate. -

Edmonds Community College Edmonds

Edmonds Community College 2008-2010 Edmonds Community College Edmonds Community College www.edcc.edu 2008-2010 20000 68th Ave W Lynnwood, WA 98036 (425) 640-1459 Looking for a high-quality Edmonds Community College Campus college? Start here. We want you to be our student! • Begin your bachelor’s degree. • Develop job skills for a new career. • Learn from experienced, caring instructors in small classes. Find the Program Go to Enrollment Services That’s Right for You In person: Lynnwood Hall, First Floor • Online: http://getstarted.edcc.edu Each year 10,500 students take courses for credit toward a certificate Step 1: Apply for admission or degree at Edmonds Community Complete an admission form online or pick one up at Enrollment Services. You’ll College. More than 50 percent seek also need to pay the one-time admissions fee (non-refundable). After submitting transfer degrees for bachelor’s the form, you should receive information from the college on assessment and programs, 29 percent seek degrees registration within two business days. You’ll receive a student ID number, too. that lead directly to jobs, and others take continuing education classes for personal enrichment and workplace Step 2: Find out what English and math classes you’ll need skills. See Chapter 2 for information Determine if you need to take the Accuplacer assessment. If you have not had to help you choose the degree, previous college-level math or English and are seeking a degree or certificate – certificate or classes that best meet or if you are taking math or English classes – take the Accuplacer assessment to your goals. -

Website Listing Ajax

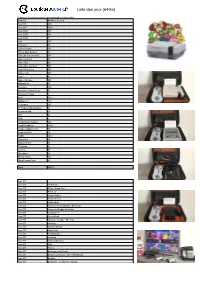

Liste des jeux (64Go) Cliquez sur le nom des consoles pour descendre au bon endroit Console Nombre de jeux Atari ST 274 Atari 800 5627 Atari 2600 457 Atari 5200 101 Atari 7800 51 C64 150 Channel F 34 Coleco Vision 151 Family Disk System 43 FBA Libretro (arcade) 647 Game & watch 58 Game Boy 621 Game Boy Advance 951 Game Boy Color 501 Game gear 277 Lynx 84 Mame (arcade) 808 Nintento 64 78 Neo-Geo 152 Neo-Geo Pocket Color 81 Neo-Geo Pocket 9 NES 1812 Odyssey 2 125 Pc Engine 291 Pc Engine Supergraphx 97 Pokémon Mini 26 PS1 54 PSP 2 Sega Master System 288 Sega Megadrive 1030 Sega megadrive 32x 30 Sega sg-1000 59 SNES 1461 Stellaview 66 Sufami Turbo 15 Thomson 82 Vectrex 75 Virtualboy 24 Wonderswan 102 WonderswanColor 83 Total 16877 Atari ST Atari ST 10th Frame Atari ST 500cc Grand Prix Atari ST 5th Gear Atari ST Action Fighter Atari ST Action Service Atari ST Addictaball Atari ST Advanced Fruit Machine Simulator Atari ST Advanced Rugby Simulator Atari ST Afterburner Atari ST Alien World Atari ST Alternate Reality - The City Atari ST Anarchy Atari ST Another World Atari ST Apprentice Atari ST Archipelagos Atari ST Arcticfox Atari ST Artificial Dreams Atari ST Atax Atari ST Atomix Atari ST Backgammon Royale Atari ST Balance of Power - The 1990 Edition Atari ST Ballistix Atari ST Barbarian : Le Guerrier Absolu Atari ST Battle Chess Atari ST Battle Probe Atari ST Battlehawks 1942 Atari ST Beach Volley Atari ST Beastlord Atari ST Beyond the Ice Palace Atari ST Black Tiger Atari ST Blasteroids Atari ST Blazing Thunder Atari ST Blood Money Atari ST BMX Simulator Atari ST Bob Winner Atari ST Bomb Jack Atari ST Bumpy Atari ST Burger Man Atari ST Captain Fizz Meets the Blaster-Trons Atari ST Carrier Command Atari ST Cartoon Capers Atari ST Catch 23 Atari ST Championship Baseball Atari ST Championship Cricket Atari ST Championship Wrestling Atari ST Chase H.Q. -

C Ou Rse D E Sc Rip Tio Ns

48 Edmonds Community College 2008-10 • www.edcc.edu Chapter 6... Course Descriptions From accounting to writing The classes offered at Edmonds Community College are listed from page 48 to 120. The college may add classes for new programs or to update current programs. See http://courses.edcc.edu or the quarterly class schedule publication for the most up-to-date course numbers, listings and descriptions. Dual Listed Course Financial Accounting II Assistance (VITA) comprehensive exam ACCT 202, 5 Credits included in cost of course. S/U grade A dual listed course is one of two courses Examine a number of complex only. Prerequisite: Recommend ACCT 214 that have the same title and content, but accounting topics involving assets, or equivalent or instructor permission. use different department abbreviations. liabilities, and equity accounts. Includes Concurrent enrollment in BUS 291 One or the other may be taken for study of bonds, financial statement required if using course to satisfy credit, not both. The student must make and analysis, stocks, and statement of internship requirement. the choice at the time of registration. cash flows. Prerequisite: ACCT 201 with Example: DIVST 120 Survey of Visual Art- grade of 2.0 and EAP 121 or BRDGE 091 PC Accounting Applications CD or ART 120 Survey of Visual Art-CD. placement. ACCT 216, 5 Credits Prepares students to interact with Accounting Managerial Accounting computerized accounting systems. Major ACCT 203, 5 Credits concepts of computerized accounting (425) 640-1636 will be introduced including trial http://acct.edcc.edu Conceptual understanding of managerial and cost accounting concepts applicable balance maintenance and financial Accounting Fundamentals to all forms of businesses. -

Office of the Graduate School

Office of the Graduate School The Office of the Graduate School, 201 Administration Building, was created to serve UTEP graduate students and to respond to graduate student needs and issues. We encourage you to visit the office and meet the professionals who will be assisting you through your degree or personal program. Our office hours are 8-5 daily, extending to 7 p.m. on Monday and Tuesday, during the Fall and Spring semesters; our telephone number is (915) 747-5491. We are pleased to respond to e-mail and can be contacted at [email protected], or access our website at www.utep.edu/graduate. Among the many and varied areas of responsibility within the Office are: • Graduate admissions • Graduate recruitment • Graduate orientation • Maintenance of academic files of graduate students • Preliminary Degree Plans and Final Programs of Study • Academic status communication • Graduate assistantships • Application for graduation We look forward to serving you. REFERENCE TELEPHONE NUMBERS Graduate School (915) 747-5491 Registration and Records (915) 747-5550 Financial Services (915) 747-5806 Financial Aid (915) 747-5204 Office of International Programs (915) 747-5664 Housing Services (915) 747-5352 Dean of Students (915) 747-5648 THE UNIVERSITY OF TEXAS AT EL PASO 2002-2004 GRADUATE CATALOG Welcome to UTEP 5 The Graduate School 15 Academic Regulations 29 Financial Information 43 Student Life Policies and Procedures 69 Facilities and Student Services 79 Colleges and Degree Programs 101 College of Business Administration 103 College of Education -

Theoretical Science Group

Theoretical Science Group 理論科学グループ 部報 198 号 | 新入生歓迎号 | 目 次 新入生自己紹介 ::::::::::::::::::::::::::::::::::::: 1 C++ 言語入門 第 1 回 ::::::::::::::::::::::::::::〔わたる〕14 初心者のための 8086 講座 ::::::::::::::::::::::::::: 〔高野〕34 文字コードの話 ::::::::::::::::::::::::::::: 〔早坂くりす〕43 TSG 用語の基礎知識 Ver. 1.0 ::::::::::::::::::::::::〔ZERO〕55 新入生自己紹介 新入生自己紹介 坂本 崇裕 坂東大五郎 丹下 吉雄 西 元 宮崎 大輔 菅原 豊 丸山 勲 時田 忠昭 鶴見 雅弘 馬本 英樹 小林 徹也 保原 麗 黒川 秀樹 長野 誠 岡崎哲也 内仲 俊輔 寺園 泰 TSG 部報 No. 199 1 新入生自己紹介 ¶ ³ 坂本 崇裕 ´ ・名前 坂本 崇裕 ・ハンドルネーム すーゆー 日本語が使えるとき TYM 日本語が使えないとき ・保有機種 PC-9821Xa13/K12 MT-8617ES BJC-400J MC288XL II PC-9801-118/PC-9821C3-B02 ■ 自己紹介など パソコンは中学 2 年から友人に勧められて使い始めました。その時のマシンは PC{ 9801DA21)でした。そして、友人達 10 人ほどの間で「My くろフロッP」なるパソコン サークルを結成し、初心者から上級者までが集まり、自主的に開発したプログラムの公 開や意見交換が行われていました。現在、受験期間ということで休止していたこのサー クルを「My くろフロッP・R」としてその活動を再開しようかどうか検討中です。 それとは別に学校のパソコン同好会 (NPCA)(現「電腦科學研究部」) に高校 1 年から 入部し、その活動を見てるだけ~(副会長だろが) でした。また、去年から、「INFINITY- SERVE」(078-435-2263) なる BBS をこのクラブで開局&運営しています。現在では神 戸では有数のあやしげな CG のたまり場となってしまいました。いつまで続くか分から ない BBS ですが、興味のある方はアクセスしてみてください。 そ~ゆ~わけで、どんなわけで~ (^_^;) 現在の私は、あまりパソコンそのものに関する活動はあまりしておらず、パソコンを a 使って何かをする (LTEX でレポートを書く 等など) 程度です。つまり、「作る」立場か ら「使う」立場に変わってしまったわけです。昔は、C/C++, 86ASM, QuickBASIC な んかを使えていたような気がしますが、今はどこまでできるやら>謎<です。 あっ、そうそう。通信に関しては始めてまだ 1ヶ月も経っていない筋金入りの初心者 です。また、今まで MS 系の OS に毒されてきたので、ECC の UNIX では戸惑うこと ばかりです。そこいらあたりのどこぞのところを教えてくださるとうれしいです。理科 1 類 19 組 (ドイツ語) にいますので、声をかけてください。 ZOB Station BBS zob19155 すーゆー いぬ。BBS INU00090 すーゆー INFINITY-SERVE INF00028 すーゆー ECC g640770 Takahiro SAKAMOTO 1)改造するにはもってこいのマシンです 2 TSG 部報 No. 199 新入生自己紹介 ¶ ³ 坂東大五郎 ´ クラス: 1 年 SI-20 -

FUJIWARA-DISSERTATION-2015.Pdf

Copyright by Luis Mario Fujiwara 2015 The Dissertation Committee for Luis Mario Fujiwara Certifies that this is the approved version of the following dissertation: THE ROLE OF THE PASTORAL DA CRIANÇA PROGRAM IN THE INFANT MORTALITY TRANSITION IN BRAZIL, 1980-2000 Committee: Chandler Stolp, Supervisor Joseph Potter, Co-Supervisor Bryan Roberts Robert Wilson Patrick Wong THE ROLE OF THE PASTORAL DA CRIANÇA PROGRAM IN THE INFANT MORTALITY TRANSITION IN BRAZIL, 1980-2000 by Luis Mario Fujiwara, BA, MA Dissertation Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY The University of Texas at Austin August 2015 Dedication I dedicate this dissertation to my son Francisco, In Memoriam, who despite his very young age was an absolutely inspiring example of courage and determination in his fighting for survival. I also would like to dedicate this work to my love Vanessa, my Rock of Gibraltar, which companion makes me, every day, a much better person. This dissertation is also dedicated to my father Roberto, In Memoriam, and my mother Marilene, who inspire on me the will to fight for a better, fair, and egalitarian world. I would also like to dedicate this dissertation to my grandmother Maria Magdalena, In Memorian, who taught me the meaning of unconditional love, thank you for always had believe on me, and stimulated me to pursuit all my childhood dreams. Finally, I would like to dedicate this work to all Pastoral’s volunteers and personnel, whose devotion to saving lives and promoting human rights is absolutely inspiring, and a concrete example that another world is possible. -

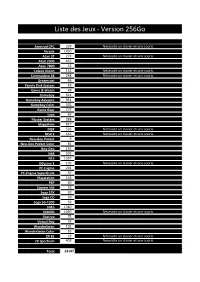

Liste Des Jeux - Version 256Go

Liste des Jeux - Version 256Go Amstrad CPC 297 Nécessite un clavier et une souris. Arcade 1430 Atari ST 273 Nécessite un clavier et une souris. Atari 2600 457 Atari 7800 52 Coleco Vision 151 Nécessite un clavier et une souris. Commodore 64 248 Nécessite un clavier et une souris. Dreamcast 66 Family Disk System 43 Game & Watch 48 Gameboy 621 Gameboy Advance 951 Gameboy Color 502 Game Gear 277 Lynx 84 Master System 288 Megadrive 817 MSX 526 Nécessite un clavier et une souris. MSX 2 146 Nécessite un clavier et une souris. Neo-Geo Pocket 9 Neo-Geo Pocket Color 81 Neo-Geo 152 N64 205 NES 1397 Odyssey 2 109 Nécessite un clavier et une souris. PC-Engine 291 PC-Engine SuperGrafx 97 Playstation 136 PSP 60 Scumm VM 33 Sega 32X 30 Sega CD 26 Sega SG-1000 59 SNES 1380 X68000 1090 Nécessite un clavier et une souris. Vectrex 75 Virtual Boy 24 WonderSwan 102 WonderSwan Color 83 ZX 81 23 Nécessite un clavier et une souris. ZX Spectrum 408 Nécessite un clavier et une souris. Total 13147 Amstrad CPC 1 180 Amstrad CPC 2 1942 Amstrad CPC 3 1943: The Battle of Midway Amstrad CPC 4 3D Boxing Amstrad CPC 5 3D Grand Prix Amstrad CPC 6 3D Starfighter Amstrad CPC 7 3D Stunt Rider Amstrad CPC 8 Ace 2: The Ultimate Head to Head Conflict Amstrad CPC 9 Ace of Aces Amstrad CPC 10 Advanced Pinball Simulator Amstrad CPC 11 Advanced Tactical Fighter Amstrad CPC 12 After Burner Amstrad CPC 13 After the War Amstrad CPC 14 Airwolf Amstrad CPC 15 Airwolf 2 Amstrad CPC 16 Alex Higgins World Pool Amstrad CPC 17 Alien 8 Amstrad CPC 18 Alien Highway - Encounter 2 Amstrad CPC